TensorFlow 2: Saving and Loading Models and Converting Them to TF Lite

1. Saving a Keras h5 model and its weights with callbacks.ModelCheckpoint

save_weights_only=True: save only the weights

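import os
from tensorflow import keras

# Path of the .h5 checkpoint file inside logdir
output_model_file = os.path.join(logdir, "fashion_mnist_weights.h5")

# Define the list of callbacks
callbacks = [
    keras.callbacks.TensorBoard(logdir),
    # save_best_only=True keeps only the best model seen during training;
    # note that a later evaluate() call still uses the model from the last epoch.
    # save_weights_only defaults to False (the model is saved together with its weights);
    # True saves only the weights.
    keras.callbacks.ModelCheckpoint(output_model_file, save_best_only=True, save_weights_only=True),
    keras.callbacks.EarlyStopping(patience=5, min_delta=1e-3),
]

# fit() trains the model
history = model.fit(x_train_scaled, y_train,
                    epochs=10,  # number of passes over the training set
                    validation_data=(x_valid_scaled, y_valid),  # validated at the end of each epoch
                    callbacks=callbacks)

The code above saves only the best weights found during training, not the model itself. A separate script therefore does not need to call fit: after building the model it only has to load the weights, as the following script shows.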
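As an aside on the callbacks used here, the difference between "the best model" kept by the checkpoint and "the last-epoch model" left in memory is easy to see in a small simulation. The sketch below is plain Python and only mirrors the documented behaviour of save_best_only and EarlyStopping when both monitor val_loss (their default); simulate_callbacks and the loss values are hypothetical and are not taken from the Keras source or from a real training run.

```python
def simulate_callbacks(val_losses, patience=5, min_delta=1e-3):
    """Return (checkpoint_epoch, last_epoch) for per-epoch validation losses.

    Hypothetical helper: it imitates save_best_only and EarlyStopping,
    it does not call Keras. Epochs are counted from 0.
    """
    ckpt_loss = float("inf")    # loss of the model currently in the checkpoint file
    ckpt_epoch = None
    es_best = float("inf")      # best loss tracked by early stopping
    wait = 0                    # epochs since the last significant improvement
    for epoch, loss in enumerate(val_losses):
        # Checkpoint: overwrite the file on any strict improvement.
        if loss < ckpt_loss:
            ckpt_loss, ckpt_epoch = loss, epoch
        # Early stopping: only an improvement larger than min_delta resets the counter.
        if loss < es_best - min_delta:
            es_best, wait = loss, 0
        else:
            wait += 1
            if wait >= patience:
                return ckpt_epoch, epoch   # training stops here
    return ckpt_epoch, len(val_losses) - 1


# Made-up validation losses for ten epochs
val_losses = [0.50, 0.40, 0.35, 0.3495, 0.36, 0.37, 0.38, 0.39, 0.40, 0.41]
best_epoch, last_epoch = simulate_callbacks(val_losses)
print(best_epoch, last_epoch)  # 3 7
```

In this run the checkpoint file holds the epoch-3 weights while the in-memory model is the one from epoch 7, which is why evaluating the in-memory model and evaluating a reloaded checkpoint can give different numbers.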

output_model_file = os.path.join(logdir, "fashion_mnist_weights.h5")  # the weights file saved earlier

'''
# Training is disabled in this script: the callbacks and fit() are commented out
callbacks = [
    keras.callbacks.TensorBoard(logdir),
    keras.callbacks.ModelCheckpoint(output_model_file, save_best_only=True, save_weights_only=True),
    keras.callbacks.EarlyStopping(patience=5, min_delta=1e-3),
]
history = model.fit(x_train_scaled, y_train,
                    epochs=10,
                    validation_data=(x_valid_scaled, y_valid),
                    callbacks=callbacks)
'''

# Load the saved weights into the freshly built model
model.load_weights(output_model_file)

save_weights_only=False: save the model together with its weights (default)

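When save_weights_only=False is passed to ModelCheckpoint, the whole model can be loaded with load_model:

# Load the saved model together with its weights; if the file holds only weights, this fails with a "No model" error
loaded_model = keras.models.load_model(output_model_file)

# The checkpoint holds the best model (ModelCheckpoint monitors val_loss by default),
# so the test result may differ from the one above, which used the last-epoch model
loaded_model.evaluate(x_test_scaled, y_test)

2. Converting a Keras model to SavedModel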

Saving as a SavedModel

tf.saved_model.save(model, './keras_saved_graph')  # write the SavedModel

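The saved_model_cli tool is used to inspect a model's input and output information. In its output:

- the tag-set is 'serve'; it identifies the MetaGraphDef and plays a role loosely similar to a version label
- SignatureDefs are the model signatures
- the first signature, __saved_model_init_op, is the model initialization
- the second signature, serving_default, carries the concrete model information: inputs / outputs / Method name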
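The commands in this section are written with the notebook `!` prefix. Outside a notebook, the same calls can be assembled in Python and handed to subprocess. The sketch below only builds the argument lists; saved_model_cli_command is a hypothetical helper, and the flags it emits (--dir, --all, --tag_set, --signature_def, --input_exprs) are the ones used in this post.

```python
import shlex


def saved_model_cli_command(action, model_dir, tag_set=None, signature_def=None,
                            input_exprs=None, show_all=False):
    """Build the argument list for a saved_model_cli call (hypothetical helper)."""
    args = ["saved_model_cli", action, "--dir", model_dir]
    if show_all:
        args.append("--all")
    if tag_set:
        args += ["--tag_set", tag_set]
    if signature_def:
        args += ["--signature_def", signature_def]
    if input_exprs:
        args += ["--input_exprs", input_exprs]
    return args


show_cmd = saved_model_cli_command("show", "./keras_saved_graph", show_all=True)
run_cmd = saved_model_cli_command(
    "run", "./keras_saved_graph",
    tag_set="serve",
    signature_def="serving_default",
    input_exprs="flatten_1_input=np.ones((2,28,28))",
)

# shlex.join quotes arguments the way a shell expects them
print(shlex.join(show_cmd))
print(shlex.join(run_cmd))
# To actually run a command: subprocess.run(show_cmd, check=True)
```

Passing a list to subprocess.run avoids shell quoting problems with the parentheses in the --input_exprs value.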

!saved_model_cli show --dir ./keras_saved_graph --all

MetaGraphDef with tag-set: 'serve' contains the following SignatureDefs:

signature_def['__saved_model_init_op']:
  The given SavedModel SignatureDef contains the following input(s):
  The given SavedModel SignatureDef contains the following output(s):
    outputs['__saved_model_init_op'] tensor_info:
        dtype: DT_INVALID
        shape: unknown_rank
        name: NoOp
  Method name is:

signature_def['serving_default']:
  The given SavedModel SignatureDef contains the following input(s):
    inputs['flatten_1_input'] tensor_info:
        dtype: DT_FLOAT
        shape: (-1, 28, 28)
        name: serving_default_flatten_1_input:0
  The given SavedModel SignatureDef contains the following output(s):
    outputs['dense_21'] tensor_info:
        dtype: DT_FLOAT
        shape: (-1, 10)
        name: StatefulPartitionedCall:0
  Method name is: tensorflow/serving/predict

Specifying tag_set and signature_def

!saved_model_cli show --dir ./keras_saved_graph --tag_set serve --signature_def serving_default

The given SavedModel SignatureDef contains the following input(s):
  inputs['flatten_1_input'] tensor_info:
      dtype: DT_FLOAT
      shape: (-1, 28, 28)
      name: serving_default_flatten_1_input:0
The given SavedModel SignatureDef contains the following output(s):
  outputs['dense_21'] tensor_info:
      dtype: DT_FLOAT
      shape: (-1, 10)
      name: StatefulPartitionedCall:0
Method name is: tensorflow/serving/predict

--input_exprs passes the inputs as Python expressions. Here we pass two 28*28 matrices whose entries are all 1.

!saved_model_cli run --dir ./keras_saved_graph --tag_set serve --signature_def serving_default --input_exprs 'flatten_1_input=np.ones((2,28,28))'

# Two 28*28 input matrices yield two probability distributions, each with ten values: the prediction over the ten classes

......

Result for output key dense_21:
[[1.6484389e-02 6.5989010e-02 6.6968925e-02 1.2968344e-02 7.1568429e-01
  3.8513019e-05 1.0842297e-01 2.8989429e-04 1.2914705e-02 2.3890496e-04]
 [1.6484389e-02 6.5989010e-02 6.6968925e-02 1.2968344e-02 7.1568429e-01
  3.8513019e-05 1.0842297e-01 2.8989429e-04 1.2914705e-02 2.3890496e-04]]

Loading a SavedModel

loaded_saved_model = tf.saved_model.load('./keras_saved_graph/')  # load the model from disk
print(list(loaded_saved_model.signatures.keys()))  # print all signature names

['serving_default']

# Use the signature name above to obtain a function handle, inference
inference = loaded_saved_model.signatures['serving_default']
print(inference)
print(inference.structured_outputs)  # shows dense_21, matching the saved_model_cli output above

{'dense_21': TensorSpec(shape=(None, 10), dtype=tf.float32, name='dense_21')}

# Use the inference handle to run a prediction

results = inference(tf.constant(x_test_scaled[0:1]))  # pass the first test image to the handle
print(results)  # the result is a dict
print(results['dense_21'])

{'dense_21': }

tf.Tensor(
[[1.2782556e-08 2.0183863e-05 1.2078361e-09 2.2799457e-08 1.7268558e-08
  8.7200322e-05 3.8458791e-07 6.5368288e-03 1.4606912e-06 9.9335396e-01]], shape=(1, 10), dtype=float32)

3. Converting a function with a signature to SavedModel

Define a function cube with an input signature

# input_signature constrains the types of the input arguments
@tf.function(input_signature=[tf.TensorSpec([None], tf.int32, name='x')])
def cube(z):
    return tf.pow(z, 3)

Calling get_concrete_function on cube produces a concrete function, cube_func_int32

# @tf.function: Python function -> graph
# get_concrete_function: add an input signature -> SavedModel
# get_concrete_function binds an input signature to the @tf.function above so that it can be exported as a SavedModel
cube_func_int32 = cube.get_concrete_function(tf.TensorSpec([None], tf.int32))
print(cube_func_int32)
print(cube_func_int32 is cube.get_concrete_function(tf.TensorSpec([5], tf.int32)))
print(cube_func_int32 is cube.get_concrete_function(tf.constant([1, 2, 3])))
cube_func_int32.graph

True
True

print(cube(tf.constant([1, 2, 3])))

tf.Tensor([ 1 8 27], shape=(3,), dtype=int32)
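Both `is` checks print True because cube declares a fixed input_signature: any argument compatible with the shape [None] and dtype int32 resolves to the same concrete function. The sketch below illustrates only the shape part of that compatibility rule in plain Python; is_compatible is a hypothetical helper written for this explanation, not TensorFlow's own matching code.

```python
def is_compatible(signature_shape, arg_shape):
    """Return True if arg_shape fits signature_shape; None matches any size.

    Hypothetical helper that mimics how a TensorSpec shape with unknown
    dimensions accepts concrete shapes of the same rank.
    """
    if len(signature_shape) != len(arg_shape):
        return False
    return all(s is None or s == a for s, a in zip(signature_shape, arg_shape))


signature = [None]  # the shape declared in cube's input_signature

print(is_compatible(signature, [5]))     # True: corresponds to TensorSpec([5], tf.int32)
print(is_compatible(signature, [3]))     # True: corresponds to tf.constant([1, 2, 3])
print(is_compatible(signature, [2, 3]))  # False: rank 2 does not fit a rank-1 signature
```

An argument that fails this kind of check (wrong rank, or a non-int32 dtype) would be rejected by cube rather than traced into a new graph.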

Create a tf.Module, assign cube to it as an attribute, and call tf.saved_model.save to write the SavedModel

# Save the function with its signature as a SavedModel
to_export = tf.Module()
to_export.cube = cube
tf.saved_model.save(to_export, './signature_to_saved_model')

INFO:tensorflow:Assets written to: ./signature_to_saved_model/assets

!saved_model_cli show --dir ./signature_to_saved_model --all

MetaGraphDef with tag-set: 'serve' contains the following SignatureDefs:

signature_def['__saved_model_init_op']:
  The given SavedModel SignatureDef contains the following input(s):
  The given SavedModel SignatureDef contains the following output(s):
    outputs['__saved_model_init_op'] tensor_info:
        dtype: DT_INVALID
        shape: unknown_rank
        name: NoOp
  Method name is:

signature_def['serving_default']:
  The given SavedModel SignatureDef contains the following input(s):
    inputs['x'] tensor_info:
        dtype: DT_INT32
        shape: (-1)
        name: serving_default_x:0
  The given SavedModel SignatureDef contains the following output(s):
    outputs['output_0'] tensor_info:
        dtype: DT_INT32
        shape: (-1)
        name: PartitionedCall:0
  Method name is: tensorflow/serving/predict

Load the model saved above and call its cube function

imported = tf.saved_model.load('./signature_to_saved_model')

imported.cube(tf.constant([2]))

4. Converting to a concrete function

Keras model ---> concrete_function

loaded_keras_model = keras.models.load_model('./graph_def_and_weights/fashion_mnist_weights.h5')
loaded_keras_model(np.ones((1, 28, 28, 1)))

run_model = tf.function(lambda x: loaded_keras_model(x))
keras_concrete_function = run_model.get_concrete_function(  # the input is declared as a single tensor
    tf.TensorSpec(loaded_keras_model.inputs[0].shape,
                  loaded_keras_model.inputs[0].dtype)
)
keras_concrete_function(tf.constant(np.ones((1, 28, 28, 1), dtype=np.float32)))

SavedModel ---> concrete_function

Every signature in a SavedModel is in fact a concrete function; a SavedModel is a collection of concrete functions.

inference = loaded_saved_model.signatures['serving_default']

5. Converting to a TF Lite model

Official TensorFlow documentation: https://tensorflow.google.cn/lite/convert/python_api?hl=en

Keras model to tflite

tf.lite.TFLiteConverter.from_keras_model

loaded_keras_model = keras.models.load_model('./graph_def_and_weights/fashion_mnist_weights.h5')
loaded_keras_model(np.ones((1, 28, 28, 1)))

# Convert the Keras model to TFLite
keras_to_tflite_converter = tf.lite.TFLiteConverter.from_keras_model(loaded_keras_model)
# Quantize the tflite model: this shrinks it with little or no loss of accuracy.
# Newer TensorFlow releases deprecate OPTIMIZE_FOR_SIZE in favor of tf.lite.Optimize.DEFAULT.
keras_to_tflite_converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]
keras_tflite = keras_to_tflite_converter.convert()

if not os.path.exists('./tflite_models'):
    os.mkdir('./tflite_models')

# with open('./tflite_models/keras_tflite', 'wb') as f:
#     f.write(keras_tflite)

# Save the quantized tflite model
with open('./tflite_models/quantized_keras_tflite', 'wb') as f:
    f.write(keras_tflite)

Concrete function to tflite

tf.lite.TFLiteConverter.from_concrete_functions

# loaded_keras_model is the Keras model loaded in the previous code
run_model = tf.function(lambda x: loaded_keras_model(x))
keras_concrete_function = run_model.get_concrete_function(
    tf.TensorSpec(loaded_keras_model.inputs[0].shape,
                  loaded_keras_model.inputs[0].dtype)
)

# Example call
keras_concrete_function(tf.constant(np.ones((1, 28, 28, 1), dtype=np.float32)))

# Convert the concrete function to TFLite
concrete_func_to_tflite_converter = tf.lite.TFLiteConverter.from_concrete_functions([keras_concrete_function])
# Quantize the tflite model: this shrinks it with little or no loss of accuracy
concrete_func_to_tflite_converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]
concrete_func_tflite = concrete_func_to_tflite_converter.convert()

# with open('./tflite_models/concrete_func_tflite', 'wb') as f:
#     f.write(concrete_func_tflite)

# Save the quantized tflite model
with open('./tflite_models/quantized_concrete_func_tflite', 'wb') as f:
    f.write(concrete_func_tflite)

SavedModel to tflite

tf.lite.TFLiteConverter.from_saved_model

#savedModel 保存为 TFLite

saved_model_to_tflite_converter = tf.lite.TFLiteConverter.from_saved_model('./keras_saved_graph/')

#量化tflite 模型,可以在损失较小精度或不影响精度的情况下减小模型大小

saved_model_to_tflite_converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]

saved_model_tflite = saved_model_to_tflite_converter.convert()

#with open('./tflite_models/saved_model_tflite', 'wb') as f:

# f.write(saved_model_tflite)

#保存量化的 tflite 模型

with open('./tflite_models/quantized_saved_model_tflite', 'wb') as f:

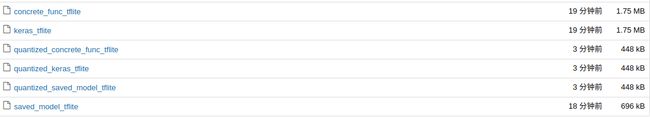

f.write(concrete_func_tflite) 上图显示生成的tflite模型,这里显示经过quentized量化后的模型大小仅占非量化模型的1/4大小
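The factor of about four is what one would expect when each weight is stored as an 8-bit integer instead of a 32-bit float. The sketch below shows the idea with a simplified per-tensor affine scheme in plain Python. It is an illustration under assumptions, not the converter's actual algorithm: quantize_int8, dequantize, and the weight values are all made up for this example.

```python
from array import array


def quantize_int8(values):
    """Affine-quantize floats to int8; returns (int8 array, scale, zero_point)."""
    lo = min(min(values), 0.0)          # extend the range so 0.0 is representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0    # fall back to 1.0 if all values are zero
    zero_point = round(-128 - lo / scale)
    quantized = array(
        "b",
        (max(-128, min(127, round(v / scale) + zero_point)) for v in values),
    )
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Map int8 values back to approximate floats."""
    return [(q - zero_point) * scale for q in quantized]


weights = array("f", [0.5, -1.25, 0.03, 2.0, -0.7, 1.1, 0.0, -2.0])  # float32 storage
q_weights, scale, zero_point = quantize_int8(list(weights))
restored = dequantize(q_weights, scale, zero_point)

float_bytes = weights.itemsize * len(weights)   # 4 bytes per value
int8_bytes = q_weights.itemsize * len(q_weights)  # 1 byte per value
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(float_bytes, int8_bytes)   # 32 8
print(max_error <= scale)        # True: the rounding error stays within one quantization step
```

A real .tflite file also contains the graph structure and metadata, so the on-disk ratio is close to, but not exactly, 1/4.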

6. Loading and running a TF Lite model

Use tf.lite.Interpreter to load the model and run inference

#
# tflite interpreter
#
# concrete_func_tflite from above is used as the example; a model file path can be loaded directly instead:
# interpreter = tf.lite.Interpreter(model_path='./xx/xx_tflite')
interpreter = tf.lite.Interpreter(model_content=concrete_func_tflite)
interpreter.allocate_tensors()  # allocate memory for all tensors

# Get the input and output tensor details
input_details = interpreter.get_input_details()
output_details = interpreter.get_output_details()

# Each is a list of dicts; their fields are needed to set the input and read the output
print(input_details)
print(output_details)

[{'name': 'x', 'index': 76, 'shape': array([ 1, 28, 28, 1], dtype=int32), 'dtype': , 'quantization': (0.0, 0)}]
[{'name': 'Identity', 'index': 0, 'shape': array([ 1, 10], dtype=int32), 'dtype': , 'quantization': (0.0, 0)}]

# Set the input manually

input_shape = input_details[0]['shape']  # input dimensions
input_data = np.ones(input_shape, dtype=np.float32)  # a NumPy array matching the input shape and dtype
interpreter.set_tensor(input_details[0]['index'], input_data)

# Run the prediction
interpreter.invoke()
output_results = interpreter.get_tensor(output_details[0]['index'])
print(output_results)  # probability distribution over the ten labels

[[2.3221240e-08 2.5767106e-01 1.1340029e-03 6.2593401e-08 2.9228692e-12
  3.0864484e-07 3.4217976e-12 6.7463984e-06 2.1669313e-01 5.2449471e-01]]

Finally, the model can be deployed to a mobile or embedded device, for example an NVIDIA Jetson Nano.

Another post of mine explains how to set up the TF Lite environment on a Jetson Nano and how to deploy and run a model there:

Deploying and running TensorFlow Lite models on Jetson Nano