Interpretable Models - Decision Rules

可以说是最简单的model了,IF-THEN的结构

一、The usefulness of a decision rule is usually summarized in two numbers: Support and accuracy。

Support(coverage of a rule):走进该rule的实例占比,The percentage of instances to which the condition of a rule applies is called the support.

Accuracy(confidence of a rule):The accuracy of a rule is a measure of how accurate the rule is in predicting the correct class for the instances to which the condition of the rule applies.

在support和accuracy之间存在tradeoff,rule需要进行组合,组合中会遇到两个问题:

-

Rules can overlap(规则重叠): What if I want to predict the value of a house and two or more rules apply and they give me contradictory predictions?

-

No rule applies(无规则可用): What if I want to predict the value of a house and none of the rules apply?

二、有两种组合形式可以去解决规则重叠的问题:

1、Decision lists (ordered)

只返回第一个匹配的规则

2、Decision sets (unordered)

多数投票选举,weight可以用rule的accuracy等来确定

针对no rule applied 的问题可以加个default rule来解决。

三、下面具体介绍三个具体的decision-rule-based方法

3.1 Learn Rules from a Single Feature (OneR)

从众多特征中选出month来作为rule,有overfit的风险

3.2 Sequential Covering

The sequential covering algorithm for two classes (one positive, one negative) works like this:

-

Start with an empty list of rules (rlist).

-

Learn a rule r.

-

While the list of rules is below a certain quality threshold (or positive examples are not yet

covered):

– Add rule r to rlist.

– Remove all data points covered by rule r. – Learn another rule on the remaining data. -

Return the decision list.

即不断学习新的rule加入到list中,而且每个rule覆盖到的点都会被去除。

接下来的问题是怎么学习一个rule?

这里不能用oneR因为它是覆盖整个feature space的。

可以用decision tree。

具体的流程如下:

-

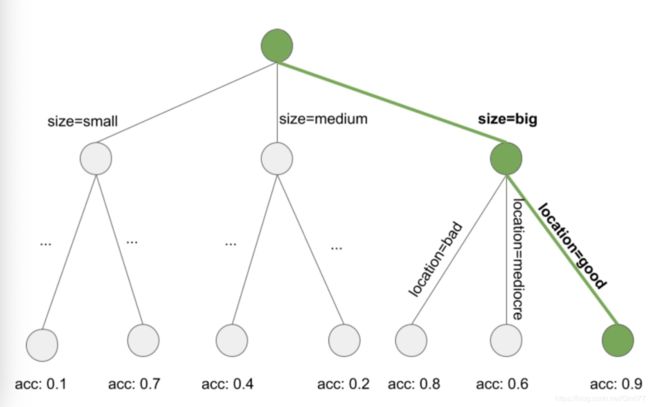

Learn a decision tree (with CART or another tree learning algorithm).

-

Start at the root node and recursively select the purest node (e.g. with the lowest misclassifi-

cation rate).

-

The majority class of the terminal node is used as the rule prediction; the path leading to that

node is used as the rule condition.把多数的类的叶子结点作为rule的预测值,path作为rule。

3.3 Bayesian Rule Lists

BRL uses Bayesian statistics to learn decision lists from frequent patterns which are pre-mined with the FP-tree algorithm

1. Pre-mine frequent patterns from the data that can be used as conditions for the decision rules.

feature value的频繁项集,可以用Apriori or FP-Growth。

2. Learn a decision list from a selection of the pre-mined rules.

3.3 Learning Bayesian Rule Lists

Our goal is to find the list that maximizes this posterior probability. Since it is not possible to find the exact best list directly from the distributions of lists, BRL suggests the following recipe:

1) Generate an initial decision list, which is randomly drawn from the priori distribution.

2) Iteratively modify the list by adding, switching or removing rules, ensuring that the resulting lists follow the posterior distribution of lists.

3) Select the decision list from the sampled lists with the highest probability according to the posteriori distribution.

寻找后验概率最大的rule list