Integrating ZooKeeper with Hadoop

Introduction

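In Hadoop 1.x there can be only one NameNode. Every HDFS read or write must first go through the NameNode, which effectively acts as the master of the Hadoop cluster, so if it goes down the whole cluster becomes unusable. This is the single-point-of-failure problem. Hadoop 2.x added high availability (HA): two NameNodes can be configured and coordinated through ZooKeeper, one in the active state and the other in standby. The active NameNode serves clients, while the standby NameNode keeps its copy of the metadata in sync with the active one. If the active NameNode fails, the standby automatically switches to active and carries on, keeping the cluster reliable and highly available.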
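Once the cluster is running, you can observe the active/standby split directly. `hdfs haadmin -getServiceState <id>` prints `active` or `standby` for a NameNode. The sketch below wraps that command in a loop. It assumes `hdfs` is on the PATH and uses the NameNode IDs nn1 and nn2 configured in hdfs-site.xml. The helper name `nn_ha_states` is hypothetical.

```shell
# Sketch: print the HA state of each NameNode ID given as an argument.
# Assumes the hdfs command is on PATH; nn_ha_states is a hypothetical helper.
nn_ha_states() {
  local id state
  for id in "$@"; do
    # haadmin asks the NameNode registered under this ID for its state
    if state=$(hdfs haadmin -getServiceState "$id" 2>/dev/null); then
      echo "$id: $state"
    else
      echo "$id: unreachable"
    fi
  done
}

# Example: nn_ha_states nn1 nn2
```

In a healthy cluster, exactly one ID reports `active` and the other reports `standby`. After the active NameNode is stopped, running the same call again should show the roles swapped.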

Modifying the configuration files

Modify Hadoop's core-site.xml


The file is in Hadoop's configuration directory, etc/hadoop under the Hadoop installation directory.

Open it for editing:

[root@zhiyou101 hadoop]# vi core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<!-- Add the following properties inside the configuration element -->

<configuration>

<!-- Specify the NameNode address for HDFS. Because the NameNodes are highly available,

we refer to a nameservice called ns here; ns is defined in hdfs-site.xml -->

<!-- Set the HDFS nameservice to ns -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://ns</value>

</property>

<!-- Directory for files Hadoop generates at runtime -->

<property>

<name>hadoop.tmp.dir</name>

<value>/var/hadoop/data</value>

</property>

<!-- ZooKeeper quorum addresses -->

<property>

<name>ha.zookeeper.quorum</name>

<value>zhiyou101:2181,zhiyou102:2181,zhiyou103:2181</value>

</property>

<!-- Maximum number of IPC client connection retries (default: 10) -->

<property>

<name>ipc.client.connect.max.retries</name>

<value>100</value>

</property>

<!-- Milliseconds the client waits before retrying a server connection (default: 1000) -->

<property>

<name>ipc.client.connect.retry.interval</name>

<value>10000</value>

</property>

</configuration>
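The same ZooKeeper host list appears here as ha.zookeeper.quorum and again in yarn-site.xml as yarn.resourcemanager.zk-address. The following is a minimal sketch that builds the `host:port` string from a single list, so both files can be filled in from the same source. `zk_quorum` is a hypothetical helper, not part of Hadoop.

```shell
# Sketch: build a ZooKeeper quorum string "host1:port,host2:port,..."
# from a port followed by a list of hosts. zk_quorum is hypothetical.
zk_quorum() {
  local port=$1 quorum="" host
  shift
  for host in "$@"; do
    # Append "host:port", adding a comma only between entries
    quorum="${quorum:+$quorum,}$host:$port"
  done
  echo "$quorum"
}

zk_quorum 2181 zhiyou101 zhiyou102 zhiyou103
# prints zhiyou101:2181,zhiyou102:2181,zhiyou103:2181
```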

Next, modify hdfs-site.xml in the same directory:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<!-- This file needs more changes than the others -->

<configuration>

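<!-- Settings from the non-HA setup, now commented out. The replica count (default: 3) is set again below, and a SecondaryNameNode is not used in an HA cluster because the standby NameNode performs checkpoints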

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>zhiyou102:50090</value>

</property>

-->

<!-- Set the HDFS nameservice to ns; it must match the value in core-site.xml -->

<property>

<name>dfs.nameservices</name>

<value>ns</value>

</property>

<!-- The ns nameservice has two NameNodes: nn1 and nn2 -->

<property>

<name>dfs.ha.namenodes.ns</name>

<value>nn1,nn2</value>

</property>

<!-- RPC address of nn1 -->

<property>

<name>dfs.namenode.rpc-address.ns.nn1</name>

<value>zhiyou101:9000</value>

</property>

<!-- HTTP address of nn1 -->

<property>

<name>dfs.namenode.http-address.ns.nn1</name>

<value>zhiyou101:50070</value>

</property>

<!-- RPC address of nn2 -->

<property>

<name>dfs.namenode.rpc-address.ns.nn2</name>

<value>zhiyou102:9000</value>

</property>

<!-- HTTP address of nn2 -->

<property>

<name>dfs.namenode.http-address.ns.nn2</name>

<value>zhiyou102:50070</value>

</property>

<!-- Where the NameNodes' shared edit log (metadata) is stored on the JournalNodes -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://zhiyou101:8485;zhiyou102:8485;zhiyou103:8485/ns</value>

</property>

<!-- Local directory where each JournalNode stores its data -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/var/hadoop/journal</value>

</property>

<!-- Enable automatic failover when a NameNode fails -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- Proxy provider that clients use to find the active NameNode after a failover -->

<property>

<name>dfs.client.failover.proxy.provider.ns</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- Fencing method -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<!-- sshfence requires passwordless SSH login -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

<!-- NameNode metadata directory -->

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///var/hadoop/hdfs/name</value>

</property>

<!-- DataNode data directory -->

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///var/hadoop/hdfs/data</value>

</property>

<!-- Number of replicas -->

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- Enable WebHDFS (REST API) on the NameNodes and DataNodes; optional -->

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>
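The comment on dfs.nameservices says it must match core-site.xml. The sketch below makes that check concrete: fs.defaultFS should be exactly `hdfs://` followed by the nameservice. It assumes `hdfs` is on the PATH, where `hdfs getconf -confKey <key>` prints the effective value of a key. It also assumes a single nameservice. `check_nameservice` is a hypothetical helper.

```shell
# Sketch: verify that fs.defaultFS (core-site.xml) points at the
# nameservice defined by dfs.nameservices (hdfs-site.xml).
# Assumes one nameservice; check_nameservice is a hypothetical helper.
check_nameservice() {
  local ns default_fs
  ns=$(hdfs getconf -confKey dfs.nameservices) || return 1
  default_fs=$(hdfs getconf -confKey fs.defaultFS) || return 1
  if [ "$default_fs" = "hdfs://$ns" ]; then
    echo "OK: fs.defaultFS=$default_fs matches dfs.nameservices=$ns"
  else
    echo "MISMATCH: fs.defaultFS=$default_fs but dfs.nameservices=$ns" >&2
    return 1
  fi
}
```

With the files in this article, the check should report `hdfs://ns` against `ns`.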

Now modify mapred-site.xml:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

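When setting up several machines, you can write this file from the shell instead of editing it in vi. Below is a minimal sketch that writes the mapred-site.xml settings above (mapreduce.framework.name = yarn) with a heredoc. It assumes its argument is Hadoop's etc/hadoop directory and that overwriting the existing file there is acceptable. `write_mapred_site` is a hypothetical helper.

```shell
# Sketch: write mapred-site.xml so MapReduce runs on YARN.
# $1 is assumed to be Hadoop's configuration directory (etc/hadoop);
# any existing mapred-site.xml there is overwritten.
write_mapred_site() {
  local conf_dir=$1
  cat > "$conf_dir/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF
}
```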
Configure yarn-site.xml:

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<!-- Enable ResourceManager HA -->

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<!-- Cluster ID shared by the ResourceManagers -->

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>yrc</value>

</property>

<!-- Logical IDs of the ResourceManagers -->

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<!-- Host of each ResourceManager -->

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>zhiyou102</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>zhiyou103</value>

</property>

<!-- ZooKeeper quorum addresses -->

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>zhiyou101:2181,zhiyou102:2181,zhiyou103:2181</value>

</property>

<!-- Auxiliary service NodeManagers use to serve map output to reducers (the shuffle) -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>
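yarn.resourcemanager.zk-address should list the same ZooKeeper ensemble as ha.zookeeper.quorum in core-site.xml. The sketch below compares the two values directly from the XML files. It is a rough line-based extractor, not an XML parser. It assumes each `<name>` line is followed by a line holding its `<value>`, as in the files above, and it does not understand XML comments. `xml_prop` and `check_zk_address` are hypothetical helpers.

```shell
# Sketch: print the <value> that follows <name>$2</name> in file $1.
# Line-based only: assumes the value sits on one line after the name.
xml_prop() {
  awk -v key="$2" '
    index($0, "<name>" key "</name>") { found = 1; next }
    found && /<value>/ {
      sub(/.*<value>/, ""); sub(/<\/value>.*/, ""); print; exit
    }
  ' "$1"
}

# Compare the ZooKeeper lists in core-site.xml ($1) and yarn-site.xml ($2).
check_zk_address() {
  local zk_core zk_yarn
  zk_core=$(xml_prop "$1" ha.zookeeper.quorum)
  zk_yarn=$(xml_prop "$2" yarn.resourcemanager.zk-address)
  if [ -n "$zk_core" ] && [ "$zk_core" = "$zk_yarn" ]; then
    echo "OK: both files use $zk_core"
  else
    echo "MISMATCH: core-site.xml has '$zk_core', yarn-site.xml has '$zk_yarn'" >&2
    return 1
  fi
}
```

To use it, run `check_zk_address core-site.xml yarn-site.xml` from the etc/hadoop directory.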

The last file to configure is slaves. It lists the worker hosts, one per line; each of them runs a DataNode (and a NodeManager):

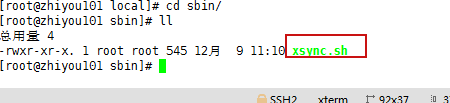
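You can also write the slaves file from the shell. The following is a minimal sketch. It assumes the worker list is zhiyou101, zhiyou102 and zhiyou103, the three nodes used throughout this setup, since the text does not restate the contents of the image. It also assumes the first argument is Hadoop's etc/hadoop directory. `write_slaves` is a hypothetical helper.

```shell
# Sketch: write the slaves file, one worker host per line.
# $1 is assumed to be Hadoop's configuration directory; the remaining
# arguments are the worker hosts. write_slaves is a hypothetical helper.
write_slaves() {
  local conf_dir=$1
  shift
  printf '%s\n' "$@" > "$conf_dir/slaves"
}

# Example (hypothetical path):
# write_slaves /path/to/hadoop/etc/hadoop zhiyou101 zhiyou102 zhiyou103
```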

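Finally, sync Hadoop, including the modified configuration, to every node. The following script copies a file or directory to the same absolute path on zhiyou101 through zhiyou103 with rsync: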

#!/bin/bash

# 1. Get the number of arguments; exit with an error if there are none

pcount=$#

if ((pcount == 0)); then

echo "no args"

exit 1

fi

# 2. Get the file name

p1=$1

fname=$(basename "$p1")

echo "fname=$fname"

# 3. Resolve the parent directory to an absolute path

pdir=$(cd -P "$(dirname "$p1")" && pwd) || exit 1

echo "pdir=$pdir"

# 4. Get the current user name

user=$(whoami)

# 5. Copy to each host, zhiyou101 through zhiyou103

for ((host = 101; host < 104; host++)); do

echo "--------------- zhiyou$host ----------------"

rsync -rvl "$pdir/$fname" "$user@zhiyou$host:$pdir"

done
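Before copying anything, it helps to see which rsync commands the script will run. The sketch below is a dry-run variant of the script: it resolves the path the same way (`basename`, then `cd -P` on the parent directory) and prints one rsync command per host instead of executing it. `sync_plan` is a hypothetical helper, and the hosts match the script's loop.

```shell
# Sketch: dry run of the sync script; prints the rsync command for each
# host instead of running it. sync_plan is a hypothetical helper.
sync_plan() {
  local target=$1 fname pdir user host
  fname=$(basename "$target")
  # Resolve the parent directory to an absolute path without symlinks
  pdir=$(cd -P "$(dirname "$target")" && pwd) || return 1
  user=$(whoami)
  for host in 101 102 103; do
    echo "rsync -rvl $pdir/$fname $user@zhiyou$host:$pdir"
  done
}
```

For example, running `sync_plan etc/hadoop` from the Hadoop installation directory prints the three commands that would copy the configuration directory.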

Starting the HA cluster

1. Start ZooKeeper on each node

[root@zhiyou101 etc]# /usr/local/zookeeper/bin/zkServer.sh start

2. Start the JournalNode cluster by running the following on each of zhiyou101, zhiyou102 and zhiyou103

[root@zhiyou101 sbin]# ./hadoop-daemon.sh start journalnode

3. On zhiyou101, format the ZKFC state in ZooKeeper

[root@zhiyou101 sbin]# hdfs zkfc -formatZK

4. On zhiyou101, format the NameNode

[root@zhiyou101 sbin]# hadoop namenode -format

5. On zhiyou101, start the NameNode

[root@zhiyou101 sbin]# ./hadoop-daemon.sh start namenode

6. On zhiyou102, sync the metadata and start the standby NameNode

Sync the metadata from the active NameNode:

[root@zhiyou102 hadoop]# hdfs namenode -bootstrapStandby

Start the standby NameNode:

[root@zhiyou102 hadoop]# hadoop-daemon.sh start namenode

7. Then start all DataNodes (hadoop-daemons.sh runs the command on every host listed in slaves)

[root@zhiyou101 sbin]# ./hadoop-daemons.sh start datanode

8. Start YARN on a ResourceManager node (zhiyou102)

[root@zhiyou102 hadoop]# start-yarn.sh

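This starts only the ResourceManager on zhiyou102 and the NodeManagers on all three nodes; the second ResourceManager, on zhiyou103, still has to be started.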

9. Start the ResourceManager on zhiyou103 separately

[root@zhiyou103 bin]# yarn-daemon.sh start resourcemanager

10. On zhiyou101, start the ZKFCs

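The ZKFC (ZooKeeper Failover Controller) is the process that switches NameNodes automatically. Our NameNodes run on zhiyou101 and zhiyou102, and each of these two nodes runs its own ZKFC process. Because hadoop-daemons.sh runs the command on every host listed in slaves, one command on zhiyou101 is enough.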

[root@zhiyou101 sbin]# hadoop-daemons.sh start zkfc
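After step 10, every daemon should be running. `jps` lists running Java processes by main class name, so you can check a node quickly by comparing that list with the daemons the node is expected to run. The sketch below assumes `jps` (from the JDK) is on the PATH. `missing_daemons` is a hypothetical helper. The expected list per node follows the layout in this article; for example, zhiyou101 runs ZooKeeper, a JournalNode, nn1, a DataNode, a ZKFC and a NodeManager.

```shell
# Sketch: report expected daemons that jps does not show on this node.
# Class names: QuorumPeerMain (ZooKeeper), JournalNode, NameNode, DataNode,
# DFSZKFailoverController (ZKFC), ResourceManager, NodeManager.
# Returns 1 if anything is missing. missing_daemons is hypothetical.
missing_daemons() {
  local running name missing=0
  # jps prints "<pid> <MainClass>"; keep only the class names
  running=$(jps 2>/dev/null | awk '{print $2}')
  for name in "$@"; do
    # -x matches whole lines, so NameNode does not match SecondaryNameNode
    if ! printf '%s\n' "$running" | grep -qx "$name"; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}

# Example on zhiyou101:
# missing_daemons QuorumPeerMain JournalNode NameNode DataNode DFSZKFailoverController NodeManager
```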

Testing

To be continued.