Setup notes for the PyTorch version of Faster R-CNN

The code used here is Ruotian Luo's open-source implementation, on GitHub at: https://github.com/ruotianluo/pytorch-faster-rcnn

0. Install dependencies

torchvision 0.3, opencv-python, easydict 1.6, tensorboard-pytorch, scipy, pyyaml, …

1. Download the project code and install the COCO API

- Download the project code

git clone https://github.com/ruotianluo/pytorch-faster-rcnn.git

- Install the Python COCO API

From the project root directory:

cd data

git clone https://github.com/pdollar/coco.git

cd coco/PythonAPI

make  # builds pycocotools locally; run "make install" instead to install pycocotools into the Python site-packages

cd ../../..

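2. Prepare the datasets

The main datasets to download are COCO and VOC. VOC2007 is used as the example here (the steps for VOC2012 are exactly the same).

- Download the VOC test set, trainval set, and devkit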

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtrainval_06-Nov-2007.tar

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtest_06-Nov-2007.tar

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCdevkit_08-Jun-2007.tar

- Extract the archives

tar xvf VOCtrainval_06-Nov-2007.tar

tar xvf VOCtest_06-Nov-2007.tar

tar xvf VOCdevkit_08-Jun-2007.tar

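- Create a symlink for the dataset

# $FRCN_ROOT stands for the Faster R-CNN root directory; it is a placeholder, so replace it with your own path

# $VOCdevkit is the path where the dataset is actually stored; use an absolute path rather than a relative one when creating the link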

cd $FRCN_ROOT/data

ln -s $VOCdevkit VOCdevkit2007 # for VOC2012, use: ln -s $VOCdevkit VOCdevkit2012

Handle the COCO dataset the same way.
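A quick sanity check after creating the link can save a failed run later. The sketch below is only an illustration: `missing_voc_subdirs` is a hypothetical helper (not part of the repository), and it assumes the standard VOC release layout with `Annotations`, `ImageSets`, and `JPEGImages` under `VOC2007`.

```python
import os

# Sub-directories found in a standard PASCAL VOC release (assumed layout).
EXPECTED_SUBDIRS = ("Annotations", "ImageSets", "JPEGImages")


def missing_voc_subdirs(devkit_link, year="2007"):
    """Return the expected sub-directories missing under <devkit_link>/VOC<year>.

    An empty list means the layout looks right.
    """
    voc_root = os.path.join(devkit_link, "VOC" + year)
    # os.path.isdir follows symlinks, so a dangling link is reported as missing.
    return [d for d in EXPECTED_SUBDIRS
            if not os.path.isdir(os.path.join(voc_root, d))]


if __name__ == "__main__":
    # Run from $FRCN_ROOT after creating the symlink.
    print(missing_voc_subdirs(os.path.join("data", "VOCdevkit2007")))
```

If the printed list is non-empty, the link most likely points at the wrong directory level or was created from a relative path that no longer resolves.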

3. Test with a pretrained model

Here, "pretrained model" means an already-trained Faster R-CNN model.

- Download a pretrained model

Download the models from the author's Google Drive (the link is given in the project README).

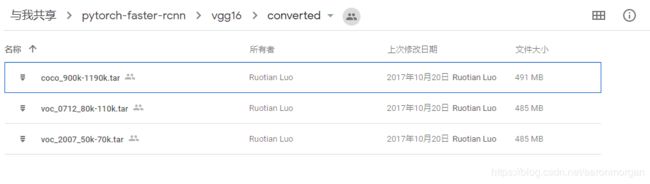

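Five families of models are provided, based on mobile, resnet50, resnet101, resnet152, and vgg16.

Each folder has two parts: models trained by the author, and models converted directly from tf-faster-rcnn.

The models are further split by training data into three groups: voc2007, voc2007+2012, and coco.

You can also download the tf-faster-rcnn pretrained models yourself, either through the link or with the download script fetch_faster_rcnn_models.sh under data/scripts.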

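Finally, convert them to PyTorch with the scripts provided by the author (ruotianluo_faster_rcnn_pytorch/tools provides conversion scripts for resnet, mobile, and vgg). After extracting the downloaded model, run: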

python tools/convert_from_tensorflow.py --tensorflow_model resnet_model.ckpt

python tools/convert_from_tensorflow_vgg.py --tensorflow_model vgg_model.ckpt

This creates a .pth file with the same name in the TensorFlow model directory.

- Create a folder for the pretrained model and a symlink so that it can be used

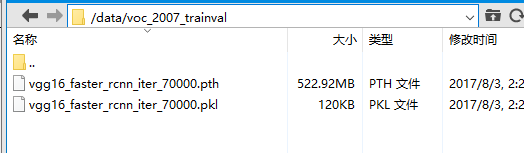

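In this example the voc_2007_50k-70k.tar model under vgg16 was downloaded, so create a voc_2007_trainval folder under data/ (for voc_0712_80k-110k.tar, create a voc_2007_trainval+voc_2012_trainval folder instead). Put the downloaded and extracted pretrained model files inside it.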

tar xvf voc_2007_50k-70k.tar

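Directory layout after extraction.

In the project root directory, run the following commands (separate them with ; when typing them in the terminal):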

NET=vgg16;

TRAIN_IMDB=voc_2007_trainval; # no spaces are allowed around the equals sign

mkdir -p output/${NET}/${TRAIN_IMDB};

cd output/${NET}/${TRAIN_IMDB};

ln -s ../../../data/voc_2007_trainval ./default;

cd ../../..

- Test custom images with the pretrained model (demo.py or demo.ipynb)

# Again, run from the project root

GPU_ID=0;

CUDA_VISIBLE_DEVICES=${GPU_ID} python ./tools/demo.py --net vgg16 --dataset pascal_voc

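--net defaults to res101, and --dataset defaults to pascal_voc_0712.

After it runs, the result looks roughly like this:

To test a few images of your own, put images ending in jpg into data/demo, then add the image names to the code in demo.py.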

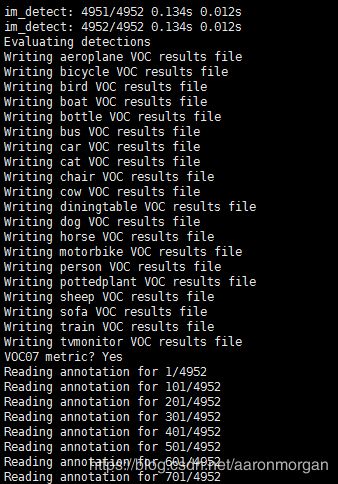
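Instead of typing each file name by hand, the names could be collected from the folder. This is a minimal sketch, not the repository's code: `list_demo_images` is a hypothetical helper, and the variable name `im_names` in the usage note is an assumption about how demo.py stores its image list.

```python
import os


def list_demo_images(demo_dir):
    """Return the sorted file names in demo_dir that end in .jpg (case-insensitive)."""
    return sorted(name for name in os.listdir(demo_dir)
                  if name.lower().endswith(".jpg"))


if __name__ == "__main__":
    # Run from the project root; data/demo is the folder named in the text.
    print(list_demo_images(os.path.join("data", "demo")))
```

Under that assumption, the hand-written list in demo.py would be replaced with something like `im_names = list_demo_images(os.path.join("data", "demo"))`.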

- Run the pretrained model on the test set

GPU_ID=0;

./experiments/scripts/test_faster_rcnn.sh $GPU_ID pascal_voc vgg16

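If accuracy on the test set is far below the values reported by the author, NMS may not have been compiled properly. See Issue 5.

4. Train your own model

- Get a model pretrained on ImageNet (that is, ImageNet-pretrained weights for the network used in the feature-extraction part)

For the vgg16 model, run the following from the project root: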

mkdir -p data/imagenet_weights

cd data/imagenet_weights

python # open python in terminal and run the following Python code

import torch

from torch.utils.model_zoo import load_url

from torchvision import models

sd = load_url("https://s3-us-west-2.amazonaws.com/jcjohns-models/vgg16-00b39a1b.pth")

sd['classifier.0.weight'] = sd['classifier.1.weight']

sd['classifier.0.bias'] = sd['classifier.1.bias']

del sd['classifier.1.weight']

del sd['classifier.1.bias']

sd['classifier.3.weight'] = sd['classifier.4.weight']

sd['classifier.3.bias'] = sd['classifier.4.bias']

del sd['classifier.4.weight']

del sd['classifier.4.bias']

torch.save(sd, "vgg16.pth")

Alternatively, download the model manually from pytorch-vgg.

cd ../..
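The Python snippet above renames four entries of the downloaded state dict by hand (classifier.1 to classifier.0, classifier.4 to classifier.3). The same remapping can be written as a table plus a small helper, which is easier to check. This is only a sketch on plain dictionaries: `rename_keys` and `VGG16_KEY_MAP` are hypothetical names, and the example does not import torch.

```python
def rename_keys(state_dict, mapping):
    """Return a copy of state_dict with keys renamed according to mapping.

    Keys not listed in mapping are kept unchanged. A KeyError is raised if a
    key listed in mapping is absent, so a typo fails loudly.
    """
    missing = [old for old in mapping if old not in state_dict]
    if missing:
        raise KeyError("keys not found: %s" % missing)
    return {mapping.get(key, key): value for key, value in state_dict.items()}


# The four renames performed in the snippet above.
VGG16_KEY_MAP = {
    "classifier.1.weight": "classifier.0.weight",
    "classifier.1.bias": "classifier.0.bias",
    "classifier.4.weight": "classifier.3.weight",
    "classifier.4.bias": "classifier.3.bias",
}
```

With a loaded state dict `sd`, the renamed copy would be `rename_keys(sd, VGG16_KEY_MAP)`, which can then be passed to `torch.save` as before.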

For ResNet-101:

mkdir -p data/imagenet_weights

cd data/imagenet_weights

# download from my gdrive (link in )

mv resnet101-caffe.pth res101.pth

cd ../..

Model download link: pytorch-resnet (download the model with caffe in its name)

For MobileNet V1:

mkdir -p data/imagenet_weights

cd data/imagenet_weights

# download from my gdrive (https://drive.google.com/open?id=0B7fNdx_jAqhtZGJvZlpVeDhUN1k)

mv mobilenet_v1_1.0_224.pth.pth mobile.pth

cd ../..

- Train

From the project root:

./experiments/scripts/train_faster_rcnn.sh [GPU_ID] [DATASET] [NET]

# GPU_ID is the GPU you want to train on

# NET in {vgg16, res50, res101, res152} is the network arch to use

# DATASET {pascal_voc, pascal_voc_0712, coco} is defined in train_faster_rcnn.sh

# Examples:

./experiments/scripts/train_faster_rcnn.sh 0 pascal_voc vgg16

./experiments/scripts/train_faster_rcnn.sh 1 coco res101

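Note: before training your own model, delete the symlink created earlier for the demo (ln -s ../../../data/voc_2007_trainval ./default),

because newly trained models are saved to that same directory. (At training time, the code checks whether a snapshot exists under that path; if one does, it restores it and resumes training from that snapshot.)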
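To see which snapshot a resumed run would pick up, the directory can be scanned for the highest iteration number. The sketch below is an assumption-laden illustration, not the repository's restore logic: `latest_snapshot` is a hypothetical helper, and the `<prefix>_iter_<N>.pth` naming is inferred from names such as vgg16_faster_rcnn_iter_70000 that appear in these notes.

```python
import glob
import os
import re


def latest_snapshot(output_dir, prefix):
    """Return (path, iteration) for the snapshot with the highest iteration.

    Looks for files named <prefix>_iter_<N>.pth in output_dir and returns
    None when no such file exists.
    """
    pattern = re.compile(re.escape(prefix) + r"_iter_(\d+)\.pth$")
    best = None
    for path in glob.glob(os.path.join(output_dir, prefix + "_iter_*.pth")):
        match = pattern.search(os.path.basename(path))
        if match is None:
            continue
        iteration = int(match.group(1))
        # Compare numerically: as strings, "5000" would sort after "10000".
        if best is None or iteration > best[1]:
            best = (path, iteration)
    return best


if __name__ == "__main__":
    print(latest_snapshot("output/vgg16/voc_2007_trainval/default",
                          "vgg16_faster_rcnn"))
```

A result of None means training would start from scratch; anything else suggests an existing snapshot would be restored.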

- Visualize the training process with tensorboardX:

tensorboard --logdir=tensorboard/vgg16/voc_2007_trainval/ --port=7001

tensorboard --logdir=tensorboard/vgg16/coco_2014_train+coco_2014_valminusminival/ --port=7002

- Test and evaluate

./experiments/scripts/test_faster_rcnn.sh [GPU_ID] [DATASET] [NET]

# GPU_ID is the GPU you want to test on

# NET in {vgg16, res50, res101, res152} is the network arch to use

# DATASET {pascal_voc, pascal_voc_0712, coco} is defined in test_faster_rcnn.sh

# Examples:

./experiments/scripts/test_faster_rcnn.sh 0 pascal_voc vgg16

./experiments/scripts/test_faster_rcnn.sh 1 coco res101

You can use tools/reval.sh for re-evaluation.

By default, trained networks are saved under:

output/[NET]/[DATASET]/default/

Test outputs are saved under:

output/[NET]/[DATASET]/default/[SNAPSHOT]/

Test results are saved as detections.pkl, which holds a 2-D list variable named all_boxes.

all_boxes[cls][image] = N x 5 array of detections in (x1, y1, x2, y2, score)

all_boxes[j][i]: detected bbox(location and score) in image i under class j
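The indexing order (class first, then image) is easy to get backwards, so a short walk over the structure may help. This is a sketch under stated assumptions: `load_detections` and `confident_detections` are hypothetical helpers, the class-name list must be supplied by the caller, and a real detections.pkl holds NumPy arrays, so NumPy must be installed to unpickle it.

```python
import pickle


def load_detections(path):
    """Load all_boxes from a detections.pkl file."""
    with open(path, "rb") as f:
        # encoding="latin1" only matters for pickles written by Python 2;
        # it is harmless for files written by Python 3.
        return pickle.load(f, encoding="latin1")


def confident_detections(all_boxes, class_names, thresh=0.5):
    """Yield (class_name, image_index, (x1, y1, x2, y2), score) for every
    detection whose score is at least thresh."""
    for cls_idx, per_image in enumerate(all_boxes):
        for img_idx, dets in enumerate(per_image):
            # Each row is (x1, y1, x2, y2, score); this works for an N x 5
            # NumPy array as well as for a plain list of 5-tuples.
            for x1, y1, x2, y2, score in dets:
                if score >= thresh:
                    yield (class_names[cls_idx], img_idx,
                           (x1, y1, x2, y2), score)
```

For example, `list(confident_detections(load_detections(path), class_names, 0.8))` would give all detections scoring at least 0.8, where `path` and `class_names` are supplied by the caller.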

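Once the test results are available, evaluation runs. During evaluation, the annotations of every test image are assembled into a dict whose key is the image name and whose value is a list of all objects in that image. Each object is itself a dict with the attributes 'name', 'pose', 'truncated', 'difficult', and 'bbox' (a list, [x1, y1, x2, y2]). This dict is finally saved to: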

/data/VOCdevkit2007/VOC2007/ImageSets/Main/test.txt_annots.pkl

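On later runs this dict is loaded directly rather than rebuilt.

For each class, the ap (a scalar), precision (num_dets,), and recall (num_dets,) over the whole test set are computed and saved as a dict {'rec': rec, 'prec': prec, 'ap': ap} to the corresponding file: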
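The per-image object list described above can be illustrated by parsing one VOC-style XML annotation. This is a minimal sketch using only the standard library: `parse_voc_objects` is a hypothetical name, and the tag names (`object`, `bndbox`, `xmin`, and so on) follow the standard PASCAL VOC annotation format rather than anything quoted from the repository.

```python
import xml.etree.ElementTree as ET


def parse_voc_objects(xml_text):
    """Parse a VOC annotation (as a string) into a list of object dicts
    with the keys 'name', 'pose', 'truncated', 'difficult', and 'bbox'."""
    root = ET.fromstring(xml_text)
    objects = []
    for obj in root.findall("object"):
        box = obj.find("bndbox")
        objects.append({
            "name": obj.find("name").text,
            "pose": obj.find("pose").text,
            "truncated": int(obj.find("truncated").text),
            "difficult": int(obj.find("difficult").text),
            # bbox order matches the text: [x1, y1, x2, y2]
            "bbox": [int(box.find(tag).text)
                     for tag in ("xmin", "ymin", "xmax", "ymax")],
        })
    return objects
```

Building the full dict then amounts to one entry per image, along the lines of `annots[image_name] = parse_voc_objects(xml_text)`.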

/output/vgg16/voc_2007_test/default/vgg16_faster_rcnn_iter_70000/classname_pr.pkl
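To make the relation between the saved 'rec', 'prec', and 'ap' values concrete, here is a sketch of the classic VOC2007 11-point interpolated AP. Which AP variant the repository actually applies is not stated in these notes, so treat this as one illustration; `voc07_ap` is a hypothetical name.

```python
def voc07_ap(rec, prec):
    """11-point interpolated average precision (VOC2007 style).

    rec and prec are equal-length sequences of recall and precision values,
    one pair per ranked detection. For each threshold t in 0.0, 0.1, ..., 1.0
    the best precision among points with recall >= t is taken (0 if there is
    no such point), and the eleven values are averaged.
    """
    total = 0.0
    for i in range(11):
        t = i / 10.0
        candidates = [p for r, p in zip(rec, prec) if r >= t]
        total += max(candidates) if candidates else 0.0
    return total / 11.0
```

With the saved dict loaded as `d`, `voc07_ap(d['rec'], d['prec'])` could be compared against `d['ap']`; a mismatch would suggest the file was produced with a different AP variant.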

Tensorboard information for train and validation is saved under:

tensorboard/[NET]/[DATASET]/default/

tensorboard/[NET]/[DATASET]/default_val/

Console logs are saved to:

experiments/logs/${NET}_${TRAIN_IMDB}_${EXTRA_ARGS_SLUG}_${NET}.txt.`date +'%Y-%m-%d_%H-%M-%S'`

Dataset cache files are saved to:

data/cache/voc_2007_trainval_gt_roidb.pkl

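5. Train on your own data

The simplest approach is to convert your data into the pascal_voc_2007 data format, overwrite the original data with it, and then train as described in section 4.

For how to build the dataset and swap it in, see: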

https://blog.csdn.net/u011574296/article/details/78953681

References:

[1] https://blog.csdn.net/xzy5210123/article/details/81530993#commentBox

[2] https://github.com/ruotianluo/pytorch-faster-rcnn/tree/0.4