【OpenCV】使用官方YOLOv3模型进行目标检测

文章目录

- 前期准备

- 处理步骤

- 效果

- 代码

参考:

YOLO官网: https://pjreddie.com/darknet/yolo/

OpenCV官方文档: https://docs.opencv.org/3.4.5/da/d9d/tutorial_dnn_yolo.html

大佬博客: https://www.learnopencv.com/deep-learning-based-object-detection-using-yolov3-with-opencv-python-c/

大佬代码: https://github.com/spmallick/learnopencv/blob/master/ObjectDetection-YOLO/object_detection_yolo.cpp

前期准备

本人用的是VS2015+OpenCV3.4.5(版本太低的话无法支持yolo3)

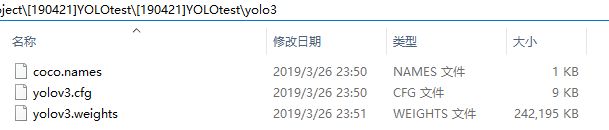

YOLO3模型下载:

1.(yolov3.weights)权重文件:https://pjreddie.com/media/files/yolov3.weights

2.(yolov3.cfg)配置文件:https://github.com/pjreddie/darknet/blob/master/cfg/yolov3.cfg

3.(coco.names)对象名称文件:https://github.com/pjreddie/darknet/blob/master/data/coco.names

**提供我的百度云打包下载(* ̄︶ ̄):链接:https://pan.baidu.com/s/1S--B32JVWJEKVb7L97mtJA 提取码:1r04

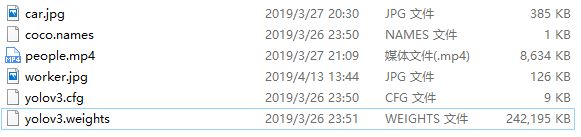

把3个模型文件放到项目文件中,之后程序通过路径调用。

(本人是在项目文件中新建了一个yolo3的文件,因此在程序中调用时记得修改路径)

顺便把检测对象(图片或视频)也放到同一个路径里面。

处理步骤

(这部分主要是参考翻译自大佬的文章,如果只是想跑程序,不想了解过程,可以直接跳过这一节)

1.初始化参数

YOLOv3算法生成边界框作为预测的检测输出,每个预测框都与置信度得分相关。主要涉及以下几个参数:

(1)置信阈值参数(confThreshold):首先,将忽略置信阈值参数下的所有框以进行进一步处理,置信得分在该阈值以下的识别对象会被去除掉;

(2)非最大抑制参数(nmsThreshold):之后,剩下的框将进行非最大抑制,以删除多余的重叠边界框。该参数如果太低的话会检测不到有重叠的对象,参数太高可能会出现同一个对象有几个重复的框;

(3)宽度(inpWidth)和高度(inpHeight):接下来,设置网络输入图像的输入宽度和高度的默认值。将它们中的每一个设置为416,这样就可以将我们的运行与Yolov3的作者给出的Darknet的C代码进行比较。(还可以将这两个选项都更改为320以获得更快的结果,或者更改为608以获得更准确的结果)

// Initialize the parameters

floatconfThreshold = 0.5;// Confidence threshold

floatnmsThreshold = 0.4;// Non-maximum suppression threshold

intinpWidth = 416;// Width of network's input image

intinpHeight = 416;// Height of network's input image

2.导入模型和类

之前我们准备的YOLO3模型3个文件在这里导入,包括:(coco.names)对象名称文件,(yolov3.weights)权重文件,(yolov3.cfg)配置文件。(记得修改路径)

这里将DNN后端设置为OpenCV,目标为CPU。这里可以尝试将首选目标设置为cv.dnn.dnn_target_opencl以在GPU上运行。但是,当前的opencv版本只能在英特尔的GPU上测试,如果没有英特尔的GPU,它会自动切换到CPU。

// Load names of classes

string classesFile = "coco.names";

ifstream ifs(classesFile.c_str());

string line;

while(getline(ifs, line)) classes.push_back(line);

// Give the configuration and weight files for the model

String modelConfiguration = "yolov3.cfg";

String modelWeights = "yolov3.weights";

// Load the network

Net net = readNetFromDarknet(modelConfiguration, modelWeights);

net.setPreferableBackend(DNN_BACKEND_OPENCV);

net.setPreferableTarget(DNN_TARGET_CPU);

3.读取输入

这一步就是OpenCV的常规操作,可以读取图片、视频或者是摄像头,另外就是还可以设置一个输出来保存我们检测的效果。

if (parser.has("image"))

{

// Open the image file

str = parser.get<String>("image");

ifstream ifile(str);

if (!ifile) throw("error");

cap.open(str);

str.replace(str.end()-4, str.end(), "_yolo_out.jpg");

outputFile = str;

}

else if (parser.has("video"))

{

// Open the video file

str = parser.get<String>("video");

ifstream ifile(str);

if (!ifile) throw("error");

cap.open(str);

str.replace(str.end()-4, str.end(), "_yolo_out.avi");

outputFile = str;

}

// Open the webcaom

else cap.open(parser.get<int>("device"));

4.处理每一帧图像

神经网络的输入图像需要采用一种称为blob的特定格式。

从输入图像或视频流中读取帧后,将通过blobFromImage函数将其转换为神经网络的输入blob。 在此过程中,它使用比例因子1/255将图像像素值缩放到0到1的目标范围。 它还将图像的大小调整为给定大小(416,416)而不进行裁剪。

(PS:我们不在此处执行任何均值减法,因此将[0,0,0]传递给函数的mean参数,并将swapRB参数保持为其默认值1。)

之后输出blob作为输入传递到网络,并运行正向传递以获得预测边界框列表作为网络输出。 这些框经过后处理步骤,滤除了低置信度分数。 这里在图像左上角打印出每帧的推理时间, 然后将检测图像输出。

// Process frames.

while (waitKey(1) < 0)

{

// get frame from the video

cap >> frame;

// Stop the program if reached end of video

if (frame.empty()) {

cout << "Done processing !!!" << endl;

cout << "Output file is stored as " << outputFile << endl;

waitKey(3000);

break;

}

// Create a 4D blob from a frame.

blobFromImage(frame, blob, 1/255.0, cvSize(inpWidth, inpHeight), Scalar(0,0,0), true, false);

//Sets the input to the network

net.setInput(blob);

// Runs the forward pass to get output of the output layers

vector<Mat> outs;

net.forward(outs, getOutputsNames(net));

// Remove the bounding boxes with low confidence

postprocess(frame, outs);

// Put efficiency information. The function getPerfProfile returns the

// overall time for inference(t) and the timings for each of the layers(in layersTimes)

vector<double> layersTimes;

double freq = getTickFrequency() / 1000;

double t = net.getPerfProfile(layersTimes) / freq;

string label = format("Inference time for a frame : %.2f ms", t);

putText(frame, label, Point(0, 15), FONT_HERSHEY_SIMPLEX, 0.5, Scalar(0, 0, 255));

// Write the frame with the detection boxes

Mat detectedFrame;

frame.convertTo(detectedFrame, CV_8U);

if (parser.has("image")) imwrite(outputFile, detectedFrame);

else video.write(detectedFrame);

}

下面介绍一些代码中的调用函数。

4a.获得输出层名称

OpenCV的Net类中的 forward函数 需要知道结束层,它应该在网络中运行。 由于我们想要遍历整个网络,因此需要确定网络的最后一层。 我们通过使用函数 getUnconnectedOutLayers() 来实现这一点,该函数给出了未连接的输出层的名称,这些输出层基本上是网络的最后一层。 然后我们运行网络的正向传递以从输出层获得输出,如前面的代码片段net.forward(outs, getOutputsNames(net))。

// Get the names of the output layers

vector<String> getOutputsNames(const Net& net)

{

static vector<String> names;

if (names.empty())

{

//Get the indices of the output layers, i.e. the layers with unconnected outputs

vector<int> outLayers = net.getUnconnectedOutLayers();

//get the names of all the layers in the network

vector<String> layersNames = net.getLayerNames();

// Get the names of the output layers in names

names.resize(outLayers.size());

for (size_t i = 0; i < outLayers.size(); ++i)

names[i] = layersNames[outLayers[i] - 1];

}

return names;

}

4b.对网络输出进行后处理

网络输出边界框均由类的数量+5长度的向量表示。

前5个元素分别表示 中心x、 中心y、 宽度 、 高度 和边界框包围对象的 置信度。

其余的元素是与每个类(即对象类型)相关联的置信度,最后该框被分配给对应于最高置信度分数的类。

一个边界框中的最高分数也被称为 置信度 。如果该框的置信度小于给定阈值,则边界框将被删除,不考虑进一步处理。

置信度等于或大于置信阈值的方框将受到非最大抑制参数的影响,以减少重叠框的数量。

// Remove the bounding boxes with low confidence using non-maxima suppression

void postprocess(Mat& frame, const vector<Mat>& outs)

{

vector<int> classIds;

vector<float> confidences;

vector<Rect> boxes;

for (size_t i = 0; i < outs.size(); ++i)

{

// Scan through all the bounding boxes output from the network and keep only the

// ones with high confidence scores. Assign the box's class label as the class

// with the highest score for the box.

float* data = (float*)outs[i].data;

for (int j = 0; j < outs[i].rows; ++j, data += outs[i].cols)

{

Mat scores = outs[i].row(j).colRange(5, outs[i].cols);

Point classIdPoint;

double confidence;

// Get the value and location of the maximum score

minMaxLoc(scores, 0, &confidence, 0, &classIdPoint);

if (confidence > confThreshold)

{

int centerX = (int)(data[0] * frame.cols);

int centerY = (int)(data[1] * frame.rows);

int width = (int)(data[2] * frame.cols);

int height = (int)(data[3] * frame.rows);

int left = centerX - width / 2;

int top = centerY - height / 2;

classIds.push_back(classIdPoint.x);

confidences.push_back((float)confidence);

boxes.push_back(Rect(left, top, width, height));

}

}

}

// Perform non maximum suppression to eliminate redundant overlapping boxes with

// lower confidences

vector<int> indices;

NMSBoxes(boxes, confidences, confThreshold, nmsThreshold, indices);

for (size_t i = 0; i < indices.size(); ++i)

{

int idx = indices[i];

Rect box = boxes[idx];

drawPred(classIds[idx], confidences[idx], box.x, box.y,

box.x + box.width, box.y + box.height, frame);

}

}

4c.绘制预测框

最后,我们在输入图像上绘制通过非最大抑制参数过滤后的框,并给出它们对应的类标签和置信度。

// Draw the predicted bounding box

void drawPred(int classId, float conf, int left, int top, int right, int bottom, Mat& frame)

{

//Draw a rectangle displaying the bounding box

rectangle(frame, Point(left, top), Point(right, bottom), Scalar(0, 0, 255));

//Get the label for the class name and its confidence

string label = format("%.2f", conf);

if (!classes.empty())

{

CV_Assert(classId < (int)classes.size());

label = classes[classId] + ":" + label;

}

//Display the label at the top of the bounding box

int baseLine;

Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

top = max(top, labelSize.height);

putText(frame, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 0.5, Scalar(255,255,255));

}

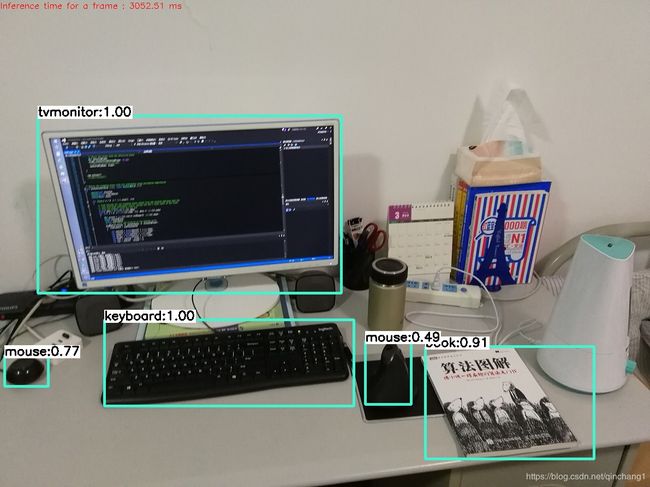

效果

博主做了一个YOLO检测视频的效果,内容是《逃避可耻》片尾曲《恋》的MV,感兴趣的可以通过以下链接观看:

https://www.bilibili.com/video/av50257274

记得投个硬币(* ̄︶ ̄)

YOLOv3官方模型可以识别80种物体,分别如下:(大家可以都试试)

| person | bicycle | car | motorbike | aeroplane |

| bus | train | truck | boat | traffic light |

| fire hydrant | stop sign | parking meter | bench | bird |

| cat | dog | horse | sheep | cow |

| elephant | bear | zebra | giraffe | backpack |

| umbrella | handbag | tie | suitcase | frisbee |

| skis | snowboard | sports ball | kite | baseball bat |

| baseball glove | skateboard | surfboard | tennis racket | bottle |

| wine glass | cup | fork | knife | spoon |

| bowl | banana | apple | sandwich | orange |

| broccoli | carrot | hot dog | pizza | donut |

| cake | chair | sofa | pottedplant | bed |

| diningtable | toilet | tvmonitor | laptop | mouse |

| remote | keyboard | cell phone | microwave | oven |

| toaster | sink | refrigerator | book | clock |

| vase | scissors | teddy bear | hair drier | toothbrush |

代码

#include 代码说明:

//**************************** You should change ******************************//

//Dir of object (choose the input source, image or video)

const char* keys = "{image | yolo3/table.jpg | input image }"

"{video | yolo3/people.mp4 | input video }"

"{device | 0 | input video }";

//Dir of yolo3 model

string classesFile = "yolo3/coco.names"; //Names of classes

String modelConfiguration = "yolo3/yolov3.cfg"; //Configuration file

String modelWeights = "yolo3/yolov3.weights"; //Weight file

// Initialize the parameters

float confThreshold = 0.4; // Confidence threshold

float nmsThreshold = 0.3; // Non-maximum suppression threshold

int inpWidth = 416; // Width of network's input image

int inpHeight = 416; // Height of network's input image

//*****************************************************************************//

代码开头的这部分需要修改,分为3部分:

(1)第一部分为图片或视频输入的路径,这里默认是图片输入,如果要视频输入的话,将图片路径改为“none”,例如:

const char* keys = "{image | | input image }"

"{video | yolo3/people.mp4 | input video }"

"{device | 0 | input video }";

如果要改成摄像头输入的话,把图片和视频都改成“none”,例如:

const char* keys = "{image | | input image }"

"{video | | input video }"

"{device | 0 | input video }";

(2)第二部分为YOLO模型的三个文件输入路径,这个前面有说明。

(3)第三部分是参数设置,这部分在前面也有说明。

如果错误,欢迎指正!