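This scraper crawls novels from multiple categories across the whole Biquge (笔趣阁) site. It works through the site in four levels and stores the results in a MySQL database, which serves as the data source for a website.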
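As a rough orientation, the four levels can be read as: the category list, the book list of each category, each book's page, and each chapter page. The sketch below illustrates that shape only and is not the author's code. `FAKE_SITE`, `crawl`, `demo`, and the two `save_*` callbacks are hypothetical names, an in-memory dictionary stands in for the HTTP requests and HTML parsing, and plain lists stand in for the MySQL tables. Treating chapter pages as the fourth level is an assumption.

```python
# Hypothetical in-memory stand-in for the site: each key is a page, each value
# is what a parser would extract from that page. Not real site data.
FAKE_SITE = {
    "home": [("1", "fantasy")],                          # level 1: (sort_id, sort_name)
    "book1": ["novel/a"],                                # level 2: book URLs in category 1
    "novel/a": ("Book A", ["novel/a/1", "novel/a/2"]),   # level 3: title and chapter URLs
    "novel/a/1": "text of chapter 1",                    # level 4: chapter content
    "novel/a/2": "text of chapter 2",
}


def crawl(fetch, save_novel, save_chapter):
    """Walk the four levels, storing each novel before its chapters."""
    for sort_id, sort_name in fetch("home"):
        for book_url in fetch("book" + sort_id):
            title, chapter_urls = fetch(book_url)
            novel_id = save_novel(sort_id, sort_name, title)
            for chapter_url in chapter_urls:
                save_chapter(novel_id, chapter_url, fetch(chapter_url))


def demo():
    """Run the crawl against FAKE_SITE, collecting rows in lists instead of MySQL."""
    novels, chapters = [], []

    def save_novel(sort_id, sort_name, title):
        novels.append((sort_id, sort_name, title))
        return len(novels)  # plays the role of the database's auto-increment id

    def save_chapter(novel_id, chapter_url, content):
        chapters.append((novel_id, chapter_url, content))

    crawl(FAKE_SITE.__getitem__, save_novel, save_chapter)
    return novels, chapters


if __name__ == "__main__":
    print(demo())
```

Saving the novel first and handing its id down to the chapter rows mirrors how `addnovel` returns `lastrowid` for `addchapter` to use in the listing.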

The full code is as follows:

import re
import urllib.request

# Database access
import pymysql

class Sql(object):

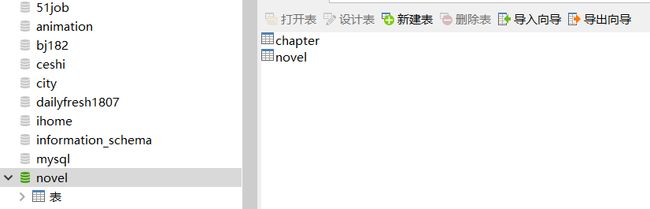

db = pymysql.connect(host="localhost", port=3306, db="novel", user="root", password="root", charset="utf8")

print('连接上了!')

def addnovel(self, sort_id, sort_name, bookname, imgurl, description, status, author):

cur = self.db.cursor()

cur.execute(

'insert into novel(booktype,sortname,name,imgurl,description,status,author) values("%s","%s","%s","%s","%s","%s","%s")' % (

sort_id, sort_name, bookname, imgurl, description, status, author))

lastrowid = cur.lastrowid

cur.close()

self.db.commit()

return lastrowid

def addchapter(self, lastrowid, chaptname, content):

cur = self.db.cursor()

cur.execute('insert into chapter(novelid,title,content)value("%s","%s","%s")' % (lastrowid, chaptname, content))

cur.close()

self.db.commit()

#

#

mysql = Sql()

def type():  # Fetch the novel categories (note: this name shadows the built-in type())
    html = urllib.request.urlopen("https://www.duquanben.com/").read()
    html = html.decode('gbk').replace('\n', '').replace('\t', '').replace('\r', '')
    reg = r'fn-left(.*?)subnav'
    blocks = re.findall(reg, html)
    for i in blocks:
        sorts = re.findall(r'book(.*?)/0/1/">(.*?)', i)
        for sort_id, sort_name in sorts:
            getList(sort_id, sort_name)

def getList(sort_id, sort_name):  # Fetch the links to the books in one category
    html = urllib.request.urlopen('https://www.duquanben.com/book%s/0/1/' % sort_id).read().decode('gbk')
    # print(html)
    reg = r'.*?href="(.*?)" target=".*?">.*? '
    urlList = re.findall(reg, html)
    for url in urlList:
        # print(urlList)
        Novel(url, sort_id, sort_name)

def Novel(url, sort_id, sort_name):

html = urllib.request.urlopen(url).read().decode('gbk').replace('\n', '').replace('\t', '').replace('\r',

'').replace(

'

', '').replace(' ', '')

# print(html)

chapturl, bookname = re.findall(

'投票推荐开始阅读',

html)[0]

description = re.findall(r'内容简介.*?intro.*?>(.*?)