软件篇-03-基于ORB_SLAM2手写SLAM稠密地图构建实现

/-----------------------------------

本篇文章首发于知乎专栏

https://zhuanlan.zhihu.com/QIQI-HITwh

/---------------------------------------------------------------------------------

声明: 本文使用的方法不是从内部修改ORBSLAM2源码以获取稠密点云,而是先从ZED2

sdk获取以摄像头坐标系为描述的三维点云/作为点云地图的一个子集,然后融合IMU与ORB_SLAM2进行实时定位,通过点云滤波,点云融合建图。

以下是在室内实验的demo,由于是纯双目,没有深度传感器,在白墙和地板上有些失真,下次等移动平台到了我会去室外实验。

一、获取实时坐标和点云图

使用ORBSLAM2获取当前姿态,同时ZED2 利用其IMU数据对速度加速度积分得出另一个姿态,考虑到ORBSLAM2的响应及时性和IMU数据的漂移,当两者数据相差较大时停止建图,等待恢复正常,否则以ORB_SLAM2的姿态信息为准,同时手动添加损失量对IMU姿态信息进行校准。在某些情况下ORB_SLAM2可能会跟丢,此时通过IMU数据积分获取迪卡尔空间位移变化量,并回到之前的位置重新确定位置。

if(!startTimer)

{

timeLast = ros::Time::now().toSec();

startTimer = 1;

ROS_ERROR("\noffect between two poseMsgs is too big, stop mapping...");

ROS_WARN("the offset from zed2Pose to orbPose2 is:\nx:%f y:%f z:%f \n-------------"

,carTF_zed2.pose.position.x - carTF_orb.pose.position.x

,carTF_zed2.pose.position.y - carTF_orb.pose.position.y

,carTF_zed2.pose.position.z - carTF_orb.pose.position.z);

}else if(timeNow = ros::Time::now().toSec() - timeLast > 10)

{

startTimer = 0;

timeLast = timeNow = 0;

ROS_WARN("Don't warry, it seems that something wrong happend, trying to fix it...");

x_bias = carTF_zed2.pose.position.x - carTF_orb.pose.position.x;

y_bias = carTF_zed2.pose.position.y - carTF_orb.pose.position.y;

z_bias = carTF_zed2.pose.position.z - carTF_orb.pose.position.z;

}

这里我订阅ZED2 sdk输出的实时点云,有一点需要注意的是实时的点云和姿态信息必需要时间戳同步,不然融合出来的地图会发生很大的偏移和扭曲。使用ros message_filters管理消息同步,可以设置弱同步和强同步。

imu_sub = n.subscribe("/zed/zed_node/imu/data", 1, &MapBuild::imuCallback,this);

carTF_orb_sub = n.subscribe("/orb_slam2_stereo/pose", 1, &MapBuild::carTF_orb_Callback,this);

pointCloud_sub = new message_filters::Subscriber<sensor_msgs::PointCloud2>

( n, "/zed2/zed_node/point_cloud/cloud_registered", 1);

carTF_zed2_sub = new message_filters::Subscriber<geometry_msgs::PoseStamped>

(n, "/zed2/zed_node/pose", 1);

sync_ = new message_filters::Synchronizer<sync_pol> (sync_pol(10), *pointCloud_sub, *carTF_zed2_sub);

sync_->registerCallback(boost::bind(&MapBuild::buildMap_callback, this, _1, _2));

二、点云坐标系变换

我们当前的点云是相对摄像头坐标系的,但是建图就要将这些点转换到世界坐标系,点的坐标系变换我这里就不讲了,不懂的去看一下《机器人学导论》/题外话“英文的原汁原味”。要用到的工具当然是Eigen了。这里吐槽一下,Eigen和geometry_msgs::PoseStamped里有关四元数的写法顺序是颠倒的,大家注意一下。

Quaterniond quaternion(carTF_zed2.pose.orientation.w,

carTF_zed2.pose.orientation.x,

carTF_zed2.pose.orientation.y,

carTF_zed2.pose.orientation.z);

Matrix3d rotation_matrix;

rotation_matrix=quaternion.toRotationMatrix();

// transform the cloud link to the "map" frame

Vector3d position_transform

(carTF_zed2.pose.position.x - x_bias,

carTF_zed2.pose.position.y - y_bias,

carTF_zed2.pose.position.z - z_bias);

for (int i=0; i<cloud_xyz->width; i++)

{

Vector3d position_(cloud_xyz->at(i).x,cloud_xyz->at(i).y,cloud_xyz->at(i).z);

Vector3d position = rotation_matrix*position_ + position_transform;

cloud_xyz->at(i).x = position[0];

cloud_xyz->at(i).y = position[1];

cloud_xyz->at(i).z = position[2];

}

三、点云滤波

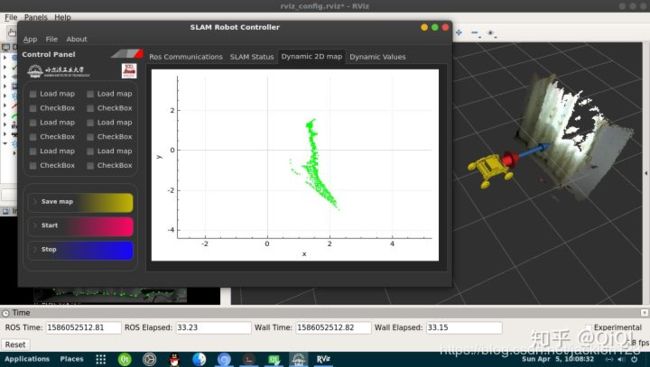

可以看到,图中绿色的点云原本是墙面和窗帘,但是在边缘却有很多飞点

可以看到,图中绿色的点云原本是墙面和窗帘,但是在边缘却有很多飞点

我们获取的原始点云有很多的噪声点并且密度太大,TX2吃不消,因此这里要对这些点云进行滤波,这里使用pcl的体素滤波和直通滤波。

// Perform the actual filtering

// VoxelGrid(decrease the memory occupation) & PassThrough(delete some incorrect points)

pcl::PCLPointCloud2* cloud2 = new pcl::PCLPointCloud2;

pcl::PCLPointCloud2ConstPtr cloudPtr(cloud2);

pcl_conversions::toPCL(*cloud, *cloud2);

// VoxelGrid

pcl::PCLPointCloud2* cloud_filtered_1 = new pcl::PCLPointCloud2;

pcl::PCLPointCloud2ConstPtr cloud_filter_1_Ptr(cloud_filtered_1);

pcl::VoxelGrid<pcl::PCLPointCloud2> filter_1;

filter_1.setInputCloud (cloudPtr);

filter_1.setLeafSize (0.03, 0.03, 0.03);

filter_1.filter(*cloud_filtered_1);

// PassThrough

pcl::PCLPointCloud2* cloud_filtered_2 = new pcl::PCLPointCloud2;

pcl::PCLPointCloud2ConstPtr cloud_filter_2_Ptr(cloud_filtered_2);

pcl::PassThrough<pcl::PCLPointCloud2> filter_2;

filter_2.setInputCloud (cloud_filter_1_Ptr);

filter_2.setFilterFieldName ("y");

filter_2.setFilterLimits (-1.2, 1.2);

// filter_2.setFilterLimitsNegative (true);

filter_2.filter(*cloud_filtered_2);

pcl::PCLPointCloud2 cloud_filtered_3;

filter_2.setInputCloud (cloud_filter_2_Ptr);

filter_2.setFilterFieldName ("z");

filter_2.setFilterLimits (-2, 2);

// filter_2.setFilterLimitsNegative (true);

filter_2.filter(cloud_filtered_3);

四、点云融合

// fused the current cloud to the fused cloud

*cloud_xyzFused += *cloud_xyz;

pcl::toROSMsg(*cloud_xyzFused, mPointcloudFusedMsg);

mPointcloudFusedMsg.header.frame_id = "map";

pointCloudFused_pub.publish(mPointcloudFusedMsg);

五、去除重复的点云

实验过程中不可避免的会回到某个之前来过的姿态,这个时候不能任由重复的点云在我的地图上大行其道,因此需要实时判断当前的点云是否已经添加到地图中去了,使用pcl::registration::CorrespondenceEstimation判断当前点云和地图有多少重复的点,该数目与点云总体数目之比如果大于某个阈值,则丢弃该点云。

pcl::registration::CorrespondenceEstimation<pcl::PointXYZRGB, pcl::PointXYZRGB> est;

cloud_xyzFusedPtr = cloud_xyzFused->makeShared();

cloud_xyzPtr = cloud_xyz->makeShared();

est.setInputSource (cloud_xyzPtr);

est.setInputTarget (cloud_xyzFusedPtr);

pcl::Correspondences all_correspondences;

// Determine all reciprocal correspondences

est.determineReciprocalCorrespondences (all_correspondences);

// filter the reciprocal points cloud

if(1.0*all_correspondences.size()/cloud_xyz->width < 0.9)

{

// fused the current cloud to the fused cloud

*cloud_xyzFused += *cloud_xyz;

pcl::toROSMsg(*cloud_xyzFused, mPointcloudFusedMsg);

mPointcloudFusedMsg.header.frame_id = "map";

pointCloudFused_pub.publish(mPointcloudFusedMsg);

}

我们下期再见

./下期就有小车实际的实验了