hive on spark 利用maven重新编译spark

缘由:使用hive on spark 进行hivesql操作的时候报以下错误:

Failed to execute spark task, with exception 'org.apache.hadoop.hive.ql.metadata.HiveException(Failed to create spark client.)' FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.spark.SparkTask查了一堆资料之后,有的说hiveException 30041是内存不足的原因,有的说是yarn-site.xml内存配置的问题,试过之后都不行,于是决定重新编译以下spark。

------------------------------------------------------

1. 安装maven

// 1)将安装包解压到指定目录

[hadoop@hadoop01 apache-maven-3.6.0]$ tar -zxf /opt/maven/apache-maven-3.5.3-bin.tar.gz -C /home/hadoop/apps

// 2)配置maven环境变量,并测试maven是否安装成功

[hadoop@hadoop01 apache-maven-3.6.0]$ vi ~/.bash_profile

# maven

export MAVEN_HOME=/home/hadoop/apps/apache-maven-3.6.0

export PATH=$PATH:$MAVEN_HOME/bin

#export MAVEN_OPTS="-Xmx2048m -XX:MetaspaceSize=1024m -XX:MaxMetaspaceSize=1524m -Xss2m"

#export PATH=$PATH:$MAVEN_HOME/bin

// 3)更新环境变量

[hadoop@hadoop01 apache-maven-3.6.0]$ source ~/.bash_profile

// 4)运行命令查看maven是否配置成功

[hadoop@hadoop01 apache-maven-3.6.0]$ mvn -version

Apache Maven 3.6.0 (3383c37e1f9e9b3bc3df5050c29c8aff9f295297; 2018-012-21T03:31:02-04:00)

Maven home: /home/hadoop/apps/apache-maven-3.6.0

Java version: 1.8.0_171, vendor: Oracle Corporation

Java home: /usr/local/jdk1.8.0_171/jre

Default locale: en_US, platform encoding: UTF-8

OS name: "linux", version: "3.10.0-123.el7.x86_64", arch: "amd64", family: "unix"

// 5)建议配置一下阿里云镜像

[hadoop@hadoop01 apps]$cd /home/hadoop/app/maven-3.6.0/conf

[hadoop@hadoop01 conf]$vi settings.xml

nexus-aliyun

central

Nexus aliyun

http://maven.aliyun.com/nexus/content/groups/public

2.下载Spark源码

1)解压spark-2.3.1到工作目录

[hadoop@hadop01 apps]$ tar -zxf spark-2.3.1.tgz

[hadoop@hadop01 apps]$ cd spark-2.3.1

[root@master work]# ll

total 4

drwxrwxr-x. 29 andy andy 4096 Jun 1 13:34 spark-2.3.1

[hadoop@hadop01 apps]$ cd spark-2.3.1/

[hadoop@hadop01 spark-2.3.1]$ ll

total 228

-rw-rw-r--. 1 andy andy 2318 Jun 1 13:34 appveyor.yml

drwxrwxr-x. 3 andy andy 43 Jun 1 13:34 assembly

drwxrwxr-x. 2 andy andy 4096 Jun 1 13:34 bin

drwxrwxr-x. 2 andy andy 75 Jun 1 13:34 build

drwxrwxr-x. 9 andy andy 4096 Jun 1 13:34 common

drwxrwxr-x. 2 andy andy 4096 Jun 1 13:34 conf

-rw-rw-r--. 1 andy andy 995 Jun 1 13:34 CONTRIBUTING.md

drwxrwxr-x. 3 andy andy 30 Jun 1 13:34 core

drwxrwxr-x. 5 andy andy 47 Jun 1 13:34 data

drwxrwxr-x. 6 andy andy 4096 Jun 1 13:34 dev

drwxrwxr-x. 9 andy andy 4096 Jun 1 13:34 docs

drwxrwxr-x. 3 andy andy 30 Jun 1 13:34 examples

drwxrwxr-x. 15 andy andy 4096 Jun 1 13:34 external

drwxrwxr-x. 3 andy andy 30 Jun 1 13:34 graphx

drwxrwxr-x. 2 andy andy 20 Jun 1 13:34 hadoop-cloud

drwxrwxr-x. 3 andy andy 30 Jun 1 13:34 launcher

-rw-rw-r--. 1 andy andy 18045 Jun 1 13:34 LICENSE

drwxrwxr-x. 2 andy andy 4096 Jun 1 13:34 licenses

drwxrwxr-x. 3 andy andy 30 Jun 1 13:34 mllib

drwxrwxr-x. 3 andy andy 30 Jun 1 13:34 mllib-local

-rw-rw-r--. 1 andy andy 24913 Jun 1 13:34 NOTICE

-rw-rw-r--. 1 andy andy 101718 Jun 1 13:34 pom.xml

drwxrwxr-x. 2 andy andy 4096 Jun 1 13:34 project

drwxrwxr-x. 6 andy andy 4096 Jun 1 13:34 python

drwxrwxr-x. 3 andy andy 4096 Jun 1 13:34 R

-rw-rw-r--. 1 andy andy 3809 Jun 1 13:34 README.md

drwxrwxr-x. 5 andy andy 64 Jun 1 13:34 repl

drwxrwxr-x. 5 andy andy 46 Jun 1 13:34 resource-managers

drwxrwxr-x. 2 andy andy 4096 Jun 1 13:34 sbin

-rw-rw-r--. 1 andy andy 17624 Jun 1 13:34 scalastyle-config.xml

drwxrwxr-x. 6 andy andy 4096 Jun 1 13:34 sql

drwxrwxr-x. 3 andy andy 30 Jun 1 13:34 streaming

drwxrwxr-x. 3 andy andy 30 Jun 1 13:34 tools3.编译Spark源码

//编译spark之前要确保 scala与Java的环境变量已经配置好了

#scala

export SCALA_HOME=/home/hadoop/apps/scala-2.12.7

export PATH=$PATH:$SCALA_HOME/bin

#jdk

export JAVA_HOME=/home/hadoop/apps/jdk1.8.0_171

export PATH=$PATH:$JAVA_HOME/bin

#spark

export SPARK_HOME=/home/hadoop/apps/spark-2.3.1-bin-hadoop2.7

export PATH=$PATH:$SPARK_HOME/bin

// 1) 设置Maven内存使用,您需要通过MAVEN_OPTS配置Maven的内存使用量,官方推荐配置如下:

[hadoop@hadoop01 spark-2.3.1]$ set -Xmx2048m -XX:MetaspaceSize=1024m -XX:MaxMetaspaceSize

=1524m -Xss2m

// 2) 编译 (命令查询网址:http://spark.apache.org/docs/latest/building-spark.html)

// 2.1) 首先,在spark-2.3.1目录下执行:

[hadoop@hadoop01 spark-2.3.1]$./build/mvn -Pyarn -Phadoop-2.7 -Dhadoop.version=2.7.3 -DskipTests clean package

/**

* 注意 2.1执行成功之后才能执行2.2,顺序不能乱,否则报错

*/

// 2.2) 然后,在spark-2.3.1/dev目录下执行:

[hadoop@hadoop01 dev]$./make-distribution.sh --name "hadoop2-without-hive" --tgz "-Pyarn,hadoop-provided,hadoop-2.7.3,parquet-provided"

[INFO] Scanning for projects...

Downloading from central: https://repo.maven.apache.org/maven2/org/apache/apache/18/apache-18.pom

Downloaded from central: https://repo.maven.apache.org/maven2/org/apache/apache/18/apache-18.pom (16 kB at 4.8 kB/s)

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Build Order:

[INFO]

[INFO] Spark Project Parent POM [pom]

[INFO] Spark Project Tags [jar]

[INFO] Spark Project Sketch [jar]

[INFO] Spark Project Local DB [jar]

[INFO] Spark Project Networking [jar]

[INFO] Spark Project Shuffle Streaming Service [jar]

[INFO] Spark Project Unsafe [jar]

[INFO] Spark Project Launcher [jar]

[INFO] Spark Project Core [jar]

[INFO] Spark Project ML Local Library [jar]

[INFO] Spark Project GraphX [jar]

[INFO] Spark Project Streaming [jar]

[INFO] Spark Project Catalyst [jar]

[INFO] Spark Project SQL [jar]

[INFO] Spark Project ML Library [jar]

[INFO] Spark Project Tools [jar]

[INFO] Spark Project Hive [jar]

[INFO] Spark Project REPL [jar]

[INFO] Spark Project YARN Shuffle Service [jar]

[INFO] Spark Project YARN [jar]

[INFO] Spark Project Hive Thrift Server [jar]

[INFO] Spark Project Assembly [pom]

[INFO] Spark Integration for Kafka 0.10 [jar]

[INFO] Kafka 0.10 Source for Structured Streaming [jar]

[INFO] Spark Project Examples [jar]

[INFO] Spark Integration for Kafka 0.10 Assembly [jar]

[INFO]

[INFO] -----------------< org.apache.spark:spark-parent_2.11 >-----------------

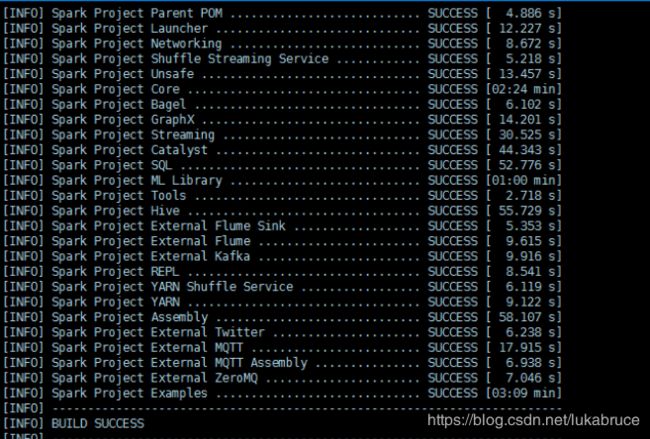

3)编译成功

注:

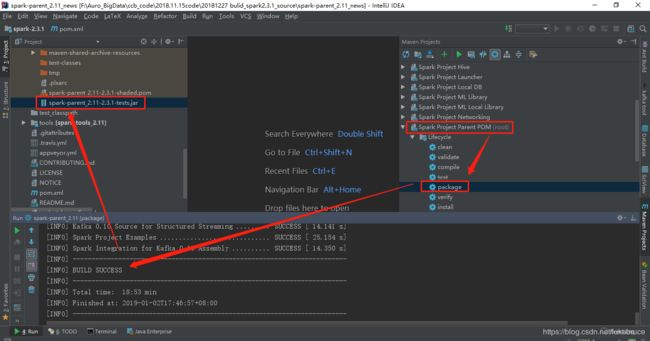

(1)如果idea上的maven编译到spark-core的时候报错

解决办法:

直接到spark-2.3.1源码包下启动git-bash,然后执行:

$ ./build/mvn -Pyarn -Phadoop-2.7 -Dhadoop.version=2.7.3 -DskipTests clean package

执行时间大概一个小时左右,具体看网速,成功之后,再次启动idea 的maven项目下打包