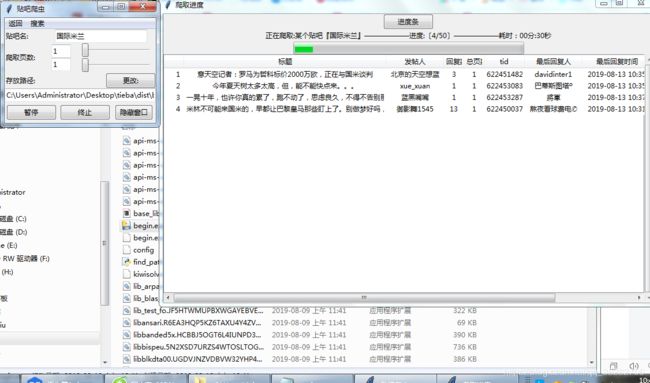

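Python tkinter GUI: launching Scrapy in multiple processes to crawl Baidu Tieba replies, showing crawl progress, searching by replier, generating word clouds for a chosen time range, and packaging it as an exe with PyInstaller (Part 10)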

All the .py files have now been covered; what's left is packaging everything into an exe!
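Because the crawl runs in a separate process, the frozen Windows exe needs `multiprocessing.freeze_support()` as the first call in the `__main__` block of the entry script (`begin.py` in the spec). Without it, each child process re-runs the program's main code and opens another GUI window. A minimal sketch, assuming a pipe carries progress back to the GUI; `crawl_pages` and `crawl_worker` are hypothetical stand-ins for the real crawl, which would presumably drive Scrapy (for example through `scrapy.crawler.CrawlerProcess`):

```python
import multiprocessing as mp

def crawl_pages(tieba_name, max_page):
    """Hypothetical stand-in for the real Scrapy crawl: yields one progress tuple per page."""
    for page in range(1, max_page + 1):
        yield tieba_name, page

def crawl_worker(tieba_name, max_page, conn):
    """Runs in the child process and reports progress to the GUI process through a pipe."""
    for item in crawl_pages(tieba_name, max_page):
        conn.send(item)
    conn.send(None)  # sentinel: crawl finished
    conn.close()

def main():
    # duplex=False: recv_conn can only receive, send_conn can only send
    recv_conn, send_conn = mp.Pipe(duplex=False)
    proc = mp.Process(target=crawl_worker, args=("python", 3, send_conn))
    proc.start()
    # In the real GUI a progress bar would be updated here instead of printing
    while (item := recv_conn.recv()) is not None:
        print("progress:", item)
    proc.join()

if __name__ == "__main__":
    # On Windows, child processes are started by re-launching the exe itself;
    # freeze_support() makes those launches run the worker and exit instead of
    # falling through to main() and opening another window.
    mp.freeze_support()
    main()
```

When the script is not frozen, `freeze_support()` does nothing, so the same `begin.py` works both from source and as an exe.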

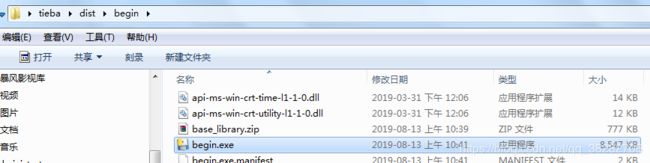

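Packaging Scrapy into an exe is a real hassle, and the result is huge: the final output came to a whopping 88 MB!!!

On top of that, I couldn't get it into a single file; it has to be a folder full of files. These are the files needed this time: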

Required configuration files:

- `scrapy` (folder)
  - `mime.types`
  - `VERSION`
- `scrapy.cfg`
- `wordcloud` (folder)
  - `stopwords`
  - `simsun.ttc` (font file)
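`mime.types` and `VERSION` ship inside the installed Scrapy package and `stopwords` inside the wordcloud package; the spec copies them from local `scrapy` and `wordcloud` folders next to it. A minimal sketch for filling those folders, assuming it is run from the project folder; `package_dir` and `copy_package_files` are hypothetical helpers. `simsun.ttc` is not part of either package (on Windows it usually lives in `C:\Windows\Fonts`), so copy that one by hand.

```python
import importlib.util
import shutil
from pathlib import Path

def package_dir(name):
    """Folder of an installed package, or None if it is not installed."""
    spec = importlib.util.find_spec(name)
    if spec is None or spec.origin is None:
        return None
    return Path(spec.origin).parent

def copy_package_files(src_dir, names, dest_dir):
    """Copy the named files from src_dir into dest_dir (created if missing)."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    return [Path(shutil.copy2(Path(src_dir) / name, dest / name)) for name in names]

if __name__ == "__main__":
    # Data files each library reads at runtime; the local folder name matches the package
    wanted = {"scrapy": ["mime.types", "VERSION"], "wordcloud": ["stopwords"]}
    for pkg, names in wanted.items():
        src = package_dir(pkg)
        if src is None:
            print(f"{pkg} is not installed, skipping")
            continue
        for path in copy_package_files(src, names, pkg):
            print("copied", path)
```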

My own .py files have to go in as well, which makes it fiddly, so I simply packaged everything with a spec file!!
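Since `scrapy.cfg`, the `wordcloud` folder and the project's own .py files are bundled as data, the program has to locate them inside the build output at runtime. The post doesn't show what `find_path.py` does, so the following is only a sketch of the usual PyInstaller pattern; `base_dir` and `resource_path` are hypothetical names.

```python
import sys
from pathlib import Path

def base_dir():
    """Folder that holds the bundled data files (scrapy.cfg, wordcloud/, the .py files)."""
    if getattr(sys, "frozen", False):
        # Inside a PyInstaller build, sys._MEIPASS points at the bundle's data folder:
        # the exe's own folder in older one-folder builds, an "_internal" subfolder since 6.0.
        return Path(getattr(sys, "_MEIPASS", Path(sys.executable).parent))
    # Running from source: the folder of the script that was started (e.g. begin.py)
    return Path(sys.argv[0]).resolve().parent

def resource_path(*parts):
    """Absolute path to a bundled file, e.g. resource_path("wordcloud", "simsun.ttc")."""
    return base_dir().joinpath(*parts)
```

Scrapy looks for `scrapy.cfg` starting from the current working directory, so presumably calling `os.chdir(base_dir())` at startup would let it find the bundled copy; this is an assumption about the project's wiring, not something the post shows.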

begin.spec

```python
# -*- mode: python -*-
import sys

# Scrapy's import graph is deep; a higher limit avoids RecursionError during analysis
sys.setrecursionlimit(5000)

block_cipher = None

a = Analysis(["begin.py"],
             pathex=["C:\\Users\\Administrator\\Desktop\\tieba"],
             binaries=[],
             # (source on disk, destination folder inside the bundle)
             datas=[(".\\scrapy", "scrapy"),          # mime.types, VERSION
                    (".\\scrapy.cfg", "."),
                    (".\\tieba", "tieba"),
                    (".\\my_tk.py", "."), (".\\tieba_log.py", "."),
                    (".\\search.py", "."), (".\\find_path.py", "."),
                    (".\\wordcloud", "wordcloud")],   # stopwords, simsun.ttc
             # Scrapy loads its components from dotted-path strings in its settings,
             # which PyInstaller's static analysis cannot follow
             hiddenimports=["scrapy.spiderloader",
                            "scrapy.statscollectors",
                            "scrapy.logformatter",
                            "scrapy.dupefilters",
                            "scrapy.squeues",
                            "scrapy.extensions.spiderstate",
                            "scrapy.extensions.corestats",
                            "scrapy.extensions.telnet",
                            "scrapy.extensions.logstats",
                            "scrapy.extensions.memusage",
                            "scrapy.extensions.memdebug",
                            "scrapy.extensions.feedexport",
                            "scrapy.extensions.closespider",
                            "scrapy.extensions.debug",
                            "scrapy.extensions.httpcache",
                            "scrapy.extensions.statsmailer",
                            "scrapy.extensions.throttle",
                            "scrapy.core.scheduler",
                            "scrapy.core.engine",
                            "scrapy.core.scraper",
                            "scrapy.core.spidermw",
                            "scrapy.core.downloader",
                            "scrapy.downloadermiddlewares.stats",
                            "scrapy.downloadermiddlewares.httpcache",
                            "scrapy.downloadermiddlewares.cookies",
                            "scrapy.downloadermiddlewares.useragent",
                            "scrapy.downloadermiddlewares.httpproxy",
                            "scrapy.downloadermiddlewares.ajaxcrawl",
                            "scrapy.downloadermiddlewares.chunked",
                            "scrapy.downloadermiddlewares.decompression",
                            "scrapy.downloadermiddlewares.defaultheaders",
                            "scrapy.downloadermiddlewares.downloadtimeout",
                            "scrapy.downloadermiddlewares.httpauth",
                            "scrapy.downloadermiddlewares.httpcompression",
                            "scrapy.downloadermiddlewares.redirect",
                            "scrapy.downloadermiddlewares.retry",
                            "scrapy.downloadermiddlewares.robotstxt",
                            "scrapy.spidermiddlewares.depth",
                            "scrapy.spidermiddlewares.httperror",
                            "scrapy.spidermiddlewares.offsite",
                            "scrapy.spidermiddlewares.referer",
                            "scrapy.spidermiddlewares.urllength",
                            "scrapy.pipelines",
                            # "scrapy.pipelines.images",
                            "scrapy.core.downloader.handlers.http",
                            "scrapy.core.downloader.contextfactory",
                            # modules used by the project's own scripts (duplicates removed)
                            "json", "csv", "re", "scrapy", "copy", "codecs",
                            "emoji", "webbrowser", "subprocess", "shutil", "urllib",
                            "numpy", "PIL", "wordcloud", "matplotlib", "datetime",
                            "jieba", "traceback"],
             hookspath=[],
             runtime_hooks=[],
             excludes=[],
             win_no_prefer_redirects=False,
             win_private_assemblies=False,
             cipher=block_cipher)

pyz = PYZ(a.pure, a.zipped_data, cipher=block_cipher)

# exclude_binaries=True together with COLLECT below produces a one-folder build
exe = EXE(pyz,
          a.scripts,
          [],
          exclude_binaries=True,
          name="begin",
          debug=False,
          bootloader_ignore_signals=False,
          strip=False,
          upx=True,
          console=False)  # GUI app: no console window

coll = COLLECT(exe,
               a.binaries,
               a.zipfiles,
               a.datas,
               strip=False,
               upx=True,
               name="begin")
```

Mind the paths: nothing in the spec may contain Chinese characters!!
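A stray Chinese character in a path is easy to miss, so a quick check before building can help. A minimal sketch, assuming the spec is named `begin.spec` and the script runs from the project folder; it flags non-ASCII text both in the spec and in the folder path (`str.isascii` needs Python 3.7+). `non_ascii_lines` and `check_build_paths` are hypothetical helpers.

```python
from pathlib import Path

def non_ascii_lines(text):
    """Return (line_number, line) for every line containing a non-ASCII character."""
    return [(no, line) for no, line in enumerate(text.splitlines(), start=1)
            if not line.isascii()]

def check_build_paths(spec_file="begin.spec"):
    """Report non-ASCII characters in the project folder's path and in the spec file."""
    problems = []
    spec_path = Path(spec_file).resolve()
    if not str(spec_path.parent).isascii():
        problems.append(f"project folder path is not ASCII: {spec_path.parent}")
    if spec_path.exists():
        for no, line in non_ascii_lines(spec_path.read_text(encoding="utf-8")):
            problems.append(f"{spec_path.name}:{no}: {line.strip()}")
    return problems

if __name__ == "__main__":
    for problem in check_build_paths():
        print(problem)
```

If it prints nothing, build as usual with `pyinstaller begin.spec`.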

Result:

Done!!

I've put the complete project on GitHub, so you can download it directly!!

https://github.com/zhishiluguoliu6/crawl-baidu-tieba