A Simple DenseNet Implementation in TensorFlow

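Preface

I have recently been studying deep learning networks and came across DenseNet. It looked simple enough to implement, so I built a DenseNet on a one-dimensional dataset.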

DenseNet Architecture

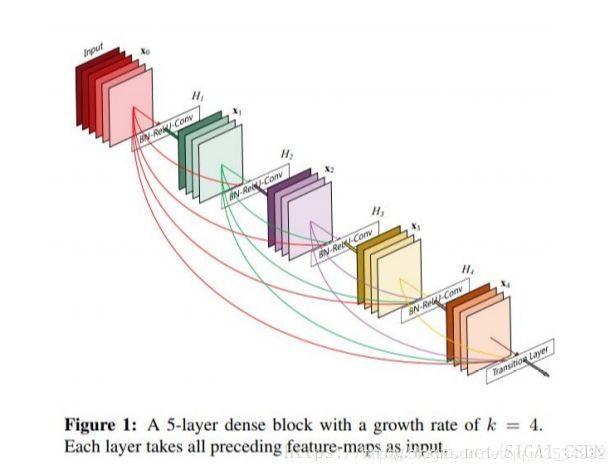

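Its main idea is that, inside each dense block, every convolutional layer is connected to all the convolutional layers before it. Concretely, this is done with tf.concat: the output of each convolutional layer is concatenated with the feature maps of the preceding layers before being passed on. This differs from ResNet, which merges features by element-wise addition. The figure below shows this clearly.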
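As a rough illustration of the difference, the sketch below treats the feature vector at a single position as a plain Python list of channel values. The helper names `residual_merge` and `dense_merge` are hypothetical; they only mimic what element-wise addition and `tf.concat` do to the channel count and are not part of the network code.

```python
def residual_merge(prev_channels, new_channels):
    """ResNet-style shortcut: element-wise addition, channel count unchanged."""
    if len(prev_channels) != len(new_channels):
        raise ValueError("addition requires the same number of channels")
    return [a + b for a, b in zip(prev_channels, new_channels)]


def dense_merge(prev_channels, new_channels):
    """DenseNet-style connection: concatenation, channel count grows."""
    # Same ordering as tf.concat([x, p], axis=3): new features first.
    return list(new_channels) + list(prev_channels)


prev = [1.0, 2.0, 3.0, 4.0]   # 4 channels coming from earlier layers
new = [0.5, 0.5, 0.5, 0.5]    # 4 channels produced by the current convolution

added = residual_merge(prev, new)
stacked = dense_merge(prev, new)
print(len(added), len(stacked))   # → 4 8
```

Addition requires both inputs to have the same number of channels, whereas concatenation keeps every earlier feature map available to later layers at the cost of a growing channel count.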

Implementation

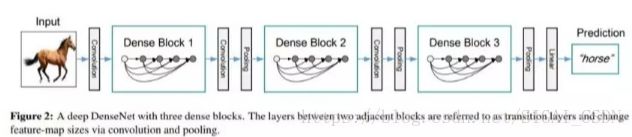

The code has two main parts, dense_block and transition_layer. The first implements a single DenseNet block; the second connects one block to the next (BN + ReLU + convolution + pooling).

dense_block

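def dense_block(p, layers_nums=3):
    for i in range(layers_nums):
        # BN -> ReLU -> 1x3 convolution producing 4 new feature maps
        x = tf.layers.batch_normalization(p)
        x = tf.nn.relu(x)
        x = tf.layers.conv2d(inputs=x, filters=4, kernel_size=[1, 3], strides=1, padding='same', kernel_initializer=tf.random_normal_initializer(stddev=0.01))
        # Concatenate the new feature maps with the layer input along the channel axis
        x = tf.concat([x, p], axis=3)
        p = x
    return x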

Here p is the input tensor and layers_nums is the number of convolutional layers in each block; the default is 3.
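Because every layer concatenates 4 new feature maps onto its input, the channel count grows linearly with the number of layers. The sketch below only does this bookkeeping in plain Python; `dense_block_channels` is a hypothetical helper, and the 4-channel input is an assumption that matches an initial convolution with 4 filters.

```python
def dense_block_channels(in_channels, layers_nums=3, growth=4):
    """Return the channel count after each layer of a dense block.

    growth is the number of filters of each convolution (4 in the code above).
    """
    counts = []
    channels = in_channels
    for _ in range(layers_nums):
        channels = growth + channels   # effect of tf.concat([x, p], axis=3)
        counts.append(channels)
    return counts


print(dense_block_channels(4))   # → [8, 12, 16]
```

With the default of 3 layers, a 4-channel input therefore leaves the block with 16 channels.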

transition_layer

def transition_layer(x):
    x = tf.layers.batch_normalization(x)
    x = tf.nn.relu(x)
    # Halve the number of channels with a 1x1 convolution
    n_inchannels = x.get_shape().as_list()[3]
    n_outchannels = int(n_inchannels * 0.5)
    x = tf.layers.conv2d(inputs=x, filters=n_outchannels, kernel_size=1, strides=1, padding='same', kernel_initializer=tf.random_normal_initializer(stddev=0.01))
    # Halve the width with 1x2 average pooling
    x = tf.layers.average_pooling2d(inputs=x, pool_size=[1, 2], strides=2)
    return x

This layer provides the connection between consecutive blocks.
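To see what the transition layer does to the tensor shape, the following sketch repeats its arithmetic in plain Python. `transition_output_shape` is a hypothetical helper; it assumes the pooling uses 'valid' padding, which is the default of `tf.layers.average_pooling2d`, and the example input of width 1015 with 16 channels is an assumption based on a 1015-sample signal passed through one 3-layer dense block.

```python
def transition_output_shape(height, width, channels):
    """Shape (height, width, channels) after the transition layer above.

    Assumes the 1x1 convolution halves the channels and the pooling uses
    pool_size=[1, 2], strides=2 with 'valid' padding.
    """
    out_channels = int(channels * 0.5)
    pool_h, pool_w, stride = 1, 2, 2
    out_height = (height - pool_h) // stride + 1
    out_width = (width - pool_w) // stride + 1
    return out_height, out_width, out_channels


print(transition_output_shape(1, 1015, 16))   # → (1, 507, 8)
```

Flattening that result would give 1 * 507 * 8 = 4056 features for the fully connected layers.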

The rest of the implementation is similar to an ordinary CNN, so I will not describe it in detail. The full code follows.

Full code

import time

import numpy as np
import tensorflow as tf
import tensorflow.contrib.slim as slim

# Each row: 1015 feature values followed by 15 label columns.
matix = np.loadtxt("D:/深度学习/all_feature/15—class_label15_four_train80.txt")   # training set
matix1 = np.loadtxt("D:/深度学习/all_feature/15—class_label15_four_test40.txt")   # test set

tf.reset_default_graph()

batch_size = 100             # defined but not used below (training is full-batch)
learning_rate = 0.001
learning_rate_decay = 0.95   # defined but not used below
# num_layers_in_dense_block = 3

def conv_layer(x):
    # Initial 1x3 convolution with 4 filters
    return tf.layers.conv2d(inputs=x, filters=4, kernel_size=[1, 3], strides=1, padding='same', kernel_initializer=tf.random_normal_initializer(stddev=0.01))

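def dense_block(p, layers_nums=3):
    for i in range(layers_nums):
        # Note: training defaults to False here, so BN uses its moving statistics.
        x = tf.layers.batch_normalization(p)
        x = tf.nn.relu(x)
        x = tf.layers.conv2d(inputs=x, filters=4, kernel_size=[1, 3], strides=1, padding='same', kernel_initializer=tf.random_normal_initializer(stddev=0.01))
        # Concatenate the new feature maps with the layer input along the channel axis
        x = tf.concat([x, p], axis=3)
        p = x
    return x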

def transition_layer(x):
    x = tf.layers.batch_normalization(x)
    x = tf.nn.relu(x)
    # Halve the number of channels with a 1x1 convolution
    n_inchannels = x.get_shape().as_list()[3]
    n_outchannels = int(n_inchannels * 0.5)
    x = tf.layers.conv2d(inputs=x, filters=n_outchannels, kernel_size=1, strides=1, padding='same', kernel_initializer=tf.random_normal_initializer(stddev=0.01))
    # Halve the width with 1x2 average pooling
    x = tf.layers.average_pooling2d(inputs=x, pool_size=[1, 2], strides=2)
    return x

def inference(inputs):
    # Treat each 1015-sample signal as a 1 x 1015 image with one channel
    x = tf.reshape(inputs, [-1, 1, 1015, 1])
    conv_1 = conv_layer(x)                  # [N, 1, 1015, 4]
    dense_1 = dense_block(conv_1, 3)        # [N, 1, 1015, 16]
    block_1 = transition_layer(dense_1)     # [N, 1, 507, 8]
    # dense_2 = dense_block(block_1, 4)
    # block_2 = transition_layer(dense_2)
    net_flatten = slim.flatten(block_1, scope='flatten')
    # keep_prob is 0.8; is_training defaults to True, so dropout is also active at evaluation
    fc_1 = slim.fully_connected(slim.dropout(net_flatten, 0.8), 200, activation_fn=tf.nn.tanh, scope='fc_1')
    output = slim.fully_connected(slim.dropout(fc_1, 0.8), 15, activation_fn=None, scope='output_layer')
    return output, net_flatten

def train():
    x = tf.placeholder(tf.float32, [None, 1015])
    y = tf.placeholder(tf.float32, [None, 15])
    y_outputs, net = inference(x)
    global_step = tf.Variable(0, trainable=False)
    # Labels are fed as 15-column vectors; argmax converts them to class indices
    entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=y_outputs, labels=tf.argmax(y, 1))
    loss = tf.reduce_mean(entropy)
    train_op = tf.train.AdamOptimizer(learning_rate).minimize(loss, global_step=global_step)
    prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_outputs, 1))
    accuracy = tf.reduce_mean(tf.cast(prediction, tf.float32))
    # saver = tf.train.Saver()
    print('Start to train the DenseNet......')
    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        for i in range(3000):
            # One update on the whole training set
            train_op_, loss_, step = sess.run([train_op, loss, global_step], feed_dict={x: matix[:, :1015], y: matix[:, 1015:]})
            if i % 1 == 0:   # evaluate on the test set after every step
                result, acc_1 = sess.run([loss, accuracy], feed_dict={x: matix1[:, :1015], y: matix1[:, 1015:]})
                print(str(i) + ' ' + str(result) + ' ' + str(acc_1))

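def main(_):
    # tf.app.run() exits the interpreter once main() returns, so the timing is
    # done here; time.perf_counter() replaces the deprecated time.clock().
    t0 = time.perf_counter()
    train()
    print(time.perf_counter() - t0, " seconds elapsed ")

if __name__ == '__main__':
    tf.app.run()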
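The training loop above feeds the whole training matrix at every step, and batch_size is defined but never used. If mini-batch training is wanted, one possible approach is to walk over the rows in consecutive chunks. The sketch below is only that bookkeeping in plain Python; `batch_slices` is a hypothetical helper and is not part of the original script.

```python
def batch_slices(n_rows, batch_size):
    """Yield slice objects that cover range(n_rows) in consecutive batches."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, n_rows, batch_size):
        yield slice(start, min(start + batch_size, n_rows))


# Example with 250 rows and batch_size = 100: the last batch is smaller.
for s in batch_slices(250, 100):
    print(s.start, s.stop)
```

Inside the training loop, each slice s could then select a batch with matix[s, :1015] for the features and matix[s, 1015:] for the labels. Shuffling the rows between passes is common practice but is left out here.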