CS224d: Deep Learning for NLP Lecture 1 Notes

| Key Takeaways |

- The general NLP processing pipeline / NLP levels

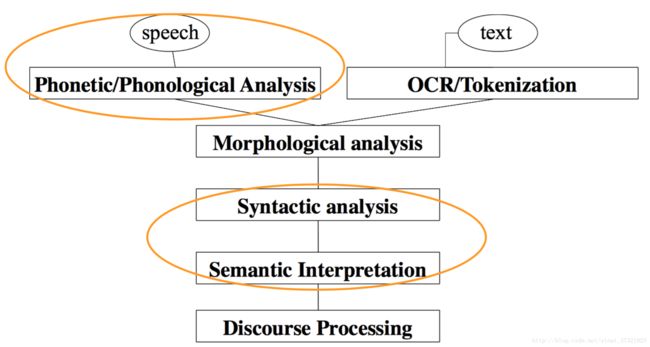

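The raw input falls into two broad categories, speech and text. Speech is usually converted into text through speech analysis (speech recognition), while text is usually tokenized. After that, both follow a similar path: morphological analysis, syntactic analysis, semantic analysis, and discourse processing, in that order.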

- Advantages of deep learning

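(1) Features hand-designed by engineers are often specific to a single domain, take a long time to design and validate, and may be incomplete because the data is too large to examine fully.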

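(2) Deep learning learns features automatically from the raw data and learns them quickly; the learned features are flexible and general, and can be used for language, vision, and many other domains.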

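(3) Deep learning can handle both unsupervised and supervised learning problems and can achieve high accuracy.

- Vectors

From phonemes, morphemes, and words up to phrases, grammatical structure, and meaning, language units at all of these levels are represented as vectors (Representations at NLP levels).

- Applications

Spell checking, keyword search, keyword extraction, sentiment analysis, information retrieval, and speech recognition; harder ones include question answering, dialogue systems, and machine translation.

- Deep learning is not immune to skewed data; it needs special handling.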

| Lecture Transcript |

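I wrote down what the instructor said only to help myself stay focused during the lecture. Text in orange-red is what I personally considered more important (I generally won’t revisit the content below).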

You can see deep learning as a type of representation learning, which attempts to automatically learn these features or representations from the raw data: in the case of images, just the raw pixels; in our case, just the raw words, or at an even lower level, the characters.

You give it the raw characters and say this sentence is positive, this sentence is negative. If you give it thousands of examples of positive and negative sentences, you then ask the model to figure out which words seem relevant and which word order seems to matter, for instance that in ‘this was not a good movie’, ‘not’ before the adjective makes it negative. You don’t want to manually define any of that; you just want to give it a lot of examples, have it figure out how to describe them, and then make a final prediction.

We will be a little fuzzy with the term ‘deep’. For instance, next lecture we will go deeply into word vectors, but word vectors are not actually going to be deep models. They are going to be very shallow models, but they give us a way to start seeing everything in the right light and with the right mindset.

slide 15: A bit of history, and the term ‘deep learning’

Deep learning also includes a lot of so-called graphical models and probabilistic generative models. We will not focus on those. We will devote the entire class to the neural network family, which is now the dominant model family within deep learning, in research as well as in industry.

You might be a little snarky and say: isn’t this whole deep learning thing with neural networks just clever terminology for combining a bunch of logistic regression units? If you’ve taken CS229 (Andrew Ng’s machine learning class), one thing you will notice throughout this class is that we really do put a lot of logistic regressions together in all kinds of different ways, so there’s some truth to this. At the same time, there are a lot of interesting modeling principles, such as end-to-end learning: I give you the raw input and the final output, and you learn from the final output all the way back to the raw input and everything in between, really putting the ‘artificial’ back into artificial intelligence, if you will.
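To make the ‘bunch of logistic regression units’ remark concrete, here is a minimal pure-Python sketch: a hidden layer is just several logistic regressions applied to the same input, and one more logistic unit on top turns that into a tiny neural network. The weights are arbitrary illustrative numbers, not trained values, and the function names are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function used by every unit."""
    return 1.0 / (1.0 + math.exp(-z))


def logistic_unit(weights, bias, x):
    """A single logistic regression: sigmoid(w . x + b)."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def layer(weight_rows, biases, x):
    """A layer is several logistic regressions applied to the same input."""
    return [logistic_unit(w, b, x) for w, b in zip(weight_rows, biases)]


def tiny_network(x):
    # Hidden layer: three logistic units (illustrative, untrained weights).
    hidden = layer(
        [[0.5, -1.0], [1.5, 0.3], [-0.7, 0.8]],
        [0.1, -0.2, 0.0],
        x,
    )
    # Output: one more logistic regression reading the hidden units.
    return logistic_unit([1.0, -1.0, 0.5], 0.0, hidden)


print(tiny_network([1.0, 2.0]))  # a probability strictly between 0 and 1
```

In end-to-end learning, all of these weights would be adjusted jointly from the error at the final output, rather than training each logistic unit separately.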

Before, it was really a lot of human intelligence that went into these different applications. In some cases there are real connections to neuroscience, though we will not go there. This is not going to be a neuroscience class; I’m not a neuroscientist. I have published once or twice in that area and know some things, but the neural inspiration of these models is very high-level and fuzzy, especially for natural language processing, where I think we understand even less about how the human brain actually represents language.

We will not be taking a historical approach. I will give you the links, but in many cases I will not ask you to read the historical papers, since they have been superseded. While many of the core ideas of deep learning have been around for several decades, there are still a lot of new techniques, and I think you can get a lot out of the class without knowing its full history.

slide 16: Why does it really make sense for you to take this class?

I mentioned a couple of these before. Basically, the field of AI had been largely about understanding your domain incredibly well and designing features that often turned out to be overly specific to the problem you were looking at, or incomplete because you can’t look at as much data as a computer can, and it took a very long time to design and validate those features. With deep learning, on the other hand, we learn these features, which makes them easier to adapt and much faster to learn.

You’ll literally be able to solve problems in this class and in your project that used to take teams of 10, 20, up to a hundred people two or three years, and do this fully automatically. It’s quite magical if you know how long those kinds of things would have taken ten years ago. Deep learning provides a very flexible, almost universal, learnable framework for representing not just linguistic knowledge but also world knowledge and visual information. As we will see starting next class, deep learning can also learn in an unsupervised way: we give it a bunch of text and it figures out interesting relationships and semantics, the actual meaning of words, just from looking at a lot of raw data. And it’s also good at solving supervised problems, where we try to predict a certain outcome very accurately.

slide 17: Why is this only the second time a deep learning for NLP class has been offered?

You might think: if some of these techniques have been around for so long, why are they so relevant now? Why is this only the second time this class has been offered, and not the tenth or fiftieth time?

It basically started around 2006, when deep learning techniques began to outperform traditional machine learning techniques. Up until then, it was really the hand-designed features that did so well on all the different tasks, and even in 2006 you were probably already using a lot of machine learning when you used a search engine or something like that. Why now? Deep learning techniques benefit a lot more from data, because we ask the models to learn the features by themselves. They also require a lot of data. This is already relevant for thinking about your class project and how to get started on it: the model starts out knowing nothing about language, as a completely random, gibberish-producing algorithm that doesn’t make any accurate predictions, so if you only give it 10 or 15 examples, it won’t be able to understand the complexity of whatever domain you’re trying to describe. When you give it millions of examples, however, it can start to figure out all these patterns by itself, without you hand-holding it and describing the various features. So deep learning techniques benefit from, but also require, a lot more training data.

Then we have much faster machines, especially multi-core architectures. We’ll notice as the class goes on that a lot of things boil down to very large matrix multiplications, just W times x, and those are very easily parallelizable. So if you have multiple cores, you can make use of them; some GPUs now have 4,000 cores, and with deep learning techniques you can use those 4,000 cores very efficiently and parallelize very nicely. Finally, and this is sometimes overlooked but I think quite relevant, especially for natural language processing, the last couple of years have also brought a lot of new models, algorithms, optimization techniques, and ideas that help us make use of these very powerful techniques. Overall, this resulted in much, much better performance and accuracy. It started with speech and computer vision, but it has now definitely arrived in natural language processing as well. So let me give you a very brief, roughly one-slide-per-breakthrough overview of how we got to where we are.
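The ‘W times x’ point can be illustrated with a small pure-Python sketch: applying a layer to each example one at a time gives the same numbers as a single matrix product over the whole batch, and it is that one large product that GPUs parallelize well. Plain lists stand in for a real array library here, and the matrices are made up.

```python
def matmul(A, B):
    """Naive matrix product of A (m x k) and B (k x n)."""
    return [[sum(A[i][t] * B[t][j] for t in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(M):
    return [list(col) for col in zip(*M)]


W = [[1.0, 2.0, 0.0],
     [0.0, -1.0, 3.0]]             # 2 x 3 weight matrix (illustrative)
examples = [[1.0, 0.0, 2.0],       # three input vectors of size 3
            [0.5, 1.0, -1.0],
            [2.0, 2.0, 2.0]]

# One example at a time: compute W x separately for each x (x as a 3 x 1 column).
one_by_one = [[row[0] for row in matmul(W, [[v] for v in x])] for x in examples]

# Whole batch at once: stack the inputs as columns of X and compute W X once.
X = transpose(examples)            # 3 x 3, one column per example
batched = transpose(matmul(W, X))  # back to one row per example

print(one_by_one == batched)  # True
```

On real hardware the batched form is the one that maps onto many cores, because every entry of W X can be computed independently.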

slide 18: It started out with deep learning for speech

It started out with deep learning for speech, where we suddenly had very large data sets and were able to take in not quite the raw frequencies but some very raw features of the sound, and then predict phonemes and words. Here we see the word error rate, and it dropped significantly. So when you now speak into your phone, your Android or your iPhone, you are very likely using a neural network in the back end that understands what you’re saying. If you tried speech recognition a decade ago, it was for the most part a joke; only now has it become accurate enough that it makes sense to talk to your phone, sometimes even with noisy backgrounds.
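Word error rate, the metric on this slide, is usually computed as the word-level edit distance between the recognizer’s output and a reference transcript, divided by the number of reference words N: WER = (S + D + I) / N, where S, D, and I count substitutions, deletions, and insertions. A minimal sketch with made-up sentences:

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # dist[i][j] = edit distance between the first i reference words
    # and the first j hypothesis words.
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i                      # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j                      # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(dist[i - 1][j] + 1,         # deletion
                             dist[i][j - 1] + 1,         # insertion
                             dist[i - 1][j - 1] + cost)  # substitution or match
    return dist[len(ref)][len(hyp)] / len(ref)


# One substitution (mother -> brother) and one deletion (now): 2 / 4 words.
print(word_error_rate("call my mother now", "call my brother"))  # 0.5
```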

slide 19: The other breakthrough was in computer vision

The other breakthrough was in computer vision. Computer vision was also what most deep learning groups focused on until maybe two years ago; that is where the majority of the effort went, so we understand neural network models a lot better for images. For instance, when you take in the raw pixels, the first layer of a neural network basically finds little edges; the second layer combines some of these edges into more complex textures and shapes; and in layer three you have even more complex combinations, and maybe neurons that fire when they see a tire and things like that.
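The claim that first-layer filters behave like edge detectors can be illustrated with a hand-written filter. The sketch below slides a 2x2 ‘vertical edge’ kernel over a tiny grayscale image using valid cross-correlation, which is what most deep learning libraries call convolution. The kernel is chosen by hand for illustration; a trained network would learn its own filters from data.

```python
def conv2d_valid(image, kernel):
    """Valid 2-D cross-correlation of a grayscale image with a small kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(kernel[a][b] * image[i + a][j + b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


# 4x4 image: dark left half (0), bright right half (1).
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]

# Hand-chosen kernel that responds to dark-to-bright changes from left to right.
kernel = [[-1, 1],
          [-1, 1]]

for row in conv2d_valid(image, kernel):
    print(row)  # each row is [0, 2, 0]: a response only where the edge is
```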

In natural language processing we unfortunately won’t have beautiful visualizations like that, and there won’t be such obvious connections as ‘we put these two characters together and they form this positive meaning’. But we will have some visualizations that help us understand, and we’ll see that in text it is very hard to understand exactly how a single word should be represented. Take ‘duck’: when we try to represent it, should it be close to a lot of verbs like ‘hide’, or closer to other bird names? It’s unclear. So you can already see that language understanding and representation is often much more complex than the visual kind.

slide 20: Deep Learning + NLP = Deep NLP

So when we say deep natural language processing, or deep NLP, we want to combine these two ideas: take the goals of natural language processing and use representation learning and deep learning methods to solve them end to end.

In the last half hour I will go through a couple of the representations we have for the levels mentioned at the beginning (speech, morphology, syntax, and semantics), give you a high-level overview of what we mean by each level, and then cover a couple of applications that have already been transformed by deep learning.

slide 21: Representations at NLP Levels: Phonology

Let’s go through these different layers of representation, starting with phonology. Traditionally, if you tried to understand words from spoken language and transcribe them into written language, and you studied linguistics, you would take a whole quarter on phonology, where you have the International Phonetic Alphabet and try to classify all the different sounds; for instance, the ‘th’ in ‘the’ is a dental fricative, where you actually use your teeth. So you would spend a lot of time describing all the subtleties of how human sounds can be transcribed. In deep learning, we instead just describe each sound as a vector, a list of numbers, and we don’t try to make any of these fine distinctions between the different ways to pronounce a word.

slide 22: Representations at NLP Levels: Morphology

Moving on from just knowing what the sounds are, the next level is understanding morphemes, which is the field of morphology. A word like ‘uninterested’ might be chopped up into the prefix ‘un’, which you see modifying lots of different words and potentially changing their meaning, then the stem ‘interest’, and then a suffix. Suffixes can change a word’s form and meaning, for instance ‘interesting’ versus ‘interested’, and they work differently in different languages. Again, in deep learning every morpheme will just be a vector, a list of numbers, and we will train a neural network to combine two vectors into one vector, hoping that the resulting vector captures whatever we need for our task. This was modeled by Thang Luong, one of the PhD students in the Stanford NLP group, who used a so-called recursive neural network. That is a family of networks we’ll also cover in this class: it combines a list of numbers representing ‘un’ with one representing ‘fortunate’ into a vector for ‘unfortunate’. We don’t make any explicit assumptions about what ‘unfortunate’ should look like; we just let the model figure that out.
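The morphology idea can be sketched as one composition function that merges two child vectors into a parent vector of the same size, p = tanh(W [c1; c2] + b), the general recursive-network recipe described above. The dimensionality, the random initialization, and the vectors for ‘un’ and ‘fortunate’ are all made up here; in a trained model, W, b, and the morpheme vectors would be learned.

```python
import math
import random

DIM = 4                      # hypothetical vector size
rng = random.Random(0)       # fixed seed so the sketch is reproducible


def random_vector(n):
    return [rng.uniform(-1, 1) for _ in range(n)]


# Hypothetical morpheme vectors; in a trained model these would be learned.
morphemes = {"un": random_vector(DIM), "fortunate": random_vector(DIM)}

# Composition parameters: W is DIM x (2*DIM), b has DIM entries (also learned in practice).
W = [random_vector(2 * DIM) for _ in range(DIM)]
b = random_vector(DIM)


def compose(left, right):
    """Parent vector p = tanh(W [left; right] + b), the same size as each child."""
    concat = left + right                      # [left; right], length 2*DIM
    return [math.tanh(sum(w * c for w, c in zip(row, concat)) + bias)
            for row, bias in zip(W, b)]


unfortunate = compose(morphemes["un"], morphemes["fortunate"])
print(len(unfortunate))  # 4
```

Because the output has the same size as each input, the same function can be applied again, for example to attach a further suffix to ‘unfortunate’.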

slide 23: Neural word vectors-visualization

So now, word vectors. You won’t see them very well on this slide, but I’ll zoom in in a second. At this next level, after we understand the parts that words are composed of, we want to understand the words themselves, and with unsupervised deep learning techniques we will learn that certain words are similar to one another and stand in certain relationships.

We learn this just by giving the model, for instance, all of the text of Wikipedia, and we use a neural network, or even shallower, very simple techniques, to learn to map words into a vector space. This is going to be one of the fundamental ideas, the first main idea of this class, and one we’ll see throughout the whole course: as long as we can map any kind of input into a list of numbers, we’re in the world of deep learning and can use all the different techniques. Words will also just be lists of numbers, and we usually represent a word with something like 50 to 500 dimensions. Of course we can’t visualize those, so if we want to understand what’s actually going on inside the model, we often project them down to two dimensions.
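Projecting word vectors down to two dimensions is commonly done with PCA or t-SNE. Below is a minimal pure-Python PCA sketch, assuming PCA as the projection: it uses power iteration with deflation on the covariance matrix. The 4-dimensional ‘word vectors’ are invented for illustration and are not taken from any trained model.

```python
import random


def pca_2d(vectors, iterations=200, seed=0):
    """Project vectors onto their top two principal components.

    Power iteration with deflation on the covariance matrix; fine for small
    illustrative data, not a replacement for a numerical library.
    """
    n, d = len(vectors), len(vectors[0])
    mean = [sum(v[k] for v in vectors) / n for k in range(d)]
    centered = [[v[k] - mean[k] for k in range(d)] for v in vectors]
    # Covariance matrix C = X^T X / n (d x d).
    cov = [[sum(x[i] * x[j] for x in centered) / n for j in range(d)]
           for i in range(d)]

    rng = random.Random(seed)
    components = []
    for _ in range(2):
        vec = [rng.uniform(-1, 1) for _ in range(d)]
        for _ in range(iterations):
            vec = [sum(cov[i][j] * vec[j] for j in range(d)) for i in range(d)]
            norm = sum(x * x for x in vec) ** 0.5
            vec = [x / norm for x in vec]
        # Rayleigh quotient: the variance (eigenvalue) along this direction.
        eig = sum(vec[i] * cov[i][j] * vec[j] for i in range(d) for j in range(d))
        components.append(vec)
        # Deflation: remove this direction so the next pass finds the second one.
        cov = [[cov[i][j] - eig * vec[i] * vec[j] for j in range(d)]
               for i in range(d)]

    # Coordinates of each centered vector along the two components.
    return [[sum(x[k] * c[k] for k in range(d)) for c in components]
            for x in centered]


# Invented 4-d vectors: three country-like words, two verb-like words, one auxiliary.
words = {
    "France":  [0.90, 0.80, 0.10, 0.00],
    "Germany": [0.85, 0.75, 0.15, 0.05],
    "Italy":   [0.80, 0.85, 0.10, 0.10],
    "think":   [0.10, 0.00, 0.90, 0.80],
    "expect":  [0.15, 0.05, 0.85, 0.90],
    "is":      [0.00, 0.10, 0.20, 0.30],
}
names = list(words)
points = pca_2d([words[w] for w in names])
for name, (x, y) in zip(names, points):
    print(f"{name:8s} {x:+.2f} {y:+.2f}")
```

The printed 2-D coordinates are what would be plotted; nearby points in the plot correspond to vectors that were already close in the original space.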

Then we actually see interesting patterns. For instance, countries all map into a similar sub-area of this high-dimensional vector space, and countries like France and Germany, which are close to one another in some fuzzy way, geographically and maybe culturally, interestingly enough also have vectors that are closer to one another than to countries that are further away, which in turn may be more similar to one another. These things are learned automatically just from giving the model a bunch of raw text. Again, you’ll see that it doesn’t always make sense; there will be problems when you represent everything as a single list of numbers, a single vector, but it will be very useful for solving a lot of different tasks. Here are a couple of verbs: ‘think’ and ‘expect’ are closer to one another than to ‘come’, ‘go’, ‘take’, ‘get’, and so on. Here we have a bunch of auxiliary verbs: ‘are’, ‘is’, ‘were’, and ‘was’ are close to one another.
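Closeness in a word-vector space is usually measured with cosine similarity. This small sketch ranks the neighbours of a query word using invented 3-dimensional vectors (not real learned embeddings); the function `nearest` is a hypothetical helper.

```python
import math


def cosine(u, v):
    """Cosine similarity: dot(u, v) / (|u| |v|)."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


# Invented vectors for illustration only.
vectors = {
    "France":  [0.9, 0.1, 0.0],
    "Germany": [0.8, 0.2, 0.1],
    "think":   [0.1, 0.9, 0.2],
    "expect":  [0.2, 0.8, 0.3],
    "is":      [0.0, 0.3, 0.9],
    "was":     [0.1, 0.2, 0.95],
}


def nearest(word, k=2):
    """Return the k words whose vectors are most cosine-similar to `word`."""
    scores = [(cosine(vectors[word], v), other)
              for other, v in vectors.items() if other != word]
    return [other for _, other in sorted(scores, reverse=True)[:k]]


print(nearest("France", k=1))  # ['Germany']
print(nearest("think", k=1))   # ['expect']
print(nearest("is", k=1))      # ['was']
```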

slide 24: Representations at NLP Levels: Syntax

Now that we understand how to represent words: words in isolation are usually not that useful, since when we communicate we combine words into longer sentences. Traditionally, people described sentences with discrete tree structures like this one. For ‘the house at the end of the street is red’, we might say ‘the’ is a determiner (don’t worry if you don’t understand all the linguistic terminology; we will go through it during the class). So there’s a determiner ‘the’ (D) and a noun ‘street’ (N), which together combine into a discrete noun phrase (NP). Unfortunately, just saying this is a noun phrase is not that useful: you still don’t know what ‘the street’ actually means, or how ‘the street’ relates to ‘the road’, ‘the side alley’, or ‘the alleyway I was born in’, and so on. It’s very hard to capture all that in one discrete category, but that has traditionally been the way to describe syntactic categories and the structure in the grammar of a language. In the end you might say the whole thing is a sentence, and the sentence is just the discrete category S.

In deep learning, and you will start to see the pattern here, since we’re already describing all the words as vectors, we’ll also describe all the phrases as vectors. Then we can actually compare the phrase ‘the cat’ with the phrase ‘our dog’: they’re not just noun phrases, they each have a vector, and we hope that phrases that are similar in some way get similar vectors. Because every word and phrase is a vector, we can use standard neural networks, standard functions that map vectors to other vectors, to learn to make certain kinds of predictions.
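Building on the idea that every phrase gets a vector, this sketch applies one shared composition function bottom-up over a binary parse tree, so single words, phrases like ‘the cat’, and whole sentences all end up as vectors of the same size that can be compared directly. The nested-tuple tree encoding, the simplified bracketing, the word vectors, and the weights are hypothetical; the rule p = tanh(W [c1; c2] + b) is the standard recursive-network form, and in a real model all parameters would be trained.

```python
import math
import random

DIM = 3
rng = random.Random(1)   # fixed seed so the sketch is reproducible

# Shared composition parameters (random here, learned in a real model).
W = [[rng.uniform(-0.5, 0.5) for _ in range(2 * DIM)] for _ in range(DIM)]
b = [0.0] * DIM

# Hypothetical word vectors.
word_vectors = {w: [rng.uniform(-1, 1) for _ in range(DIM)]
                for w in ["the", "house", "street", "is", "red", "cat", "our", "dog"]}


def compose(left, right):
    """p = tanh(W [left; right] + b)."""
    concat = left + right
    return [math.tanh(sum(w * c for w, c in zip(row, concat)) + bias)
            for row, bias in zip(W, b)]


def encode(tree):
    """Turn a word (str) or a (left, right) pair into a vector, bottom-up."""
    if isinstance(tree, str):
        return word_vectors[tree]
    left, right = tree
    return compose(encode(left), encode(right))


the_cat = encode(("the", "cat"))
our_dog = encode(("our", "dog"))
sentence = encode((("the", "house"), ("is", "red")))   # simplified bracketing
print(len(the_cat), len(our_dog), len(sentence))     # 3 3 3
```

Since ‘the cat’ and ‘our dog’ are now vectors in the same space, they can be compared with any vector similarity measure, such as cosine similarity.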

slide 25: Representations at NLP Levels: Semantics

Now let’s go to the next level up, which is semantics. We’re going through the long history of the whole field of linguistics: phonology, morphology, syntax, semantics. You could literally take one to four quarters of classes on each of those separately, so we’re not doing them complete justice, and they’re actually very interesting. But if you are trying to have a computer automatically understand and make predictions based on its inputs, it turns out we can in some sense get away without understanding all these details. We can have the computer make accurate predictions without us understanding all the subtle notions of these different layers of representation.

So let’s look at semantics. If we try to understand the sentence ‘Mary likes Sue’, and you took a semantics class and maybe an advanced semantics class, you would eventually get to what we call lambda calculus, where ‘likes’ is a complex function that takes as input one variable y and then another variable x, and combines these representations. Don’t worry if you don’t fully follow this, but if you know a little functional programming, there are a lot of connections. Here ‘Sue’ is represented as a discrete symbol s, and when we combine ‘Sue’ with the verb ‘likes’, we get a beta reduction of the lambda expression, replacing the variable y with the concrete term s. Then we combine ‘Mary’ on the left side, replacing the variable x with m, and in the end we have the discrete representation likes(m, s). Now, if we ask how similar ‘Mary likes Sue’ in that representation is to the sentence ‘Mary and Sue hang out together’, that will be quite tough to answer with lambda calculus, because these representations are very discrete. They’re not fuzzy, long lists of numbers and they don’t live in a vector space, so there’s no notion of similarity or of the fuzziness of language when we use these kinds of representations.
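The lambda-calculus derivation can be mimicked with Python closures, which is the functional-programming connection mentioned above. In this sketch ‘likes’ is a curried function λy.λx.likes(x, y) that takes the object first and the subject second; encoding the resulting logical form as a tuple is an arbitrary choice for illustration.

```python
def likes(y):
    """likes = λy. λx. likes(x, y): takes the object y first, then the subject x."""
    return lambda x: ("likes", x, y)


sue, mary = "s", "m"           # discrete symbols for the two entities

vp = likes(sue)                # first reduction:  λx. likes(x, s)
sentence = vp(mary)            # second reduction: likes(m, s)
print(sentence)                # ('likes', 'm', 's')

# Discrete terms are either identical or not; there is no graded similarity.
print(sentence == ("likes", "m", "s"))       # True
print(sentence == ("hang_out", "m", "s"))    # False, with no notion of "close"
```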

In deep learning, again, every word, phrase, and even every logical expression will just be a vector, and because of that we can combine vectors into other vectors using neural networks and still turn the task into a classification problem. For instance, given ‘All reptiles walk’ and ‘Some turtles move’, we can ask whether the first logically entails the second. If all reptiles walk, and we know that turtles are reptiles and that walking is a kind of moving, then ‘some turtles move’ has to be true whenever ‘all reptiles walk’ is true. This is the entailment problem. It was studied by Sam Bowman, also a Stanford PhD student in linguistics. Instead of having to define that turtles are a subset of reptiles, that moving is a general expression, and that walking is a special subtype of moving, he basically says: everything is a vector. Let me just train a model on the final output I care about, with a simple logistic regression at the top that says yes or no, these two sentences are or are not in this relationship, and learn everything in between. Amazingly, without us having to manually define that reptiles are a superset of turtles and that moving is more general than walking, the model figured it out and made the correct final prediction that one entails the other. So for all these levels of linguistic representation, from speech to phonemes, morphemes, words, phrases, grammatical structure, and meaning, everything will essentially be mapped to a vector.
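The entailment setup can be sketched as a classifier that reads two sentence vectors and outputs a yes/no probability with logistic regression on top. The sentence vectors and classifier weights below are placeholders, and concatenating the two vectors is one simple choice of classifier input, not necessarily the exact architecture used in the work described; in a real model the sentence encoder below the classifier would be trained end to end too.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def entailment_probability(premise_vec, hypothesis_vec, weights, bias):
    """P(premise entails hypothesis) = sigmoid(w . [premise; hypothesis] + b)."""
    features = premise_vec + hypothesis_vec      # concatenate the two sentence vectors
    return sigmoid(sum(w * f for w, f in zip(weights, features)) + bias)


# Placeholder vectors standing in for the outputs of a sentence encoder.
all_reptiles_walk = [0.6, -0.2, 0.9]
some_turtles_move = [0.5, -0.1, 0.7]

weights = [0.8, -0.3, 1.1, 0.4, 0.2, 0.9]  # would be learned from labeled sentence pairs
bias = -0.5

p = entailment_probability(all_reptiles_walk, some_turtles_move, weights, bias)
print("entails" if p > 0.5 else "does not entail", round(p, 3))
```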

slide 26: NLP Applications: Sentiment Analysis

Now let’s look at a couple of applications. Here’s one for sentiment analysis. To help you appreciate how amazing it is that in this class we’ll be able to build some very, very accurate sentiment analysis models, let me tell you a little about what it traditionally meant to do well in sentiment analysis. You basically had to have a curated sentiment dictionary. If we want to do this accurately, let’s all sit down and think of all the positive words we have, like wonderful, awesome, amazing, great, sweet, and so on. Then we sum up the positive words and sum up the negative words, and for each new sentence, if there are more positive words than negative words, we say it is a positive sentence. That’s our algorithm, a very simple one: look the words up in a dictionary and count. Then, once you look at the errors your algorithm makes, you notice there’s also negation and so on. So you say: if I see any of these positive words with ‘not’ in front of it, I’ll call it negative, so ‘not good’ and ‘not wonderful’ become negative. Then you see ‘not awesome but still okay’, which might not actually be negative but neutral, or just slightly less positive. You keep doing that until you realize you’re never going to be able to write down all the rules by hand.
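The traditional lexicon approach described above fits in a few lines, which also shows why it breaks down: each new failure needs another hand-written rule. The word lists and the single negation rule are illustrative, not a real sentiment lexicon.

```python
POSITIVE = {"wonderful", "awesome", "amazing", "great", "sweet", "good"}
NEGATIVE = {"bad", "terrible", "boring", "awful"}
NEGATORS = {"not", "never", "no"}


def lexicon_sentiment(sentence: str) -> str:
    """Count positive vs. negative words, flipping a word's polarity after a negator."""
    tokens = sentence.lower().replace(",", " ").split()
    score = 0
    for i, tok in enumerate(tokens):
        polarity = 1 if tok in POSITIVE else -1 if tok in NEGATIVE else 0
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity          # hand-written rule: "not good" -> negative
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(lexicon_sentiment("a wonderful and amazing film"))  # positive
print(lexicon_sentiment("not good, not wonderful"))       # negative
print(lexicon_sentiment("not awesome but still okay"))    # negative, though arguably neutral
```

The last call reproduces the failure from the lecture: the rule has no way to see that ‘but still okay’ softens the negation, so yet another rule would be needed.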

What we’re able to do now is give the model a lot of examples of labeled phrases and sentences and train a single model that takes in these raw labeled phrases and makes a final prediction, and it was able to outperform all the human engineering in the sentiment domain. Here’s an example: the word ‘humor’ is very positive; ‘intelligent’, somewhat sadly, is not as positive as ‘humor’; but the whole phrase ‘intelligent humor’ is identified as positive by this model. ‘Any other kind of intelligent humor’ is still positive. ‘Cleverness, wit, or any other kind of intelligent humor’ is positive. ‘To care about’ all these positive things should still be positive, but here it came out neutral, so that is a misclassification. But once ‘the movie doesn’t care about’ them, the algorithm learned that the phrase is actually negative. So not caring about a bunch of positive things turns out to be negative, and again, we didn’t give the algorithm any rule like ‘not caring about positive things is negative’; it learned that automatically. (Neural networks are not immune to the problem of skewed data, e.g. 95% positive examples and 5% negative examples.)
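One common way to handle skewed labels such as 95% positive and 5% negative is to weight each class inversely to its frequency in the loss, so that errors on the rare class count more. This is a general technique, not something the lecture prescribes, and the labels and predictions below are made up.

```python
import math
from collections import Counter


def inverse_frequency_weights(labels):
    """weight[c] = N / (num_classes * count[c]); the weights average to 1 over the data."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}


def weighted_log_loss(labels, probs_positive, weights):
    """Binary cross-entropy where each example is scaled by its class weight."""
    total = 0.0
    for y, p in zip(labels, probs_positive):
        loss = -math.log(p) if y == 1 else -math.log(1.0 - p)
        total += weights[y] * loss
    return total / len(labels)


labels = [1] * 95 + [0] * 5                  # 95% positive, 5% negative
weights = inverse_frequency_weights(labels)
print(weights)                               # positive class ~0.53, negative class 10.0

# A lazy model that predicts "positive" with probability 0.9 for everything.
lazy = [0.9] * len(labels)
print(weighted_log_loss(labels, lazy, {0: 1.0, 1: 1.0}))  # unweighted loss looks small
print(weighted_log_loss(labels, lazy, weights))            # weighted loss penalizes it more
```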

slide 27: NLP Applications: Question Answering

Let’s go over another example; I think we only have two or three more, and then we’ll end with a live demo of a very powerful neural network model. Question answering is one of the really tough problems in natural language processing. Past methods, even some as little as two years old, generally needed a lot of feature engineering: regular expressions, for instance, or specific features such as whether the main verb triggers something very specific because you have a wh-question about a subject or an object, or who the agent of the sentence is. So you had to engineer pretty complex features to do well.

In deep learning, some folks used exactly the same kind of neural network architecture that we use for morphology, syntax, logical semantics, and sentiment. In this case it was a recursive neural network, the same kind used for all these different levels, and it turned out to also be able to answer questions about more complex things; in the end, it stored many of the facts that were asked about during training in vectors.

When you zoom into these visualizations again, the model had automatically learned that Ronald Reagan, Jimmy Carter, and Woodrow Wilson, for instance, are all somewhat similar. We know how exactly they’re similar, but that requires world knowledge; somehow the model was able to encode, in this area of its vector space and with some combination of numbers, that these were former US presidents, maybe of a certain time frame. We didn’t have to go in and teach it what presidents or time frames are. It put, for instance, George Washington, Thomas Jefferson, and John Adams in yet another part of the space, closer to one another than to other presidents. If you go over here, you have, for instance, policies, explorers and emperors, or wars, rebellions, and battles. These are all the kinds of questions that were asked during training, they are in completely different parts of the space, and again the model learned that automatically, just from seeing a lot of questions and their answers.

slide 28,29,30: NLP Applications: Machine Translation

Machine translation, as I mentioned, is one of the toughest tasks in NLP. To give you a very high-level overview of its history: people have tried a lot of different techniques to translate from one language into another. Some said we can maybe just translate directly from one sentence to the other. Then people said no, we first need to understand the grammatical structure, and then translate from one grammatical structure into another. Others said no, what we really need is to understand the meaning first, and then we can easily go from that meaning to the same sentence in another language. Traditionally, these are extremely complex models that can require hundreds of gigabytes of RAM if you want to keep them in memory, because they have to store all possible phrase pairs and things like that.

It’s a very complex model and a huge area of research, and obviously a lot of us use machine translation. It’s one of the most useful tasks in natural language processing, and it used to literally take many years and many, many people to build systems that were at all decent, and none of them are great yet. Nothing in machine translation comes even close to human translation, and even a reasonably decent system took a huge effort over many years. Now I might ask you: what do you think is the interlingua, the main way to represent language if you want to do translation? Vectors are the interlingua. Everything will be mapped into a vector, and we’ll go over this model in a couple of weeks: you take any input language, map it with a neural network into a single vector, and then generate the translated sentence from that vector. If that doesn’t convince you that vectors are useful, nothing will. There will always be fuzziness, like whether the vector for ‘duck’ should be close to ‘hide’ or close to ‘bird’.
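The ‘vectors as interlingua’ idea can be sketched as an encoder that reads a source sentence word by word into one fixed-size vector, plus a decoder that generates target words from that vector. Everything here is hypothetical and untrained: the tiny vocabularies, the random weights, greedy decoding, and the plain tanh recurrence (the models covered later in the course use more elaborate units). It only shows the data flow, so the ‘translation’ it prints is meaningless.

```python
import math
import random

rng = random.Random(42)
DIM = 4
SRC_VOCAB = ["je", "suis", "content"]        # hypothetical source vocabulary
TGT_VOCAB = ["<eos>", "i", "am", "happy"]    # hypothetical target vocabulary


def rand_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


src_embed = {w: [rng.uniform(-1, 1) for _ in range(DIM)] for w in SRC_VOCAB}
tgt_embed = {w: [rng.uniform(-1, 1) for _ in range(DIM)] for w in TGT_VOCAB}
W_enc, U_enc = rand_matrix(DIM, DIM), rand_matrix(DIM, DIM)
W_dec, U_dec = rand_matrix(DIM, DIM), rand_matrix(DIM, DIM)
W_out = rand_matrix(len(TGT_VOCAB), DIM)


def rnn_step(W, U, h, x):
    """h_new = tanh(W h + U x)."""
    return [math.tanh(a + b) for a, b in zip(matvec(W, h), matvec(U, x))]


def encode(words):
    """Compress the whole source sentence into one vector (the 'interlingua')."""
    h = [0.0] * DIM
    for w in words:
        h = rnn_step(W_enc, U_enc, h, src_embed[w])
    return h


def decode(h, max_len=5):
    """Greedily emit target words from the sentence vector until <eos>."""
    output, prev = [], tgt_embed["<eos>"]    # <eos> doubles as the start symbol here
    for _ in range(max_len):
        h = rnn_step(W_dec, U_dec, h, prev)
        scores = matvec(W_out, h)
        word = TGT_VOCAB[max(range(len(scores)), key=scores.__getitem__)]
        if word == "<eos>":
            break
        output.append(word)
        prev = tgt_embed[word]
    return output


sentence_vector = encode(["je", "suis", "content"])
print(len(sentence_vector))      # 4
print(decode(sentence_vector))   # untrained, so the words are arbitrary
```

Training would adjust all the matrices so that the decoder’s output matches reference translations; the single `sentence_vector` is the only thing passed from the source side to the target side.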

slide 31,32: Dynamic Memory Network by MetaMind

But in the end, when we look at these impressive applications, we’ll see that no matter how we represent things internally, it’s just incredibly useful. To give you an idea, I have a little demo of a model that can be asked any kind of question; it’s the same kind of architecture that underlies all these different applications. For instance, I can ask: what is the sentiment of this sentence? ‘The best way to hope for any chance of enjoying this film is by lowering your expectations.’ This is one of those really hard sentiment examples that we have only been able to predict correctly and consistently in the last half year, and the algorithm correctly says that it is negative, despite lots of positive words like ‘best’, ‘hope’, and ‘enjoying’, because in the end, to do all these things, you have to lower your expectations. So it’s negative.

Let me wrap up: in the next lecture we will learn the first step we will always need for all these different models, which is how to represent words as vectors.

Video link: https://www.youtube.com/watch?v=Qy0oEkCZkBI

Slides link: http://cs224d.stanford.edu/lectures/CS224d-Lecture1.pdf