COCO evaluation code: a partial walkthrough

1. The example uses val2017; the results being evaluated were produced in the "ground-truth boxes + single-person" setting.

    def evaluate(self):
        '''
        Run per image evaluation on given images and store results (a list of dict) in self.evalImgs
        :return: None
        '''
        tic = time.time()
        print('Running per image evaluation...')
        p = self.params
        # add backward compatibility if useSegm is specified in params
        if not p.useSegm is None:
            p.iouType = 'segm' if p.useSegm == 1 else 'bbox'
            print('useSegm (deprecated) is not None. Running {} evaluation'.format(p.iouType))
        print('Evaluate annotation type *{}*'.format(p.iouType))
        p.imgIds = list(np.unique(p.imgIds))
        if p.useCats:
            p.catIds = list(np.unique(p.catIds))
        p.maxDets = sorted(p.maxDets)
        self.params=p

        self._prepare()
        # loop through images, area range, max detection number
        catIds = p.catIds if p.useCats else [-1]

        if p.iouType == 'segm' or p.iouType == 'bbox':
            computeIoU = self.computeIoU
        elif p.iouType == 'keypoints':
            computeIoU = self.computeOks
        # One entry per (imgId, catId): results for the 5000 validation images.
        # Each entry is an (i x j) matrix, i = number of dts, j = number of gts.
        self.ious = {(imgId, catId): computeIoU(imgId, catId)
                        for imgId in p.imgIds
                        for catId in catIds}

        evaluateImg = self.evaluateImg
        maxDet = p.maxDets[-1]
        # 15000 results in total: one per image for each area range.
        self.evalImgs = [evaluateImg(imgId, catId, areaRng, maxDet)
                 for catId in catIds         # a single category: person
                 for areaRng in p.areaRng    # 3 area ranges
                 for imgId in p.imgIds       # 5000 validation images
             ]
        self._paramsEval = copy.deepcopy(self.params)
        toc = time.time()
        print('DONE (t={:0.2f}s).'.format(toc-tic))

    def evaluateImg(self, imgId, catId, aRng, maxDet):

        '''
        perform evaluation for single category and image
        :return: dict (single image results)
        '''
        p = self.params
        if p.useCats:
            gt = self._gts[imgId,catId]
            dt = self._dts[imgId,catId]  # load the annotations for this imgId and catId
        else:
            gt = [_ for cId in p.catIds for _ in self._gts[imgId,cId]]
            dt = [_ for cId in p.catIds for _ in self._dts[imgId,cId]]
        if len(gt) == 0 and len(dt) ==0:  # neither gt nor dt for this image: return None
            return None

        for g in gt:
            # flag the gt as ignored if it is marked 'ignore' or its area is outside aRng
            if g['ignore'] or (g['area']<aRng[0] or g['area']>aRng[1]):
                g['_ignore'] = 1
            else:
                g['_ignore'] = 0

        # sort dt highest score first, sort gt ignore last
        # note that gtind and dtind are index arrays, not the annotations themselves
        gtind = np.argsort([g['_ignore'] for g in gt], kind='mergesort')
        gt = [gt[i] for i in gtind]
        dtind = np.argsort([-d['score'] for d in dt], kind='mergesort')
        dt = [dt[i] for i in dtind[0:maxDet]]  # with too many dts, keep only the top maxDet
        iscrowd = [int(o['iscrowd']) for o in gt]  # load the iscrowd flags
        # load computed ious
        # [:, gtind] only reorders the gt columns to follow the sorted gt list;
        # the shape stays (i, j) and the ignored gts end up in the last columns
        ious = self.ious[imgId, catId][:, gtind] if len(self.ious[imgId, catId]) > 0 else self.ious[imgId, catId]

        T = len(p.iouThrs)  # number of IoU/OKS thresholds (10 here)
        G = len(gt)         # number of gts, ignored ones included
        D = len(dt)         # number of dts
        gtm  = np.zeros((T,G))  # T x G
        dtm  = np.zeros((T,D))  # T x D
        gtIg = np.array([g['_ignore'] for g in gt])  # ignore flags; gt is already sorted with ignored gts last
        dtIg = np.zeros((T,D))  # T x D
        if not len(ious)==0:
            for tind, t in enumerate(p.iouThrs):
                for dind, d in enumerate(dt):
                    # information about best match so far (m=-1 -> unmatched)
                    iou = min([t,1-1e-10])
                    m   = -1
                    for gind, g in enumerate(gt):  # try every gt for this dt
                        # if this gt already matched, and not a crowd, continue
                        if gtm[tind,gind]>0 and not iscrowd[gind]:
                            continue
                        # if dt matched to reg gt, and on ignore gt, stop
                        # (ignored gts are sorted last, so once a regular gt is matched
                        # and the ignored ones begin, no better regular match remains)
                        if m>-1 and gtIg[m]==0 and gtIg[gind]==1:
                            break
                        # continue to next gt unless better match made
                        # (overlap below the threshold or below the best match so far)
                        if ious[dind,gind] < iou:
                            continue
                        # if match successful and best so far, store appropriately
                        # (the loop goes on with iou raised, so only a better gt can replace m)
                        iou=ious[dind,gind]
                        m=gind
                    # if match made store id of match for both dt and gt
                    if m ==-1:
                        continue
                    dtIg[tind,dind] = gtIg[m]       # whether the gt matched to this dt is ignored
                    dtm[tind,dind]  = gt[m]['id']   # id of the gt matched to this dt
                    gtm[tind,m]     = d['id']       # id of the dt matched to gt m, i.e. the current dt
        # set unmatched detections outside of area range to ignore
        a = np.array([d['area']<aRng[0] or d['area']>aRng[1] for d in dt]).reshape((1, len(dt)))
        dtIg = np.logical_or(dtIg, np.logical_and(dtm==0, np.repeat(a,T,0)))
        # store results for given image and category
        return {
                'image_id':     imgId,
                'category_id':  catId,
                'aRng':         aRng,
                'maxDet':       maxDet,
                'dtIds':        [d['id'] for d in dt],
                'gtIds':        [g['id'] for g in gt],
                'dtMatches':    dtm,
                'gtMatches':    gtm,
                'dtScores':     [d['score'] for d in dt],
                'gtIgnore':     gtIg,
                'dtIgnore':     dtIg,
            }

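1) dtIg records, for each dt, whether the gt it was matched to is ignored (unmatched dts outside the area range are flagged as well). Its shape is T x D, here 10 x D.

2) dtm stores the id of the gt that each dt was matched to (0 if unmatched). Its shape is also T x D.

3) gtm stores the id of the dt that each gt was matched to. Its shape is T x G, here 10 x G.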
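
The matching loop is easier to follow in isolation. Below is a minimal sketch for a single threshold, assuming the dts are already sorted by descending score and the gts are sorted with ignored ones last. `match_one_threshold` and its arguments are hypothetical names, not part of pycocotools, and the sketch stores indices (with -1 for "unmatched") where the original stores annotation ids (with 0 for "unmatched").

```python
import numpy as np

def match_one_threshold(ious, gt_ignore, iscrowd, thr):
    """Greedy dt-to-gt matching at one IoU/OKS threshold.

    ious      : (D, G) array; rows are dts sorted by descending score,
                columns are gts sorted so that ignored gts come last
    gt_ignore : length-G sequence of 0/1 ignore flags
    iscrowd   : length-G sequence of 0/1 crowd flags
    Returns (dt_match, gt_match, dt_ignore); -1 means unmatched.
    """
    D, G = ious.shape
    dt_match = -np.ones(D, dtype=int)
    gt_match = -np.ones(G, dtype=int)
    dt_ignore = np.zeros(D, dtype=bool)
    for d in range(D):
        best = min(thr, 1 - 1e-10)   # overlap a gt must reach to be accepted
        m = -1                       # index of the best gt so far
        for g in range(G):
            # a non-crowd gt can be matched only once
            if gt_match[g] >= 0 and not iscrowd[g]:
                continue
            # already matched to a regular gt and only ignored gts remain
            if m > -1 and gt_ignore[m] == 0 and gt_ignore[g] == 1:
                break
            if ious[d, g] < best:
                continue
            best = ious[d, g]
            m = g
        if m == -1:
            continue
        dt_match[d] = m
        gt_match[m] = d
        dt_ignore[d] = bool(gt_ignore[m])
    return dt_match, gt_match, dt_ignore

# Made-up overlaps: 3 dts x 3 gts, the last gt is ignored.
ious = np.array([[0.9, 0.6, 0.7],
                 [0.8, 0.4, 0.2],
                 [0.1, 0.2, 0.95]])
dt_match, gt_match, dt_ignore = match_one_threshold(
    ious, gt_ignore=[0, 0, 1], iscrowd=[0, 0, 0], thr=0.5)
print(dt_match, gt_match, dt_ignore)
```

In this toy input the second dt overlaps gt 0 well, but gt 0 was already taken by the higher-scoring first dt, so it stays unmatched; the third dt matches the ignored gt and is therefore itself ignored.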

With the per-image matching results in hand, the next step is accumulate.


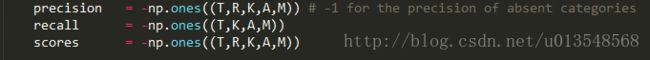

accumulate begins by defining the shapes of precision, recall, and scores.

    def accumulate(self, p = None):
        '''
        Accumulate per image evaluation results and store the result in self.eval
        :param p: input params for evaluation
        :return: None
        '''
        print('Accumulating evaluation results...')
        tic = time.time()
        if not self.evalImgs:
            print('Please run evaluate() first')
        # allows input customized parameters
        if p is None:
            p = self.params
        p.catIds = p.catIds if p.useCats == 1 else [-1]
        T           = len(p.iouThrs)
        R           = len(p.recThrs)
        K           = len(p.catIds) if p.useCats else 1
        A           = len(p.areaRng)
        M           = len(p.maxDets)
        precision   = -np.ones((T,R,K,A,M)) # -1 for the precision of absent categories
        recall      = -np.ones((T,K,A,M))
        scores      = -np.ones((T,R,K,A,M))

        # create dictionary for future indexing
        _pe = self._paramsEval
        catIds = _pe.catIds if _pe.useCats else [-1]

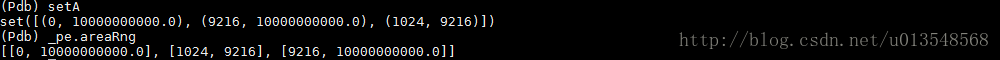

        setK = set(catIds)
        setA = set(map(tuple, _pe.areaRng))
        setM = set(_pe.maxDets)
        setI = set(_pe.imgIds)
        # get inds to evaluate
        k_list = [n for n, k in enumerate(p.catIds)  if k in setK]  # [0]
        m_list = [m for n, m in enumerate(p.maxDets) if m in setM]  # [20]
        a_list = [n for n, a in enumerate(map(lambda x: tuple(x), p.areaRng)) if a in setA]  # [0, 1, 2]
        i_list = [n for n, i in enumerate(p.imgIds)  if i in setI]  # [0 ... 4999]
        I0 = len(_pe.imgIds)
        A0 = len(_pe.areaRng)
        # retrieve E at each category, area range, and max number of detections
        for k, k0 in enumerate(k_list):
            Nk = k0*A0*I0  # Nk = 0
            for a, a0 in enumerate(a_list):
                Na = a0*I0  # Na takes the values 0, 5000, 10000
                for m, maxDet in enumerate(m_list):
                    E = [self.evalImgs[Nk + Na + i] for i in i_list]  # fetch result number Nk+Na+i; len(E) = 5000
                    E = [e for e in E if not e is None]  # keep the entries that are not None; len(E) = 2693
                    if len(E) == 0:
                        continue
                    dtScores = np.concatenate([e['dtScores'][0:maxDet] for e in E])  # length 6352

                    # different sorting method generates slightly different results.
                    # mergesort is used to be consistent as Matlab implementation.
                    inds = np.argsort(-dtScores, kind='mergesort')  # length 6352
                    dtScoresSorted = dtScores[inds]

                    dtm  = np.concatenate([e['dtMatches'][:,0:maxDet] for e in E], axis=1)[:,inds]  # 10 x 6352
                    dtIg = np.concatenate([e['dtIgnore'][:,0:maxDet]  for e in E], axis=1)[:,inds]  # 10 x 6352
                    gtIg = np.concatenate([e['gtIgnore'] for e in E])  # length 11004
                    npig = np.count_nonzero(gtIg==0 )  # number of non-ignored gts: 6352
                    if npig == 0:
                        continue  # every gt is ignored, nothing to score for this setting
                    tps = np.logical_and(               dtm,  np.logical_not(dtIg) )  # true positives (10 x 6352)
                    fps = np.logical_and(np.logical_not(dtm), np.logical_not(dtIg) )  # false positives (10 x 6352)

                    tp_sum = np.cumsum(tps, axis=1).astype(dtype=float)  # 10 x 6352 (63520 elements)
                    fp_sum = np.cumsum(fps, axis=1).astype(dtype=float)  # 10 x 6352 (63520 elements)
                    for t, (tp, fp) in enumerate(zip(tp_sum, fp_sum)):  # one pass per IoU threshold over the cumulative tp/fp counts
                        tp = np.array(tp)
                        fp = np.array(fp)
                        nd = len(tp)
                        rc = tp / npig                       # recall
                        pr = tp / (fp+tp+np.spacing(1))      # precision
                        q  = np.zeros((R,))
                        ss = np.zeros((R,))

                        if nd:
                            recall[t,k,a,m] = rc[-1]  # recall is the last value of the cumulative curve
                        else:
                            recall[t,k,a,m] = 0

                        # numpy is slow without cython optimization for accessing elements
                        # use python array gets significant speed improvement
                        pr = pr.tolist(); q = q.tolist()

                        for i in range(nd-1, 0, -1):
                            if pr[i] > pr[i-1]:
                                pr[i-1] = pr[i]

                        inds = np.searchsorted(rc, p.recThrs, side='left')
                        try:
                            for ri, pi in enumerate(inds):
                                q[ri] = pr[pi]  # precision is sampled at the 101 recall thresholds, a more robust measure
                                ss[ri] = dtScoresSorted[pi]
                        except:
                            pass
                        precision[t,:,k,a,m] = np.array(q)
                        scores[t,:,k,a,m] = np.array(ss)
        self.eval = {
            'params': p,
            'counts': [T, R, K, A, M],
            'date': datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S'),
            'precision': precision,
            'recall':   recall,
            'scores': scores,
        }
        toc = time.time()
        print('DONE (t={:0.2f}s).'.format( toc-tic))

precision: 10 x 101 x 1 x 3 x 1 (T x R x K x A x M)

recall: 10 x 1 x 3 x 1 (T x K x A x M)
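
These shapes, and the `Nk + Na + i` lookup into `evalImgs`, can be checked with a small sketch. The sizes are the ones quoted in this walkthrough (10 thresholds, 101 recall levels, 1 category, 3 area ranges, 1 maxDets value, 5000 images); `flat_index` is a hypothetical helper written only for illustration.

```python
import numpy as np

# Sizes assumed from the walkthrough (keypoint evaluation on val2017).
iou_thrs = np.linspace(0.5, 0.95, 10)    # T = 10 IoU/OKS thresholds
rec_thrs = np.linspace(0.0, 1.0, 101)    # R = 101 recall thresholds
T, R, K, A, M = len(iou_thrs), len(rec_thrs), 1, 3, 1
I = 5000                                 # number of images

# -1 marks settings for which nothing could be evaluated.
precision = -np.ones((T, R, K, A, M))
recall = -np.ones((T, K, A, M))

def flat_index(k, a, i, n_area=A, n_img=I):
    """Position in evalImgs of (category k, area range a, image i)."""
    return k * n_area * n_img + a * n_img + i

# evalImgs is built with the category outermost, then area range, then image,
# which is exactly the order this nested comprehension produces.
order = [(k, a, i) for k in range(K) for a in range(A) for i in range(I)]

print(precision.shape, recall.shape, len(order))
print(order[flat_index(0, 2, 7)])
```

Because the list comprehension in evaluate iterates images innermost, each area range owns a contiguous block of 5000 entries, which is why `Na` steps through 0, 5000, 10000.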

Next comes the summarize method.

    def summarize(self):
        '''
        Compute and display summary metrics for evaluation results.
        Note this function can *only* be applied on the default parameter setting
        '''
        def _summarize( ap=1, iouThr=None, areaRng='all', maxDets=100 ):
            p = self.params
            iStr = ' {:<18} {} @[ IoU={:<9} | area={:>6s} | maxDets={:>3d} ] = {:0.3f}'
            titleStr = 'Average Precision' if ap == 1 else 'Average Recall'
            typeStr = '(AP)' if ap==1 else '(AR)'
            iouStr = '{:0.2f}:{:0.2f}'.format(p.iouThrs[0], p.iouThrs[-1]) \
                if iouThr is None else '{:0.2f}'.format(iouThr)

            aind = [i for i, aRng in enumerate(p.areaRngLbl) if aRng == areaRng]
            mind = [i for i, mDet in enumerate(p.maxDets) if mDet == maxDets]
            if ap == 1:
                # dimension of precision: [TxRxKxAxM]
                s = self.eval['precision']
                # IoU
                if iouThr is not None:
                    # a specific threshold was requested: select the slice for that threshold
                    t = np.where(iouThr == p.iouThrs)[0]
                    s = s[t]
                s = s[:,:,:,aind,mind]
            else:
                # dimension of recall: [TxKxAxM]
                s = self.eval['recall']
                if iouThr is not None:
                    t = np.where(iouThr == p.iouThrs)[0]
                    s = s[t]
                s = s[:,:,aind,mind]
            if len(s[s>-1])==0:
                mean_s = -1
            else:
                mean_s = np.mean(s[s>-1])
            print(iStr.format(titleStr, typeStr, iouStr, areaRng, maxDets, mean_s))
            return mean_s
        def _summarizeDets():
            stats = np.zeros((12,))
            stats[0] = _summarize(1)
            stats[1] = _summarize(1, iouThr=.5, maxDets=self.params.maxDets[2])
            stats[2] = _summarize(1, iouThr=.75, maxDets=self.params.maxDets[2])
            stats[3] = _summarize(1, areaRng='small', maxDets=self.params.maxDets[2])
            stats[4] = _summarize(1, areaRng='medium', maxDets=self.params.maxDets[2])
            stats[5] = _summarize(1, areaRng='large', maxDets=self.params.maxDets[2])
            stats[6] = _summarize(0, maxDets=self.params.maxDets[0])
            stats[7] = _summarize(0, maxDets=self.params.maxDets[1])
            stats[8] = _summarize(0, maxDets=self.params.maxDets[2])
            stats[9] = _summarize(0, areaRng='small', maxDets=self.params.maxDets[2])
            stats[10] = _summarize(0, areaRng='medium', maxDets=self.params.maxDets[2])
            stats[11] = _summarize(0, areaRng='large', maxDets=self.params.maxDets[2])
            return stats
        def _summarizeKps():
            stats = np.zeros((10,))
            stats[0] = _summarize(1, maxDets=20)
            stats[1] = _summarize(1, maxDets=20, iouThr=.5)
            stats[2] = _summarize(1, maxDets=20, iouThr=.75)
            stats[3] = _summarize(1, maxDets=20, areaRng='medium')
            stats[4] = _summarize(1, maxDets=20, areaRng='large')
            stats[5] = _summarize(0, maxDets=20)
            stats[6] = _summarize(0, maxDets=20, iouThr=.5)
            stats[7] = _summarize(0, maxDets=20, iouThr=.75)
            stats[8] = _summarize(0, maxDets=20, areaRng='medium')
            stats[9] = _summarize(0, maxDets=20, areaRng='large')
            return stats
        if not self.eval:
            raise Exception('Please run accumulate() first')
        iouType = self.params.iouType
        if iouType == 'segm' or iouType == 'bbox':
            summarize = _summarizeDets
        elif iouType == 'keypoints':
            summarize = _summarizeKps
        self.stats = summarize()

    def __str__(self):
        self.summarize()
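
Each printed number is therefore just the mean of one slice of the accumulated array, with the -1 placeholders left out. The sketch below reproduces that slicing on random placeholder data (not real results). `mean_precision` and `area_labels` are hypothetical names, the area labels are assumed to be the keypoint ones, and `np.isclose` is used here in place of the exact `==` comparison in the original.

```python
import numpy as np

rng = np.random.default_rng(0)
T, R, K, A, M = 10, 101, 1, 3, 1
iou_thrs = np.linspace(0.5, 0.95, 10)
area_labels = ['all', 'medium', 'large']   # assumed keypoint area labels

# Placeholder precision array in [0, 1); pretend 'large' had nothing to evaluate.
precision = rng.random((T, R, K, A, M))
precision[:, :, :, 2, :] = -1

def mean_precision(precision, iou_thr=None, area='all'):
    """Mean over the selected slice, skipping the -1 placeholders."""
    s = precision
    if iou_thr is not None:
        # keep only the row of the requested threshold
        s = s[np.isclose(iou_thrs, iou_thr)]
    s = s[:, :, :, area_labels.index(area), 0]
    valid = s[s > -1]
    return -1 if valid.size == 0 else float(valid.mean())

ap_all = mean_precision(precision)                  # averaged over all 10 thresholds
ap_50 = mean_precision(precision, iou_thr=0.5)      # single threshold
ap_large = mean_precision(precision, area='large')  # nothing valid, stays -1
print(ap_all, ap_50, ap_large)
```

The headline AP averages over thresholds, recall levels, and categories at once, while AP at a single threshold only restricts the first axis before taking the same mean.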

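1) computeOks starts by sorting the dts by score.

evaluateImg also sorts by score before matching.

So scores are sorted at two levels. The first is per image, when the dts of each image are matched against its gts. The second is in accumulate, where the dts of all images are pooled and sorted together. As a result, no score threshold is needed when submitting results (a threshold of negative infinity is fine), as long as the negatives all receive low scores.

2) Which dts are ignored? A dt matched to a gt that is itself ignored is ignored, and an unmatched dt whose area lies outside the area range is ignored as well.

3) The final metric is a summary of the PR curve: precision is sampled at a set of recall levels and averaged, which approximates the area under the PR curve.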
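
The three notes can be tied together in one small sketch: pool the per-image detections, sort them globally, drop the ignored ones from both counts, and average the interpolated precision over the recall levels. This is a simplified illustration for a single threshold with made-up inputs. `average_precision` and the dict keys are hypothetical, and an explicit bounds check stands in for the `try/except` used in accumulate.

```python
import numpy as np

def average_precision(per_image, npig, rec_thrs):
    """AP at one threshold from per-image matching results.

    per_image : list of dicts with 'scores', 'matched', 'ignored' arrays,
                each already sorted by descending score within its image
    npig      : total number of non-ignored gts
    """
    scores = np.concatenate([e['scores'] for e in per_image])
    # second, global sort over the pooled detections (stable mergesort)
    order = np.argsort(-scores, kind='mergesort')
    matched = np.concatenate([e['matched'] for e in per_image])[order]
    ignored = np.concatenate([e['ignored'] for e in per_image])[order]

    # ignored detections count as neither true nor false positives
    tps = np.logical_and(matched, np.logical_not(ignored))
    fps = np.logical_and(np.logical_not(matched), np.logical_not(ignored))
    tp = np.cumsum(tps).astype(float)
    fp = np.cumsum(fps).astype(float)
    rc = tp / npig
    pr = tp / (tp + fp + np.spacing(1))

    # precision envelope: each point takes the best precision to its right
    for i in range(len(pr) - 1, 0, -1):
        if pr[i] > pr[i - 1]:
            pr[i - 1] = pr[i]

    # sample the envelope at every recall threshold
    inds = np.searchsorted(rc, rec_thrs, side='left')
    q = np.zeros(len(rec_thrs))
    for ri, pi in enumerate(inds):
        if pi < len(pr):       # recall level never reached: precision stays 0
            q[ri] = pr[pi]
    return float(q.mean())

per_image = [
    {'scores': np.array([0.9, 0.3]),
     'matched': np.array([True, False]),
     'ignored': np.array([False, False])},
    {'scores': np.array([0.8, 0.6, 0.2]),
     'matched': np.array([False, True, True]),
     'ignored': np.array([True, False, False])},   # 0.8: unmatched, outside the area range
]
rec_thrs = np.linspace(0.0, 1.0, 101)
ap = average_precision(per_image, npig=3, rec_thrs=rec_thrs)
print(round(ap, 4))
```

The unmatched detection with score 0.8 would normally be a false positive near the top of the ranking; because it is flagged as ignored, it leaves the precision of the first two true positives untouched.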