Simple Smiling-Face Recognition with a CNN

Reposted

The Python version matters quite a bit; otherwise it will keep throwing errors at you: path error, path error, path error (dizzy).

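About the dataset: the data are images of facial expressions, split into a training set and a test set that are stored in train_happy.h5 and test_happy.h5 respectively. The training set has 600 images of shape (64,64,3) with labels of shape (600,1); the test set has 150 images of shape (64,64,3) with labels of shape (150,1).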

# kt_utils.py (the main program imports this file as kt_utils)
import keras.backend as K
import math
import numpy as np
import h5py
import matplotlib.pyplot as plt

def mean_pred(y_true, y_pred):
    return K.mean(y_pred)

def load_dataset():
    # Open the files read-only
    train_dataset = h5py.File('C:\\Users\\HP\\Desktop\\train_happy.h5', 'r')
    train_set_x_orig = np.array(train_dataset["train_set_x"][:]) # your train set features
    train_set_y_orig = np.array(train_dataset["train_set_y"][:]) # your train set labels

    test_dataset = h5py.File('C:\\Users\\HP\\Desktop\\test_happy.h5', 'r')
    test_set_x_orig = np.array(test_dataset["test_set_x"][:]) # your test set features
    test_set_y_orig = np.array(test_dataset["test_set_y"][:]) # your test set labels

    classes = np.array(test_dataset["list_classes"][:]) # the list of classes

    # Turn the labels into row vectors of shape (1, number of images)
    train_set_y_orig = train_set_y_orig.reshape((1, train_set_y_orig.shape[0]))
    test_set_y_orig = test_set_y_orig.reshape((1, test_set_y_orig.shape[0]))

    return train_set_x_orig, train_set_y_orig, test_set_x_orig, test_set_y_orig, classes
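The reshape at the end of `load_dataset` is easy to misread, so here is a small sketch of what happens to the label shapes, using a placeholder array instead of the real files. It assumes the labels are stored in the .h5 file as a flat array with one entry per image (the post does not state the stored shape), and the names `labels_stored`, `labels_row`, and `labels_col` are made up for illustration.

```python
import numpy as np

# Placeholder standing in for train_dataset["train_set_y"][:]
# (assumption: a flat array with one label per image).
labels_stored = np.zeros(600, dtype=np.int64)

# What load_dataset returns: a single row of shape (1, 600).
labels_row = labels_stored.reshape((1, labels_stored.shape[0]))

# What the main program passes to Keras after `Y_train = Y_train.T`:
# one row per image, shape (600, 1).
labels_col = labels_row.T

print(labels_stored.shape, labels_row.shape, labels_col.shape)
```

The (600, 1) shape at the end is the label shape quoted in the dataset description, and it is the layout a single-unit sigmoid output layer expects.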

Main CNN program (CNN主程序代码.py)

import numpy as np
from keras.layers import Input, Dense, Activation, ZeroPadding2D, BatchNormalization, Flatten, Conv2D
from keras.layers import AveragePooling2D, MaxPooling2D, Dropout, GlobalMaxPooling2D, GlobalAveragePooling2D
from keras.models import Model
from keras.preprocessing import image
from keras.applications.imagenet_utils import preprocess_input
import kt_utils
import keras.backend as K
K.set_image_data_format('channels_last')
import matplotlib.pyplot as plt
from matplotlib.pyplot import imshow

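# Load the data
# Image dimensions: (64, 64, 3); training set: 600 images; test set: 150 images
X_train, Y_train, X_test, Y_test, classes = kt_utils.load_dataset()

# Pick one image and look at it together with its label
plt.imshow(X_train[0])
print(Y_train[:,0])

# Normalize image vectors
X_train = X_train/255.
X_test = X_test/255.

# Reshape: transpose the labels from (1, m) to (m, 1)
Y_train = Y_train.T
Y_test = Y_test.T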

def HappyModel(input_shape):
    X_input = Input(input_shape)

    # Zero padding -> convolution -> batch norm -> ReLU -> max pooling
    X = ZeroPadding2D((3, 3))(X_input)
    X = Conv2D(32, (7, 7), strides=(1, 1), name='conv0')(X)
    X = BatchNormalization(axis=3, name='bn0')(X)
    X = Activation('relu')(X)
    X = MaxPooling2D((2, 2), name='max_pool')(X)

    # Flatten the feature maps into a vector, then a fully connected layer
    X = Flatten()(X)
    X = Dense(1, activation='sigmoid', name='fc')(X)

    model = Model(inputs=X_input, outputs=X, name='HappyModel')
    return model

# Create a model instance
happy_model = HappyModel(X_train.shape[1:])

# Compile the model
happy_model.compile("adam","binary_crossentropy", metrics=['accuracy'])

# Train, holding out 20% of the training data for validation
history = happy_model.fit(X_train,Y_train,batch_size=20,epochs=50,
                          validation_split=0.2)

# Evaluate on the test set
score = happy_model.evaluate(X_test,Y_test)
print("test score:",score[0])
print("test accuracy",score[1])

# List everything recorded in the training history
print(history.history.keys())

# Plot the loss history (val_loss comes from the validation split, not the test set)
plt.plot(history.history['loss'])
plt.plot(history.history['val_loss'])
plt.title('model_loss')
plt.ylabel('loss')
plt.xlabel('epoch')
plt.legend(['train','validation'],loc='upper left')
plt.show()

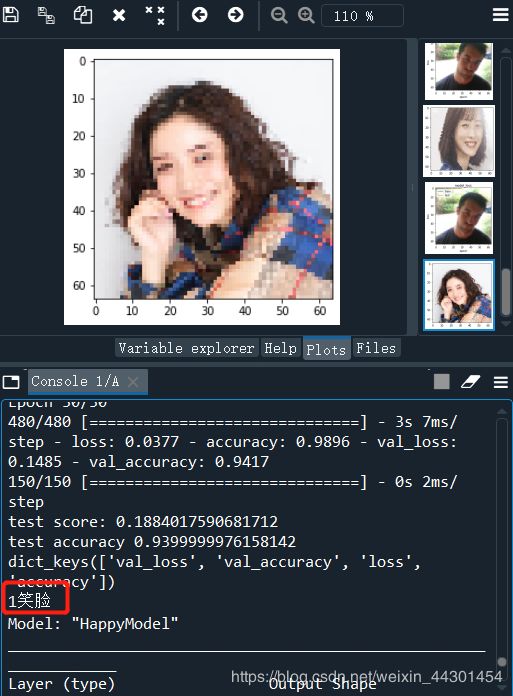
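The two curves in the plot come from the split that `validation_split=0.2` makes inside `fit`: Keras sets aside the last 20% of the training samples for validation and trains on the rest. A quick back-of-the-envelope sketch of what that means for this dataset, using only the numbers given in the post (the variable names are just for illustration):

```python
import math

num_train_images = 600   # size of the training set stated in the post
validation_split = 0.2   # value passed to fit()
batch_size = 20          # value passed to fit()

# Samples held out for validation and samples actually used for fitting.
num_val = round(num_train_images * validation_split)
num_fit = num_train_images - num_val

# Number of weight updates per epoch.
steps_per_epoch = math.ceil(num_fit / batch_size)

print(num_fit, num_val, steps_per_epoch)  # 480 120 24
```

So each epoch fits on only 480 images, and the validation curve is computed on 120 images, which is one reason it can look noisy.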

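# Test your own image
img_path = 'C:\\Users\\HP\\Desktop\\img03.png'
img = image.load_img(img_path, target_size=(64, 64))
imshow(img)
plt.show()
x = image.img_to_array(img)
x = np.expand_dims(x, axis=0)
# Scale to [0, 1] the same way as the training images
# (preprocess_input applies ImageNet-style preprocessing, which this model was not trained on)
x = x/255.
# The sigmoid output is a probability, so threshold it at 0.5 instead of testing == 1
if happy_model.predict(x)[0][0] > 0.5:
    print("1: smiling face")
else:
    print("0: crying face")

happy_model.summary()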
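`happy_model.summary()` prints the output shape and parameter count of each layer. As a cross-check, the same numbers can be worked out by hand from the layer definitions in `HappyModel`. The sketch below does that arithmetic in plain Python, assuming the 64x64x3 input described in the post and the default 'valid' padding of `Conv2D`; the variable names are only for illustration.

```python
# Hand calculation of HappyModel's shapes and parameter counts.
height, width, channels = 64, 64, 3

# ZeroPadding2D((3, 3)) adds 3 pixels on every side: 70 x 70 x 3.
height, width = height + 2 * 3, width + 2 * 3

# Conv2D(32, (7, 7), strides=(1, 1)) with 'valid' padding: 64 x 64 x 32.
filters, kernel = 32, 7
conv_params = kernel * kernel * channels * filters + filters  # weights + biases
height, width, channels = height - kernel + 1, width - kernel + 1, filters

# BatchNormalization keeps 4 values per channel (gamma, beta, moving mean,
# moving variance); only gamma and beta are trained.
bn_params = 4 * channels
bn_trainable = 2 * channels

# MaxPooling2D((2, 2)) halves height and width: 32 x 32 x 32.
height, width = height // 2, width // 2

# Flatten, then Dense(1): one weight per input plus one bias.
flat_size = height * width * channels
dense_params = flat_size * 1 + 1

total_params = conv_params + bn_params + dense_params
trainable_params = conv_params + bn_trainable + dense_params

print(flat_size, conv_params, bn_params, dense_params)
print(total_params, trainable_params)
```

Almost all of the parameters sit in the single fully connected layer, because it is fed the flattened 32x32x32 feature map directly.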

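Once you run the code you will see that the results are very inaccurate. The dataset is standard labeled data, but a model like this needs a large amount of data before its accuracy comes up after training. With a small dataset, the recognition rate stays low.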
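Data volume aside, one more thing worth checking when a prediction on your own image looks wrong is how the network output is turned into a label. A sigmoid unit outputs a probability strictly between 0 and 1, so the class is usually read by thresholding at 0.5 rather than testing for equality with 1. A minimal sketch in plain Python, where `sigmoid` and `to_label` are hypothetical helpers and not part of Keras:

```python
import math

def sigmoid(z):
    """Logistic function, the activation used by the final Dense layer."""
    return 1.0 / (1.0 + math.exp(-z))

def to_label(probability, threshold=0.5):
    """Map a predicted probability to a class label (1 = smiling)."""
    return 1 if probability > threshold else 0

for z in (-2.0, 0.3, 4.0):
    p = sigmoid(z)
    # p == 1 is False even for a confident prediction such as z = 4.0
    print(z, round(p, 3), p == 1, to_label(p))
```

Even a confident output of about 0.98 is not equal to 1, so an equality test would label it as class 0.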

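Finally, remember to save the code as .py files and put them somewhere Python can run them from, or you will find it reporting baffling errors. In particular, kt_utils.py has to be where `import kt_utils` can find it, for example in the same folder as the main program.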

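This little blogger originally meant to tweak the code on top of the expert's version, but got too lazy! Brain stopped thinking, so… I just copied it as is, hahaha.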

For example, you could change the number of fully connected layers, raise the learning rate, speed up training, and so on!