Building neural networks with Keras

1 Simple regression

#coding=utf-8

import numpy as np

np.random.seed(1337)

from keras.models import Sequential

from keras.layers import Dense

import matplotlib.pyplot as plt

# create some data

X = np.linspace(-1, 1, 200)

np.random.shuffle(X)

Y = 0.5*X + 2 + np.random.normal(0, 0.05, (200,))

#plot data

plt.scatter(X, Y)

plt.show()

X_train, Y_train = X[:160], Y[:160]

X_test, Y_test = X[160:], Y[160:]

plt.scatter(X_test, Y_test)

plt.show()

# build a neural network from the 1st layer to the last layer

model = Sequential()

model.add(Dense(units=1, input_shape=(1,)))

# choose loss function and optimizing method

model.compile(optimizer='sgd', loss='mse')

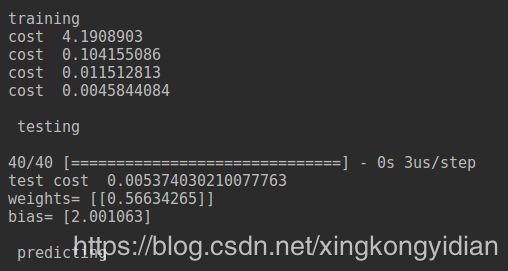

#training

print('\ntraining')

for step in range(301):

    cost = model.train_on_batch(X_train, Y_train)

    if step % 100 == 0:

        print('cost ', cost)

#test

print('\n testing')

cost = model.evaluate(X_test, Y_test, batch_size=40)

print('test cost ', cost)

W, b = model.layers[0].get_weights()

print('weights=', W, '\nbias=', b)

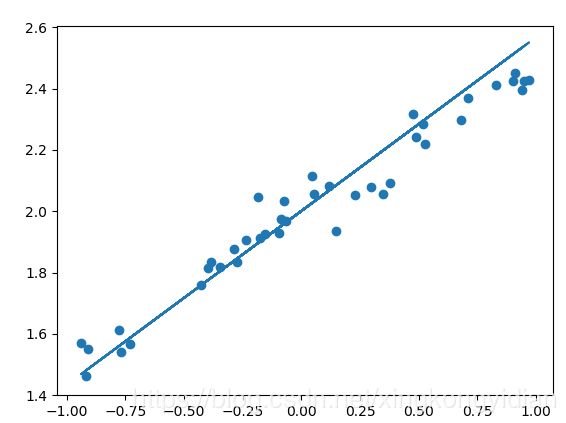

# plotting the prediction

print('\n predicting')

Y_pred = model.predict(X_test)

plt.scatter(X_test, Y_test) # scatter: the test points

plt.plot(X_test, Y_pred) # plot: the fitted line

plt.show()
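
What the single `Dense(units=1)` layer is fitting here is just a slope and an intercept, and minimizing `mse` by gradient descent can be sketched without Keras. The following is a minimal pure-Python sketch, not the Keras implementation: the helper name `fit_line`, the zero initialization, the learning rate of 0.1 and the 500 steps are all assumptions chosen for illustration and differ from Keras's own defaults.

```python
import random

def fit_line(xs, ys, lr=0.1, steps=500):
    """Full-batch gradient descent on the mean squared error of y ~ w*x + b."""
    w, b = 0.0, 0.0  # illustrative zero initialization (an assumption)
    n = len(xs)
    for _ in range(steps):
        # residuals of the current line on every sample
        errs = [w * x + b - y for x, y in zip(xs, ys)]
        # gradients of mean((w*x + b - y)**2) with respect to w and b
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errs, xs))
        grad_b = 2.0 / n * sum(errs)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# same kind of data as above: y = 0.5*x + 2 plus small Gaussian noise
random.seed(1337)
xs = [-1 + 2 * i / 199 for i in range(200)]
ys = [0.5 * x + 2 + random.gauss(0, 0.05) for x in xs]

w, b = fit_line(xs, ys)
print('w =', round(w, 2), 'b =', round(b, 2))
```

The recovered `w` and `b` should land close to the 0.5 and 2 used to generate the data, which is also what the printed Keras weights and bias are expected to approach.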

2 Simple classification

#coding=utf-8

import numpy as np

np.random.seed(1337)

import matplotlib.pyplot as plt

from keras.models import Sequential

from keras.datasets import mnist

from keras.utils import np_utils

from keras.layers import Dense, Activation

from keras.optimizers import RMSprop

# X_train shape (60000, 28, 28), y_train shape (60000,); X_test shape (10000, 28, 28), y_test shape (10000,)

(X_train, y_train), (X_test, y_test)=mnist.load_data()

# data pre-processing

X_train =X_train.reshape(X_train.shape[0], -1)/255 # normalize

X_test = X_test.reshape(X_test.shape[0], -1)/255 # normalize

y_train = np_utils.to_categorical(y_train, num_classes=10) # one-hot

y_test = np_utils.to_categorical(y_test, num_classes=10) # one-hot

print('x_train samples: %d' % (X_train.shape[0]))

print('\nx_test samples: %d' % (X_test.shape[0]))

# Another way to build neural net

model = Sequential([

    Dense(32, input_dim=784),

    Activation('relu'),

    Dense(10),

    Activation('softmax'),

])

# Another way to define optimizer

rmsprop =RMSprop(lr=0.001, rho=0.9, epsilon=1e-08, decay=0.0)

# add metrics to get more results we want

model.compile(optimizer=rmsprop,

              loss='categorical_crossentropy',

              metrics=['accuracy'])

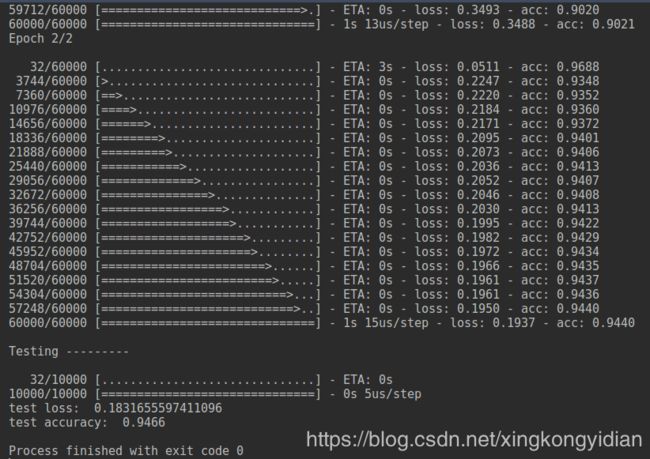

print('Training --------')

# Another way to train model

model.fit(X_train, y_train, epochs=2, batch_size=32)

print('\nTesting ---------')

# evaluate the model with the metrics we defined earlier

loss, accuracy = model.evaluate(X_test, y_test)

print('test loss: ', loss)

print('test accuracy: ', accuracy)
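
The `categorical_crossentropy` loss expects each label as a vector with one entry per class, which is why the integer digits are one-hot encoded before training. As a rough illustration of that encoding (a pure-Python sketch with a hypothetical helper `one_hot`, not the Keras utility itself):

```python
def one_hot(labels, num_classes):
    """Return one row per label, with 1.0 at the label's index and 0.0 elsewhere."""
    rows = []
    for label in labels:
        row = [0.0] * num_classes
        row[label] = 1.0
        rows.append(row)
    return rows

# three example digit labels, encoded over the 10 MNIST classes
encoded = one_hot([5, 0, 9], num_classes=10)
for row in encoded:
    print(row)
```

Each row then lines up with the 10 softmax outputs of the final layer, so the loss can compare the predicted probabilities with the target class position by position.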

3 CNN

#coding=utf-8

import numpy as np

np.random.seed(1337) # for reproducibility

from keras.datasets import mnist

from keras.utils import np_utils

from keras.models import Sequential

from keras.layers import Dense, Activation, Convolution2D, MaxPooling2D, Flatten

from keras.optimizers import Adam

# download the mnist to the path '~/.keras/datasets/' if it is the first time to be called

# training X shape (60000, 28, 28), Y shape (60000,); test X shape (10000, 28, 28), Y shape (10000,)

(x_train, y_train), (x_test, y_test) = mnist.load_data()

# data pre-processing

x_train = x_train.reshape(-1, 1, 28, 28)/255.

x_test = x_test.reshape(-1, 1, 28, 28)/255.

y_train = np_utils.to_categorical(y_train, num_classes=10)

y_test = np_utils.to_categorical(y_test, num_classes=10)

# Another way to build your CNN

model = Sequential()

# Conv layer 1 output shape (32, 28, 28)

model.add(

    Convolution2D(

        batch_input_shape=(None, 1, 28, 28),

        filters=32,

        kernel_size=(5, 5),

        strides=1,

        padding='same',

        data_format='channels_first',

    )

)

model.add(Activation('relu'))

# Pooling layer 1 (max pooling) output shape (32, 14, 14)

model.add(MaxPooling2D(

    pool_size=2,

    strides=2,

    padding='same',

    data_format='channels_first',

))

# Conv layer 2 output shape (64, 14, 14)

model.add(Convolution2D(filters=64,

                        kernel_size=(2, 2),

                        strides=(1, 1),  # stride 1 keeps the 14x14 size the comments state

                        padding='same',

                        data_format='channels_first',

                        ))

model.add(Activation('relu'))

# Pooling layer 2 (max pooling) output shape (64, 7, 7)

model.add(MaxPooling2D(pool_size=(2, 2),

                       data_format='channels_first'

                       ))

# Fully connected layer 1 input shape (64 * 7 * 7) = (3136), output shape (1024)

model.add(Flatten())

model.add(Dense(1024))

model.add(Activation('relu'))

# Fully connected layer 2 to shape (10) for 10 classes

model.add(Dense(10))

model.add(Activation('softmax'))

# Another way to define your optimizer

adam = Adam(lr=1e-4)

# We add metrics to get more results you want to see

model.compile(optimizer=adam,

              loss='categorical_crossentropy',

              metrics=['accuracy'])

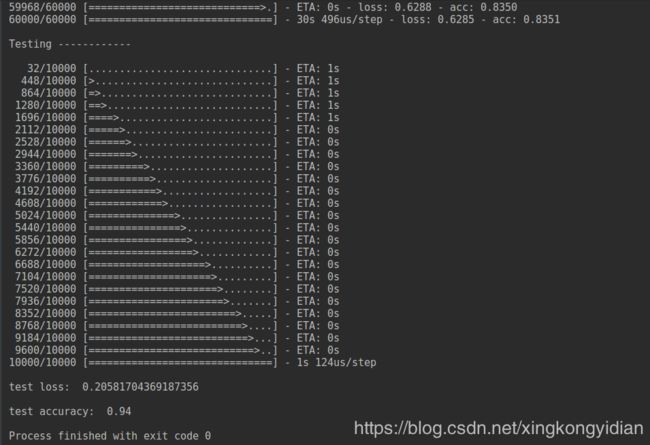

print('Training ------------')

# Another way to train the model

model.fit(x=x_train, y=y_train, epochs=1, batch_size=64,)

print('\nTesting ------------')

# Evaluate the model with the metrics we defined earlier

loss, accuracy = model.evaluate(x=x_test, y=y_test)

print('\ntest loss: ', loss)

print('\ntest accuracy: ', accuracy)
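
The output shapes quoted in the layer comments can be checked by hand. The sketch below is a pure-Python helper (the names `out_size` and `trace` are hypothetical) that applies the usual size rules: with `'same'` padding only the stride shrinks the feature map, while with `'valid'` padding the window must fit entirely inside it. It assumes the second pooling layer uses the default `'valid'` padding with a stride equal to its pool size, and it traces the network for both stride 1 and stride 2 in the second convolution to show that only stride 1 yields the 64 * 7 * 7 = 3136 inputs stated for the first fully connected layer.

```python
import math

def out_size(size, kernel, stride, padding):
    """Spatial output size of a conv/pool layer along one dimension."""
    if padding == 'same':
        # 'same' pads the input, so only the stride shrinks the output
        return math.ceil(size / stride)
    if padding == 'valid':
        # 'valid' uses no padding, so the window must fit entirely
        return (size - kernel) // stride + 1
    raise ValueError('unknown padding: ' + padding)

def trace(conv2_stride):
    """Follow a 28x28 input through the two conv and two pooling layers."""
    s = out_size(28, kernel=5, stride=1, padding='same')            # conv 1
    s = out_size(s, kernel=2, stride=2, padding='same')             # pool 1
    s = out_size(s, kernel=2, stride=conv2_stride, padding='same')  # conv 2
    s = out_size(s, kernel=2, stride=2, padding='valid')            # pool 2
    return s, 64 * s * s  # final side length, flattened feature count

side_s1, flat_s1 = trace(conv2_stride=1)
side_s2, flat_s2 = trace(conv2_stride=2)
print('conv 2 stride 1:', side_s1, flat_s1)
print('conv 2 stride 2:', side_s2, flat_s2)
```

With stride 2 in the second convolution the feature map would end up 3x3 rather than 7x7, so the stride is the value to check whenever the flattened size does not match the comments.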

4 RNN classifier

#coding=utf-8

import numpy as np

np.random.seed(1337) # for reproducibility

from keras.datasets import mnist

from keras.utils import np_utils

from keras.models import Sequential

from keras.layers import SimpleRNN, Activation, Dense

from keras.optimizers import Adam

# set TIME_STEPS, INPUT_SIZE, BATCH_SIZE, BATCH_INDEX, OUTPUT_SIZE, CELL_SIZE and LR

TIME_STEPS = 28

INPUT_SIZE = 28

BATCH_SIZE = 50

BATCH_INDEX = 0

OUTPUT_SIZE = 10

CELL_SIZE = 50

LR = 0.0001

# download the mnist to the path '~/.keras/datasets/' if it is the first time to be called

# x_train shape (60000, 28, 28), y_train shape (60000,); x_test shape (10000, 28, 28), y_test shape (10000,)

(x_train, y_train), (x_test, y_test) = mnist.load_data()

# data pre-processing

x_train = x_train.reshape(-1, 28, 28)/255 # normalize

x_test = x_test.reshape(-1, 28, 28)/255

y_train = np_utils.to_categorical(y_train, num_classes=10)

y_test = np_utils.to_categorical(y_test, num_classes=10)

# build RNN model

model = Sequential()

# RNN cell

model.add(SimpleRNN(

    batch_input_shape=(None, TIME_STEPS, INPUT_SIZE),

    units=CELL_SIZE

))

# output layer

model.add(Dense(

    OUTPUT_SIZE

))

model.add(Activation('softmax'))

# optimizer

adam = Adam(LR)

model.compile(optimizer=adam,

              loss='categorical_crossentropy',

              metrics=['accuracy'])

# training

for step in range(8001):

    X_batch = x_train[BATCH_INDEX: BATCH_INDEX + BATCH_SIZE, :, :]

    Y_batch = y_train[BATCH_INDEX: BATCH_INDEX + BATCH_SIZE, :]

    cost = model.train_on_batch(X_batch, Y_batch)

    BATCH_INDEX += BATCH_SIZE

    BATCH_INDEX = 0 if BATCH_INDEX >= x_train.shape[0] else BATCH_INDEX

    if step % 500 == 0:

        cost, accuracy = model.evaluate(x_test, y_test, batch_size=x_test.shape[0], verbose=False)

        print('test cost:', cost, 'test accuracy: ', accuracy)

5 RNN regressor

#coding=utf-8

import numpy as np

np.random.seed(1337) # for reproducibility

import matplotlib.pyplot as plt

from keras.models import Sequential

from keras.layers import LSTM, TimeDistributed, Dense

from keras.optimizers import Adam

# set BATCH_START, TIME_STEPS, BATCH_SIZE, INPUT_SIZE, OUTPUT_SIZE, CELL_SIZE and LR

BATCH_START = 0

TIME_STEPS = 20

BATCH_SIZE = 50

INPUT_SIZE = 1

OUTPUT_SIZE = 1

CELL_SIZE =20

LR = 0.006

def get_batch():

    global BATCH_START, TIME_STEPS

    # xs shape (50 samples, 20 steps)

    xs = np.arange(BATCH_START, BATCH_START+TIME_STEPS*BATCH_SIZE).reshape((BATCH_SIZE, TIME_STEPS)) / (10*np.pi)

    seq = np.sin(xs)

    res = np.cos(xs)

    BATCH_START += TIME_STEPS

    # plt.plot(xs[0, :], res[0, :], 'r', xs[0, :], seq[0, :], 'b--')

    # plt.show()

    return [seq[:, :, np.newaxis], res[:, :, np.newaxis], xs]

#build model

model = Sequential()

# build a LSTM RNN

model.add(LSTM(

    batch_input_shape=(BATCH_SIZE, TIME_STEPS, INPUT_SIZE),

    units=CELL_SIZE,

    return_sequences=True,

    stateful=True

))

# add output layer

model.add(TimeDistributed(Dense(OUTPUT_SIZE)))

adam = Adam(lr=LR)

model.compile(optimizer=adam,

              loss='mse'

              )

print('Training ------------')

for step in range(501):

    X_batch, Y_batch, xs = get_batch()

    cost = model.train_on_batch(X_batch, Y_batch)

    pred = model.predict(X_batch, BATCH_SIZE)

    plt.plot(xs[0, :], Y_batch[0].flatten(), 'r',

             xs[0, :], pred.flatten()[:TIME_STEPS], 'b--')

    plt.ylim((-1.2, 1.2))

    plt.draw()

    plt.pause(0.1)

    if step % 10 == 0:

        print('train cost: ', cost)

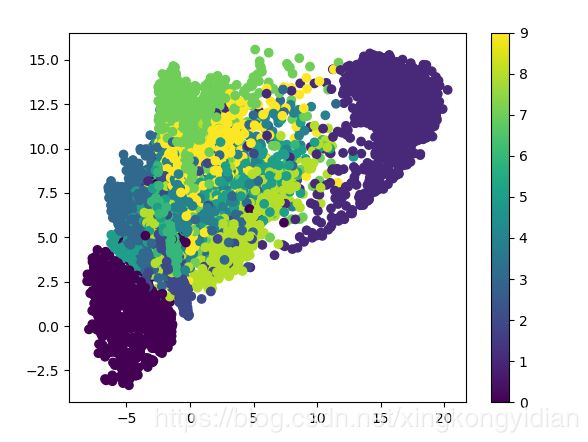
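
The way `get_batch` lays out its samples matters because the LSTM is built with `stateful=True`: the final state of sample i in one batch is reused as the initial state of sample i in the next batch. The sketch below rebuilds the same grid of sample positions in pure Python (the helper name `make_batch` is hypothetical, and it takes the start index as an argument instead of using a global) to show that advancing the start by `TIME_STEPS` makes every row of the next batch continue exactly where the same row of the previous batch stopped.

```python
import math

def make_batch(batch_start, time_steps=20, batch_size=50):
    """Rebuild the (batch_size, time_steps) grid of positions used by get_batch."""
    # row r, column c holds index batch_start + r*time_steps + c, scaled by 10*pi
    xs = [[(batch_start + r * time_steps + c) / (10 * math.pi)
           for c in range(time_steps)]
          for r in range(batch_size)]
    seq = [[math.sin(x) for x in row] for row in xs]  # network input
    res = [[math.cos(x) for x in row] for row in xs]  # regression target
    return seq, res, xs

# two consecutive batches, the second starting TIME_STEPS later
seq1, res1, xs1 = make_batch(batch_start=0)
seq2, res2, xs2 = make_batch(batch_start=20)

# spacing between neighbouring positions within a row
step = 1 / (10 * math.pi)
print('row 0, batch 1 ends at  ', xs1[0][-1])
print('row 0, batch 2 starts at', xs2[0][0])
print('gap equals one step:', math.isclose(xs2[0][0] - xs1[0][-1], step))
```

Because each row continues its own stretch of the sine wave from batch to batch, carrying the LSTM state across batches is meaningful rather than mixing unrelated sequences.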

6 autoencoder

#coding=utf-8

import numpy as np

np.random.seed(1337) # for reproducibility

from keras.datasets import mnist

from keras.models import Model

from keras.layers import Dense, Input

import matplotlib.pyplot as plt

# download the mnist to the path '~/.keras/datasets/' if it is the first time to be called

# x_train shape (60000, 28, 28); x_test shape (10000, 28, 28), y_test shape (10000,)

(x_train, _) , (x_test, y_test) = mnist.load_data()

# data pre-processing: min-max normalize pixels to the range [-0.5, 0.5]

x_train = x_train.astype('float32')/255. - 0.5

x_test = x_test.astype('float32')/255. - 0.5

x_train = x_train.reshape((x_train.shape[0], -1))

x_test = x_test.reshape((x_test.shape[0], -1))

print(x_train.shape)

print(x_test.shape)

# in order to plot in a 2D figure

encoding_dim = 2

# this is our input placeholder

input_img = Input(shape=(784,))

# encoder: 4 layers with sizes 128, 64, 10 and encoding_dim

encoded = Dense(128, activation='relu')(input_img)

encoded = Dense(64, activation='relu')(encoded)

encoded = Dense(10, activation='relu')(encoded)

encoder_output = Dense(encoding_dim)(encoded)

# decoder: 4 layers with sizes 10, 64, 128 and 784

decoded = Dense(10, activation='relu')(encoder_output)

decoded = Dense(64, activation='relu')(decoded)

decoded = Dense(128, activation='relu')(decoded)

decoder_output = Dense(784, activation='tanh')(decoded)

# construct the autoencoder model

autoencoder = Model(inputs=input_img, outputs=decoder_output)

# construct the encoder model for plotting

encoder = Model(inputs=input_img, outputs=encoder_output)

# compile autoencoder

autoencoder.compile(optimizer='adam', loss='mse')

# training

autoencoder.fit(x=x_train, y=x_train,

                epochs=20,

                batch_size=256,

                shuffle=True)

# plotting

encoded_imgs = encoder.predict(x_test)

plt.scatter(encoded_imgs[:, 0], encoded_imgs[:, 1], c=y_test)

plt.colorbar()

plt.show()

7 save

#coding=utf-8

import numpy as np

np.random.seed(1337)

from keras.models import Sequential

from keras.layers import Dense

from keras.models import load_model

X = np.linspace(-1, 1, 200)

np.random.shuffle(X)

Y = 0.5*X + 2 + np.random.normal(0, 0.05, (200, ))

X_train, Y_train = X[:160], Y[:160]

X_test, Y_test = X[160:], Y[160:]

model = Sequential()

model.add(Dense(units=1, input_shape=(1,)))

model.compile(loss='mse', optimizer='sgd')

for step in range(301):

    cost = model.train_on_batch(X_train, Y_train)

    if step % 100 == 0:

        print('cost ', cost)

# save

print('test before save: ', model.predict(X_test[0:2]))

model.save('my_model.h5')

del model

# load

model = load_model('my_model.h5')

print('test after load: ', model.predict(X_test[0:2]))