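PYNQ-Z2 Debugging Notes: A Remote Face Detection Program Based on the PYNQ-Z2

Thank you for reading this post. It is my first blog on CSDN, and I appreciate your support!

A Remote Face Detection Program Based on the PYNQ-Z2

- Preparation
- Hardware peripherals
- Code and operation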

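1. Preparation

I won't go into the PYNQ-Z2 itself here; for setup and boot instructions, see the official video tutorial at http://www.digilent.com.cn/studyinfo/67.html. The remote face detection program in this post is developed entirely on the PS (Processing System) side, so the board is booted from the image file on the SD card.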

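2. Hardware peripherals

One PYNQ-Z2 board, powered over Micro-USB or by a power adapter; an Ethernet cable from the board's network port to the Internet (a USB wireless adapter and WiFi also work, as described in another of my posts); and a USB camera plugged into the board.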

3. Code and operation

The code is split into two parts: sever.ipynb runs on the board, and client.py runs on the PC.

The full code of sever.ipynb:

import socket
import threading
import struct
import time
import cv2
import numpy


class Carame_Accept_Object:
    def __init__(self, S_addr_port=("", 8880)):
        self.resolution = (640, 480)  # output resolution (width, height)
        self.img_fps = 15  # frames to send per second
        self.addr_port = S_addr_port
        self.Set_Socket(self.addr_port)

    # Set up the listening socket
    def Set_Socket(self, S_addr_port):
        self.server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        self.server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)  # allow the port to be reused
        self.server.bind(S_addr_port)
        self.server.listen(5)
        # print("the process work in the port:%d" % S_addr_port[1])

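def check_option(object, client):
    # Decode the client's request to get the frame rate and resolution.
    # "<lhh" is always 4 + 2 + 2 = 8 bytes; native "lhh" is 12 bytes on
    # 64-bit Linux and macOS, which would not match recv(8).
    data = client.recv(8)
    if len(data) < 8:
        return 0  # client disconnected before sending a full request
    info = struct.unpack('<lhh', data)
    if info[0] > 888:
        object.img_fps = int(info[0]) - 888  # frame rate (888 is the check value)
        # resolution requested by the client, stored as a tuple
        object.resolution = (info[1], info[2])
        return 1
    else:
        return 0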

def RT_Image(object, client, D_addr):
    if check_option(object, client) == 0:
        return
    camera = cv2.VideoCapture(0)  # open the USB camera
    # The value after IMWRITE_JPEG_QUALITY is the JPEG quality (0-100);
    # this code reuses img_fps for it, so the default quality is 15.
    img_param = [int(cv2.IMWRITE_JPEG_QUALITY), object.img_fps]
    # Load the Haar cascades once, before the loop, not on every frame.
    # face_cascade = cv2.CascadeClassifier(r'.\haarcascade_frontalface_default.xml')
    # eye_cascade = cv2.CascadeClassifier(r'.\haarcascade_eye.xml')
    face_cascade = cv2.CascadeClassifier(
        '/home/xilinx/jupyter_notebooks/base/video/data/'
        'haarcascade_frontalface_default.xml')
    eye_cascade = cv2.CascadeClassifier(
        '/home/xilinx/jupyter_notebooks/base/video/data/'
        'haarcascade_eye.xml')
    while True:
        time.sleep(0.1)  # pause this thread for 0.1 s
        ok, object.img = camera.read()  # grab one frame
        if not ok:
            camera.release()  # the camera delivered no frame; stop serving
            return
        # Face detection: blue boxes around faces, green boxes around eyes
        np_frame = object.img
        gray = cv2.cvtColor(np_frame, cv2.COLOR_BGR2GRAY)
        faces = face_cascade.detectMultiScale(gray, 1.3, 5)
        for (x, y, w, h) in faces:
            cv2.rectangle(np_frame, (x, y), (x + w, y + h), (255, 0, 0), 2)
            roi_gray = gray[y:y + h, x:x + w]
            roi_color = np_frame[y:y + h, x:x + w]
            eyes = eye_cascade.detectMultiScale(roi_gray)
            for (ex, ey, ew, eh) in eyes:
                cv2.rectangle(roi_color, (ex, ey), (ex + ew, ey + eh), (0, 255, 0), 2)
        object.img = cv2.resize(object.img, object.resolution)  # resize as requested (resolution must be a tuple)
        _, img_encode = cv2.imencode('.jpg', object.img, img_param)  # encode the frame as JPEG
        img_code = numpy.array(img_encode)  # convert to an array
        object.img_data = img_code.tobytes()  # raw JPEG bytes (tostring() is deprecated)
        try:
            # Send an 8-byte header (length, width, height) followed by the image;
            # sendall() keeps sending until every byte has gone out.
            client.sendall(
                struct.pack("<lhh", len(object.img_data), object.resolution[0], object.resolution[1]) + object.img_data)
        except OSError:
            camera.release()  # client went away; free the camera
            return

if __name__ == '__main__':
    camera = Carame_Accept_Object()
    while True:
        client, D_addr = camera.server.accept()
        # Serve each client in its own thread
        clientThread = threading.Thread(target=RT_Image, args=(camera, client, D_addr))
        clientThread.start()
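
The wire format used by the server is easier to see in isolation. The client first sends one 8-byte request (check value 888 plus the frame rate, then width and height), and the server answers with a stream of frames, each an 8-byte header followed by the JPEG bytes. Below is a minimal sketch of that framing, not part of the original program: the helper names (pack_request, unpack_request, pack_frame, unpack_frame_header) are made up for illustration, and the format is written as "<lhh" on the assumption that a fixed little-endian layout is wanted so the header is 8 bytes on any platform.

```python
import struct

HEADER_FORMAT = "<lhh"  # 4-byte length or check value, then two 2-byte ints
HEADER_SIZE = struct.calcsize(HEADER_FORMAT)  # always 8 with the "<" prefix
CHECK_VALUE = 888  # same constant as in the listings


def pack_request(fps, width, height):
    """Client -> server: check value plus frame rate, then the resolution."""
    return struct.pack(HEADER_FORMAT, CHECK_VALUE + fps, width, height)


def unpack_request(data):
    """Return (fps, (width, height)), or None if the check value is missing."""
    first, width, height = struct.unpack(HEADER_FORMAT, data)
    if first <= CHECK_VALUE:
        return None
    return first - CHECK_VALUE, (width, height)


def pack_frame(jpeg_bytes, width, height):
    """Server -> client: 8-byte header followed by the JPEG payload."""
    return struct.pack(HEADER_FORMAT, len(jpeg_bytes), width, height) + jpeg_bytes


def unpack_frame_header(header):
    """Return (payload_length, width, height) from an 8-byte header."""
    return struct.unpack(HEADER_FORMAT, header)


if __name__ == "__main__":
    request = pack_request(15, 384, 288)
    print(len(request), unpack_request(request))
    frame = pack_frame(b"\xff\xd8 placeholder payload \xff\xd9", 384, 288)
    print(unpack_frame_header(frame[:HEADER_SIZE]))
```

Without the "<" prefix, struct uses the platform's native sizes, so the same format string can describe 8 bytes on one machine and 12 on another. That matters here because both sides read the header with recv(8).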

The full code of client.py:

# Client
import socket
import cv2
import threading
import struct
import numpy


class Camera_Connect_Object:
    def __init__(self, D_addr_port=("", 8880)):
        self.resolution = [384, 288]
        self.addr_port = list(D_addr_port)  # copy, so the default value is never mutated
        self.src = 888 + 15  # frame rate agreed by both sides; 888 is the check value
        self.interval = 0  # interval between displayed images
        self.img_fps = 15  # frames to transfer per second

    def Set_socket(self):
        self.client = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        self.client.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)

    def Socket_Connect(self):
        self.Set_socket()
        self.client.connect(tuple(self.addr_port))  # connect() needs a tuple
        print("IP is %s:%d" % (self.addr_port[0], self.addr_port[1]))

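    def RT_Image(self):
        # Send the requested frame rate and resolution in the agreed format.
        # "<lhh" is always 8 bytes; native "lhh" is 12 bytes on 64-bit Linux and macOS.
        self.name = self.addr_port[0] + " Camera"
        self.client.sendall(struct.pack("<lhh", self.src, self.resolution[0], self.resolution[1]))
        while True:
            header = self.client.recv(8)
            if len(header) < 8:
                break  # connection closed or header incomplete
            info = struct.unpack("<lhh", header)
            buf_size = info[0]  # total length of the incoming image
            if buf_size:
                try:
                    self.buf = b""  # bytes received so far
                    while buf_size:  # keep reading until the whole image has arrived
                        temp_buf = self.client.recv(buf_size)
                        if not temp_buf:
                            raise ConnectionError("server closed the connection")
                        buf_size -= len(temp_buf)
                        self.buf += temp_buf
                    # Decode only once the image is complete
                    data = numpy.frombuffer(self.buf, dtype='uint8')  # view the bytes as a uint8 array
                    self.image = cv2.imdecode(data, 1)  # decode the JPEG
                    cv2.imshow(self.name, self.image)  # show the image
                except (OSError, cv2.error):
                    pass  # skip a frame that could not be received or decoded
            if cv2.waitKey(10) == 27:  # refresh every 10 ms; press ESC (27) to quit
                break
        self.client.close()
        cv2.destroyAllWindows()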

    def Get_Data(self, interval):
        showThread = threading.Thread(target=self.RT_Image)
        showThread.start()


if __name__ == '__main__':
    camera = Camera_Connect_Object()
    camera.addr_port[0] = input("Please input IP:")
    camera.addr_port = tuple(camera.addr_port)
    camera.Socket_Connect()
    camera.Get_Data(camera.interval)
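
One detail of the client worth isolating: TCP is a byte stream, so a single recv() call may return fewer bytes than requested, and an empty result means the peer has closed the connection. The following sketch shows one common way to read an exact number of bytes. The helper name recv_exact is hypothetical and not part of the original client; the demo uses socket.socketpair() only so it can run without a board.

```python
import socket


def recv_exact(sock, size):
    """Read exactly `size` bytes from `sock`, or raise if the peer closes early."""
    chunks = []
    remaining = size
    while remaining > 0:
        chunk = sock.recv(remaining)
        if not chunk:  # an empty result means the connection was closed
            raise ConnectionError("connection closed with %d bytes missing" % remaining)
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


if __name__ == "__main__":
    # Two connected sockets stand in for the board and the PC.
    sender, receiver = socket.socketpair()
    sender.sendall(b"12345678")  # pretend 8-byte header
    sender.sendall(b"payload")   # pretend image data
    print(recv_exact(receiver, 8), recv_exact(receiver, 7))
    sender.close()
    receiver.close()
```

With a helper like this, both the 8-byte header and the image body could be read the same way, under the assumption that a dropped connection should surface as an exception.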

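(1) First, make sure the board has finished booting and is connected to the network. Look up the board's IP address so that you can open its shared folder, then copy sever.ipynb into the jupyter_notebooks folder on the board.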

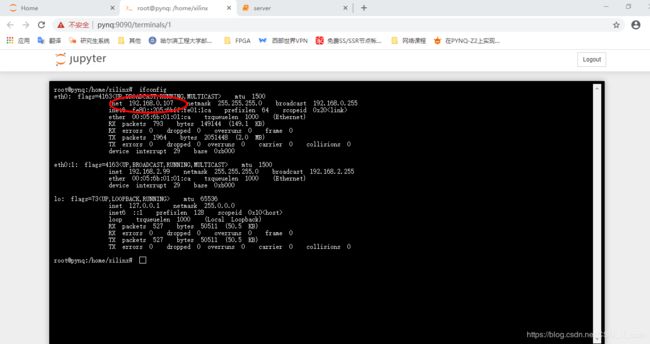

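How to look it up: enter http://pynq:9090 in a browser to open Jupyter, click New, then Terminal to open a terminal, and run: ifconfig

Open My Computer and type the following in the address bar: \\192.168.0.107\xilinx (substitute your own board's IP address). Press Enter to open the shared folder, go into the jupyter_notebooks folder, and copy sever.ipynb into it.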
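
If you would rather not read through the ifconfig output, the address can also be obtained from a notebook cell on the board. This is only a sketch of one common trick, not something the original workflow uses: the function name local_ip and the probe address 8.8.8.8 are assumptions, and on a board with several network interfaces it reports only the one used for the default route.

```python
import socket


def local_ip(probe_host="8.8.8.8", probe_port=80):
    """Best-effort guess of the address this machine uses for outgoing traffic."""
    probe = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # Connecting a UDP socket sends no packets; it only selects a route,
        # after which getsockname() reports the local address for that route.
        probe.connect((probe_host, probe_port))
        return probe.getsockname()[0]
    except OSError:
        return "127.0.0.1"  # no usable route, fall back to loopback
    finally:
        probe.close()


if __name__ == "__main__":
    print(local_ip())
```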

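(2) Next, open Jupyter in the browser, find sever.ipynb, open it, and run it.

(3) Finally, run client.py on the PC (I use PyCharm here). When the program prompts you, enter the board's IP address found earlier and press Enter. A window pops up showing the live camera image with face detection applied!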
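
If no window appears, it can help to check first whether the PC can reach the server's port at all. Here is a small sketch for that check, assuming the default port 8880 from the listings; the function name port_open is made up for this example. The probe connects and closes straight away, so the server sees it as a client that disconnected before sending a request.

```python
import socket


def port_open(host, port=8880, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, or name not resolved
        return False


if __name__ == "__main__":
    board_ip = input("Please input IP:")
    print("reachable" if port_open(board_ip) else "not reachable")
```

A result of "not reachable" usually points to the notebook not running, a wrong IP address, or a firewall between the PC and the board.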

The result is shown below:

Below is a photo of the debugging setup:

Source files and manual (Baidu Netdisk): https://pan.baidu.com/s/19XyZUIpR8oRWd3xvyJivBA

Extraction code: bvwo

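That's all for this post. If anything here is imprecise, corrections and technical discussion are welcome; leave a comment below or send me a private message and I will reply as soon as I can!

Just keep working hard and don't worry about what lies ahead. I'm striving to be a hard-working engineer who learns a little and improves a little every day!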