1、关于数据集提取中遇到的问题:

1)情况如下||

实现数据集提取的代码函数部分如下

def reconstruct_image():

for iin range(10):

if not os.path.exists('./{}'.format(i)):创建一个文件目录

os. makedirs('./{}'.format(i))

batch_size=1

for i in range(int( mnist.train.num_examples / batch_size)):

# x_data, y_data = mnist.train.next_batch(batch_size)

x_data, y_data = mnist.train.next_batch(batch_size)

img = Image.fromarray(np.reshape(np.array(x_data[0]*255, dtype="uint8"), newshape=(28,28)))

dir = np.argmax(y_data[0])

img.save('./{}/{}.bmp'.format(dir, i)保存到相应的位置中去

在过程中,应该要十分的注意这个环节|:

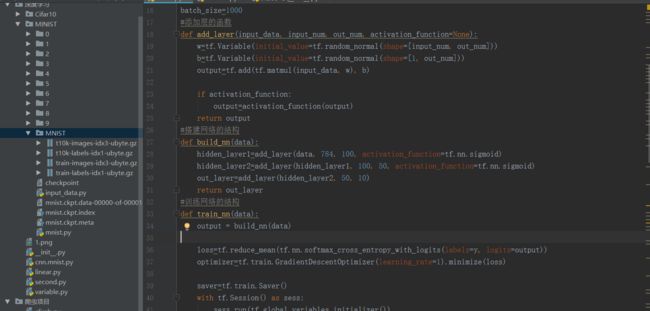

1)在实现手写体识别的时候,添加层的函数,在tensorboard中,有可视化的工具,进行卷积,计算,池化,再进行卷积,再过来就是搭建网络,随之就是训练网络的具体的代码实现如下:

#/usr/bin/python3

#-*- coding:utf-8 -*-

#Author: jackxing

#@Time: 2018/11/7

import tensorflowas tf

from tensorflow.examples.tutorials.mnistimport input_data

import os

from PILimport Image

import numpyas np

mnist=input_data.read_data_sets('./MNIST', one_hot=True)

x=tf.placeholder(dtype=tf.float32, shape=[None,784], name='x')

y=tf.placeholder(dtype=tf.float32, shape=[None,10], name='y')

batch_size=1000

#添加层的函数

def add_layer(input_data, input_num, out_num, activation_function=None):

w=tf.Variable(initial_value=tf.random_normal(shape=[input_num, out_num]))

b=tf.Variable(initial_value=tf.random_normal(shape=[1, out_num]))

output=tf.add(tf.matmul(input_data, w), b)

if activation_function:

output=activation_function(output)

return output

#搭建网络的结构

def build_nn(data):

hidden_layer1=add_layer(data, 784, 100, activation_function=tf.nn.sigmoid)

hidden_layer2=add_layer(hidden_layer1, 100, 50, activation_function=tf.nn.sigmoid)

out_layer=add_layer(hidden_layer2, 50, 10)

return out_layer

#训练网络的结构

def train_nn(data):

output = build_nn(data)

loss=tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=output))

optimizer=tf.train.GradientDescentOptimizer(learning_rate=1).minimize(loss)

saver=tf.train.Saver()

with tf.Session()as sess:

sess.run(tf.global_variables_initializer())

if not os.path.exists('checkpoint'):

for iin range(50):

epoch_cost =0

for _in range(int(mnist.train.num_examples /batch_size)):

x_data, y_data = mnist.train.next_batch(batch_size)

cost, _ = sess.run([loss, optimizer], feed_dict={x: x_data, y: y_data})

epoch_cost += cost

print('Ecoch', i, ':', epoch_cost)

accuracy = tf.reduce_mean(tf.cast(tf.equal(tf.argmax(y, 1),tf.argmax(output, 1)), tf.float32))

acc = sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels})

print(acc)

saver.save(sess, './mnist.ckpt')

else:

saver.restore(sess, './mnist.ckpt')

predict('./2.jpg', sess, output)

def read_data(path):

image=cv2.imread(path, cv2.IMREAD_GRAYSCALE)

w,h=image.shape

max=max(w,h)

processed_image=cv2.resize(image, dsize=(28, 28))

processed_image=np.resize(processed_image, new_shape=(1, 784))

return image, processed_image

def predict(image_path):

image, process_image=read_data(image_path)

result = sess.run(out.put, feed_dirct={x: processed_image})

result = np.argmax(result, 1)

print('The predicttion is ', result)

cv2.putText(image, 'The prodiction is {} '.format(result), (20, 20), cv2.FONT_HERSHEY_SIMPLEX, 1, color=(255, 255, 255))

cv2.imshow(image)

cv2.waitKey(0)

cv2.destroyAllWindows()

def reconstruct_image():

for iin range(10):

if not os.path.exists('./{}'.format(i)):

os. makedirs('./{}'.format(i))

batch_size=1

for iin range(int( mnist.train.num_examples / batch_size)):

# x_data, y_data = mnist.train.next_batch(batch_size)

x_data, y_data = mnist.train.next_batch(batch_size)

img = Image.fromarray(np.reshape(np.array(x_data[0]*255, dtype="uint8"), newshape=(28,28)))

dir = np.argmax(y_data[0])

img.save('./{}/{}.bmp'.format(dir, i))

train_nn(x)

# reconstruct_image()

print("overr")

同时也是十分的注重关于学习率提高的问题,有关于学习率提高的tf.train.exponential_decay(是通过指数变换进行实践出来)