Vulkan: Ray Tracing (Compute Shader Implementation)

Ray Tracing

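This post implements a simple GPU ray tracer with shadows and reflections in a compute shader. It does not cover Vulkan's recently released ray tracing extension VK_KHR_ray_tracing or the earlier VK_NV_ray_tracing extension; those are left for a later post. The ray tracing compute shader itself follows articles by the graphics programmer Inigo Quilez.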

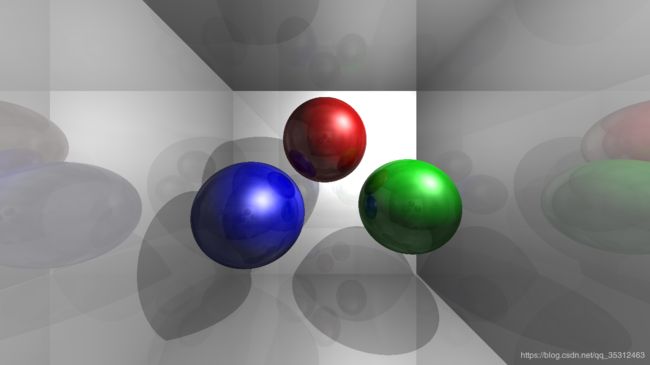

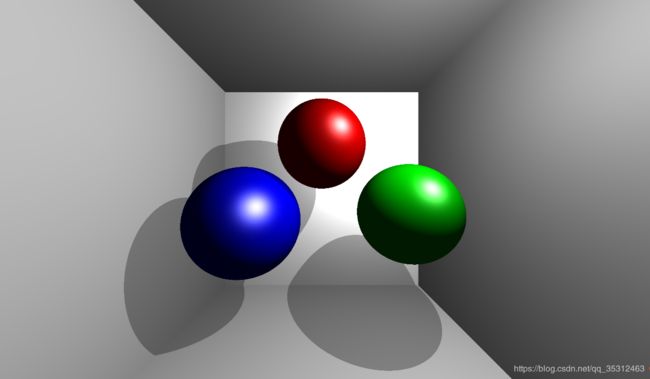

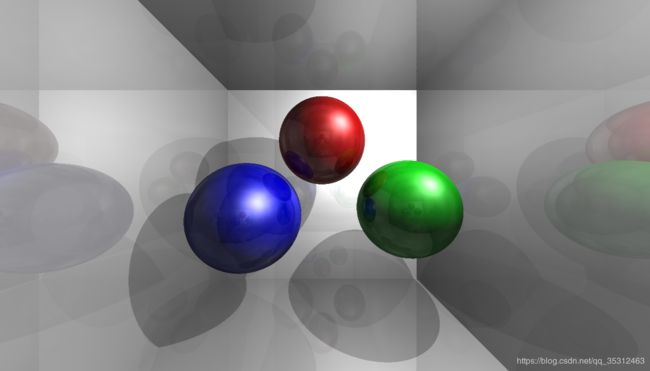

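This post covers only what a compute shader adds on top of the usual setup, plus the logic of the ray tracing compute shader itself. Basics such as UniformBuffer, Pipeline and CommandBuffer are not repeated here; see the earlier posts for those. The figure below shows the result for a single light source with 10 ray-traced reflection bounces: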

1. Creating the Compute Pipeline and Ray Tracing Buffers

First, the structs used to set up the compute shader:

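vks::Texture textureComputeTarget; // Written by the compute shader, then sampled by the fragment shader

// Resources for the graphics part

struct {

VkDescriptorSetLayout descriptorSetLayout; // Descriptor set layout of the shader that displays the ray traced image

VkDescriptorSet descriptorSetPreCompute; // Display shader bindings before the compute shader writes the image

VkDescriptorSet descriptorSet; // Display shader bindings after the compute shader writes the image

VkPipeline pipeline; // Pipeline that displays the ray traced image

VkPipelineLayout pipelineLayout; // Layout of the graphics pipeline

} graphics;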

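// Resources for the compute part

struct {

struct {

vks::Buffer spheres; // (Shader) storage buffer object with the scene spheres

vks::Buffer planes; // (Shader) storage buffer object with the scene planes

} storageBuffers;

vks::Buffer uniformBuffer; // Uniform buffer object containing the scene data

VkQueue queue; // Separate queue for compute commands (queue family may differ from the one used for graphics)

VkCommandPool commandPool; // Separate command pool (queue family may differ from the one used for graphics)

VkCommandBuffer commandBuffer; // Command buffer storing the dispatch commands and barriers

VkFence fence; // Synchronization fence to avoid rewriting the compute command buffer while it is still in use

VkDescriptorSetLayout descriptorSetLayout; // Compute shader binding layout

VkDescriptorSet descriptorSet; // Compute shader bindings

VkPipelineLayout pipelineLayout; // Layout of the compute pipeline

VkPipeline pipeline; // Compute ray tracing pipeline

struct UBOCompute { // Compute shader uniform block object

glm::vec3 lightPos;

float aspectRatio; // Aspect ratio of the viewport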

glm::vec4 fogColor = glm::vec4(0.0f);

struct {

glm::vec3 pos = glm::vec3(0.0f, 0.0f, 4.0f);

glm::vec3 lookat = glm::vec3(0.0f, 0.5f, 0.0f);

float fov = 10.0f;

} camera;

} ubo;

} compute;

// Sphere definition stored in the SSBO

struct Sphere { // The shader uses std140 layout, so every vec3 is followed by a float to fill a 16-byte vec4 slot

glm::vec3 pos;

float radius;

glm::vec3 diffuse;

float specular;

uint32_t id; // Id that identifies this sphere during ray tracing

glm::ivec3 _pad;

};

// Plane definition stored in the SSBO

struct Plane {

glm::vec3 normal;

float distance;

glm::vec3 diffuse;

float specular;

uint32_t id;

glm::ivec3 _pad;

};
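The std140 comment above can be checked at compile time. The following is a minimal sketch, assuming `Vec3` and `IVec3` as stand-ins for `glm::vec3` and `glm::ivec3` (both tightly packed with 4-byte components); it only verifies that the host-side layout lines up with 16-byte slots and is not part of the original sample.

```cpp
#include <cstddef>
#include <cstdint>

// Stand-ins for glm::vec3 / glm::ivec3 (assumption: three tightly packed 4-byte components).
struct Vec3  { float x, y, z; };
struct IVec3 { std::int32_t x, y, z; };

// Mirrors the host-side Sphere struct from the listing above.
struct Sphere {
    Vec3 pos;          // offset 0
    float radius;      // offset 12, completes the first 16-byte slot
    Vec3 diffuse;      // offset 16
    float specular;    // offset 28, completes the second 16-byte slot
    std::uint32_t id;  // offset 32
    IVec3 _pad;        // offset 36, pads the struct to 48 bytes
};

static_assert(offsetof(Sphere, radius) == 12, "radius must directly follow pos");
static_assert(offsetof(Sphere, diffuse) == 16, "diffuse must start the second 16-byte slot");
static_assert(offsetof(Sphere, id) == 32, "id must start the third 16-byte slot");
static_assert(sizeof(Sphere) == 48, "one sphere occupies three 16-byte slots");
```

The same reasoning applies to `Plane`, which has the same member types in the same order.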

We first add a prepareStorageBuffers function that creates the shader storage buffers (SSBOs) read by the compute shader.

// Set up and fill the compute shader storage buffers containing the primitives of the ray traced scene

void prepareStorageBuffers()

{

// Spheres

std::vector<Sphere> spheres;

spheres.push_back(newSphere(glm::vec3(1.75f, -0.5f, 0.0f), 1.0f, glm::vec3(0.0f, 1.0f, 0.0f), 32.0f));

spheres.push_back(newSphere(glm::vec3(0.0f, 1.0f, -0.5f), 1.0f, glm::vec3(1.0f, 0.0f, 0.0f), 32.0f));

spheres.push_back(newSphere(glm::vec3(-1.75f, -0.75f, -0.5f), 1.25f, glm::vec3(0.0f, 0.0f, 1.0f), 32.0f));

VkDeviceSize storageBufferSize = spheres.size() * sizeof(Sphere);

// Staging buffer

vks::Buffer stagingBuffer;

vulkanDevice->createBuffer(

VK_BUFFER_USAGE_TRANSFER_SRC_BIT,

VK_MEMORY_PROPERTY_HOST_VISIBLE_BIT | VK_MEMORY_PROPERTY_HOST_COHERENT_BIT,

&stagingBuffer,

storageBufferSize,

spheres.data());

vulkanDevice->createBuffer(

// The SSBO will be used as a storage buffer for the compute pipeline and as a vertex buffer in the graphics pipeline

VK_BUFFER_USAGE_VERTEX_BUFFER_BIT | VK_BUFFER_USAGE_STORAGE_BUFFER_BIT | VK_BUFFER_USAGE_TRANSFER_DST_BIT,

VK_MEMORY_PROPERTY_DEVICE_LOCAL_BIT,

&compute.storageBuffers.spheres,

storageBufferSize);

// Copy from the staging buffer to the device-local buffer

VkCommandBuffer copyCmd = vulkanDevice->createCommandBuffer(VK_COMMAND_BUFFER_LEVEL_PRIMARY, true);

VkBufferCopy copyRegion = {};

copyRegion.size = storageBufferSize;

vkCmdCopyBuffer(copyCmd, stagingBuffer.buffer, compute.storageBuffers.spheres.buffer, 1, &copyRegion);

vulkanDevice->flushCommandBuffer(copyCmd, queue, true);

stagingBuffer.destroy();

// Planes

std::vector<Plane> planes;

const float roomDim = 4.0f;

planes.push_back(newPlane(glm::vec3(0.0f, 1.0f, 0.0f), roomDim, glm::vec3(0.7647f), 32.0f));

planes.push_back(newPlane(glm::vec3(0.0f, -1.0f, 0.0f), roomDim, glm::vec3(0.7647f), 32.0f));

planes.push_back(newPlane(glm::vec3(0.0f, 0.0f, 1.0f), roomDim, glm::vec3(0.7647f), 32.0f));

planes.push_back(newPlane(glm::vec3(0.0f, 0.0f, -1.0f), roomDim, glm::vec3(0.7647f), 32.0f));

planes.push_back(newPlane(glm::vec3(-1.0f, 0.0f, 0.0f), roomDim, glm::vec3(0.7647f), 32.0f));

planes.push_back(newPlane(glm::vec3(1.0f, 0.0f, 0.0f), roomDim, glm::vec3(0.7647f), 32.0f));

storageBufferSize = planes.size() * sizeof(Plane);

// Staging buffer

vulkanDevice->createBuffer(

VK_BUFFER_USAGE_TRANSFER_SRC_BIT,

VK_MEMORY_PROPERTY_HOST_VISIBLE_BIT | VK_MEMORY_PROPERTY_HOST_COHERENT_BIT,

&stagingBuffer,

storageBufferSize,

planes.data());

vulkanDevice->createBuffer(

// The SSBO will be used as a storage buffer for the compute pipeline and as a vertex buffer in the graphics pipeline

VK_BUFFER_USAGE_VERTEX_BUFFER_BIT | VK_BUFFER_USAGE_STORAGE_BUFFER_BIT | VK_BUFFER_USAGE_TRANSFER_DST_BIT,

VK_MEMORY_PROPERTY_DEVICE_LOCAL_BIT,

&compute.storageBuffers.planes,

storageBufferSize);

// Copy from the staging buffer to the device-local buffer

copyCmd = vulkanDevice->createCommandBuffer(VK_COMMAND_BUFFER_LEVEL_PRIMARY, true);

copyRegion.size = storageBufferSize;

vkCmdCopyBuffer(copyCmd, stagingBuffer.buffer, compute.storageBuffers.planes.buffer, 1, &copyRegion);

vulkanDevice->flushCommandBuffer(copyCmd, queue, true);

stagingBuffer.destroy();

}
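The listing calls `newSphere` and `newPlane` without showing them. The sketch below is one plausible shape for these helpers, not the original code: the shared counter `currentId` and the stand-in `Vec3` type are assumptions. What matters is that ids are unique across spheres and planes, because the shader's renderScene compares a single objectID against both `spheres[i].id` and `planes[i].id`.

```cpp
#include <cstdint>

struct Vec3 { float x, y, z; };  // stand-in for glm::vec3 (assumption)

struct Sphere { Vec3 pos;    float radius;   Vec3 diffuse; float specular; std::uint32_t id; std::int32_t _pad[3]; };
struct Plane  { Vec3 normal; float distance; Vec3 diffuse; float specular; std::uint32_t id; std::int32_t _pad[3]; };

// Hypothetical counter shared by both helpers so that no sphere and plane get the same id.
static std::uint32_t currentId = 0;

Sphere newSphere(Vec3 pos, float radius, Vec3 diffuse, float specular) {
    Sphere sphere{};
    sphere.id = currentId++;
    sphere.pos = pos;
    sphere.radius = radius;
    sphere.diffuse = diffuse;
    sphere.specular = specular;
    return sphere;
}

Plane newPlane(Vec3 normal, float distance, Vec3 diffuse, float specular) {
    Plane plane{};
    plane.id = currentId++;
    plane.normal = normal;
    plane.distance = distance;
    plane.diffuse = diffuse;
    plane.specular = specular;
    return plane;
}
```

With this scheme the three spheres would receive ids 0 to 2 and the six planes ids 3 to 8, given the creation order in prepareStorageBuffers.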

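Note that this part only builds the buffers for the compute shader. The graphics pass merely displays the image the compute shader has produced, so its vertex and fragment shaders are very simple: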

// Vertex shader

#version 450

layout (location = 0) out vec2 outUV;

out gl_PerVertex

{

vec4 gl_Position;

};

void main()

{

outUV = vec2((gl_VertexIndex << 1) & 2, gl_VertexIndex & 2);

gl_Position = vec4(outUV * 2.0f + -1.0f, 0.0f, 1.0f);

}

// Fragment shader

#version 450

layout (binding = 0) uniform sampler2D samplerColor;

layout (location = 0) in vec2 inUV;

layout (location = 0) out vec4 outFragColor;

void main()

{

outFragColor = texture(samplerColor, vec2(inUV.s, 1.0 - inUV.t));

}
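The vertex shader has no vertex inputs: it derives everything from gl_VertexIndex. The sketch below replays the same bit trick on the CPU to show that indices 0, 1 and 2 form one oversized triangle covering the whole viewport. The function names are illustrative, and it is an assumption that the display pass issues a three-vertex draw, since the draw call is not shown in the post.

```cpp
struct Vec2 { float x, y; };

// Same bit trick as the vertex shader: index 0,1,2 -> UV (0,0), (2,0), (0,2).
Vec2 fullscreenUV(int vertexIndex) {
    return { static_cast<float>((vertexIndex << 1) & 2),
             static_cast<float>(vertexIndex & 2) };
}

// Clip-space position, computed as uv * 2 - 1 like gl_Position in the shader.
Vec2 fullscreenPos(int vertexIndex) {
    Vec2 uv = fullscreenUV(vertexIndex);
    return { uv.x * 2.0f - 1.0f, uv.y * 2.0f - 1.0f };
}
```

The resulting positions are (-1,-1), (3,-1) and (-1,3). The visible region [-1,1] x [-1,1] lies inside that triangle and receives UVs in [0,1], so no vertex buffer is needed for the display pass.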

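Next, we prepare a texture target that stores the output of the compute shader:

// Prepare a texture target that stores the output of the compute shader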

void prepareTextureTarget(vks::Texture *tex, uint32_t width, uint32_t height, VkFormat format)

{

// Get the device properties for the requested texture format

VkFormatProperties formatProperties;

vkGetPhysicalDeviceFormatProperties(physicalDevice, format, &formatProperties);

// Check that the requested image format supports image storage operations

assert(formatProperties.optimalTilingFeatures & VK_FORMAT_FEATURE_STORAGE_IMAGE_BIT);

// Prepare the blit target texture

tex->width = width;

tex->height = height;

VkImageCreateInfo imageCreateInfo = vks::initializers::imageCreateInfo();

imageCreateInfo.imageType = VK_IMAGE_TYPE_2D;

imageCreateInfo.format = format;

imageCreateInfo.extent = { width, height, 1 };

imageCreateInfo.mipLevels = 1;

imageCreateInfo.arrayLayers = 1;

imageCreateInfo.samples = VK_SAMPLE_COUNT_1_BIT;

imageCreateInfo.tiling = VK_IMAGE_TILING_OPTIMAL;

imageCreateInfo.initialLayout = VK_IMAGE_LAYOUT_UNDEFINED;

// The image will be sampled in the fragment shader and used as a storage target in the compute shader

imageCreateInfo.usage = VK_IMAGE_USAGE_SAMPLED_BIT | VK_IMAGE_USAGE_STORAGE_BIT;

imageCreateInfo.flags = 0;

VkMemoryAllocateInfo memAllocInfo = vks::initializers::memoryAllocateInfo();

VkMemoryRequirements memReqs;

VK_CHECK_RESULT(vkCreateImage(device, &imageCreateInfo, nullptr, &tex->image));

vkGetImageMemoryRequirements(device, tex->image, &memReqs);

memAllocInfo.allocationSize = memReqs.size;

memAllocInfo.memoryTypeIndex = vulkanDevice->getMemoryType(memReqs.memoryTypeBits, VK_MEMORY_PROPERTY_DEVICE_LOCAL_BIT);

VK_CHECK_RESULT(vkAllocateMemory(device, &memAllocInfo, nullptr, &tex->deviceMemory));

VK_CHECK_RESULT(vkBindImageMemory(device, tex->image, tex->deviceMemory, 0));

VkCommandBuffer layoutCmd = vulkanDevice->createCommandBuffer(VK_COMMAND_BUFFER_LEVEL_PRIMARY, true);

tex->imageLayout = VK_IMAGE_LAYOUT_GENERAL;

vks::tools::setImageLayout(

layoutCmd,

tex->image,

VK_IMAGE_ASPECT_COLOR_BIT,

VK_IMAGE_LAYOUT_UNDEFINED,

tex->imageLayout);

vulkanDevice->flushCommandBuffer(layoutCmd, queue, true);

// Create the sampler

VkSamplerCreateInfo sampler = vks::initializers::samplerCreateInfo();

sampler.magFilter = VK_FILTER_LINEAR;

sampler.minFilter = VK_FILTER_LINEAR;

sampler.mipmapMode = VK_SAMPLER_MIPMAP_MODE_LINEAR;

sampler.addressModeU = VK_SAMPLER_ADDRESS_MODE_CLAMP_TO_BORDER;

sampler.addressModeV = sampler.addressModeU;

sampler.addressModeW = sampler.addressModeU;

sampler.mipLodBias = 0.0f;

sampler.maxAnisotropy = 1.0f;

sampler.compareOp = VK_COMPARE_OP_NEVER;

sampler.minLod = 0.0f;

sampler.maxLod = 0.0f;

sampler.borderColor = VK_BORDER_COLOR_FLOAT_OPAQUE_WHITE;

VK_CHECK_RESULT(vkCreateSampler(device, &sampler, nullptr, &tex->sampler));

// Create the image view

VkImageViewCreateInfo view = vks::initializers::imageViewCreateInfo();

view.viewType = VK_IMAGE_VIEW_TYPE_2D;

view.format = format;

view.components = { VK_COMPONENT_SWIZZLE_R, VK_COMPONENT_SWIZZLE_G, VK_COMPONENT_SWIZZLE_B, VK_COMPONENT_SWIZZLE_A };

view.subresourceRange = { VK_IMAGE_ASPECT_COLOR_BIT, 0, 1, 0, 1 };

view.image = tex->image;

VK_CHECK_RESULT(vkCreateImageView(device, &view, nullptr, &tex->view));

// Initialize the descriptor for later use

tex->descriptor.imageLayout = tex->imageLayout;

tex->descriptor.imageView = tex->view;

tex->descriptor.sampler = tex->sampler;

tex->device = vulkanDevice;

}

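After that, we create the compute pipeline that generates the ray traced image:

// Prepare the compute pipeline that generates the ray traced image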

void prepareCompute()

{

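// Get a compute-capable device queue

// The createLogicalDevice function looks for a queue that supports compute and prefers queue families that support compute only

// Depending on the implementation, this may result in different queue family indices for graphics and compute,

// which requires proper synchronization (see the memory barriers in buildComputeCommandBuffer)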

VkDeviceQueueCreateInfo queueCreateInfo = {};

queueCreateInfo.sType = VK_STRUCTURE_TYPE_DEVICE_QUEUE_CREATE_INFO;

queueCreateInfo.pNext = NULL;

queueCreateInfo.queueFamilyIndex = vulkanDevice->queueFamilyIndices.compute;

queueCreateInfo.queueCount = 1;

vkGetDeviceQueue(device, vulkanDevice->queueFamilyIndices.compute, 0, &compute.queue);

std::vector<VkDescriptorSetLayoutBinding> setLayoutBindings = {

// Binding 0: storage image (ray traced output)

vks::initializers::descriptorSetLayoutBinding(

VK_DESCRIPTOR_TYPE_STORAGE_IMAGE,

VK_SHADER_STAGE_COMPUTE_BIT,

0),

// Binding 1: uniform buffer block

vks::initializers::descriptorSetLayoutBinding(

VK_DESCRIPTOR_TYPE_UNIFORM_BUFFER,

VK_SHADER_STAGE_COMPUTE_BIT,

1),

// Binding 2: shader storage buffer for the spheres

vks::initializers::descriptorSetLayoutBinding(

VK_DESCRIPTOR_TYPE_STORAGE_BUFFER,

VK_SHADER_STAGE_COMPUTE_BIT,

2),

// Binding 3: shader storage buffer for the planes

vks::initializers::descriptorSetLayoutBinding(

VK_DESCRIPTOR_TYPE_STORAGE_BUFFER,

VK_SHADER_STAGE_COMPUTE_BIT,

3)

};

VkDescriptorSetLayoutCreateInfo descriptorLayout =

vks::initializers::descriptorSetLayoutCreateInfo(

setLayoutBindings.data(),

setLayoutBindings.size());

VK_CHECK_RESULT(vkCreateDescriptorSetLayout(device, &descriptorLayout, nullptr, &compute.descriptorSetLayout));

VkPipelineLayoutCreateInfo pPipelineLayoutCreateInfo =

vks::initializers::pipelineLayoutCreateInfo(

&compute.descriptorSetLayout,

1);

VK_CHECK_RESULT(vkCreatePipelineLayout(device, &pPipelineLayoutCreateInfo, nullptr, &compute.pipelineLayout));

VkDescriptorSetAllocateInfo allocInfo =

vks::initializers::descriptorSetAllocateInfo(

descriptorPool,

&compute.descriptorSetLayout,

1);

VK_CHECK_RESULT(vkAllocateDescriptorSets(device, &allocInfo, &compute.descriptorSet));

std::vector<VkWriteDescriptorSet> computeWriteDescriptorSets =

{

// Binding 0: output storage image

vks::initializers::writeDescriptorSet(

compute.descriptorSet,

VK_DESCRIPTOR_TYPE_STORAGE_IMAGE,

0,

&textureComputeTarget.descriptor),

// Binding 1: uniform buffer block

vks::initializers::writeDescriptorSet(

compute.descriptorSet,

VK_DESCRIPTOR_TYPE_UNIFORM_BUFFER,

1,

&compute.uniformBuffer.descriptor),

// Binding 2: shader storage buffer for the spheres

vks::initializers::writeDescriptorSet(

compute.descriptorSet,

VK_DESCRIPTOR_TYPE_STORAGE_BUFFER,

2,

&compute.storageBuffers.spheres.descriptor),

// Binding 3: shader storage buffer for the planes

vks::initializers::writeDescriptorSet(

compute.descriptorSet,

VK_DESCRIPTOR_TYPE_STORAGE_BUFFER,

3,

&compute.storageBuffers.planes.descriptor)

};

vkUpdateDescriptorSets(device, computeWriteDescriptorSets.size(), computeWriteDescriptorSets.data(), 0, NULL);

// Create the compute shader pipeline

VkComputePipelineCreateInfo computePipelineCreateInfo =

vks::initializers::computePipelineCreateInfo(

compute.pipelineLayout,

0);

computePipelineCreateInfo.stage = loadShader(getAssetPath() + "shaders/computeraytracing/raytracing.comp.spv", VK_SHADER_STAGE_COMPUTE_BIT);

VK_CHECK_RESULT(vkCreateComputePipelines(device, pipelineCache, 1, &computePipelineCreateInfo, nullptr, &compute.pipeline));

// Separate command pool, as the queue family for compute may differ from the one used for graphics

VkCommandPoolCreateInfo cmdPoolInfo = {};

cmdPoolInfo.sType = VK_STRUCTURE_TYPE_COMMAND_POOL_CREATE_INFO;

cmdPoolInfo.queueFamilyIndex = vulkanDevice->queueFamilyIndices.compute;

cmdPoolInfo.flags = VK_COMMAND_POOL_CREATE_RESET_COMMAND_BUFFER_BIT;

VK_CHECK_RESULT(vkCreateCommandPool(device, &cmdPoolInfo, nullptr, &compute.commandPool));

// Create a command buffer for compute operations

VkCommandBufferAllocateInfo cmdBufAllocateInfo =

vks::initializers::commandBufferAllocateInfo(

compute.commandPool,

VK_COMMAND_BUFFER_LEVEL_PRIMARY,

1);

VK_CHECK_RESULT(vkAllocateCommandBuffers(device, &cmdBufAllocateInfo, &compute.commandBuffer));

// Fence for synchronizing the compute command buffer

VkFenceCreateInfo fenceCreateInfo = vks::initializers::fenceCreateInfo(VK_FENCE_CREATE_SIGNALED_BIT);

VK_CHECK_RESULT(vkCreateFence(device, &fenceCreateInfo, nullptr, &compute.fence));

// Build a command buffer containing the compute dispatch commands (similar to the image processing example in the previous post)

buildComputeCommandBuffer();

}
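buildComputeCommandBuffer is not listed in this post. Its key step is the dispatch, whose group counts must match the 16 x 16 local size declared in the compute shader. The helper below is a hypothetical sketch of that arithmetic; `groupCount` and `kLocalSize` are illustrative names, not part of the original sample.

```cpp
#include <cstdint>

// Must match local_size_x / local_size_y = 16 in the compute shader.
constexpr std::uint32_t kLocalSize = 16;

// Number of workgroups needed to cover `pixels` along one axis (rounds up).
constexpr std::uint32_t groupCount(std::uint32_t pixels, std::uint32_t localSize = kLocalSize) {
    return (pixels + localSize - 1) / localSize;
}
```

The results would be passed as the x and y group counts of vkCmdDispatch, with z set to 1. If the target size is not a multiple of 16, the rounded-up dispatch launches invocations beyond the image edge, so the shader would then also need to skip those invocations.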

All the pipelines and buffers we need are now in place. Finally, let us look at the ray tracing compute shader in detail.

2. The Ray Tracing Compute Shader

Here is the full ray tracing compute shader:

#version 450

layout (local_size_x = 16, local_size_y = 16) in;

layout (binding = 0, rgba8) uniform writeonly image2D resultImage;

#define EPSILON 0.0001

// Maximum ray length

#define MAXLEN 1000.0

// Shadow strength

#define SHADOW 0.7

// Number of reflection bounces

#define RAYBOUNCES 20

// Enable reflections

#define REFLECTIONS true

// Reflection strength

#define REFLECTIONSTRENGTH 0.4

// Reflection falloff

#define REFLECTIONFALLOFF 0.6

struct Camera

{

vec3 pos;

vec3 lookat;

float fov;

};

layout (binding = 1) uniform UBO

{

vec3 lightPos;

float aspectRatio;

vec4 fogColor;

Camera camera;

mat4 rotMat;

} ubo;

struct Sphere

{

vec3 pos;

float radius;

vec3 diffuse;

float specular;

int id;

};

struct Plane

{

vec3 normal;

float distance;

vec3 diffuse;

float specular;

int id;

};

layout (std140, binding = 2) buffer Spheres

{

Sphere spheres[ ];

};

layout (std140, binding = 3) buffer Planes

{

Plane planes[ ];

};

// Reflect the ray about the surface normal

void reflectRay(inout vec3 rayD, in vec3 normal)

{

rayD = rayD + 2.0 * -dot(normal, rayD) * normal;

}

// Diffuse lighting

float lightDiffuse(vec3 normal, vec3 lightDir)

{

return clamp(dot(normal, lightDir), 0.1, 1.0);

}

// Specular lighting

float lightSpecular(vec3 normal, vec3 lightDir, float specularFactor)

{

vec3 viewVec = normalize(ubo.camera.pos);

vec3 halfVec = normalize(lightDir + viewVec);

return pow(clamp(dot(normal, halfVec), 0.0, 1.0), specularFactor);

}

// Ray-sphere intersection

float sphereIntersect(in vec3 rayO, in vec3 rayD, in Sphere sphere)

{

vec3 oc = rayO - sphere.pos;

float b = 2.0 * dot(oc, rayD);

float c = dot(oc, oc) - sphere.radius*sphere.radius;

float h = b*b - 4.0*c;

if (h < 0.0)

{

return -1.0;

}

float t = (-b - sqrt(h)) / 2.0;

return t;

}

vec3 sphereNormal(in vec3 pos, in Sphere sphere)

{

return (pos - sphere.pos) / sphere.radius;

}

// Ray-plane intersection

float planeIntersect(vec3 rayO, vec3 rayD, Plane plane)

{

float d = dot(rayD, plane.normal);

if (d == 0.0)

return 0.0;

float t = -(plane.distance + dot(rayO, plane.normal)) / d;

if (t < 0.0)

return 0.0;

return t;

}

// Intersect the ray with the whole scene

int intersect(in vec3 rayO, in vec3 rayD, inout float resT)

{

int id = -1;

for (int i = 0; i < spheres.length(); i++)

{

float tSphere = sphereIntersect(rayO, rayD, spheres[i]);

if ((tSphere > EPSILON) && (tSphere < resT))

{

id = spheres[i].id;

resT = tSphere;

}

}

for (int i = 0; i < planes.length(); i++)

{

float tplane = planeIntersect(rayO, rayD, planes[i]);

if ((tplane > EPSILON) && (tplane < resT))

{

id = planes[i].id;

resT = tplane;

}

}

return id;

}

// Compute the shadow factor

float calcShadow(in vec3 rayO, in vec3 rayD, in int objectId, inout float t)

{

for (int i = 0; i < spheres.length(); i++)

{

if (spheres[i].id == objectId)

continue;

float tSphere = sphereIntersect(rayO, rayD, spheres[i]);

if ((tSphere > EPSILON) && (tSphere < t))

{

t = tSphere;

return SHADOW;

}

}

return 1.0;

}

vec3 fog(in float t, in vec3 color)

{

return mix(color, ubo.fogColor.rgb, clamp(sqrt(t*t)/20.0, 0.0, 1.0));

}

vec3 renderScene(inout vec3 rayO, inout vec3 rayD, inout int id)

{

vec3 color = vec3(0.0);

float t = MAXLEN;

// Get the id of the intersected object

int objectID = intersect(rayO, rayD, t);

if (objectID == -1)

{

return color;

}

vec3 pos = rayO + t * rayD;

vec3 lightVec = normalize(ubo.lightPos - pos);

vec3 normal;

// Planes

for (int i = 0; i < planes.length(); i++)

{

if (objectID == planes[i].id)

{

normal = planes[i].normal;

float diffuse = lightDiffuse(normal, lightVec);

float specular = lightSpecular(normal, lightVec, planes[i].specular);

color = diffuse * planes[i].diffuse + specular;

}

}

// Spheres

for (int i = 0; i < spheres.length(); i++)

{

if (objectID == spheres[i].id)

{

normal = sphereNormal(pos, spheres[i]);

float diffuse = lightDiffuse(normal, lightVec);

float specular = lightSpecular(normal, lightVec, spheres[i].specular);

color = diffuse * spheres[i].diffuse + specular;

}

}

if (id == -1)

return color;

id = objectID;

// Shadows

t = length(ubo.lightPos - pos);

color *= calcShadow(pos, lightVec, id, t);

// Fog

//color = fog(t, color);

// Reflect ray for next render pass

reflectRay(rayD, normal);

rayO = pos;

return color;

}

void main()

{

// Query the dimensions of the image

ivec2 dim = imageSize(resultImage);

// gl_GlobalInvocationID is the global index of the current invocation, i.e. the index of the current thread; here it serves as the pixel coordinate.

vec2 uv = vec2(gl_GlobalInvocationID.xy) / dim;

vec3 rayO = ubo.camera.pos;

vec3 rayD = normalize(vec3((-1.0 + 2.0 * uv) * vec2(ubo.aspectRatio, 1.0), -1.0));

// primary ray

int id = 0;

vec3 finalColor = renderScene(rayO, rayD, id);

// Reflections enabled?

if (REFLECTIONS)

{

float reflectionStrength = REFLECTIONSTRENGTH;

for (int i = 0; i < RAYBOUNCES; i++)

{

// secondary ray

vec3 reflectionColor = renderScene(rayO, rayD, id);

finalColor = (1.0 - reflectionStrength) * finalColor + reflectionStrength * mix(reflectionColor, finalColor, 1.0 - reflectionStrength);

reflectionStrength *= REFLECTIONFALLOFF;

}

}

// Write one texel to the image

imageStore(resultImage, ivec2(gl_GlobalInvocationID.xy), vec4(finalColor, 0.0));

}

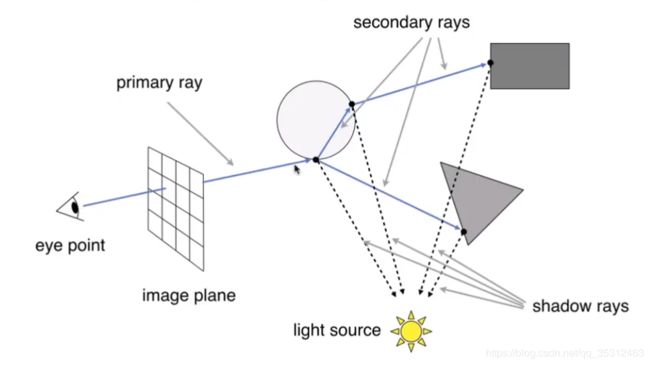

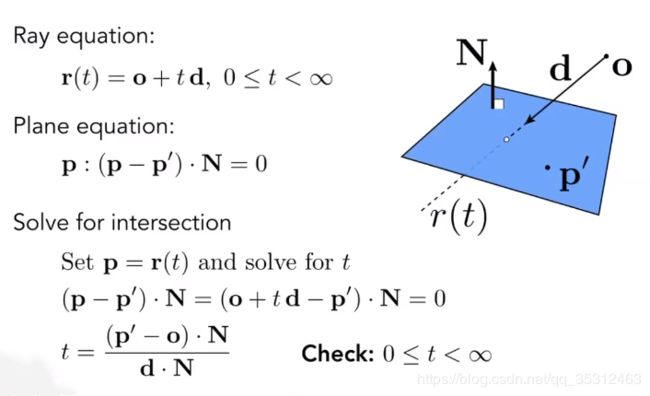

First, the basic principle of ray tracing, as shown in the figure below:

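For every pixel we cast a ray, compute its intersections with the objects in the scene, and combine the colors at those intersection points into the color of that pixel.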
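As a rough illustration of the per-pixel ray, the sketch below ports the ray setup from the shader's main() to C++. `Vec3`, `normalize` and `primaryRayDir` are local stand-ins written for this example; as in the shader, the ray looks straight down the negative Z axis and only the x component is scaled by the aspect ratio.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 normalize(Vec3 v) {
    float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return { v.x / len, v.y / len, v.z / len };
}

// Mirrors main(): uv in [0,1) is mapped to [-1,1), x is scaled by the aspect ratio,
// and the ray points down -Z.
Vec3 primaryRayDir(unsigned px, unsigned py, unsigned width, unsigned height, float aspectRatio) {
    float u = static_cast<float>(px) / static_cast<float>(width);
    float v = static_cast<float>(py) / static_cast<float>(height);
    return normalize({ (-1.0f + 2.0f * u) * aspectRatio, -1.0f + 2.0f * v, -1.0f });
}
```

For the center pixel, u and v are both 0.5, so the direction is exactly (0, 0, -1). Pixels toward the edges tilt the ray by up to 45 degrees vertically and by more horizontally on wide viewports.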

Next come two basic intersection problems. Elementary math is all this part of the code requires:

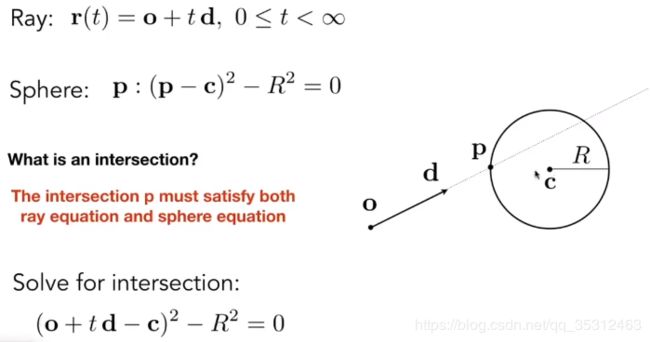

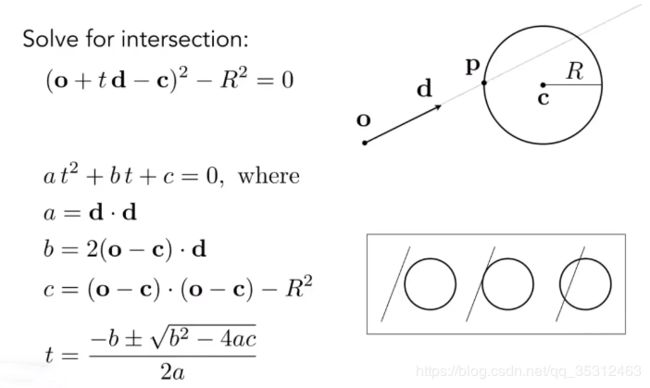

2.1 Ray-Sphere Intersection

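Start from the definitions in the figure above: a point that lies both on the sphere and on the ray is an intersection of the two, so combining the two equations gives the figure below: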

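As the figure shows, we only need to solve the quadratic equation and take the smaller root, which is the nearest intersection. This corresponds to the sphereIntersect function in the compute shader.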
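To make the quadratic concrete: with a normalized ray direction, substituting the ray o + t*d into the sphere equation |p - center|^2 = r^2 gives t^2 + b*t + c = 0, where b = 2*dot(oc, d), c = dot(oc, oc) - r^2 and oc = o - center. The sketch below is a C++ port of the shader's sphereIntersect; the small vector helpers are stand-ins written for this example.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };
struct Sphere { Vec3 pos; float radius; };

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 sub(Vec3 a, Vec3 b) { return { a.x - b.x, a.y - b.y, a.z - b.z }; }

// Returns the smaller root of t^2 + b*t + c = 0 (the nearest hit), or -1 on a miss.
// rayD is assumed to be normalized, as in the shader.
float sphereIntersect(Vec3 rayO, Vec3 rayD, const Sphere& sphere) {
    Vec3 oc = sub(rayO, sphere.pos);
    float b = 2.0f * dot(oc, rayD);
    float c = dot(oc, oc) - sphere.radius * sphere.radius;
    float h = b * b - 4.0f * c;  // discriminant
    if (h < 0.0f) {
        return -1.0f;            // no real root: the ray misses the sphere
    }
    return (-b - std::sqrt(h)) / 2.0f;
}
```

For a ray starting at (0, 0, 4) and pointing down -Z toward a unit sphere at the origin, b = -8, c = 15 and the discriminant is 4, so t = (8 - 2) / 2 = 3: the ray hits the sphere surface at z = 1.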

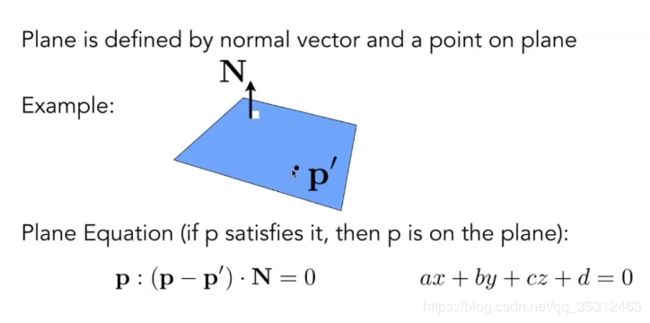

2.2 Ray-Plane Intersection

From the definition we can write down the equation and then solve it:

This yields the solution, which corresponds to the planeIntersect function in the compute shader.
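As read off the shader code, a plane is the set of points p with dot(p, normal) + distance = 0. Substituting the ray o + t*d gives t = -(distance + dot(o, normal)) / dot(d, normal). The sketch below ports planeIntersect to C++ with local stand-in types; like the shader, it returns 0 for "no hit", which the caller filters out with its t > EPSILON test.

```cpp
struct Vec3 { float x, y, z; };
struct Plane { Vec3 normal; float distance; };

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Returns the ray parameter t of the hit, or 0 if the ray is parallel to the plane
// or the plane lies behind the ray origin.
float planeIntersect(Vec3 rayO, Vec3 rayD, const Plane& plane) {
    float d = dot(rayD, plane.normal);
    if (d == 0.0f) {
        return 0.0f;  // ray is parallel to the plane
    }
    float t = -(plane.distance + dot(rayO, plane.normal)) / d;
    if (t < 0.0f) {
        return 0.0f;  // intersection is behind the ray origin
    }
    return t;
}
```

With normal (0, 1, 0) and distance 4 (the floor of the room at y = -4), a ray from the origin pointing straight down gives d = -1 and t = -(4 + 0) / -1 = 4.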

2.3 Computation Flow

First, here are the main parameters of the ray tracing compute shader; each is explained below:

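// Intersection tolerance

#define EPSILON 0.0001

// Maximum ray length

#define MAXLEN 1000.0

// Shadow strength

#define SHADOW 0.7

// Number of reflection bounces

#define RAYBOUNCES 3

// Enable reflections

#define REFLECTIONS false

// Reflection strength

#define REFLECTIONSTRENGTH 0.4

// Reflection falloff

#define REFLECTIONFALLOFF 0.6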

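Overall, we first cast a ray from the camera position through each pixel and call renderScene once to render the scene. If we stop at this basic pass and ignore rays bouncing off objects (that is, REFLECTIONS is set to false), running the program gives the following result: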

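As you can see, this is equivalent to the shadow effect handled in earlier posts. A single primary-ray pass also takes care of what depth testing does in rasterization.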

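Next we consider reflection: when a ray hits an object, it bounces off as a perfect mirror reflection. This is the core idea of Whitted-style ray tracing. We also need to account for energy loss (REFLECTIONFALLOFF 0.6) and reflectivity (REFLECTIONSTRENGTH 0.4), and we manually cap the number of bounces (RAYBOUNCES 3).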
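The mirror reflection itself is the shader's reflectRay: the new direction is d - 2*dot(n, d)*n, where n is the unit surface normal. The sketch below ports it to C++ with a local `Vec3` stand-in and returns the result instead of modifying the argument in place.

```cpp
struct Vec3 { float x, y, z; };

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Mirror direction d about the unit normal n: r = d - 2 * dot(n, d) * n.
Vec3 reflectRay(Vec3 d, Vec3 n) {
    float k = 2.0f * dot(n, d);
    return { d.x - k * n.x, d.y - k * n.y, d.z - k * n.z };
}
```

A ray travelling along (1, -1, 0) that hits a floor with normal (0, 1, 0) leaves along (1, 1, 0): the component along the normal flips sign while the tangential component is unchanged.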

The main function of the compute shader shows how secondary rays are handled:

// Reflections enabled?

if (REFLECTIONS)

{

float reflectionStrength = REFLECTIONSTRENGTH;

for (int i = 0; i < RAYBOUNCES; i++)

{

// secondary ray

vec3 reflectionColor = renderScene(rayO, rayD, id);

finalColor = (1.0 - reflectionStrength) * finalColor + reflectionStrength * mix(reflectionColor, finalColor, 1.0 - reflectionStrength);

reflectionStrength *= REFLECTIONFALLOFF;

}

}

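With reflection strength and falloff taken into account, the result is accumulated over the configured number of bounces, and the final color is stored in the corresponding texel for the graphics shaders to sample and display. Running the program gives the following result: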

The surrounding walls now clearly reflect the differently colored spheres in the scene.

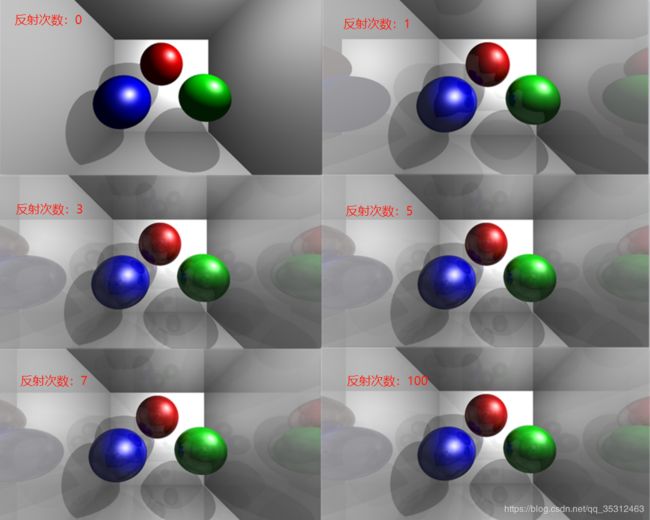

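One last remark: the number of bounces is fixed by hand here. In a physically faithful setup we would trace reflections recursively until the reflected ray reaches a light source, but then the running time cannot be bounded, so we set the bounce count manually. With attenuation taken into account, about 7 bounces already gives good results. The figure below compares different bounce counts so you can judge for yourself: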