YOLO v2从原理到tensorflow2复现

目录

原理篇:

一、YOLO v1回顾

二、YOLO v2介绍

代码篇

三、YOLO v2的tensorflow2实现

原理篇:

一、YOLO v1回顾

下图是YOLO v1的整体网络框架图:

●主要的检测思路:

1.首先使用CNN对输入图片提取出尺寸为S*S的特征图,特征图上每个像素点映射回原图就表示原图的1个区域,所以该特征图可以把原图分成S*S个网格区域。

2.如果原图上某个目标的中心点落在某个区域上,那么这个区域就负责预测该目标的信息,包括(bounding box,目标置信度,目标类别概率)

3.进一步说,方法对每个网格区域预测B个bounding boxes(x y w h和这些boxes的置信度confidence共5个数值,以及C个类别概率(onehot形式)。网络最后输出的是形状为S*S*(B*5+C)的tensor。原文中B=2,对PASCAL VOC数据集来讲C=20。其中,x y表示物体的中心点坐标与对应网格左上角的偏差,需要除以网格大小,从而归一化到0-1。w h是除以图像的宽高,归一化到0-1。

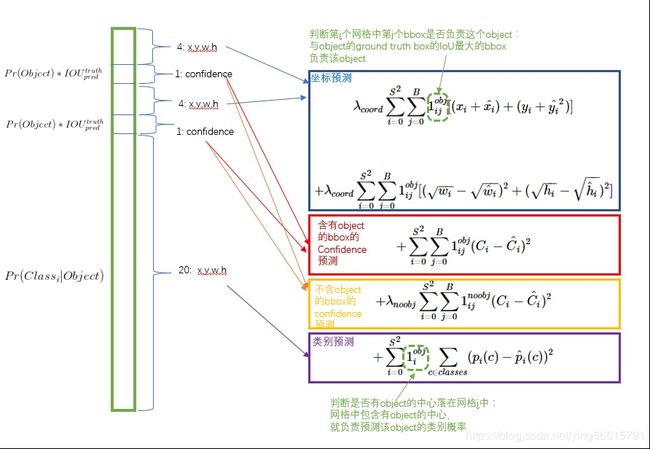

●该方法的损失函数如下:(图片来自网络)

由上图可以看出,预测的类别概率是被两个bounding box共享的,也就是说,一个网格只能预测一种目标。

●训练阶段:

对每个网格:

如果没有ground truth(目标物体)落入该网格:

1.只需要计算上图的第3项橙色框里面的置信度损失,target为0;

如果有ground truth落入该网格:

1.对该网格计算上图的第4项紫色框里面的类别概率损失。

2.计算该ground truth与网格中哪个预测的bounding box(一共B个)的iou最大,选取iou最大的那个bounding box,计算与ground truth的坐标回归损失以及置信度损失(上图的第1蓝色框、2红色框项)(这里置信度损失的target为预测的box和ground truth的iou)

3.对于另一个没被选取的bounding box,还需要计算上图的第3项橙色框里面的置信度损失。

●测试阶段:

1.将每个网格预测的类别概率分别和其中的B个bounding box的置信度相乘,得到预测的每个bounding box的class-specific confidence score:(下面公式来自原文)

![]()

等式左边第一项就是每个网格预测的类别信息,每个 bounding box 预测的 confidence其实就是第二、三项的乘积。这个score即encode 了预测的 box 属于某一类的概率,也有该 box 准确度的信息。

2.对每个网格的每个bbox执行同样操作,得到7*7*2=98 bbox个 class-specific confidence score。

3.得到每个 box 的 class-specific confidence score 以后,设置阈值,滤掉得分低的 boxes,对保留的 boxes 进行 NMS 处理,就得到最终的检测结果。

●YOLO v1的缺点:

1.YOLO对相互靠的很近的物体,还有很小的群体 检测效果不好,这是因为一个网格中只预测了两个框,并且只属于一类。

2.同一类物体出现的新的不常见的长宽比和其他情况时,泛化能力偏弱。

3.由于损失函数的问题,定位误差是影响检测效果的主要原因。尤其是大小物体的处理上,还有待加强。

4.由于输出层为全连接层,因此在检测时,YOLO训练模型只支持与训练图像相同的输入分辨率。

二、YOLO v2介绍

YOLO v2是在YOLO v1上的改进,原文分better、faster、stronger三部分介绍,这里只介绍better部分,下面直接放上better部分改进的点以及相应的提升有多大:(图片来自原文)

●下面简单介绍这些改进点。

1.batch norm,即引入了bn层

2.hi-res classifier:

2.1.先用224*224的ImageNet预训练分类网络

2.2.再使用448*448的ImageNet微调分类网络10epochs

2.3.最后在检测数据集上微调这个网络。

3.convolutional,去掉了YOLO v1的全连接层,改为卷积层。

4.anchor boxes,

4.1 对每个网格引入k个anchor boxes,这样就为每个目标引入了各种不同长宽比和不同面积大小的box

4.2 训练阶段构建样本时,GT(ground truth)会落入某个网格,每个网格有k个anchor boxes,选取与该GT的iou最大的那个anchor box作为正样本。注意,这里计算iou时只考虑anchor boxes的长宽,选择的时候其实直接把GT box与anchor box左上角对齐直接计算IOU就可以了。

4.3 这里再进一步解释下,最后我们需要得到的是真实box的中心点坐标和长宽。根据后面的坐标预测的映射函数就可以得知:最终预测的box的中心点坐标是和网格坐标有关,预测的box与anchor box有关联的只是长宽。

5.new network,引入了Darknet-19,中间只有卷积和池化层。

6.dimension priors,通过对数据集所有的目标框聚类,找到合适的anchor数目k(聚类的个数,对于PASCOL VOC,文中给的是5),以及anchor的宽高(聚类时只考虑目标框的长宽,两个框的iou越高表示距离越近)

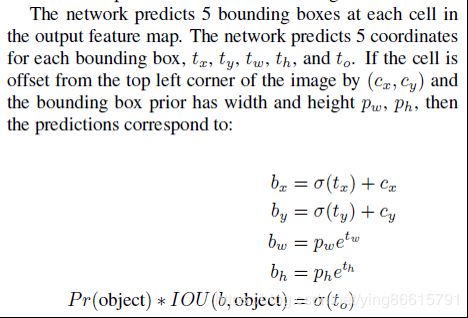

7.location prediction,不用faster rcnn那个坐标映射公式,因为会导致预测的box可能落在图像上任何一个位置。使用以下映射公式: ,

,

tx,ty经过sigmoid函数归一化到0-1,表示相对单个网格宽高的占比,如下图,cx和cy表示一个cell和图像左上角的横纵距离;pw和ph表示bounding box的宽高。黑色虚线框是anchor box,蓝色矩形框就是预测结果。

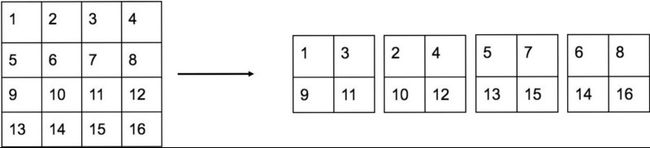

8.passthrough,添加了passthrough层,作用是将前面大尺寸的特征图做变换后,和后面的小尺寸的特征图连接,有助于检测小尺寸的目标。passthrough layer示例:(图片来自网络)

9.multi-scale,简单讲就是在训练时输入图像的size是动态变化的。

10.hi-res detector,没详细看到,应该就是模型训练完毕之后使用高分辨率的图片进行检测。

●损失函数说明(图片来自网络)

损失函数的解析参考1这里和2这里以及3这里。

1.经过对PASCAL VOC的目标框聚类,anchor boxes个数设为5,也就是每个网格预测5个boxes,这些boxes是在预设的anchor boxes上做修正的。与YOLO v1不同的是,预测的各个box之间类别向量是独立的,也就是一个网格可以预测5个不同的目标。最终的输出向量的shape为[batch_size, m, m, 5, 25](m表示最后特征图大小, m*m表示网格数量, 25=4个坐标值+1个box置信度+20个类别概率)

2.和YOLO v1类似,需要在模型训练前,事先根据各个anchor box和GT的iou判断该anchor box是否包含目标。

3.第一行是计算不包含目标的boxes的置信度损失,因为不含有目标,所以target为0.

4.第二行是计算先验框(anchor boxes?)与预测框的坐标回归损失,但是只在前12800个iterations间计算,我觉得这项应该是在训练前期使预测框快速学习到先验框的形状(靠近预设的anchor box, 但是anchor box是xy信息哪里来?)

5.第三、四、五行是计算含有目标框的boxes的坐标回归损失,box置信度损失(target是预测的box和GTbox的iou)以及类别损失。

可以看出,损失组成和YOLO v1差不多。【2019.11.22最新发现, main.py里xywh loss计算方式不对, 应该是在t上计算的, 也就是模型的原始输出, 还没经过坐标变换】

代码篇

三、YOLO v2的tensorflow2实现

代码主要参考这里

首先准备数据集:

根据论文作者的github里面贴出来的,下载PASCOL VOC 2007的train、val和test,以及2012的train、val。全部解压后,会出现文件夹VOCdevkit,数据都放里面了。

1.数据集构造

两种方式,

一种是先生成tfrecords,读取时再一个个的batch解析。调用时主函数是OB_tfrecord_dataset()

另一种是即时的从硬盘读取,不用生成tfrecords,不要要事先把每张图片的标签读进来。调用的主函数是OB_tensor_slices_dataset()

下面贴出第二种这部分主要代码:

def get_imgpath_and_annots(data_dir, years,

image_subdirectory = 'JPEGImages',

annotations_dir = 'Annotations',

ignore_difficult_instances = False):

annos_list = []

for year in years.keys():

logging.info('Reading from PASCAL %s dataset.', year)

sets = years[year]

for _set in sets:

# print('\nfor year: {}, set: {}'.format(year, _set))

examples_path = os.path.join(data_dir, year, 'ImageSets', 'Main',

_set + '.txt')

_annotations_dir = os.path.join(data_dir, year, annotations_dir)

_examples_list = dataset_util.read_examples_list(examples_path)

_annos_list = [os.path.join(_annotations_dir, example + '.xml') for example in _examples_list]

annos_list += _annos_list

img_names = []

max_obj = 200

annots = [] # 存放每个图片的boxes

print('walking annot for each img...')

for path in annos_list:

with tf.io.gfile.GFile(path, 'r') as fid:

xml_str = fid.read()

xml = etree.fromstring(xml_str)

data = dataset_util.recursive_parse_xml_to_dict(xml)['annotation']

width = int(data['size']['width'])

height = int(data['size']['height'])

boxes = []

if 'object' not in data:

continue

for obj in data['object']:

difficult = bool(int(obj['difficult']))

if ignore_difficult_instances and difficult:

continue

box = np.array([

float(obj['bndbox']['xmin']) / width,

float(obj['bndbox']['ymin']) / height,

float(obj['bndbox']['xmax']) / width,

float(obj['bndbox']['ymax']) / height,

VOC_NAME_LABEL[obj['name']]

])

boxes.append(box) # 一个图片的box可能有多个

boxes = np.stack(boxes)

annots.append(boxes)

img_path = os.path.join(data['folder'], image_subdirectory, data['filename'])

img_path = os.path.join(data_dir, img_path)

img_names.append(img_path)

print('done')

true_boxes = np.zeros([len(img_names), max_obj, 5])

for idx, boxes in enumerate(annots):

true_boxes[idx, :boxes.shape[0]] = boxes

return img_names, true_boxes

def parse_image(filename, true_boxes, img_h, img_w):

image = tf.io.read_file(filename)

image = tf.image.decode_jpeg(image)

image = tf.image.convert_image_dtype(image, tf.float32)

image = tf.image.resize(image, (img_h,img_w))

return image, true_boxes

def OB_tensor_slices_dataset(data_dir, years, batch_size, cfg, shuffle=False):

img_names, bboxes = get_imgpath_and_annots(data_dir, years,

image_subdirectory = 'JPEGImages',

annotations_dir = 'Annotations',

ignore_difficult_instances = False)

print('bboxes shape:',bboxes.shape)

dataset = tf.data.Dataset.from_tensor_slices((img_names, bboxes))

dataset = dataset.map(lambda x,y:parse_image(x, y, img_h=cfg.IMAGE_H, img_w=cfg.IMAGE_W))

if shuffle:

dataset = dataset.shuffle(buffer_size=500)

dataset = dataset.batch(batch_size)

dataset = dataset.prefetch(

buffer_size=tf.data.experimental.AUTOTUNE) #提前获取数据存在缓存里来减少gpu因为缺少数据而等待的情况

return dataset说明:OB_tensor_slices_dataset里面先获取所有图片的路径img_names(类型是list),和所有图片的标签bboxes。bboxes类型是array,shape为[len(img_names), 200, 5],由于一张图片可能存在多个目标,所以在get_imgpath_and_annots()里预设了一个目标上限max_obj=200。这样

2.模型构造

参考论文作者代码中给出的网络结构,在darknet19上做修改的。

下面贴出model.py的代码

import numpy as np

import tensorflow as tf

from tensorflow.keras.layers import Concatenate, concatenate, Dropout, LeakyReLU, Reshape, Activation, Conv2D, Input, MaxPooling2D, BatchNormalization, Flatten, Dense, Lambda

import struct

import tensorflow.keras.backend as K

def cal_iou(x1, y1, w1, h1, x2, y2, w2, h2):

'''

Calculate IOU between box1 and box2

Parameters

----------

- x, y : box center coords

- w : box width

- h : box height

Returns

-------

- IOU

'''

xmin1 = x1 - 0.5*w1

xmax1 = x1 + 0.5*w1

ymin1 = y1 - 0.5*h1

ymax1 = y1 + 0.5*h1

xmin2 = x2 - 0.5*w2

xmax2 = x2 + 0.5*w2

ymin2 = y2 - 0.5*h2

ymax2 = y2 + 0.5*h2

interx = np.minimum(xmax1, xmax2) - np.maximum(xmin1, xmin2)

intery = np.minimum(ymax1, ymax2) - np.maximum(ymin1, ymin2)

inter = interx * intery

union = w1*h1 + w2*h2 - inter

iou = inter / (union + 1e-6)

return iou

class WeightReader:

def __init__(self, weight_file):

with open(weight_file, 'rb') as w_f:

major, = struct.unpack('i', w_f.read(4))

minor, = struct.unpack('i', w_f.read(4))

revision, = struct.unpack('i', w_f.read(4))

if (major*10 + minor) >= 2 and major < 1000 and minor < 1000:

w_f.read(8)

else:

w_f.read(4)

transpose = (major > 1000) or (minor > 1000)

binary = w_f.read()

self.offset = 0

self.all_weights = np.frombuffer(binary, dtype='float32')

def read_bytes(self, size):

self.offset = self.offset + size

return self.all_weights[self.offset-size:self.offset]

def load_weights(self, model, iflast=False):

if iflast:

num_convs = 23

else:

num_convs = 22

for i in range(1,num_convs+1):

try:

conv_layer = model.get_layer('conv_' + str(i))

print("loading weights of convolution #" + str(i))

if i < 23:

norm_layer = model.get_layer('norm_' + str(i))

size = np.prod(norm_layer.get_weights()[0].shape)

beta = self.read_bytes(size) # bias

gamma = self.read_bytes(size) # scale

mean = self.read_bytes(size) # mean

var = self.read_bytes(size) # variance

weights = norm_layer.set_weights([gamma, beta, mean, var])

if len(conv_layer.get_weights()) > 1:

bias = self.read_bytes(np.prod(conv_layer.get_weights()[1].shape))

kernel = self.read_bytes(np.prod(conv_layer.get_weights()[0].shape))

kernel = kernel.reshape(list(reversed(conv_layer.get_weights()[0].shape)))

kernel = kernel.transpose([2,3,1,0])

conv_layer.set_weights([kernel, bias])

else:

kernel = self.read_bytes(np.prod(conv_layer.get_weights()[0].shape))

kernel = kernel.reshape(list(reversed(conv_layer.get_weights()[0].shape)))

kernel = kernel.transpose([2,3,1,0])

conv_layer.set_weights([kernel])

except ValueError:

print("no convolution #" + str(i))

if not iflast:

print('\ndoesn\'t load the last conv layer!\n')

layer = model.layers[-2] # last convolutional layer

layer.trainable = True

weights = layer.get_weights()

new_kernel = np.random.normal(size=weights[0].shape)/(13*13)

new_bias = np.random.normal(size=weights[1].shape)/(13*13)

layer.set_weights([new_kernel, new_bias])

def reset(self):

self.offset = 0

def space_to_depth_x2(x):

return tf.nn.space_to_depth(x, block_size=2)

def darknet_yolo(cfg):

input_image = tf.keras.layers.Input((cfg.IMAGE_H, cfg.IMAGE_W, 3), dtype='float32')

# Layer 1

x = Conv2D(32, (3,3), strides=(1,1), padding='same', name='conv_1', use_bias=False)(input_image)

x = BatchNormalization(name='norm_1')(x)

x = LeakyReLU(alpha=0.1)(x)

x = MaxPooling2D(pool_size=(2, 2))(x)

# Layer 2

x = Conv2D(64, (3,3), strides=(1,1), padding='same', name='conv_2', use_bias=False)(x)

x = BatchNormalization(name='norm_2')(x)

x = LeakyReLU(alpha=0.1)(x)

x = MaxPooling2D(pool_size=(2, 2))(x)

# Layer 3

x = Conv2D(128, (3,3), strides=(1,1), padding='same', name='conv_3', use_bias=False)(x)

x = BatchNormalization(name='norm_3')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 4

x = Conv2D(64, (1,1), strides=(1,1), padding='same', name='conv_4', use_bias=False)(x)

x = BatchNormalization(name='norm_4')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 5

x = Conv2D(128, (3,3), strides=(1,1), padding='same', name='conv_5', use_bias=False)(x)

x = BatchNormalization(name='norm_5')(x)

x = LeakyReLU(alpha=0.1)(x)

x = MaxPooling2D(pool_size=(2, 2))(x)

# Layer 6

x = Conv2D(256, (3,3), strides=(1,1), padding='same', name='conv_6', use_bias=False)(x)

x = BatchNormalization(name='norm_6')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 7

x = Conv2D(128, (1,1), strides=(1,1), padding='same', name='conv_7', use_bias=False)(x)

x = BatchNormalization(name='norm_7')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 8

x = Conv2D(256, (3,3), strides=(1,1), padding='same', name='conv_8', use_bias=False)(x)

x = BatchNormalization(name='norm_8')(x)

x = LeakyReLU(alpha=0.1)(x)

x = MaxPooling2D(pool_size=(2, 2))(x)

# Layer 9

x = Conv2D(512, (3,3), strides=(1,1), padding='same', name='conv_9', use_bias=False)(x)

x = BatchNormalization(name='norm_9')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 10

x = Conv2D(256, (1,1), strides=(1,1), padding='same', name='conv_10', use_bias=False)(x)

x = BatchNormalization(name='norm_10')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 11

x = Conv2D(512, (3,3), strides=(1,1), padding='same', name='conv_11', use_bias=False)(x)

x = BatchNormalization(name='norm_11')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 12

x = Conv2D(256, (1,1), strides=(1,1), padding='same', name='conv_12', use_bias=False)(x)

x = BatchNormalization(name='norm_12')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 13

x = Conv2D(512, (3,3), strides=(1,1), padding='same', name='conv_13', use_bias=False)(x)

x = BatchNormalization(name='norm_13')(x)

x = LeakyReLU(alpha=0.1)(x)

skip_connection = x # TensorShape([None, 26, 26, 512])

x = MaxPooling2D(pool_size=(2, 2))(x)

# Layer 14

x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_14', use_bias=False)(x)

x = BatchNormalization(name='norm_14')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 15

x = Conv2D(512, (1,1), strides=(1,1), padding='same', name='conv_15', use_bias=False)(x)

x = BatchNormalization(name='norm_15')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 16

x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_16', use_bias=False)(x)

x = BatchNormalization(name='norm_16')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 17

x = Conv2D(512, (1,1), strides=(1,1), padding='same', name='conv_17', use_bias=False)(x)

x = BatchNormalization(name='norm_17')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 18

x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_18', use_bias=False)(x)

x = BatchNormalization(name='norm_18')(x)

x = LeakyReLU(alpha=0.1)(x)

# removing the last convolutional layer and instead 把最后一层卷积层移除,

# adding on three 3x3 convolutional layers with 1024 filters 添加3个3*3卷积层,

# each followed by a final 1x1 convolutional layer with

# the number of outputs we need for detection. 最后跟上一个1*1的卷积层生成预测输出

# Layer 19

x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_19', use_bias=False)(x)

x = BatchNormalization(name='norm_19')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 20

x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_20', use_bias=False)(x)

x = BatchNormalization(name='norm_20')(x)

x = LeakyReLU(alpha=0.1)(x)

# We also add a passthrough layer from the 导数第二层前面加入passthrough layer

# final 3x3x512 layer to the second to last convolutional layer

# Layer 21

skip_connection = Conv2D(64, (1,1), strides=(1,1), padding='same', name='conv_21', use_bias=False)(skip_connection)

skip_connection = BatchNormalization(name='norm_21')(skip_connection)

skip_connection = LeakyReLU(alpha=0.1)(skip_connection)

# skip_connection = tf.nn.space_to_depth(skip_connection, 2) # passthrough layer就是这个操作

skip_connection = Lambda(space_to_depth_x2)(skip_connection)

x = concatenate([skip_connection, x])

# Layer 22

x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_22', use_bias=False)(x)

x = BatchNormalization(name='norm_22')(x)

x = LeakyReLU(alpha=0.1)(x)

# x = Dropout(0.7)(x) # add dropout

# Layer 23

x = Conv2D(cfg.NUM_ANCHORS * (4 + 1 + cfg.NUM_CLASSES), (1,1), strides=(1,1), padding='same', name='conv_23')(x)

predictions = Reshape((cfg.GRID_H, cfg.GRID_W, cfg.NUM_ANCHORS, 4 + 1 + cfg.NUM_CLASSES))(x)

model = tf.keras.models.Model(inputs=input_image, outputs=predictions)

return model说明:darknet_yolo()是获取模型的,WeightReader()是用来载入作者给的模型文件。

3.损失函数与训练

损失函数是最关键的部分。由于模型比较大,注意设置batch_size大小。yolov2-voc.weights是作者给的在VOC 2007+2012上训练的模型文件,更多信息可以参考这里。weight_reader用来读取这个模型文件,iflast表示是否读取最后一层(conv_23,分类层),这个类是参考这里的WeightReader实现的。

损失计算是在yolov2_loss()里实现的,需要注意,坐标回归损失是在最后m*m特征图的尺度上计算的。

下面贴出main.py部分代码

# -*- coding: utf-8 -*-

import os

os.environ["CUDA_VISIBLE_DEVICES"]="0"

import tensorflow as tf

gpus = tf.config.experimental.list_physical_devices(device_type='GPU')

for gpu in gpus:

tf.config.experimental.set_memory_growth(gpu, True)

import numpy as np

import tensorflow.keras.backend as K

from dataset import OB_tfrecord_dataset, OB_tensor_slices_dataset, transform

from utils import display_img, save_best_weights, log_loss

from model import darknet_yolo, WeightReader, cal_iou

from config import Config

import matplotlib.pyplot as plt

from tqdm import tqdm

def yolov2_loss(detector_mask, y_true_anchor_boxes, y_true_class_hot, y_true_boxes_all, y_pred, cfg):

"""

Calculate YOLO V2 loss from prediction (y_pred) and ground truth tensors

(detector_mask, y_true_anchor_boxes, y_true_class_hot, y_true_boxes_all,)

Parameters

----------

- detector_mask : tensor, shape (batch, size, GRID_W, GRID_H, anchors_count, 1)

1 if bounding box detected by grid cell, else 0

- y_true_anchor_boxes : tensor, shape (batch_size, GRID_W, GRID_H, anchors_count, 5)

Contains adjusted coords of bounding box in YOLO format

- y_true_class_hot : tensor, shape (batch_size, GRID_W, GRID_H, anchors_count, class_count)

One hot representation of bounding box label

- y_true_boxes_all : annotations : tensor (shape : batch_size, max annot, 5)

y_true_boxes_all format : x, y, w, h, c (coords unit : grid cell)

- y_pred : prediction from model. tensor (shape : batch_size, GRID_W, GRID_H, anchors count, (5 + labels count)

Returns

-------

- loss : scalar

"""

# 0-GRID_W -1 / GRID_H -1

cell_coord_x = tf.cast(tf.reshape(tf.tile(tf.range(cfg.GRID_W), [cfg.GRID_H]), (1, cfg.GRID_H, cfg.GRID_W, 1, 1)), tf.float32)

cell_coord_y = tf.transpose(cell_coord_x, (0,2,1,3,4))

cell_coords = tf.tile(tf.concat([cell_coord_x, cell_coord_y], -1), [y_pred.shape[0], 1, 1, 5, 1])

# 0-GRID_W / GRID_H

anchors = cfg.ANCHORS

# 0-GRID_W / GRID_H

pred_xy = K.sigmoid(y_pred[:,:,:,:,0:2])

pred_xy = pred_xy + cell_coords

pred_wh = K.exp(y_pred[:,:,:,:,2:4]) * anchors

#================ 1. 坐标损失

# 计算loss_wh损失时,需要根据gt的wh计算系数

# coordinate loss

lambda_wh = K.expand_dims(2-(y_true_anchor_boxes[:,:,:,:,2]/cfg.GRID_W) * (y_true_anchor_boxes[:,:,:,:,3]/cfg.GRID_H))

detector_mask = K.cast(detector_mask, tf.float32) # batch_size, GRID_W, GRID_H, n_anchors, 1

n_objs = K.sum( K.cast( detector_mask>0, tf.float32 ) )

loss_xy = cfg.LAMBDA_COORD * K.sum( detector_mask * K.square( y_true_anchor_boxes[:,:,:,:,0:2] - pred_xy)) / (n_objs + 1e-6)

loss_wh = cfg.LAMBDA_COORD * K.sum( lambda_wh * detector_mask * K.square( y_true_anchor_boxes[:,:,:,:,2:4] - pred_wh)) / (n_objs + 1e-6)

# loss_wh = cfg.LAMBDA_COORD * K.sum(detector_mask * K.square(K.sqrt(y_true_anchor_boxes[...,2:4]) -

# K.sqrt(pred_wh))) / (n_objs + 1e-6)

loss_coord = loss_xy + loss_wh

#================ 2. 类别损失

pred_class = K.softmax(y_pred[:,:,:,:,5:])

# y_true_class = tf.argmax(y_true_class_hot, -1)

# loss_cls = K.sparse_categorical_crossentropy(target=y_true_class, output=pred_class, from_logits=True)

# loss_cls = K.expand_dims(loss_cls, -1) * detector_mask

loss_cls = detector_mask * K.square( y_true_class_hot - pred_class )

loss_cls = cfg.LAMBDA_CLASS * K.sum(loss_cls) / (n_objs + 1e-6)

#================ 3. bbox置信度损失

#================ 3.1. 包含目标的预测的bbox置信度损失

# for each detector : iou between prediction and ground truth

x1 = y_true_anchor_boxes[...,0]

y1 = y_true_anchor_boxes[...,1]

w1 = y_true_anchor_boxes[...,2]

h1 = y_true_anchor_boxes[...,3]

x2 = pred_xy[...,0]

y2 = pred_xy[...,1]

w2 = pred_wh[...,0]

h2 = pred_wh[...,1]

ious = cal_iou(x1, y1, w1, h1, x2, y2, w2, h2)

ious = K.expand_dims(ious, -1)

# 在线计算预测的box和gtbox的iou作为置信度的target

# object confidence loss

pred_conf = K.sigmoid(y_pred[...,4:5])

loss_conf_obj = cfg.LAMBDA_OBJECT * K.sum(detector_mask * K.square(ious - pred_conf)) / (n_objs + 1e-6)

#================ 3.2. 不包含目标的预测的bbox置信度损失

# xmin, ymin, xmax, ymax of pred bbox

pred_xy = K.expand_dims(pred_xy, 4) # shape : batch_size, GRID_W, GRID_H, n_anchors, 1, 2

pred_wh = K.expand_dims(pred_wh, 4)

pred_wh_half = pred_wh / 2.

pred_mins = pred_xy - pred_wh_half

pred_maxes = pred_xy + pred_wh_half

# xmin, ymin, xmax, ymax of true bbox

true_boxe_shape = K.int_shape(y_true_boxes_all)

true_boxes_grid = K.reshape(y_true_boxes_all, [true_boxe_shape[0], 1, 1, 1, true_boxe_shape[1], true_boxe_shape[2]])

true_xy = true_boxes_grid[...,0:2] # shape: batch_size, 1, 1, 1, max_annot, 2

true_wh = true_boxes_grid[...,2:4] # shape: batch_size, 1, 1, 1, max_annot, 2

true_wh_half = true_wh * 0.5

true_mins = true_xy - true_wh_half

true_maxes = true_xy + true_wh_half

# 从预测的box中,计算每一个box与所有GTbox的IOU,找出最大的IOU,如果小于阈值(0.6,并且不负责GT,根据1 - detector_mask),该预测的box就加入noobj,计算置信度损失

intersect_mins = K.maximum(pred_mins, true_mins) # shape : batch_size, GRID_W, GRID_H, n_anchors, max_annot, 2

intersect_maxes = K.minimum(pred_maxes, true_maxes) # shape : batch_sizem, GRID_W, GRID_H, n_anchors, max_annot, 2

intersect_wh = K.maximum(intersect_maxes - intersect_mins, 0.) # shape : batch_size, GRID_W, GRID_H, n_anchors, max_annot, 1

intersect_areas = intersect_wh[..., 0] * intersect_wh[..., 1] # shape : batch_size, GRID_W, GRID_H, n_anchors, max_annot, 1

pred_areas = pred_wh[..., 0] * pred_wh[..., 1] # shape : batch_size, GRID_W, GRID_H, n_anchors, 1, 1

true_areas = true_wh[..., 0] * true_wh[..., 1] # shape : batch_size, GRID_W, GRID_H, n_anchors, max_annot, 1

union_areas = pred_areas + true_areas - intersect_areas

iou_scores = intersect_areas / union_areas # shape : batch_size, GRID_W, GRID_H, n_anchors, max_annot, 1

best_ious = K.max(iou_scores, axis=4) # Best IOU scores.

best_ious = K.expand_dims(best_ious) # shape : batch_size, GRID_W, GRID_H, n_anchors, 1

# no object confidence loss

no_object_detection = K.cast(best_ious < 0.6, K.dtype(best_ious))

noobj_mask = no_object_detection * (1 - detector_mask)

n_noobj = K.sum(tf.cast(noobj_mask > 0.0, tf.float32))

loss_conf_noobj = cfg.LAMBDA_NOOBJECT * K.sum(noobj_mask * K.square(-pred_conf)) / (n_noobj + 1e-6)

#================ 4. 三种损失汇总

# total confidence loss

loss_conf = loss_conf_noobj + loss_conf_obj

# total loss

loss = loss_conf + loss_cls + loss_coord

sub_loss = [loss_conf, loss_cls, loss_coord]

return loss, sub_loss

#=============== main从这里开始

#=============== prepare config and data

data_dir = r'D:\dataset\pascal_voc\VOCdevkit'

val_set = {'VOC2012':['val']}

train_set = {'VOC2007':['train', 'val', 'test'], 'VOC2012':['train']}

dset_train_path = 'data/train.tfrecord'

dset_val_path = 'data/val.tfrecord'

model_weights_path = 'weights/yolov2-voc.weights'

batch_size = 3

num_epochs = 10

num_iters = 1000

train_name = 'training_1'

cfg = Config()

# log (tensorboard)

summary_writer = tf.summary.create_file_writer(os.path.join('logs/', train_name), flush_millis=20000)

summary_writer.set_as_default()

# 用tfrecord的形式读取数据集

#dset_train = OB_tfrecord_dataset(dset_train_path, batch_size, cfg, shuffle=False)

#dset_val = OB_tfrecord_dataset(dset_val_path, batch_size, cfg, shuffle=True)

# 用tf.data.Dataset.from_tensor_slices的形式, 每次即时的从硬盘读取图片(首先会生成所有图片的Ground Truth)

dset_train = OB_tensor_slices_dataset(data_dir, train_set, batch_size, cfg, shuffle=True)

dset_val = OB_tensor_slices_dataset(data_dir, val_set, batch_size, cfg, shuffle=False)

len_batches_train = tf.data.experimental.cardinality(dset_train).numpy()

len_batches_val = tf.data.experimental.cardinality(dset_val).numpy()

#=============== prepare model

model = darknet_yolo(cfg)

weight_reader = WeightReader(model_weights_path)

weight_reader.load_weights(model, iflast=False)

#=============== prepare training

train_loss_history = []

val_loss_history = []

best_val_loss = 1e6

initial_learning_rate = 2e-5

decay_epochs = 30 * num_iters

lr_schedule = tf.keras.optimizers.schedules.ExponentialDecay(

initial_learning_rate,

decay_steps=decay_epochs,

decay_rate=0.5,

staircase=True)

#lr_schedule = tf.keras.optimizers.schedules.PiecewiseConstantDecay(

# [2,4], [2e-5,1e-5,2e-5])

optim = tf.keras.optimizers.Adam(learning_rate=1e-5, beta_1=0.9, beta_2=0.999, epsilon=1e-08)

#optim = tf.keras.optimizers.SGD(learning_rate=1e-4)

train_layers = ['conv_22', 'norm_22', 'conv_23']

train_vars = []

for name in train_layers:

train_vars += model.get_layer(name).trainable_variables

#raise ValueError

#=============== begin training

for epoch in range(num_epochs):

epoch_loss = []

epoch_sub_loss = []

epoch_val_loss = []

epoch_val_sub_loss = []

print('\nEpoch {} :'.format(epoch))

# train

# pbar = tqdm(total=len_batches_train)

for bs_idx, (x,y) in enumerate(dset_train):

x, detector_mask, y_true_anchor_boxes, y_true_class_hot, y_true_boxes_all = transform(x,y, cfg)

with tf.GradientTape() as tape:

y_pred = model(x, training=True)

loss, sub_loss = yolov2_loss(detector_mask, y_true_anchor_boxes, y_true_class_hot,

y_true_boxes_all, y_pred, cfg)

_loss = loss * 1.0

grads = tape.gradient(_loss, train_vars)

optim.apply_gradients(grads_and_vars = zip(grads, train_vars))

epoch_loss.append(loss)

epoch_sub_loss.append(sub_loss)

print('-', end='')

# pbar.update(1)

if (bs_idx+1)==num_iters: break

# pbar.close()

# val

# pbar = tqdm(total=len_batches_val)

for bs_idx, (x,y) in enumerate(dset_val):

x, detector_mask, y_true_anchor_boxes, y_true_class_hot, y_true_boxes_all = transform(x,y, cfg)

with tf.GradientTape() as tape:

y_pred = model(x, training=False)

loss, sub_loss = yolov2_loss(detector_mask, y_true_anchor_boxes, y_true_class_hot,

y_true_boxes_all, y_pred, cfg)

epoch_val_loss.append(loss)

epoch_val_sub_loss.append(sub_loss)

print('-', end='')

# pbar.update(1)

if (bs_idx+1)==1: break

# pbar.close()

# record

loss_avg = np.mean(np.array(epoch_loss))

sub_loss_avg = np.mean(np.array(epoch_sub_loss), axis=0)

val_loss_avg = np.mean(np.array(epoch_val_loss))

val_sub_loss_avg = np.mean(np.array(epoch_val_sub_loss), axis=0)

log_loss(loss_avg, val_loss_avg, step=epoch)

train_loss_history.append(loss_avg)

val_loss_history.append(val_loss_avg)

if val_loss_avg < best_val_loss:

print('\nfind better model for val')

best_model_path = save_best_weights(model, train_name, val_loss_avg)

best_val_loss = val_loss_avg

print(' \ntrain_loss={:.3f} (conf={:.3f}, class={:.3f}, coords={:.3f}), val_loss={:.3f} (conf={:.3f}, class={:.3f}, coords={:.3f})'.format(

loss_avg, sub_loss_avg[0], sub_loss_avg[1], sub_loss_avg[2],

val_loss_avg, val_sub_loss_avg[0], val_sub_loss_avg[1], val_sub_loss_avg[2]))

#save_best_weights(model, train_name, 666)

#=============== begin testing

model.load_weights(best_model_path)

for bs_idx, (x,y) in enumerate(dset_val):

x, detector_mask, y_true_anchor_boxes, y_true_class_hot, y_true_boxes_all = transform(x,y, cfg)

score_threshold = 0.5

iou_threshold = 0.45

display_img(x[0], model, score_threshold, iou_threshold, cfg)

if (bs_idx+1)==5: break

fig,ax = plt.subplots(1, figsize=(8,8))

ax.plot(train_loss_history[10:])

plt.show()4.一些相关参数

# -*- coding: utf-8 -*-

import numpy as np

class Config():

def __init__(self):

# 参考https://github.com/pjreddie/darknet/blob/master/cfg/yolov2-voc.cfg

# 注意,这个宽高是在最后特征图的尺度下的,不是在原图尺度上,在原图尺度上的话还要乘以步长32

# self.ANCHORS = [0.57273, 0.677385, 1.87446, 2.06253, 3.33843, 5.47434, 7.88282, 3.52778, 9.77052, 9.16828] # anchors coords unit : grid cell

self.ANCHORS = [1.3221, 1.73145, 3.19275, 4.00944, 5.05587, 8.09892, 9.47112, 4.84053, 11.2364, 10.0071]

# 0-GRID_W / GRID_H

self.ANCHORS = np.array(self.ANCHORS)

self.ANCHORS = self.ANCHORS.reshape(-1,2)

self.IMAGE_W = 416

self.IMAGE_H = 416

self.GRID_W = 13

self.GRID_H = 13

self.NUM_ANCHORS = 5

self.NUM_CLASSES = 20

self.LAMBDA_NOOBJECT = 1

self.LAMBDA_OBJECT = 5

self.LAMBDA_CLASS = 1

self.LAMBDA_COORD = 1

VOC_NAME_LABEL_CLASS = {

'none': (0, 'Background'),

'aeroplane': (1, 'Vehicle'),

'bicycle': (2, 'Vehicle'),

'bird': (3, 'Animal'),

'boat': (4, 'Vehicle'),

'bottle': (5, 'Indoor'),

'bus': (6, 'Vehicle'),

'car': (7, 'Vehicle'),

'cat': (8, 'Animal'),

'chair': (9, 'Indoor'),

'cow': (10, 'Animal'),

'diningtable': (11, 'Indoor'),

'dog': (12, 'Animal'),

'horse': (13, 'Animal'),

'motorbike': (14, 'Vehicle'),

'person': (15, 'Person'),

'pottedplant': (16, 'Indoor'),

'sheep': (17, 'Animal'),

'sofa': (18, 'Indoor'),

'train': (19, 'Vehicle'),

'tvmonitor': (20, 'Indoor'),

}

VOC_NAME_LABEL = {key:v[0] for key,v in VOC_NAME_LABEL_CLASS.items()}

VOC_LABEL_NAME = {v[0]:key for key,v in VOC_NAME_LABEL_CLASS.items()}

YOLO方法的代码细节很多没有在论文提,有疑问的话需要多查查资料。

以后会把整个工程push到github上。

待续。