Monitoring a Kubernetes Cluster with Prometheus Operator

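Environment:

- Prometheus Operator version: 0.29

- Kubernetes version: 1.14.0

- Project GitHub repository: https://github.com/coreos/kube-prometheus

I recommend downloading the source and installing from its manifests by hand rather than using Helm. Because of the Great Firewall, many of the images referenced in the bundled files cannot be pulled from inside China, so the files have to be edited anyway.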

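I. Introduction

1. About Kubernetes Operators

With Kubernetes, managing and scaling web applications, mobile backends, and API services has become fairly simple. These applications are generally stateless, so basic Kubernetes API objects such as Deployment can scale them and recover them from failures without any extra operational work.

Managing stateful applications such as databases, caches, or monitoring systems is harder. These systems need application-domain knowledge to scale and upgrade correctly, and to reconfigure effectively when data is lost or unavailable. We want that application-specific operational knowledge encoded in software, so that it can use Kubernetes to run and manage complex applications correctly.

An Operator is software that extends the Kubernetes API through the TPR mechanism (third-party resources, since superseded by CRDs) and builds application-specific knowledge into it, so that users can create, configure, and manage applications. Like Kubernetes' built-in resources, an Operator does not manage a single application instance but multiple instances across the cluster.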

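2. About Prometheus Operator

The Prometheus Operator for Kubernetes provides easy monitoring definitions for Kubernetes services, and handles the deployment and management of Prometheus instances.

Once installed, the Prometheus Operator provides the following features:

- Create/destroy: easily launch a Prometheus instance in a Kubernetes namespace, for a specific application or team, using the Operator.

- Simple configuration: configure the fundamentals of Prometheus, such as versions, persistence, retention policies, and replicas, from a native Kubernetes resource.

- Target services via labels: automatically generate monitoring target configurations from familiar Kubernetes label queries; there is no need to learn a Prometheus-specific configuration language.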

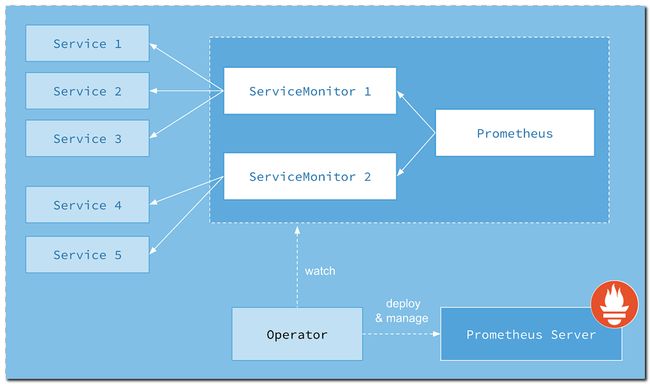

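3. Prometheus Operator architecture

- Operator: the Operator deploys and manages Prometheus Server according to custom resources (defined through Custom Resource Definitions, CRDs). It also watches those custom resources for change events and reacts to them. It is the control center of the whole system.

- Prometheus: the Prometheus resource declaratively describes the desired state of a Prometheus deployment.

- Prometheus Server: the Prometheus Server cluster that the Operator deploys from the content of a Prometheus custom resource. You can think of that custom resource as playing the role of a StatefulSet for the Prometheus Server cluster.

- ServiceMonitor: also a custom resource. It describes the list of targets that Prometheus monitors. It uses labels to select the matching Service endpoints, and Prometheus Server scrapes metrics through the selected Services.

- Service: the Service resource fronts the Pods in the cluster that expose metrics, so that a ServiceMonitor can select it and Prometheus Server can scrape it. Put simply, it is the thing Prometheus monitors, for example a Node Exporter Service or a MySQL Exporter Service.

- Alertmanager: also a custom resource type. The Operator deploys an Alertmanager cluster from the resource description.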
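
To make the ServiceMonitor-to-Service relationship above concrete, here is a minimal sketch that writes a ServiceMonitor manifest from the shell. The names in it (example-app, the app: example-app label, the port name metrics, the default namespace) are hypothetical and are not taken from kube-prometheus.

```shell
# Write a hypothetical ServiceMonitor manifest into a scratch directory.
# Every name below (example-app, the "metrics" port, the "default"
# namespace) is an illustrative assumption, not part of kube-prometheus.
workdir=$(mktemp -d)

cat > "$workdir/example-servicemonitor.yaml" <<'EOF'
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: example-app
  namespace: monitoring
spec:
  # Namespaces in which to look for matching Services.
  namespaceSelector:
    matchNames:
    - default
  # Select Services that carry this label.
  selector:
    matchLabels:
      app: example-app
  # Scrape the Service port named "metrics" every 30 seconds.
  endpoints:
  - port: metrics
    interval: 30s
EOF

echo "wrote $workdir/example-servicemonitor.yaml"
```

Such a file would be applied with kubectl apply -f once the Operator's CRDs exist. A Prometheus resource only picks it up if its serviceMonitorSelector matches the ServiceMonitor's labels.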

II. Change the Kubernetes configuration

This Kubernetes cluster was built with kubeadm, so kube-scheduler binds to 127.0.0.1 by default. The Prometheus deployed by the Operator scrapes it through the node IP, so we change the address kube-scheduler binds to 0.0.0.0.

Edit the /etc/kubernetes/manifests/kube-scheduler.yaml file:

$ vim /etc/kubernetes/manifests/kube-scheduler.yaml

Change the bind-address in command to 0.0.0.0:

......
spec:
  containers:
  - command:
    - kube-scheduler
    - --bind-address=0.0.0.0    # changed to 0.0.0.0
    - --kubeconfig=/etc/kubernetes/scheduler.conf
    - --leader-elect=true
......
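
If you would rather script this edit than make it in vim, the following is a rough sketch. The function name set_bind_address is made up for this example. It prints the rewritten manifest to stdout instead of editing in place, and the demo runs against a scratch file, not the live manifest.

```shell
# set_bind_address FILE ADDRESS
# Print FILE with the value of every --bind-address flag replaced by ADDRESS.
# (Hypothetical helper; it does not modify FILE.)
set_bind_address() {
  sed "s|--bind-address=[^[:space:]]*|--bind-address=$2|" "$1"
}

# Demo on a scratch copy that mimics the relevant part of the manifest.
demo=$(mktemp)
printf '%s\n' \
  'spec:' \
  '  containers:' \
  '  - command:' \
  '    - kube-scheduler' \
  '    - --bind-address=127.0.0.1' \
  '    - --leader-elect=true' > "$demo"

set_bind_address "$demo" 0.0.0.0
```

The kubelet watches the static Pod manifest directory and recreates kube-scheduler when the file changes, so it is safer to write the result to a temporary location first and to keep backup copies outside /etc/kubernetes/manifests.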

III. Fetch Prometheus Operator

First pull the source from GitHub, then use the Kubernetes YAML manifests the project already provides to install the bundled components, such as Grafana and Prometheus.

Clone the kube-prometheus source from GitHub:

$ git clone https://github.com/coreos/kube-prometheus.git

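IV. Sort the files

The YAML files all live in the manifests folder of the source tree, so we go there to apply them. They are all piled into one folder, which makes it awkward to apply them group by group, so we sort them into subfolders first.

Enter the manifests folder:

$ cd kube-prometheus/manifests/

Create the folders and sort the YAML files into them: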

# Create the folders
$ mkdir -p operator node-exporter alertmanager grafana kube-state-metrics prometheus serviceMonitor adapter

# Move the YAML files into their folders.
# Order matters: prometheus-adapter* must be moved before prometheus-*,
# which would otherwise match the adapter files as well.
$ mv *-serviceMonitor* serviceMonitor/
$ mv 0prometheus-operator* operator/
$ mv grafana-* grafana/
$ mv kube-state-metrics-* kube-state-metrics/
$ mv alertmanager-* alertmanager/
$ mv node-exporter-* node-exporter/
$ mv prometheus-adapter* adapter/
$ mv prometheus-* prometheus/
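
The same sorting can be wrapped in a small function so that it can be rerun safely. This is only a sketch: the name sort_manifests is made up, and it applies the patterns in the same order as the mv commands above.

```shell
# sort_manifests DIR
# Move the manifests in DIR into per-component folders (hypothetical helper).
# Rules are "glob:folder" pairs and are applied in order, so the more
# specific patterns run before the catch-all 'prometheus-*'.
sort_manifests() (
  cd "$1" || exit 1
  for rule in \
    '*-serviceMonitor*:serviceMonitor' \
    '0prometheus-operator*:operator' \
    'grafana-*:grafana' \
    'kube-state-metrics-*:kube-state-metrics' \
    'alertmanager-*:alertmanager' \
    'node-exporter-*:node-exporter' \
    'prometheus-adapter*:adapter' \
    'prometheus-*:prometheus'
  do
    pattern=${rule%%:*}
    dir=${rule##*:}
    mkdir -p "$dir"
    for f in $pattern; do
      # Skip unmatched globs and anything that is not a regular file.
      if [ -f "$f" ]; then
        mv "$f" "$dir/"
      fi
    done
  done
)
```

It would be called as sort_manifests kube-prometheus/manifests. Files that match no rule, such as 00namespace-namespace.yaml, stay where they are.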

The resulting directory layout:

manifests/
├── 00namespace-namespace.yaml
├── adapter
│   ├── prometheus-adapter-apiService.yaml
│   ├── prometheus-adapter-clusterRoleAggregatedMetricsReader.yaml
│   ├── prometheus-adapter-clusterRoleBindingDelegator.yaml
│   ├── prometheus-adapter-clusterRoleBinding.yaml
│   ├── prometheus-adapter-clusterRoleServerResources.yaml
│   ├── prometheus-adapter-clusterRole.yaml
│   ├── prometheus-adapter-configMap.yaml
│   ├── prometheus-adapter-deployment.yaml
│   ├── prometheus-adapter-roleBindingAuthReader.yaml
│   ├── prometheus-adapter-serviceAccount.yaml
│   └── prometheus-adapter-service.yaml
├── alertmanager
│   ├── alertmanager-alertmanager.yaml
│   ├── alertmanager-secret.yaml
│   ├── alertmanager-serviceAccount.yaml
│   └── alertmanager-service.yaml
├── grafana
│   ├── grafana-dashboardDatasources.yaml
│   ├── grafana-dashboardDefinitions.yaml
│   ├── grafana-dashboardSources.yaml
│   ├── grafana-deployment.yaml
│   ├── grafana-serviceAccount.yaml
│   └── grafana-service.yaml
├── kube-state-metrics
│   ├── kube-state-metrics-clusterRoleBinding.yaml
│   ├── kube-state-metrics-clusterRole.yaml
│   ├── kube-state-metrics-deployment.yaml
│   ├── kube-state-metrics-roleBinding.yaml
│   ├── kube-state-metrics-role.yaml
│   ├── kube-state-metrics-serviceAccount.yaml
│   └── kube-state-metrics-service.yaml
├── node-exporter
│   ├── node-exporter-clusterRoleBinding.yaml
│   ├── node-exporter-clusterRole.yaml
│   ├── node-exporter-daemonset.yaml
│   ├── node-exporter-serviceAccount.yaml
│   └── node-exporter-service.yaml
├── operator
│   ├── 0prometheus-operator-0alertmanagerCustomResourceDefinition.yaml
│   ├── 0prometheus-operator-0prometheusCustomResourceDefinition.yaml
│   ├── 0prometheus-operator-0prometheusruleCustomResourceDefinition.yaml
│   ├── 0prometheus-operator-0servicemonitorCustomResourceDefinition.yaml
│   ├── 0prometheus-operator-clusterRoleBinding.yaml
│   ├── 0prometheus-operator-clusterRole.yaml
│   ├── 0prometheus-operator-deployment.yaml
│   ├── 0prometheus-operator-serviceAccount.yaml
│   └── 0prometheus-operator-service.yaml
├── prometheus
│   ├── prometheus-clusterRoleBinding.yaml
│   ├── prometheus-clusterRole.yaml
│   ├── prometheus-prometheus.yaml
│   ├── prometheus-roleBindingConfig.yaml
│   ├── prometheus-roleBindingSpecificNamespaces.yaml
│   ├── prometheus-roleConfig.yaml
│   ├── prometheus-roleSpecificNamespaces.yaml
│   ├── prometheus-rules.yaml
│   ├── prometheus-serviceAccount.yaml
│   └── prometheus-service.yaml
└── serviceMonitor
    ├── 0prometheus-operator-serviceMonitor.yaml
    ├── alertmanager-serviceMonitor.yaml
    ├── grafana-serviceMonitor.yaml
    ├── kube-state-metrics-serviceMonitor.yaml
    ├── node-exporter-serviceMonitor.yaml
    ├── prometheus-serviceMonitorApiserver.yaml
    ├── prometheus-serviceMonitorCoreDNS.yaml
    ├── prometheus-serviceMonitorKubeControllerManager.yaml
    ├── prometheus-serviceMonitorKubelet.yaml
    ├── prometheus-serviceMonitorKubeScheduler.yaml
    └── prometheus-serviceMonitor.yaml

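V. Modify the source YAML files

The images set in these YAML files cannot be pulled from inside China, so we change the image settings to addresses that can be pulled. After that, because Grafana and Prometheus need to be exposed through NodePort, we also modify the Service files of those two applications.

1. Modify the images

(1) Modify the operator

Edit the 0prometheus-operator-deployment.yaml file:

$ vim operator/0prometheus-operator-deployment.yaml

Change it as follows:

- Change the config-reloader-image argument

- Change the prometheus-config-reloader argument

- Change the image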

......
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: prometheus-operator
  template:
    metadata:
      labels:
        k8s-app: prometheus-operator
    spec:
      containers:
      - args:
        - --kubelet-service=kube-system/kubelet
        - --logtostderr=true
        - --config-reloader-image=jimmidyson/configmap-reload:v0.0.1    # changed config-reloader-image
        - --prometheus-config-reloader=rancher/coreos-prometheus-config-reloader:v0.29.0    # changed prometheus-config-reloader
        image: rancher/coreos-prometheus-operator:v0.29.0    # changed image
        name: prometheus-operator
......

(2) Modify the adapter

Edit the prometheus-adapter-deployment.yaml file:

$ vim adapter/prometheus-adapter-deployment.yaml

Change it as follows:

- Change the image

......
    spec:
      containers:
      - args:
        - --cert-dir=/var/run/serving-cert
        - --config=/etc/adapter/config.yaml
        - --logtostderr=true
        - --metrics-relist-interval=1m
        - --prometheus-url=http://prometheus-k8s.monitoring.svc:9090/
        - --secure-port=6443
        image: directxman12/k8s-prometheus-adapter-amd64:v0.4.1    # changed image
        name: prometheus-adapter
......

(3) Modify alertmanager

Edit the alertmanager-alertmanager.yaml file:

$ vim alertmanager/alertmanager-alertmanager.yaml

Change it as follows:

- Change the image

......
spec:
  baseImage: prom/alertmanager    # changed image
  nodeSelector:
    beta.kubernetes.io/os: linux
  replicas: 3
  securityContext:
    fsGroup: 2000
    runAsNonRoot: true
    runAsUser: 1000
  serviceAccountName: alertmanager-main
  version: v0.16.2

(4) Modify node-exporter

Edit the node-exporter-daemonset.yaml file:

$ vim node-exporter/node-exporter-daemonset.yaml

Change it as follows:

- Change the image

......
    spec:
      containers:
      - args:
        - --web.listen-address=127.0.0.1:9100
        - --path.procfs=/host/proc
        - --path.sysfs=/host/sys
        - --path.rootfs=/host/root
        - --collector.filesystem.ignored-mount-points=^/(dev|proc|sys|var/lib/docker/.+)($|/)
        - --collector.filesystem.ignored-fs-types=^(autofs|binfmt_misc|cgroup|configfs|debugfs|devpts|devtmpfs|fusectl|hugetlbfs|mqueue|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|sysfs|tracefs)$
        image: prom/node-exporter:v0.17.0    # changed image
        name: node-exporter
      - args:
        - --logtostderr
        - --secure-listen-address=$(IP):9100
        - --tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256
        - --upstream=http://127.0.0.1:9100/
        image: registry.cn-shanghai.aliyuncs.com/mydlq/kube-rbac-proxy:v0.4.1    # changed image
......

(5) Modify kube-state-metrics

Edit the kube-state-metrics-deployment.yaml file:

$ vim kube-state-metrics/kube-state-metrics-deployment.yaml

Change it as follows:

- Change the image

......
    spec:
      containers:
      - args:
        - --logtostderr
        - --secure-listen-address=:8443
        - --tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256
        - --upstream=http://127.0.0.1:8081/
        image: registry.cn-shanghai.aliyuncs.com/mydlq/kube-rbac-proxy:v0.4.1    # changed image
        name: kube-rbac-proxy-main
      - args:
        - --logtostderr
        - --secure-listen-address=:9443
        - --tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256
        - --upstream=http://127.0.0.1:8082/
        image: quay.io/coreos/kube-rbac-proxy:v0.4.1
        name: kube-rbac-proxy-self
      - args:
        - --host=127.0.0.1
        - --port=8081
        - --telemetry-host=127.0.0.1
        - --telemetry-port=8082
        image: rancher/coreos-kube-state-metrics:v1.5.0    # changed image
        name: kube-state-metrics
      - command:
        - /pod_nanny
        - --container=kube-state-metrics
        - --cpu=100m
        - --extra-cpu=2m
        - --memory=150Mi
        - --extra-memory=30Mi
        - --threshold=5
        - --deployment=kube-state-metrics
        env:
        - name: MY_POD_NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        image: siriuszg/addon-resizer:1.8.4    # changed image
        name: addon-resizer
......
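
Swapping one image reference for another, as done by hand above, can also be done with sed. The following is a sketch only: swap_image is a made-up helper name, it prints the result instead of editing the file, and it assumes the image references contain no | or & characters.

```shell
# swap_image FILE OLD NEW
# Print FILE with every occurrence of the image reference OLD replaced by NEW.
# (Hypothetical helper; FILE itself is left untouched.)
swap_image() {
  # Escape regex metacharacters in OLD so that '.' only matches a literal dot.
  old=$(printf '%s\n' "$2" | sed 's/[][\.*^$]/\\&/g')
  # '|' is the sed delimiter because image references contain '/'.
  sed "s|$old|$3|g" "$1"
}
```

For example, swap_image kube-state-metrics/kube-state-metrics-deployment.yaml quay.io/coreos/kube-rbac-proxy:v0.4.1 registry.cn-shanghai.aliyuncs.com/mydlq/kube-rbac-proxy:v0.4.1 would print the deployment with the proxy image pointed at the mirror used in this article. Review the output before writing it back over the original file.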

(6) Modify prometheus

Edit the prometheus-prometheus.yaml file:

$ vim prometheus/prometheus-prometheus.yaml

Change it as follows:

- Change the image

......
spec:
  alerting:
    alertmanagers:
    - name: alertmanager-main
      namespace: monitoring
      port: web
  baseImage: prom/prometheus    # changed image
  nodeSelector:
    beta.kubernetes.io/os: linux
  replicas: 2
  resources:
    requests:
      memory: 400Mi
  ruleSelector:
    matchLabels:
      prometheus: k8s
      role: alert-rules
  securityContext:
    fsGroup: 2000
    runAsNonRoot: true
    runAsUser: 1000
  serviceAccountName: prometheus-k8s
  serviceMonitorNamespaceSelector: {}
  serviceMonitorSelector: {}
  version: v2.7.2
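
After editing this many files it is easy to miss an image. As a rough check, the sketch below lists every distinct image reference under a directory. The name list_images is made up, and it only looks at image: and baseImage: fields, so images passed as command-line flags (such as --config-reloader-image) are not covered.

```shell
# list_images DIR
# Print each distinct value of an "image:" or "baseImage:" field found in
# the files under DIR, one per line, with trailing "# ..." comments removed.
# (Hypothetical helper; flags that carry image names are not inspected.)
list_images() {
  grep -rhE '^[[:space:]-]*(image|baseImage):' "$1" |
    sed -E 's/^[[:space:]-]*(image|baseImage):[[:space:]]*//; s/[[:space:]]+#.*$//' |
    sort -u
}
```

Run from the manifests directory as list_images . and scan the output for registries you cannot reach.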

2. Modify the Service port settings

(1) Modify the Prometheus Service

Edit the prometheus-service.yaml file:

$ vim prometheus/prometheus-service.yaml

Change the Prometheus Service type to NodePort and set nodePort to 32101:

apiVersion: v1
kind: Service
metadata:
  labels:
    prometheus: k8s
  name: prometheus-k8s
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: web
    port: 9090
    targetPort: web
    nodePort: 32101
  selector:
    app: prometheus
    prometheus: k8s
  sessionAffinity: ClientIP

(2) Modify the Grafana Service

Edit the grafana-service.yaml file:

$ vim grafana/grafana-service.yaml

Change the Grafana Service type to NodePort and set nodePort to 32102:

apiVersion: v1
kind: Service
metadata:
  labels:
    app: grafana
  name: grafana
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: http
    port: 3000
    targetPort: http
    nodePort: 32102
  selector:
    app: grafana
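
One thing to watch with nodePort values: unless the API server was started with a different --service-node-port-range, they must fall within 30000-32767. A tiny sketch of that check, with a made-up function name:

```shell
# in_nodeport_range PORT [MIN] [MAX]
# Succeeds if PORT lies within the NodePort range. MIN and MAX default to
# 30000 and 32767, the kube-apiserver default for --service-node-port-range.
# (Hypothetical helper for illustration.)
in_nodeport_range() {
  [ "$1" -ge "${2:-30000}" ] && [ "$1" -le "${3:-32767}" ]
}

# Check the two ports chosen in this article.
for port in 32101 32102; do
  if in_nodeport_range "$port"; then
    echo "$port: ok"
  else
    echo "$port: outside the default NodePort range"
  fi
done
```

Both ports used here pass. If your cluster uses a custom range, pass it as the second and third arguments.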

VI. Install Prometheus Operator

Run all of the following commands from the manifests directory.

1. Create the namespace

$ kubectl apply -f 00namespace-namespace.yaml

2. Install the Operator

$ kubectl apply -f operator/

Check the Pods and wait until the Operator Pod is running before moving on:

$ kubectl get pods -n monitoring

NAME                                   READY   STATUS    RESTARTS
prometheus-operator-5d6f6f5d68-mb88p   1/1     Running   0

3. Install the other components

$ kubectl apply -f adapter/

$ kubectl apply -f alertmanager/

$ kubectl apply -f node-exporter/

$ kubectl apply -f kube-state-metrics/

$ kubectl apply -f grafana/

$ kubectl apply -f prometheus/

$ kubectl apply -f serviceMonitor/

Check the Pod status:

$ kubectl get pods -n monitoring

NAME                                   READY   STATUS    RESTARTS
alertmanager-main-0                    2/2     Running   0
alertmanager-main-1                    2/2     Running   0
alertmanager-main-2                    2/2     Running   0
grafana-b6bd6d987-2kr8w                1/1     Running   0
kube-state-metrics-6f7cd8cf48-ftkjw    4/4     Running   0
node-exporter-4jt26                    2/2     Running   0
node-exporter-h88mw                    2/2     Running   0
node-exporter-mf7rr                    2/2     Running   0
prometheus-adapter-df8b6c6f-jfd8m      1/1     Running   0
prometheus-k8s-0                       3/3     Running   0
prometheus-k8s-1                       3/3     Running   0
prometheus-operator-5d6f6f5d68-mb88p   1/1     Running   0
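
With this many Pods it helps to filter the listing down to the ones that are not ready yet. The sketch below does that with awk; not_ready_pods is a made-up name, and it expects the default kubectl get pods table including its header line.

```shell
# not_ready_pods
# Read `kubectl get pods` output (with header) on stdin and print the name
# of every Pod whose STATUS is not Running or whose READY count is
# incomplete, for example 1/2. (Hypothetical helper.)
not_ready_pods() {
  awk 'NR > 1 && NF >= 3 {
         split($2, ready, "/")
         if ($3 != "Running" || ready[1] != ready[2]) print $1
       }'
}
```

Typical use would be kubectl get pods -n monitoring | not_ready_pods. Empty output means every Pod in the listing is Running with all of its containers ready.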

VII. View Prometheus & Grafana

1. View Prometheus

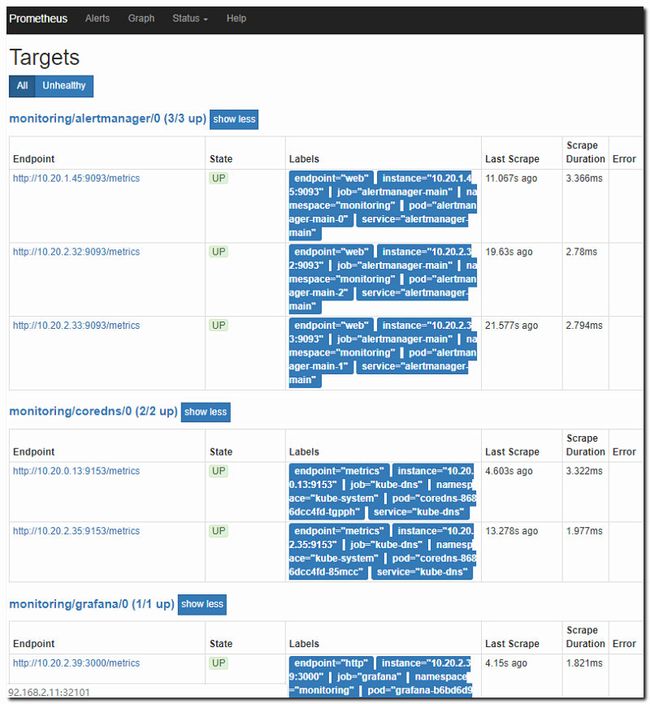

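Open http://192.168.2.11:32101 and look at the targets Prometheus is scraping; check whether any of the scrape jobs reports an error.

2. View Grafana

Open http://192.168.2.11:32102 and look at the Grafana dashboards; check whether the Kubernetes cluster is displayed correctly.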
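
Before opening a browser, both services can be probed from the command line. This is a sketch with made-up helper names; it relies on Prometheus's /-/healthy endpoint and Grafana's /api/health endpoint, and on curl being installed.

```shell
# node_url HOST PORT PATH
# Build the URL of a service exposed through a NodePort. (Hypothetical helper.)
node_url() {
  printf 'http://%s:%s%s\n' "$1" "$2" "$3"
}

# check_http URL
# Succeeds unless the request fails or the server answers with an HTTP
# error status (400 or above); gives up after 5 seconds. (Hypothetical helper.)
check_http() {
  curl --silent --fail --max-time 5 --output /dev/null "$1"
}

# Example (not run here; replace the IP with one of your own nodes):
#   check_http "$(node_url 192.168.2.11 32101 /-/healthy)" && echo "Prometheus is healthy"
#   check_http "$(node_url 192.168.2.11 32102 /api/health)" && echo "Grafana is healthy"
```

A passing probe only shows that the services answer; whether the scrape targets themselves are up still has to be checked on the Prometheus targets page.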