Overview

Almost every operation in librbd is asynchronous. Below, we take one code snippet as an example and trace how it is executed.

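The snippet below comes from the rbd image-creation flow, specifically the step that creates the id object. Its net effect is to create an object named `rbd_id.<image name>` in the image's pool, whose content is the image's id.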

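```cpp
// Create an op object. Operations added afterwards are only recorded in it, not executed.
// All recorded operations are aggregated, in order, into one atomic batch,
// and execution starts asynchronously only when aio_operate is called.
// Once all operations have completed, the callback registered on the AioCompletion is invoked.
librados::ObjectWriteOperation op;

// Record a create operation in op
op.create(true);

// Record a set_id operation in op (the details differ from op.create, but it is essentially the same)
cls_client::set_id(&op, m_image_id);

// handle_create_id_object is the callback executed after all operations in op have completed
using klass = CreateRequest;
librados::AioCompletion *comp =
  create_rados_callback<klass, &klass::handle_create_id_object>(this);

// Start
int r = m_ioctx.aio_operate(m_id_obj, comp, &op);
assert(r == 0);
comp->release();
```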

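The code above mainly involves the following points:

- What the ObjectXXXXOperation objects do
- How ceph's cls_client invokes registered functions
- What AioCompletion is
- What aio_operate does behind the scenes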
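To make the deferred-execution idea in the opening snippet concrete, here is a toy model in plain C++ with no librados dependency. ToyObject, ToyWriteOp, set_data and toy_aio_operate are hypothetical names invented for this sketch. The "asynchronous" call runs synchronously here, and atomicity is approximated by applying the batch to a scratch copy and committing only if every step succeeds. This is not how the OSD actually implements transactions.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Hypothetical object state: whether it exists and its data.
struct ToyObject {
  bool exists = false;
  std::string data;
};

// Hypothetical stand-in for librados::ObjectWriteOperation: it only records
// steps; nothing touches an object until toy_aio_operate() runs them.
struct ToyWriteOp {
  std::vector<std::function<int(ToyObject&)>> steps;

  void create(bool exclusive) {
    steps.push_back([exclusive](ToyObject& o) {
      if (exclusive && o.exists) return -17;  // -EEXIST
      o.exists = true;
      return 0;
    });
  }

  void set_data(const std::string& data) {
    steps.push_back([data](ToyObject& o) {
      o.exists = true;
      o.data = data;
      return 0;
    });
  }
};

// Hypothetical stand-in for aio_operate(): run the recorded steps in order on
// a scratch copy, commit only if all succeed, then fire the completion callback.
void toy_aio_operate(ToyObject& obj, const ToyWriteOp& op,
                     const std::function<void(int)>& on_complete) {
  ToyObject scratch = obj;
  int r = 0;
  for (const auto& step : op.steps) {
    r = step(scratch);
    if (r < 0) break;
  }
  if (r == 0) obj = scratch;  // all-or-nothing
  on_complete(r);
}
```

Recording and executing are two separate phases: nothing changes until toy_aio_operate runs, and a failing step (a second exclusive create) leaves the object untouched. That is the property the librados comment describes as being "applied atomically".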

ObjectXXXXOperation

librados::ObjectWriteOperation

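The comment on ObjectWriteOperation tells us what it is for: it batches multiple write operations into a single request. The class itself contains little beyond the interfaces of the various operation functions.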

```cpp
/*
 * ObjectWriteOperation : compound object write operation
 * Batch multiple object operations into a single request, to be applied
 * atomically.
 */
class CEPH_RADOS_API ObjectWriteOperation : public ObjectOperation
{
protected:
  time_t *unused;
public:
  ObjectWriteOperation() : unused(NULL) {}
  ~ObjectWriteOperation() override {}

  // The create() function called in the opening snippet
  void create(bool exclusive)
  {
    ::ObjectOperation *o = &impl->o;
    o->create(exclusive);
  }
  ...
  // Many more member functions, omitted
};
```

librados::ObjectOperation

All classes like ObjectWriteOperation inherit from librados::ObjectOperation, whose actual implementation lives in ObjectOperationImpl.

```cpp
class CEPH_RADOS_API ObjectOperation
{
  ...
protected:
  ObjectOperationImpl *impl;
};
```

librados::ObjectOperationImpl

There is still not much here; the key content must be in ::ObjectOperation. Let's keep going.

```cpp
struct ObjectOperationImpl {
  ::ObjectOperation o;
  real_time rt;
  real_time *prt;

  ObjectOperationImpl() : prt(NULL) {}
};
```
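The three layers above (public ObjectWriteOperation, ObjectOperationImpl, inner ::ObjectOperation) form a pointer-to-implementation chain in which the public method just forwards. The sketch below reproduces that layering with hypothetical names in the namespaces `api` and `inner`. It uses std::unique_ptr for ownership, whereas librados manages `impl` as a raw pointer, so treat it as an illustration of the forwarding, not of librados' memory management.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

namespace inner {
constexpr int kCreate = 1;  // hypothetical op code

// Plays the role of ::ObjectOperation: the only place where ops are stored.
struct ObjectOperation {
  std::vector<int> ops;  // just op codes in this sketch
  void create(bool /*excl*/) { ops.push_back(kCreate); }
};
}  // namespace inner

namespace api {
// Plays the role of librados::ObjectOperationImpl.
struct ObjectOperationImpl {
  inner::ObjectOperation o;
};

// Plays the role of librados::ObjectOperation: owns only a pointer to the impl.
class ObjectOperation {
 public:
  ObjectOperation() : impl(std::make_unique<ObjectOperationImpl>()) {}
  virtual ~ObjectOperation() = default;
  std::size_t size() const { return impl->o.ops.size(); }

 protected:
  std::unique_ptr<ObjectOperationImpl> impl;
};

// Plays the role of librados::ObjectWriteOperation: methods forward inward.
class ObjectWriteOperation : public ObjectOperation {
 public:
  void create(bool exclusive) {
    inner::ObjectOperation* o = &impl->o;  // same forwarding as librados
    o->create(exclusive);
  }
};
}  // namespace api
```

Keeping the storage behind a pointer lets the public header stay stable while the internal ::ObjectOperation changes, which is the usual motivation for this layering in a library's public API.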

::ObjectOperation

This class is the core of the mechanism; see the comments for details.

```cpp
struct ObjectOperation {
  vector<OSDOp> ops;             // array of operations
  int flags;                     // flags
  int priority;                  // priority
  vector<bufferlist*> out_bl;    // where the output of each op in ops is stored
  vector<Context*> out_handler;  // callback run after each op in ops completes
  vector<int*> out_rval;         // where the return value of each op in ops is stored

  // Two functions are shown to make the fields above easier to understand
  OSDOp& add_op(int op) {
    int s = ops.size();
    ops.resize(s+1);
    ops[s].op.op = op;
    out_bl.resize(s+1);
    out_bl[s] = NULL;
    out_handler.resize(s+1);
    out_handler[s] = NULL;
    out_rval.resize(s+1);
    out_rval[s] = NULL;
    return ops[s];
  }

  // What the create() call in the opening snippet ultimately does:
  // it only appends an entry to the ops array; nothing is executed
  void create(bool excl) {
    OSDOp& o = add_op(CEPH_OSD_OP_CREATE);
    o.op.flags = (excl ? CEPH_OSD_OP_FLAG_EXCL : 0);
  }

  // Other member functions omitted
  ...
};
```
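The four vectors in ::ObjectOperation are parallel arrays: index i in each of them describes the same sub-operation, which is why add_op grows all of them together and why _op_submit_with_budget later asserts that their sizes match. The sketch below reproduces that invariant with simplified, hypothetical stand-in types (ToyOSDOp, ToyContext, TOY_OP_CREATE, TOY_FLAG_EXCL); the real OSDOp, bufferlist and Context types are much richer.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Simplified, hypothetical stand-ins for OSDOp and Context.
struct ToyOSDOp { int op = 0; unsigned flags = 0; };
struct ToyContext { virtual ~ToyContext() = default; };

constexpr int TOY_OP_CREATE = 1;        // hypothetical op code
constexpr unsigned TOY_FLAG_EXCL = 1u;  // hypothetical flag

struct ToyObjectOperation {
  std::vector<ToyOSDOp> ops;
  std::vector<std::string*> out_bl;      // where each op's output goes
  std::vector<ToyContext*> out_handler;  // per-op completion hook
  std::vector<int*> out_rval;            // where each op's return code goes

  // Mirrors add_op(): grow all four arrays together so that index i
  // always refers to the same sub-operation.
  ToyOSDOp& add_op(int op) {
    std::size_t s = ops.size();
    ops.resize(s + 1);
    ops[s].op = op;
    out_bl.resize(s + 1, nullptr);
    out_handler.resize(s + 1, nullptr);
    out_rval.resize(s + 1, nullptr);
    return ops[s];
  }

  // Mirrors create(): records the op and its flags, executes nothing.
  void create(bool excl) {
    ToyOSDOp& o = add_op(TOY_OP_CREATE);
    o.flags = excl ? TOY_FLAG_EXCL : 0;
  }

  // The same size checks that _op_submit_with_budget asserts.
  bool consistent() const {
    return ops.size() == out_bl.size() && ops.size() == out_handler.size() &&
           ops.size() == out_rval.size();
  }
};
```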

cls_client

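We set aside the cls back-end registration and dispatch mechanism for now (it deserves a separate analysis of its own) and only look at how cls_client invokes an already registered function.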

Take set_id above as an example.

cls_client::set_id

Here the input is encoded into a bufferlist, and the call is then forwarded to the exec function of ObjectWriteOperation.

```cpp
void set_id(librados::ObjectWriteOperation *op, const std::string id)
{
  bufferlist bl;
  encode(id, bl);
  op->exec("rbd", "set_id", bl);
}
```
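encode(id, bl) serializes the id into the bufferlist before it is handed to exec. To our understanding, Ceph encodes a std::string as a 32-bit little-endian length followed by the raw bytes. The sketch below reproduces that layout with a std::vector standing in for bufferlist, and adds the matching decode the cls method would need on the OSD side. ToyBufferlist, toy_encode and toy_decode are hypothetical names, not Ceph APIs.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <string>
#include <vector>

using ToyBufferlist = std::vector<std::uint8_t>;  // stand-in for ceph::bufferlist

// Length-prefixed encoding: 4-byte little-endian length, then the bytes.
void toy_encode(const std::string& s, ToyBufferlist& bl) {
  std::uint32_t len = static_cast<std::uint32_t>(s.size());
  for (int i = 0; i < 4; ++i)
    bl.push_back(static_cast<std::uint8_t>((len >> (8 * i)) & 0xff));
  bl.insert(bl.end(), s.begin(), s.end());
}

// Inverse of toy_encode, starting at `pos`; advances `pos` past the string.
std::string toy_decode(const ToyBufferlist& bl, std::size_t& pos) {
  if (pos + 4 > bl.size()) throw std::out_of_range("truncated length");
  std::uint32_t len = 0;
  for (int i = 0; i < 4; ++i)
    len |= static_cast<std::uint32_t>(bl[pos + i]) << (8 * i);
  pos += 4;
  if (pos + len > bl.size()) throw std::out_of_range("truncated string");
  std::string s(bl.begin() + static_cast<std::ptrdiff_t>(pos),
                bl.begin() + static_cast<std::ptrdiff_t>(pos + len));
  pos += len;
  return s;
}
```

The length prefix is what lets several values be concatenated into one buffer and still be decoded back in order.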

librados::ObjectOperation::exec

So the cls_client functions are not executed directly either; they go through the same ObjectOperation mechanism.

```cpp
void librados::ObjectOperation::exec(const char *cls, const char *method, bufferlist& inbl)
{
  ::ObjectOperation *o = &impl->o;
  o->call(cls, method, inbl);
}
```

::ObjectOperation::call

```cpp
void call(const char *cname, const char *method, bufferlist &indata) {
  add_call(CEPH_OSD_OP_CALL, cname, method, indata, NULL, NULL, NULL);
}
```

::ObjectOperation::add_call

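The create function called directly on op earlier adds a standalone CEPH_OSD_OP_CREATE operation via add_op. cls-related functions, by contrast, are added via add_call and all share the CEPH_OSD_OP_CALL operation; they are told apart by the class name and method name placed at the front of indata.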

```cpp
void add_call(int op, const char *cname, const char *method,
              bufferlist &indata,
              bufferlist *outbl, Context *ctx, int *prval) {
  OSDOp& osd_op = add_op(op);

  unsigned p = ops.size() - 1;
  out_handler[p] = ctx;
  out_bl[p] = outbl;
  out_rval[p] = prval;

  osd_op.op.cls.class_len = strlen(cname);
  osd_op.op.cls.method_len = strlen(method);
  osd_op.op.cls.indata_len = indata.length();
  osd_op.indata.append(cname, osd_op.op.cls.class_len);
  osd_op.indata.append(method, osd_op.op.cls.method_len);
  osd_op.indata.append(indata);
}
```
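add_call concatenates class name, method name and payload into one indata buffer with no separators. The three lengths stored in op.cls are what make the buffer splittable again. The sketch below packs and unpacks such a buffer with std::string. ToyCallOp, toy_add_call and toy_split_call are hypothetical names, and the split function only illustrates why the lengths are needed; it is not the OSD's actual parsing code.

```cpp
#include <cassert>
#include <cstring>
#include <string>

// Simplified view of the fields add_call() fills in (op.cls.* plus indata).
struct ToyCallOp {
  unsigned class_len = 0;
  unsigned method_len = 0;
  unsigned indata_len = 0;
  std::string indata;  // "<class><method><payload>", no separators
};

// Mirrors add_call(): record the three lengths, then append the three parts.
ToyCallOp toy_add_call(const char* cname, const char* method,
                       const std::string& payload) {
  ToyCallOp op;
  op.class_len = static_cast<unsigned>(std::strlen(cname));
  op.method_len = static_cast<unsigned>(std::strlen(method));
  op.indata_len = static_cast<unsigned>(payload.size());
  op.indata.append(cname, op.class_len);
  op.indata.append(method, op.method_len);
  op.indata.append(payload);
  return op;
}

// A receiver can recover the three parts purely from the recorded lengths.
void toy_split_call(const ToyCallOp& op, std::string& cls, std::string& method,
                    std::string& payload) {
  cls = op.indata.substr(0, op.class_len);
  method = op.indata.substr(op.class_len, op.method_len);
  payload = op.indata.substr(op.class_len + op.method_len, op.indata_len);
}
```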

AioCompletion

AioCompletion wraps the callback to be run after the op completes, and takes care of invoking it.

Here is how it is created.

create_rados_callback

```cpp
template <typename T, void(T::*MF)(int)>
librados::AioCompletion *create_rados_callback(T *obj) {
  return librados::Rados::aio_create_completion(
    obj, &detail::rados_callback<T, MF>, nullptr);
}
```

aio_create_completion

```cpp
librados::AioCompletion *librados::Rados::aio_create_completion(void *cb_arg,
                                                                callback_t cb_complete,
                                                                callback_t cb_safe)
{
  AioCompletionImpl *c;
  int r = rados_aio_create_completion(cb_arg, cb_complete, cb_safe, (void**)&c);
  assert(r == 0);
  return new AioCompletion(c);
}
```

rados_aio_create_completion

```cpp
extern "C" int rados_aio_create_completion(void *cb_arg,
                                           rados_callback_t cb_complete,
                                           rados_callback_t cb_safe,
                                           rados_completion_t *pc)
{
  tracepoint(librados, rados_aio_create_completion_enter, cb_arg, cb_complete, cb_safe);
  librados::AioCompletionImpl *c = new librados::AioCompletionImpl;
  if (cb_complete)
    c->set_complete_callback(cb_arg, cb_complete);
  if (cb_safe)
    c->set_safe_callback(cb_arg, cb_safe);
  *pc = c;
  tracepoint(librados, rados_aio_create_completion_exit, 0, *pc);
  return 0;
}
```
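The chain above ends in a C-style (callback, void* argument) pair, yet the request wants a member function such as handle_create_id_object to run. create_rados_callback bridges the two with a template "trampoline". The body of detail::rados_callback is not shown in this article. The sketch below assumes it casts the void* back to T*, reads the op's return value and calls the member function. ToyCompletion, toy_rados_callback, toy_create_callback and ToyRequest are hypothetical names.

```cpp
#include <cassert>

// C-style callback type, shaped like rados_callback_t: (completion, user arg).
typedef void (*toy_callback_t)(void* completion, void* arg);

// Hypothetical completion: stores (arg, callback) like set_complete_callback().
struct ToyCompletion {
  int rval = 0;
  void* cb_arg = nullptr;
  toy_callback_t cb = nullptr;
  void complete(int r) {
    rval = r;
    if (cb) cb(this, cb_arg);
  }
};

// Trampoline: one plain function per (class, member function) pair; it casts
// the void* back to T* and calls the member with the op's return value.
template <typename T, void (T::*MF)(int)>
void toy_rados_callback(void* c, void* arg) {
  T* obj = static_cast<T*>(arg);
  int r = static_cast<ToyCompletion*>(c)->rval;
  (obj->*MF)(r);
}

// Analogue of create_rados_callback: bind obj plus the instantiated trampoline.
template <typename T, void (T::*MF)(int)>
ToyCompletion* toy_create_callback(T* obj) {
  auto* c = new ToyCompletion;
  c->cb_arg = obj;
  c->cb = &toy_rados_callback<T, MF>;
  return c;
}

// Hypothetical request class with a handler like handle_create_id_object.
struct ToyRequest {
  int seen = 1;
  void handle_create_id_object(int r) { seen = r; }
};
```

Because the member function is a template argument rather than runtime data, the only runtime state the C layer has to carry is the object pointer, which fits the single void* cb_arg slot.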

AioCompletion

```cpp
struct CEPH_RADOS_API AioCompletion {
  AioCompletion(AioCompletionImpl *pc_) : pc(pc_) {}
  int set_complete_callback(void *cb_arg, callback_t cb);
  int set_safe_callback(void *cb_arg, callback_t cb);
  int wait_for_complete();
  int wait_for_safe();
  int wait_for_complete_and_cb();
  int wait_for_safe_and_cb();
  bool is_complete();
  bool is_safe();
  bool is_complete_and_cb();
  bool is_safe_and_cb();
  int get_return_value();
  int get_version() __attribute__ ((deprecated));
  uint64_t get_version64();
  void release();
  AioCompletionImpl *pc;
};
```

AioCompletionImpl

```cpp
struct librados::AioCompletionImpl {
  Mutex lock;
  Cond cond;
  int ref, rval;
  bool released;
  bool complete;
  version_t objver;
  ceph_tid_t tid;

  rados_callback_t callback_complete, callback_safe;
  void *callback_complete_arg, *callback_safe_arg;

  // for read
  bool is_read;
  bufferlist bl;
  bufferlist *blp;
  char *out_buf;

  IoCtxImpl *io;
  ceph_tid_t aio_write_seq;
  xlist<AioCompletionImpl*>::item aio_write_list_item;  // initialized with this

  int set_complete_callback(void *cb_arg, rados_callback_t cb);
  int set_safe_callback(void *cb_arg, rados_callback_t cb);
  int wait_for_complete();
  int wait_for_safe();
  int is_complete();
  int is_safe();
  int wait_for_complete_and_cb();
  int wait_for_safe_and_cb();
  int is_complete_and_cb();
  int is_safe_and_cb();
  int get_return_value();
  uint64_t get_version();
  void get();
  void _get();
  void release();
  void put();
  void put_unlock();
};
```
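The lock, cond, ref, rval and complete fields suggest the classic pattern behind wait_for_complete(): the I/O side sets the result under the lock and signals the condition variable, while a waiter sleeps on it. A reference count decides who frees the object. The sketch below models only that pattern with std::mutex and std::condition_variable instead of Ceph's Mutex and Cond. ToyAioCompletion and its finish() method are hypothetical; the real class also runs the user callbacks and tracks the released flag.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>

// Sketch of the lock/cond/ref/rval/complete part of AioCompletionImpl.
struct ToyAioCompletion {
  std::mutex lock;
  std::condition_variable cond;
  int ref = 1;  // the creator's reference
  int rval = 0;
  bool complete = false;

  void get() {
    std::lock_guard<std::mutex> l(lock);
    ++ref;
  }

  // Drops one reference; returns true if it was the last one (object freed).
  bool put() {
    bool last;
    {
      std::lock_guard<std::mutex> l(lock);
      last = (--ref == 0);
    }
    if (last) delete this;  // lock is already released here
    return last;
  }

  // Called by the I/O side when the reply arrives (hypothetical name).
  void finish(int r) {
    std::lock_guard<std::mutex> l(lock);
    rval = r;
    complete = true;
    cond.notify_all();
  }

  // Blocks until finish() has been called, then returns the result.
  int wait_for_complete() {
    std::unique_lock<std::mutex> l(lock);
    cond.wait(l, [this] { return complete; });
    return rval;
  }
};
```

In real use finish() would run on a messenger or finisher thread while the caller blocks in wait_for_complete(). Each side holds its own reference, so whichever side drops the last one frees the object.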

aio_operate

librados::IoCtx::aio_operate

This simply forwards to the corresponding function of io_ctx_impl.

```cpp
int librados::IoCtx::aio_operate(const std::string& oid, AioCompletion *c,
                                 librados::ObjectWriteOperation *o)
{
  object_t obj(oid);
  return io_ctx_impl->aio_operate(obj, &o->impl->o, c->pc,
                                  io_ctx_impl->snapc, 0);
}
```

librados::IoCtxImpl::aio_operate

```cpp
int librados::IoCtxImpl::aio_operate(const object_t& oid,
                                     ::ObjectOperation *o, AioCompletionImpl *c,
                                     const SnapContext& snap_context, int flags,
                                     const blkin_trace_info *trace_info)
{
  FUNCTRACE(client->cct);
  OID_EVENT_TRACE(oid.name.c_str(), "RADOS_WRITE_OP_BEGIN");
  auto ut = ceph::real_clock::now();
  /* can't write to a snapshot */
  if (snap_seq != CEPH_NOSNAP)
    return -EROFS;

  // Wrap the AioCompletion in a Context object;
  // Context is Ceph's standard callback object type
  Context *oncomplete = new C_aio_Complete(c);
#if defined(WITH_LTTNG) && defined(WITH_EVENTTRACE)
  ((C_aio_Complete *) oncomplete)->oid = oid;
#endif

  c->io = this;
  // IoCtxImpl keeps a queue that stores all AioCompletionImpl*;
  // this function puts the AioCompletionImpl* into that queue
  queue_aio_write(c);

  ZTracer::Trace trace;
  if (trace_info) {
    ZTracer::Trace parent_trace("", nullptr, trace_info);
    trace.init("rados operate", &objecter->trace_endpoint, &parent_trace);
  }

  trace.event("init root span");
  // Wrap the ObjectOperation in an Objecter::Op, adding object-location information
  Objecter::Op *op = objecter->prepare_mutate_op(
    oid, oloc, *o, snap_context, ut, flags,
    oncomplete, &c->objver, osd_reqid_t(), &trace);
  // Send the op out over the network
  objecter->op_submit(op, &c->tid);
  trace.event("rados operate op submitted");

  return 0;
}
```
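C_aio_Complete adapts the librados completion to Ceph's generic Context callback type, which is what Objecter accepts as oncomplete. To our understanding, Ceph's Context::complete(r) calls finish(r) and then deletes the object, so a context is allocated with new and handed off for exactly one invocation. The sketch below reproduces that idiom. ToyContext, ToyAioComplete and ToyCompletionState are hypothetical simplifications; the real C_aio_Complete also fires the user callbacks and drops references.

```cpp
#include <cassert>

// Minimal version of the Context idiom: complete() runs finish() once and
// then frees the object, so ownership passes to whoever calls complete().
class ToyContext {
 public:
  virtual ~ToyContext() = default;
  void complete(int r) {
    finish(r);
    delete this;
  }

 protected:
  virtual void finish(int r) = 0;
};

// Hypothetical completion state that the adapter writes into.
struct ToyCompletionState {
  int rval = 0;
  bool done = false;
};

// Analogue of C_aio_Complete: turns a completion object into a Context.
class ToyAioComplete : public ToyContext {
 public:
  explicit ToyAioComplete(ToyCompletionState* c) : c_(c) {}

 protected:
  void finish(int r) override {
    c_->rval = r;
    c_->done = true;
  }

 private:
  ToyCompletionState* c_;
};
```

Objecter then only needs to know about ToyContext-style objects, never about librados completions, which keeps osdc independent of librados.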

Objecter::op_submit

```cpp
void Objecter::op_submit(Op *op, ceph_tid_t *ptid, int *ctx_budget)
{
  shunique_lock rl(rwlock, ceph::acquire_shared);
  ceph_tid_t tid = 0;
  if (!ptid)
    ptid = &tid;
  op->trace.event("op submit");
  // Forward to _op_submit_with_budget
  _op_submit_with_budget(op, rl, ptid, ctx_budget);
}
```

Objecter::_op_submit_with_budget

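Here "budget" belongs to Ceph's Throttle mechanism, which is used for flow control; we won't dig into it for now.

This function still contains no core logic: it mainly handles flow control and timeouts.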

```cpp
void Objecter::_op_submit_with_budget(Op *op, shunique_lock& sul,
                                      ceph_tid_t *ptid,
                                      int *ctx_budget)
{
  assert(initialized);

  assert(op->ops.size() == op->out_bl.size());
  assert(op->ops.size() == op->out_rval.size());
  assert(op->ops.size() == op->out_handler.size());

  // Flow control
  // throttle.  before we look at any state, because
  // _take_op_budget() may drop our lock while it blocks.
  if (!op->ctx_budgeted || (ctx_budget && (*ctx_budget == -1))) {
    int op_budget = _take_op_budget(op, sul);
    // take and pass out the budget for the first OP
    // in the context session
    if (ctx_budget && (*ctx_budget == -1)) {
      *ctx_budget = op_budget;
    }
  }

  // If a timeout is configured, register a timeout event with the timer;
  // on timeout, op_cancel is called to cancel the op
  if (osd_timeout > timespan(0)) {
    if (op->tid == 0)
      op->tid = ++last_tid;
    auto tid = op->tid;
    op->ontimeout = timer.add_event(osd_timeout,
                                    [this, tid]() {
                                      op_cancel(tid, -ETIMEDOUT); });
  }

  // Forward to _op_submit
  _op_submit(op, sul, ptid);
}
```
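The budget taken in _take_op_budget caps how much work may be in flight at once. As far as we know, Objecter backs it with Throttle objects sized by options such as objecter_inflight_ops and objecter_inflight_op_bytes. The sketch below is a simplified, hypothetical counting throttle (ToyThrottle) showing the take/put idea: take() blocks while the in-flight total would exceed the limit, and put() returns budget when an op completes. Letting a single oversized request through an empty throttle is a choice made for this sketch to avoid deadlock; Ceph's Throttle has its own, slightly different waiting rules.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstdint>
#include <mutex>

// Simplified budget throttle in the spirit of Ceph's Throttle.
class ToyThrottle {
 public:
  explicit ToyThrottle(std::int64_t max) : max_(max) {}

  // Blocks until `c` units fit under the limit (or the throttle is empty).
  void take(std::int64_t c) {
    std::unique_lock<std::mutex> l(lock_);
    cond_.wait(l, [&] { return cur_ + c <= max_ || cur_ == 0; });
    cur_ += c;
  }

  // Non-blocking variant: returns false instead of waiting.
  bool try_take(std::int64_t c) {
    std::lock_guard<std::mutex> l(lock_);
    if (cur_ + c > max_ && cur_ != 0) return false;
    cur_ += c;
    return true;
  }

  // Returns budget (e.g. when an op's reply arrives) and wakes waiters.
  void put(std::int64_t c) {
    std::lock_guard<std::mutex> l(lock_);
    cur_ -= c;
    cond_.notify_all();
  }

  std::int64_t current() {
    std::lock_guard<std::mutex> l(lock_);
    return cur_;
  }

 private:
  std::mutex lock_;
  std::condition_variable cond_;
  std::int64_t max_;
  std::int64_t cur_ = 0;
};
```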

Objecter::_op_submit

This is where the target OSD is selected and the op is sent.

```cpp
void Objecter::_op_submit(Op *op, shunique_lock& sul, ceph_tid_t *ptid)
{
  // rwlock is locked

  ldout(cct, 10) << __func__ << " op " << op << dendl;

  // pick target
  assert(op->session == NULL);
  OSDSession *s = NULL;

  // Resolve pool -> PG -> OSD step by step until a single OSD is located
  bool check_for_latest_map = _calc_target(&op->target, nullptr)
    == RECALC_OP_TARGET_POOL_DNE;

  // Establish a session with the OSD
  // Try to get a session, including a retry if we need to take write lock
  int r = _get_session(op->target.osd, &s, sul);
  if (r == -EAGAIN ||
      (check_for_latest_map && sul.owns_lock_shared())) {
    epoch_t orig_epoch = osdmap->get_epoch();
    sul.unlock();
    if (cct->_conf->objecter_debug_inject_relock_delay) {
      sleep(1);
    }
    sul.lock();
    if (orig_epoch != osdmap->get_epoch()) {
      // map changed; recalculate mapping
      ldout(cct, 10) << __func__ << " relock raced with osdmap, recalc target"
                     << dendl;
      check_for_latest_map = _calc_target(&op->target, nullptr)
        == RECALC_OP_TARGET_POOL_DNE;
      if (s) {
        put_session(s);
        s = NULL;
        r = -EAGAIN;
      }
    }
  }
  if (r == -EAGAIN) {
    assert(s == NULL);
    r = _get_session(op->target.osd, &s, sul);
  }
  assert(r == 0);
  assert(s);  // may be homeless

  // perf count
  _send_op_account(op);

  // send?

  assert(op->target.flags & (CEPH_OSD_FLAG_READ|CEPH_OSD_FLAG_WRITE));

  if (osdmap_full_try) {
    op->target.flags |= CEPH_OSD_FLAG_FULL_TRY;
  }

  bool need_send = false;

  // Decide, based on the current state, whether to pause the op or send it
  if (osdmap->get_epoch() < epoch_barrier) {
    ldout(cct, 10) << " barrier, paused " << op << " tid " << op->tid
                   << dendl;
    op->target.paused = true;
    _maybe_request_map();
  } else if ((op->target.flags & CEPH_OSD_FLAG_WRITE) &&
             osdmap->test_flag(CEPH_OSDMAP_PAUSEWR)) {
    ldout(cct, 10) << " paused modify " << op << " tid " << op->tid
                   << dendl;
    op->target.paused = true;
    _maybe_request_map();
  } else if ((op->target.flags & CEPH_OSD_FLAG_READ) &&
             osdmap->test_flag(CEPH_OSDMAP_PAUSERD)) {
    ldout(cct, 10) << " paused read " << op << " tid " << op->tid
                   << dendl;
    op->target.paused = true;
    _maybe_request_map();
  } else if (op->respects_full() &&
             (_osdmap_full_flag() ||
              _osdmap_pool_full(op->target.base_oloc.pool))) {
    ldout(cct, 0) << " FULL, paused modify " << op << " tid "
                  << op->tid << dendl;
    op->target.paused = true;
    _maybe_request_map();
  } else if (!s->is_homeless()) {
    need_send = true;
  } else {
    _maybe_request_map();
  }

  MOSDOp *m = NULL;
  if (need_send) {
    // Prepare the request: create an MOSDOp *m and fill it in
    m = _prepare_osd_op(op);
  }

  OSDSession::unique_lock sl(s->lock);
  if (op->tid == 0)
    op->tid = ++last_tid;

  ldout(cct, 10) << "_op_submit oid " << op->target.base_oid
                 << " '" << op->target.base_oloc << "' '"
                 << op->target.target_oloc << "' " << op->ops << " tid "
                 << op->tid << " osd." << (!s->is_homeless() ? s->osd : -1)
                 << dendl;

  _session_op_assign(s, op);

  if (need_send) {
    // Send the request: this ends up calling the Messenger module's
    // Connection::send_message, which puts m on the matching send queue
    // and returns as soon as it has been queued
    _send_op(op, m);
  }

  // Last chance to touch Op here, after giving up session lock it can
  // be freed at any time by response handler.
  ceph_tid_t tid = op->tid;
  if (check_for_latest_map) {
    _send_op_map_check(op);
  }
  if (ptid)
    *ptid = tid;
  op = NULL;

  sl.unlock();
  // Release our reference to the session
  put_session(s);

  ldout(cct, 5) << num_in_flight << " in flight" << dendl;
}
```
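The first step of _calc_target is mapping an object name to a placement group inside its pool; CRUSH then maps that PG to OSDs. The sketch below covers only the object-to-PG step. stable_mod follows the logic of Ceph's ceph_stable_mod, which folds a hash into [0, pg_num) with a power-of-two mask so that raising pg_num only splits some PGs. std::hash is a stand-in for Ceph's rjenkins string hash, so the PG numbers will not match a real cluster. ToyPgId, pg_num_mask and object_to_pg are hypothetical helpers, and the CRUSH step is deliberately left out.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <string>

// Same logic as Ceph's ceph_stable_mod(): map x into [0, b) using bmask,
// the smallest (2^k - 1) that is >= b - 1.
inline int stable_mod(int x, int b, int bmask) {
  if ((x & bmask) < b) return x & bmask;
  return x & (bmask >> 1);
}

// Smallest (2^k - 1) mask covering pg_num - 1.
inline int pg_num_mask(int pg_num) {
  int mask = 0;
  while (mask < pg_num - 1) mask = (mask << 1) | 1;
  return mask;
}

struct ToyPgId {
  std::int64_t pool;
  int seed;
};

// Object name -> PG within a pool. std::hash stands in for rjenkins.
inline ToyPgId object_to_pg(std::int64_t pool, const std::string& oid,
                            int pg_num) {
  int h = static_cast<int>(std::hash<std::string>{}(oid) & 0x7fffffff);
  return {pool, stable_mod(h, pg_num, pg_num_mask(pg_num))};
}
```

With pg_num = 12 the mask is 15, so hash values 12 to 15 fold back onto PGs 4 to 7. That is why a non-power-of-two pg_num leaves some PGs holding more objects than others.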

As for invoking the callback, that is done through the Messenger's dispatch mechanism.

librados::RadosClient::connect

The connect function initializes the RadosClient. One of its steps registers the objecter with the messenger as a dispatcher.

```cpp
int librados::RadosClient::connect()
{
  ...
  objecter->init();
  messenger->add_dispatcher_head(&mgrclient);
  messenger->add_dispatcher_tail(objecter);
  ...
}
```

Messenger::ms_deliver_dispatch

```cpp
void ms_deliver_dispatch(Message *m) {
  m->set_dispatch_stamp(ceph_clock_now());
  for (list<Dispatcher*>::iterator p = dispatchers.begin();
       p != dispatchers.end();
       ++p) {
    if ((*p)->ms_dispatch(m))
      return;
  }
  lsubdout(cct, ms, 0) << "ms_deliver_dispatch: unhandled message " << m << " " << *m << " from "
                       << m->get_source_inst() << dendl;
  assert(!cct->_conf->ms_die_on_unhandled_msg);
  m->put();
}
```

Objecter::ms_dispatch

```cpp
bool Objecter::ms_dispatch(Message *m)
{
  ldout(cct, 10) << __func__ << " " << cct << " " << *m << dendl;
  switch (m->get_type()) {
    // these we exclusively handle
  case CEPH_MSG_OSD_OPREPLY:
    handle_osd_op_reply(static_cast<MOSDOpReply*>(m));
    return true;

  case CEPH_MSG_OSD_BACKOFF:
    handle_osd_backoff(static_cast<MOSDBackoff*>(m));
    return true;

  case CEPH_MSG_WATCH_NOTIFY:
    handle_watch_notify(static_cast<MWatchNotify*>(m));
    m->put();
    return true;

  case MSG_COMMAND_REPLY:
    if (m->get_source().type() == CEPH_ENTITY_TYPE_OSD) {
      handle_command_reply(static_cast<MCommandReply*>(m));
      return true;
    } else {
      return false;
    }

  case MSG_GETPOOLSTATSREPLY:
    handle_get_pool_stats_reply(static_cast<MGetPoolStatsReply*>(m));
    return true;

  case CEPH_MSG_POOLOP_REPLY:
    handle_pool_op_reply(static_cast<MPoolOpReply*>(m));
    return true;

  case CEPH_MSG_STATFS_REPLY:
    handle_fs_stats_reply(static_cast<MStatfsReply*>(m));
    return true;

    // these we give others a chance to inspect

    // MDS, OSD
  case CEPH_MSG_OSD_MAP:
    handle_osd_map(static_cast<MOSDMap*>(m));
    return false;
  }
  return false;
}
```
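ms_deliver_dispatch walks an ordered list of dispatchers and stops at the first one whose ms_dispatch returns true. Objecter consumes op replies (returning true, which is how the reply reaches handle_osd_op_reply and eventually the completion callback) but returns false for OSD maps so that later dispatchers still see them. The sketch below reproduces that chain of responsibility. ToyMessage, ToyDispatcher, ToyMessenger, ToyObjecter, ToyCatchAll and the TOY_MSG_* ids are hypothetical, and messages are not reference-counted here.

```cpp
#include <cassert>
#include <list>
#include <string>

struct ToyMessage {
  int type;
  std::string handled_by;
};

// Interface in the spirit of Ceph's Dispatcher: return true to consume.
struct ToyDispatcher {
  virtual ~ToyDispatcher() = default;
  virtual bool ms_dispatch(ToyMessage* m) = 0;
};

struct ToyMessenger {
  std::list<ToyDispatcher*> dispatchers;
  void add_dispatcher_head(ToyDispatcher* d) { dispatchers.push_front(d); }
  void add_dispatcher_tail(ToyDispatcher* d) { dispatchers.push_back(d); }

  // Offer the message to each dispatcher in order until one consumes it;
  // returns false if nobody did (the "unhandled message" case).
  bool deliver(ToyMessage* m) {
    for (ToyDispatcher* d : dispatchers)
      if (d->ms_dispatch(m)) return true;
    return false;
  }
};

constexpr int TOY_MSG_OSD_OPREPLY = 1;  // hypothetical message type ids
constexpr int TOY_MSG_OSD_MAP = 2;

// Mimics Objecter: consumes op replies, only peeks at OSD maps.
struct ToyObjecter : ToyDispatcher {
  int maps_seen = 0;
  bool ms_dispatch(ToyMessage* m) override {
    switch (m->type) {
      case TOY_MSG_OSD_OPREPLY:
        m->handled_by = "objecter";
        return true;
      case TOY_MSG_OSD_MAP:
        ++maps_seen;
        return false;  // let later dispatchers see it too
    }
    return false;
  }
};

// A later dispatcher that accepts anything it is offered.
struct ToyCatchAll : ToyDispatcher {
  bool ms_dispatch(ToyMessage* m) override {
    m->handled_by = "catch-all";
    return true;
  }
};
```

The head/tail registration order in RadosClient::connect therefore decides priority: mgrclient is asked first, and objecter after it.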

Notes on Objecter

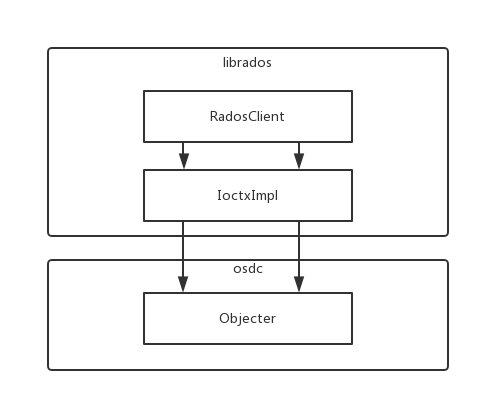

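In librados, RadosClient is the core management class. It handles management at the rados and pool levels, and creates and maintains components such as Objecter. IoCtxImpl holds the context of a single pool and is responsible for operations on that pool. The osdc module packages requests and sends them through the network module; its core class is Objecter.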