Spark Storage System: BlockInfoManager

1 Basic Concepts of Block Locks

BlockInfoManager is one of the subcomponents inside BlockManager. It manages Block locks with shared and exclusive locks: the read lock is shared, and the write lock is exclusive.

BlockInfoManager has the following member attributes:

- infos: a cache of the mapping from BlockId to BlockInfo

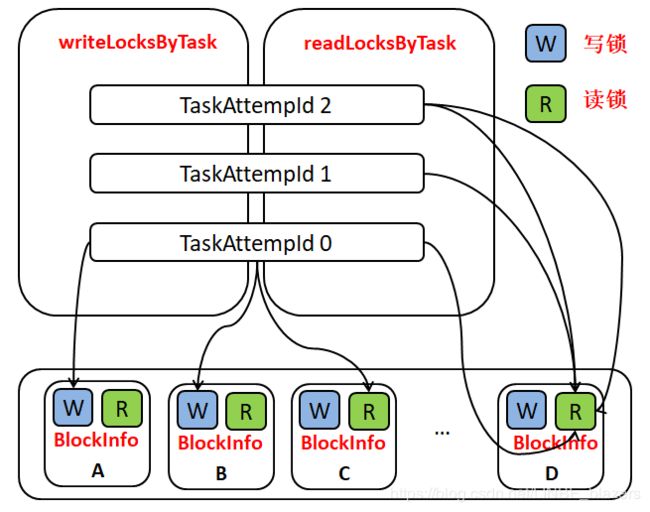

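- writeLocksByTask: the mapping from each task attempt's identifier, TaskAttemptId, to the write locks on Blocks that the attempt has acquired. The relationship between a TaskAttemptId and write locks is one-to-many: a single task attempt holds write locks on zero or more Blocks.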

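- readLocksByTask: the mapping from each task attempt's identifier, TaskAttemptId, to the read locks on Blocks that the attempt has acquired. The relationship between a TaskAttemptId and read locks is also one-to-many: a single task attempt holds read locks on zero or more Blocks, and the number of times it holds the read lock on the same Block is recorded.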

A figure can illustrate how BlockInfoManager manages Block locks:

The figure shows the following:

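- The task attempt thread identified by TaskAttemptId 0 holds the write locks on BlockInfo A and BlockInfo B, and the read locks on BlockInfo C and BlockInfo D

- The task attempt thread identified by TaskAttemptId 1 holds the read lock on BlockInfo D

- The task attempt thread identified by TaskAttemptId 2 has acquired the read lock on BlockInfo D several times, which shows that a Block's read lock is reentrant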

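Comparing how the three task attempt threads in the figure hold locks, we can see that a task attempt thread may simultaneously hold write locks on zero or more different Blocks and read locks on zero or more different Blocks, but it cannot hold both the read lock and the write lock on the same Block. The read lock is reentrant; the write lock is not.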
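The rules above can be checked against a toy model. The following is a minimal, single-threaded sketch, not Spark's code: `LockRulesSketch`, `Info`, `tryRead`, and `tryWrite` are hypothetical names, block ids are plain strings, and the task attempt id is passed explicitly instead of being read from TaskContext.

```scala
import scala.collection.mutable

// Hypothetical, simplified stand-ins for BlockInfo and the two lock maps.
object LockRulesSketch {
  val NoWriter: Long = -1L // plays the role of BlockInfo.NO_WRITER

  final class Info {
    var readerCount: Int = 0
    var writerTask: Long = NoWriter
  }

  val infos = mutable.Map.empty[String, Info]
  // task attempt id -> (block id -> number of read locks held on that block)
  val readLocksByTask = mutable.Map.empty[Long, mutable.Map[String, Int]]
  // task attempt id -> ids of the blocks write-locked by that attempt
  val writeLocksByTask = mutable.Map.empty[Long, mutable.Set[String]]

  // A read lock is granted whenever nobody holds the write lock (shared, reentrant).
  def tryRead(task: Long, block: String): Boolean = {
    val info = infos.getOrElseUpdate(block, new Info)
    if (info.writerTask == NoWriter) {
      info.readerCount += 1
      val counts = readLocksByTask.getOrElseUpdate(task, mutable.Map.empty[String, Int])
      counts(block) = counts.getOrElse(block, 0) + 1
      true
    } else false
  }

  // A write lock is granted only when there is no writer and no reader (exclusive).
  def tryWrite(task: Long, block: String): Boolean = {
    val info = infos.getOrElseUpdate(block, new Info)
    if (info.writerTask == NoWriter && info.readerCount == 0) {
      info.writerTask = task
      writeLocksByTask.getOrElseUpdate(task, mutable.Set.empty[String]) += block
      true
    } else false
  }
}
```

Replaying the figure's scenario on this model: task 0 can write-lock A and B, tasks 0, 1, and 2 can all read-lock D (task 2 twice), but a second `tryWrite` on A fails even for task 0, and so does a `tryRead` on A.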

2 Implementation of Block Locks

2.1 registerTask

Registers a TaskAttemptId.

    // org.apache.spark.storage.BlockInfoManager
    def registerTask(taskAttemptId: TaskAttemptId): Unit = synchronized {
      // A task attempt may be registered only once.
      require(!readLocksByTask.contains(taskAttemptId),
        s"Task attempt $taskAttemptId is already registered")
      // Start with an empty multiset that counts read locks per BlockId.
      readLocksByTask(taskAttemptId) = ConcurrentHashMultiset.create()
    }

2.2 currentTaskAttemptId

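Returns the TaskAttemptId of the task attempt currently running, taken from the task context (TaskContext). If the current thread has no TaskContext (TaskContext.get() returns null), it returns BlockInfo.NON_TASK_WRITER.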

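    // org.apache.spark.storage.BlockInfoManager
    private def currentTaskAttemptId: TaskAttemptId = {
      // Option(null) is None, so threads without a TaskContext fall back to NON_TASK_WRITER.
      Option(TaskContext.get()).map(_.taskAttemptId()).getOrElse(BlockInfo.NON_TASK_WRITER)
    }

2.3 lockForReading: acquiring a read lock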

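    // org.apache.spark.storage.BlockInfoManager
    def lockForReading(
        blockId: BlockId,
        blocking: Boolean = true): Option[BlockInfo] = synchronized {
      logTrace(s"Task $currentTaskAttemptId trying to acquire read lock for $blockId")
      do {
        infos.get(blockId) match {
          case None => return None // unknown block: nothing to lock
          case Some(info) =>
            // Readers share the block as long as no task holds the write lock.
            if (info.writerTask == BlockInfo.NO_WRITER) {
              info.readerCount += 1
              readLocksByTask(currentTaskAttemptId).add(blockId)
              logTrace(s"Task $currentTaskAttemptId acquired read lock for $blockId")
              return Some(info)
            }
        }
        if (blocking) {
          wait() // sleep until another thread calls notifyAll()
        }
      } while (blocking)
      None
    }

- 1) Look up the BlockInfo for the BlockId in infos. If infos has no matching BlockInfo, return None; otherwise go to the next step

- 2) If no task attempt thread holds the Block's write lock, the current task attempt thread takes the read lock and returns the BlockInfo; otherwise go to the next step

- 3) If blocking is allowed (blocking is true), the current thread waits until the thread holding the write lock releases it and wakes the current thread. If that thread never releases the write lock, the current thread starves: it may wait indefinitely. If blocking is false, the method returns None immediately.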
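The wait-and-retry loop in these steps can be sketched for a single block. This is an illustration under stated assumptions, not Spark's implementation: `ReadLockSketch`, `holdWrite`, and `releaseWrite` are hypothetical names, and the sketch tracks only one block and ignores per-task bookkeeping.

```scala
// Hypothetical single-block model of the read-lock path.
final class ReadLockSketch {
  private val NoWriter = -1L
  private var writerTask: Long = NoWriter
  private var readerCount: Int = 0

  // Test helper (assumption): pretend some task already holds the write lock.
  def holdWrite(task: Long): Unit = synchronized { writerTask = task }

  def releaseWrite(): Unit = synchronized {
    writerTask = NoWriter
    notifyAll() // wake every thread parked in wait()
  }

  def readers: Int = synchronized { readerCount }

  def lockForReading(blocking: Boolean = true): Boolean = synchronized {
    var acquired = false
    var keepTrying = true
    while (!acquired && keepTrying) {
      if (writerTask == NoWriter) {
        readerCount += 1
        acquired = true
      } else if (blocking) {
        wait() // gives up the monitor until notifyAll(), then re-checks
      } else {
        keepTrying = false // non-blocking callers fail fast
      }
    }
    acquired
  }
}
```

The condition is re-checked after every wake-up because `notifyAll()` wakes all waiters, and another thread may have taken the write lock in the meantime.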

2.4 lockForWriting: acquiring a write lock

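    // org.apache.spark.storage.BlockInfoManager
    def lockForWriting(
        blockId: BlockId,
        blocking: Boolean = true): Option[BlockInfo] = synchronized {
      logTrace(s"Task $currentTaskAttemptId trying to acquire write lock for $blockId")
      do {
        infos.get(blockId) match {
          case None => return None // unknown block: nothing to lock
          case Some(info) =>
            // The write lock is exclusive: no other writer and no readers at all.
            if (info.writerTask == BlockInfo.NO_WRITER && info.readerCount == 0) {
              info.writerTask = currentTaskAttemptId
              writeLocksByTask.addBinding(currentTaskAttemptId, blockId)
              logTrace(s"Task $currentTaskAttemptId acquired write lock for $blockId")
              return Some(info)
            }
        }
        if (blocking) {
          wait() // sleep until another thread calls notifyAll()
        }
      } while (blocking)
      None
    }

- 1) Look up the BlockInfo for the BlockId in infos. If infos has no matching BlockInfo, return None; otherwise go to the next step

- 2) If no task attempt thread holds the Block's write lock and no thread is reading the Block, the current task attempt thread takes the write lock and returns the BlockInfo; otherwise go to the next step. This condition (no writer and no readers) is also why a task attempt thread cannot hold both the read lock and the write lock on the same Block

- 3) If blocking is allowed, the current thread waits until a thread that holds a lock on the Block releases it and wakes the current thread. If the lock is never released, the current thread starves: it may wait indefinitely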

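Together, lockForReading and lockForWriting make a write lock mutually exclusive with other write locks and with read locks, while allowing read locks to be shared. Both methods also offer a blocking mode. When contention for a lock is low, or locks are held only briefly, blocking can improve performance. If a thread failed as soon as it found the lock taken, and the lock were released a moment later, the thread would have failed to make progress for no good reason. If the thread is allowed to wait, it soon finds that it can acquire the lock and carries on.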
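The difference between the two modes can be seen in a small sketch of the write path. It is a hedged illustration, not Spark's code: `BlockLockSketch` and `unlockWrite` are hypothetical names, only one block is modeled, and the task id is an explicit parameter.

```scala
// Hypothetical single-block write lock with blocking and fail-fast modes.
final class BlockLockSketch {
  private val NoWriter = -1L
  private var writerTask: Long = NoWriter
  private var readerCount: Int = 0

  private def free: Boolean = writerTask == NoWriter && readerCount == 0

  def lockForWriting(task: Long, blocking: Boolean = true): Boolean = synchronized {
    // Blocking callers wait until the block is free; non-blocking callers test once.
    while (blocking && !free) wait()
    if (free) { writerTask = task; true } else false
  }

  def unlockWrite(task: Long): Unit = synchronized {
    require(writerTask == task, s"Task $task does not hold the write lock")
    writerTask = NoWriter
    notifyAll() // let waiting threads re-check the condition
  }
}
```

With `blocking = false` a second writer gets `false` immediately and has to decide what to do next; with the default it simply resumes once `unlockWrite` has run.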

2.5 get: getting the BlockInfo for a BlockId

    // org.apache.spark.storage.BlockInfoManager
    private[storage] def get(blockId: BlockId): Option[BlockInfo] = synchronized {
      infos.get(blockId)
    }

2.6 unlock: releasing the lock on the Block for a BlockId

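    // org.apache.spark.storage.BlockInfoManager
    def unlock(blockId: BlockId): Unit = synchronized {
      logTrace(s"Task $currentTaskAttemptId releasing lock for $blockId")
      val info = get(blockId).getOrElse {
        throw new IllegalStateException(s"Block $blockId not found")
      }
      if (info.writerTask != BlockInfo.NO_WRITER) {
        // The block is write-locked: release the write lock.
        info.writerTask = BlockInfo.NO_WRITER
        writeLocksByTask.removeBinding(currentTaskAttemptId, blockId)
      } else {
        // Otherwise release one read lock held by the current task attempt.
        assert(info.readerCount > 0, s"Block $blockId is not locked for reading")
        info.readerCount -= 1
        val countsForTask = readLocksByTask(currentTaskAttemptId)
        // remove() returns the count before removal, hence the "- 1".
        val newPinCountForTask: Int = countsForTask.remove(blockId, 1) - 1
        assert(newPinCountForTask >= 0,
          s"Task $currentTaskAttemptId release lock on block $blockId more times than it acquired it")
      }
      notifyAll() // wake threads waiting in lockForReading or lockForWriting
    }

- 1) Get the BlockInfo for the BlockId

- 2) If the Block's write lock is held (the code checks only that writerTask is not NO_WRITER and assumes the caller is the holder), release the write lock

- 3) Otherwise, release a read lock on the Block. Releasing a read lock simply decrements the number of read locks the current task attempt thread holds on the Block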
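The read-lock bookkeeping in step 3 can be sketched as follows. This is a simplified model under assumptions: `UnlockSketch` and `pins` are hypothetical names, a plain map of counters stands in for the multiset used by readLocksByTask, and the sketch rejects an over-release with `require` before touching any state.

```scala
import scala.collection.mutable

// Hypothetical single-block model of unlock() for read locks.
final class UnlockSketch {
  private var readerCount: Int = 0
  // task attempt id -> number of read locks that attempt holds on this block
  private val readPins = mutable.Map.empty[Long, Int]

  def lockForReading(task: Long): Unit = synchronized {
    readerCount += 1
    readPins(task) = readPins.getOrElse(task, 0) + 1
  }

  def unlock(task: Long): Unit = synchronized {
    val held = readPins.getOrElse(task, 0)
    require(held > 0, s"Task $task released the block more times than it acquired it")
    readerCount -= 1          // one fewer reader on the block overall
    readPins(task) = held - 1 // one fewer pin for this particular task
    notifyAll()               // a waiting writer may now be able to proceed
  }

  def readers: Int = synchronized { readerCount }
  def pins(task: Long): Int = synchronized { readPins.getOrElse(task, 0) }
}
```

Because the read lock is reentrant, a task that locked the block twice has to unlock it twice before its pin count returns to zero.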

2.7 downgradeLock: lock downgrade

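    // org.apache.spark.storage.BlockInfoManager
    def downgradeLock(blockId: BlockId): Unit = synchronized {
      logTrace(s"Task $currentTaskAttemptId downgrading write lock for $blockId")
      val info = get(blockId).get
      // Only the task attempt that holds the write lock may downgrade it.
      require(info.writerTask == currentTaskAttemptId,
        s"Task $currentTaskAttemptId tried to downgrade a write lock that it does not hold on" +
          s" block $blockId")
      unlock(blockId)
      // Still inside synchronized, so no other thread can lock the block in between.
      val lockOutcome = lockForReading(blockId, blocking = false)
      assert(lockOutcome.isDefined)
    }

- 1) Get the BlockInfo for the BlockId and check that the current task attempt holds its write lock

- 2) Call unlock to release the write lock that the current task attempt thread holds on the Block for the BlockId

- 3) Because the write lock on the Block has just been released, acquire the Block's read lock in non-blocking mode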
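Why the non-blocking read in step 3 cannot fail is easier to see in a sketch. The following is an illustrative single-block model, with hypothetical names (`DowngradeSketch`, `tryWrite`, `tryRead`, `state`) and an explicit task id; it relies on JVM monitors being reentrant, so the nested `synchronized` call does not deadlock.

```scala
// Hypothetical single-block model of a write-to-read downgrade.
final class DowngradeSketch {
  private val NoWriter = -1L
  private var writerTask: Long = NoWriter
  private var readerCount: Int = 0

  def tryWrite(task: Long): Boolean = synchronized {
    if (writerTask == NoWriter && readerCount == 0) { writerTask = task; true } else false
  }

  def tryRead(): Boolean = synchronized {
    if (writerTask == NoWriter) { readerCount += 1; true } else false
  }

  def downgrade(task: Long): Unit = synchronized {
    require(writerTask == task, s"Task $task does not hold the write lock")
    writerTask = NoWriter // release the write lock
    notifyAll()           // waiters wake up only after this monitor is released
    val ok = tryRead()    // same monitor, re-entered: no other thread ran in between
    assert(ok)
  }

  def state: (Long, Int) = synchronized { (writerTask, readerCount) }
}
```

After the downgrade the block has no writer and one reader, so other readers are admitted while writers are still kept out.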

2.8 lockNewBlockForWriting: acquiring the write lock when writing a new Block

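    // org.apache.spark.storage.BlockInfoManager
    def lockNewBlockForWriting(
        blockId: BlockId,
        newBlockInfo: BlockInfo): Boolean = synchronized {
      logTrace(s"Task $currentTaskAttemptId trying to put $blockId")
      lockForReading(blockId) match {
        case Some(info) =>
          // The block already exists; the read lock acquired above stays held.
          false
        case None =>
          // The block is new: register it and take its write lock.
          infos(blockId) = newBlockInfo
          lockForWriting(blockId)
          true
      }
    }

- 1) Try to acquire the read lock on the Block for the BlockId

- 2) If the previous step obtains the read lock, the Block for the BlockId already exists. This happens when several threads race to write the same Block and another thread got there first. The current thread has no need to take the write lock and simply returns false (the read lock acquired in step 1 is not released here)

- 3) If step 1 does not obtain the read lock, the Block for the BlockId does not exist yet. In this case the current thread first puts the mapping from the BlockId to the new BlockInfo into infos, then acquires the write lock on the Block, and finally returns true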
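The "first writer wins" behavior of these steps can be sketched with a small map-based model. It is an assumption-laden illustration rather than Spark's code: `NewBlockSketch`, `releaseWrite`, and `readerCount` are hypothetical names, block ids are strings, and the task id is passed explicitly.

```scala
import scala.collection.mutable

// Hypothetical model of registering a new block under a write lock.
final class NewBlockSketch {
  private val NoWriter = -1L
  private final class Info { var writerTask: Long = NoWriter; var readerCount: Int = 0 }
  private val infos = mutable.Map.empty[String, Info]

  def lockNewBlockForWriting(task: Long, blockId: String): Boolean = synchronized {
    var outcome: Option[Boolean] = None
    while (outcome.isEmpty) {
      infos.get(blockId) match {
        case None =>
          // Nobody registered the block yet: create it already write-locked.
          val info = new Info
          info.writerTask = task
          infos(blockId) = info
          outcome = Some(true)
        case Some(info) if info.writerTask == NoWriter =>
          // Someone else won the race and finished writing: keep a read lock.
          info.readerCount += 1
          outcome = Some(false)
        case Some(_) =>
          wait() // the winner is still writing; wait and look again
      }
    }
    outcome.get
  }

  def releaseWrite(task: Long, blockId: String): Unit = synchronized {
    infos.get(blockId).filter(_.writerTask == task).foreach(info => info.writerTask = NoWriter)
    notifyAll()
  }

  def readerCount(blockId: String): Int = synchronized {
    infos.get(blockId).map(_.readerCount).getOrElse(0)
  }
}
```

A caller that gets `false` back therefore already holds a read lock and can read the block that the winning thread produced.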

2.9 removeBlock: removing the BlockInfo for a BlockId

    // org.apache.spark.storage.BlockInfoManager
    def removeBlock(blockId: BlockId): Unit = synchronized {
      logTrace(s"Task $currentTaskAttemptId trying to remove block $blockId")
      infos.get(blockId) match {
        case Some(blockInfo) =>
          // Only the task attempt that holds the write lock may remove the block.
          if (blockInfo.writerTask != currentTaskAttemptId) {
            throw new IllegalStateException(
              s"Task $currentTaskAttemptId called remove() on block $blockId without a write lock")
          } else {
            infos.remove(blockId)
            blockInfo.readerCount = 0
            blockInfo.writerTask = BlockInfo.NO_WRITER
            writeLocksByTask.removeBinding(currentTaskAttemptId, blockId)
          }
        case None =>
          throw new IllegalArgumentException(
            s"Task $currentTaskAttemptId called remove() on non-existent block $blockId")
      }
      notifyAll() // waiters wake up and find that the block is gone
    }

- 1) Get the BlockInfo for the BlockId

- 2) Only if the task attempt currently writing the BlockInfo is the current thread does the current thread have the right to remove the BlockInfo. Removal consists of the following:

  - ① Remove the BlockInfo from infos

  - ② Reset the BlockInfo's reader count to zero

  - ③ Set the BlockInfo's writerTask to BlockInfo.NO_WRITER

  - ④ Clear the association between the task attempt and the BlockId

- 3) Call notifyAll() to wake the threads waiting for a lock, including those waiting on the Block for this BlockId
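The ownership check and cleanup in these steps can be sketched with a map-based model. As with the other sketches, this is illustrative only: `RemoveSketch`, `putWriteLocked`, and `contains` are hypothetical names, block ids are strings, and the task id is an explicit parameter.

```scala
import scala.collection.mutable

// Hypothetical model of removing a block that the caller has write-locked.
final class RemoveSketch {
  private val NoWriter = -1L
  private final class Info { var writerTask: Long = NoWriter; var readerCount: Int = 0 }
  private val infos = mutable.Map.empty[String, Info]
  private val writeLocksByTask = mutable.Map.empty[Long, mutable.Set[String]]

  // Test helper (assumption): register a block that `task` has write-locked.
  def putWriteLocked(task: Long, blockId: String): Unit = synchronized {
    val info = new Info
    info.writerTask = task
    infos(blockId) = info
    writeLocksByTask.getOrElseUpdate(task, mutable.Set.empty[String]) += blockId
  }

  def contains(blockId: String): Boolean = synchronized { infos.contains(blockId) }

  def removeBlock(task: Long, blockId: String): Unit = synchronized {
    infos.get(blockId) match {
      case Some(info) if info.writerTask == task =>
        infos.remove(blockId)                            // 1: drop the mapping
        info.readerCount = 0                             // 2: clear the reader count
        info.writerTask = NoWriter                       // 3: clear the writer
        writeLocksByTask.get(task).foreach(_ -= blockId) // 4: drop the task binding
      case Some(_) =>
        throw new IllegalStateException(s"Task $task removed $blockId without a write lock")
      case None =>
        throw new IllegalArgumentException(s"Task $task removed non-existent block $blockId")
    }
    notifyAll() // waiters re-check and now find no entry for the block
  }
}
```

Requiring the write lock guarantees that no reader is using the block at the moment its metadata disappears.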