Recognizing handwritten digits with TensorFlow and the LeNet-5 model

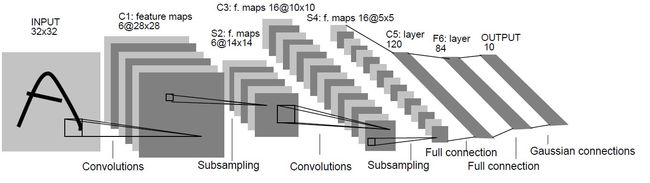

LeNet-5 is a classic convolutional neural network model. Its structure is as follows:

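Layer 1: convolutional layer

The input is the raw image, of size 32x32x1. The convolution filter is 5x5 with a depth of 6, no zero padding is used, and the stride is 1. The output is therefore 28x28 with a depth of 6.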
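The output size follows from the usual formula for a filter applied without padding: (input - filter) / stride + 1. Here is a minimal sketch of that arithmetic; the function name `valid_output_size` is my own and not part of any library.

```python
def valid_output_size(input_size, filter_size, stride=1):
    """Output width/height of a convolution or pooling layer without zero padding."""
    return (input_size - filter_size) // stride + 1


# Layer 1: 32x32 input, 5x5 filter, stride 1
print(valid_output_size(32, 5, 1))  # 28
```

The same helper covers the pooling layers, since a 2x2 window with stride 2 is just another filter without padding.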

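Layer 2: pooling layer

The input is the output of layer 1, a 28x28x6 matrix. This layer's filter is 2x2 with a stride of 2 in both height and width, so the output is 14x14x6.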
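To make the pooling step concrete, here is a small NumPy sketch of 2x2 pooling with stride 2. It assumes max pooling, which is what the TensorFlow code later in this post uses; the helper name `max_pool_2x2` is hypothetical.

```python
import numpy as np


def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2 on an (H, W, C) array; H and W must be even."""
    h, w, c = feature_map.shape
    # Split rows and columns into non-overlapping 2x2 blocks, then take each block's maximum.
    blocks = feature_map.reshape(h // 2, 2, w // 2, 2, c)
    return blocks.max(axis=(1, 3))


x = np.random.rand(28, 28, 6)
pooled = max_pool_2x2(x)
print(pooled.shape)  # (14, 14, 6)
```

Each output value is the largest of four neighbouring inputs in the same channel, so the depth stays at 6 while height and width are halved.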

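Layer 3: convolutional layer

The input is 14x14x6. The filter is 5x5 with a depth of 16, no zero padding is used, and the stride is 1, so the output matrix is 10x10x16.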

Layer 4: pooling layer

The input matrix is 10x10x16, the filter is 2x2, and the stride is 2, so this layer outputs 5x5x16.

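Layer 5: convolutional layer

The input matrix is 5x5x16. Because the filter is also 5x5, it covers the whole input, so this layer is effectively no different from a fully connected layer. The TensorFlow program later in this post also treats it as a fully connected layer. This layer has 120 output nodes.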
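The equivalence can be checked numerically. The sketch below uses random NumPy arrays (the shapes come from the text, the values are arbitrary) and compares a 5x5 convolution over a 5x5x16 input with a matrix multiplication over the flattened input.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.standard_normal((5, 5, 16))       # input feature map
w = rng.standard_normal((5, 5, 16, 120))  # 120 filters, each 5x5x16

# Convolution without padding: the filter fits in exactly one position,
# so each output channel is a single weighted sum over all 5*5*16 inputs.
conv_out = np.einsum('hwc,hwco->o', x, w)

# Fully connected view: flatten the input and the weights in the same order.
fc_out = x.reshape(-1) @ w.reshape(-1, 120)

print(conv_out.shape, np.allclose(conv_out, fc_out))
```

Both computations produce the same 120 values, which is why treating this layer as a 400-to-120 fully connected layer loses nothing.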

Layer 6: fully connected layer

This layer has 120 input nodes and 84 output nodes.

Layer 7: fully connected layer

This layer has 84 input nodes and 10 output nodes.
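As a rough sanity check on the structure above, the trainable parameters can be counted by hand. This sketch assumes every filter is connected to all input channels, as described in the text, and that the pooling layers have no trainable parameters; the helper names are my own.

```python
def conv_params(filter_size, in_depth, out_depth):
    """Weights plus one bias per output channel."""
    return filter_size * filter_size * in_depth * out_depth + out_depth


def fc_params(n_in, n_out):
    """Weights plus one bias per output node."""
    return n_in * n_out + n_out


layers = {
    'layer1-conv': conv_params(5, 1, 6),     # 156
    'layer3-conv': conv_params(5, 6, 16),    # 2416
    'layer5-conv': conv_params(5, 16, 120),  # 48120
    'layer6-fc': fc_params(120, 84),         # 10164
    'layer7-fc': fc_params(84, 10),          # 850
}
total = sum(layers.values())
print(total)  # 61706
```

Most of the parameters sit in layer 5, which is another reason it is natural to think of it as a fully connected layer.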

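The code is below. Because MNIST handwritten digits are 28x28x1 rather than 32x32x1, I made one small change: the first convolutional layer uses zero padding, so its output is 28x28x6.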
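Before the full listing, here is a quick check that the shapes still work out with this change. The sketch uses the output-size rules for TensorFlow's 'SAME' and 'VALID' padding modes; the function name `output_size` and the layer list are my own shorthand for the network in this post.

```python
import math


def output_size(input_size, filter_size, stride, padding):
    """Spatial output size for TensorFlow-style 'SAME' or 'VALID' padding."""
    if padding == 'SAME':
        return math.ceil(input_size / stride)
    if padding == 'VALID':
        return math.ceil((input_size - filter_size + 1) / stride)
    raise ValueError('padding must be SAME or VALID')


size = 28  # MNIST images are 28x28
trace = []
for name, filter_size, stride, padding in [
    ('conv1', 5, 1, 'SAME'),
    ('pool1', 2, 2, 'VALID'),
    ('conv2', 5, 1, 'VALID'),
    ('pool2', 2, 2, 'VALID'),
]:
    size = output_size(size, filter_size, stride, padding)
    trace.append((name, size))

flattened_nodes = size * size * 16  # depth of conv2 is 16
print(trace)
print(flattened_nodes)  # 400
```

With zero padding in the first layer, everything from the first pooling layer onward has the same size as in the original 32x32 network, so the first fully connected layer still sees 5x5x16 = 400 inputs.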

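import numpy as np
import tensorflow as tf  # written against the TensorFlow 1.x API
from tensorflow.examples.tutorials.mnist import input_data

INPUT_NODE = 784
OUTPUT_NODE = 10

IMAGE_SIZE = 28
NUM_CHANNELS = 1  # MNIST images are grayscale; used for the first filter's input depth
NUM_LAYERS = 1    # channel dimension of the input placeholder in train()
NUM_LABELS = 10

CONV1_DEEP = 6    # depth of the first convolutional layer
CONV1_SIZE = 5    # filter size of the first convolutional layer
CONV2_DEEP = 16   # depth of the second convolutional layer
CONV2_SIZE = 5    # filter size of the second convolutional layer
FC1_SIZE = 120    # nodes in the first fully connected layer
FC2_SIZE = 84     # nodes in the second fully connected layer

BATCH_SIZE = 100
LEARNING_RATE_BASE = 0.01
LEARNING_RATE_DECAY = 0.99
REGULARIZATION_RATE = 0.0001
TRAINING_STEPS = 10000
MOVING_AVERAGE_DECAY = 0.99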

def inference(input_tensor, train, regularizer):
    # Layer 1: 5x5 convolution, depth 6, zero padding: 28x28x1 -> 28x28x6.
    with tf.variable_scope('layer1-conv1'):
        conv1_weights = tf.get_variable(
            "weight", [CONV1_SIZE, CONV1_SIZE, NUM_CHANNELS, CONV1_DEEP],
            initializer=tf.truncated_normal_initializer(stddev=0.1))
        conv1_biases = tf.get_variable(
            "biases", [CONV1_DEEP], initializer=tf.constant_initializer(0.0))
        conv1 = tf.nn.conv2d(input_tensor, conv1_weights, strides=[1, 1, 1, 1], padding='SAME')
        relu1 = tf.nn.relu(tf.nn.bias_add(conv1, conv1_biases))

    # Layer 2: 2x2 max pooling, stride 2: 28x28x6 -> 14x14x6.
    with tf.variable_scope('layer2-pool1'):
        pool1 = tf.nn.max_pool(relu1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='VALID')

    # Layer 3: 5x5 convolution, depth 16, no padding: 14x14x6 -> 10x10x16.
    with tf.variable_scope('layer3-conv2'):
        conv2_weights = tf.get_variable(
            "weight", [CONV2_SIZE, CONV2_SIZE, CONV1_DEEP, CONV2_DEEP],
            initializer=tf.truncated_normal_initializer(stddev=0.1))
        conv2_biases = tf.get_variable(
            "biases", [CONV2_DEEP], initializer=tf.constant_initializer(0.0))
        conv2 = tf.nn.conv2d(pool1, conv2_weights, strides=[1, 1, 1, 1], padding='VALID')
        relu2 = tf.nn.relu(tf.nn.bias_add(conv2, conv2_biases))

    # Layer 4: 2x2 max pooling, stride 2: 10x10x16 -> 5x5x16.
    with tf.variable_scope('layer4-pool2'):
        pool2 = tf.nn.max_pool(relu2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='VALID')

    # Flatten the 5x5x16 output into one vector per example for the fully connected layers.
    pool_shape = pool2.get_shape().as_list()
    print(pool_shape)  # debug output: static shape of the last pooling layer
    nodes = pool_shape[1] * pool_shape[2] * pool_shape[3]
    reshaped = tf.reshape(pool2, [-1, nodes])  # -1 keeps the batch dimension flexible

    # Layer 5: treated as a fully connected layer, 400 -> 120.
    with tf.variable_scope('layer5-fc1'):
        fc1_weights = tf.get_variable(
            "weights", [nodes, FC1_SIZE], initializer=tf.truncated_normal_initializer(stddev=0.1))
        # Add the weight regularization term to the 'losses' collection.
        if regularizer is not None:
            tf.add_to_collection("losses", regularizer(fc1_weights))
        fc1_biases = tf.get_variable("bias", [FC1_SIZE], initializer=tf.constant_initializer(0.1))
        fc1 = tf.nn.relu(tf.matmul(reshaped, fc1_weights) + fc1_biases)
        # Dropout (keep probability 0.5) is applied only while training.
        if train:
            fc1 = tf.nn.dropout(fc1, 0.5)

    # Layer 6: fully connected, 120 -> 84.
    with tf.variable_scope('layer6-fc2'):
        fc2_weights = tf.get_variable(
            "weights", [FC1_SIZE, FC2_SIZE], initializer=tf.truncated_normal_initializer(stddev=0.1))
        if regularizer is not None:
            tf.add_to_collection("losses", regularizer(fc2_weights))
        fc2_biases = tf.get_variable("bias", [FC2_SIZE], initializer=tf.constant_initializer(0.1))
        fc2 = tf.nn.relu(tf.matmul(fc1, fc2_weights) + fc2_biases)
        if train:
            fc2 = tf.nn.dropout(fc2, 0.5)

    # Layer 7: fully connected, 84 -> 10. Returns raw logits; softmax is applied in the loss.
    with tf.variable_scope("layer7-fc3"):
        fc3_weights = tf.get_variable(
            "weights", [FC2_SIZE, OUTPUT_NODE], initializer=tf.truncated_normal_initializer(stddev=0.1))
        if regularizer is not None:
            tf.add_to_collection("losses", regularizer(fc3_weights))
        fc3_biases = tf.get_variable("bias", [OUTPUT_NODE], initializer=tf.constant_initializer(0.1))
        logit = tf.matmul(fc2, fc3_weights) + fc3_biases

    return logit

def train(mnist):
    # Inputs: a fixed-size batch of 28x28x1 images and their one-hot labels.
    x = tf.placeholder(tf.float32, [BATCH_SIZE, IMAGE_SIZE, IMAGE_SIZE, NUM_LAYERS], name='x-input')
    y_ = tf.placeholder(tf.float32, [None, OUTPUT_NODE], name='y-input')

    regularizer = tf.contrib.layers.l2_regularizer(REGULARIZATION_RATE)
    y = inference(x, True, regularizer)
    global_step = tf.Variable(0, trainable=False)

    # Moving averages of the trainable variables, updated together with every training step.
    variable_averages = tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DECAY, global_step)
    variable_averages_op = variable_averages.apply(tf.trainable_variables())

    # The labels are one-hot, so convert them to class indices for the sparse loss.
    cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(labels=tf.argmax(y_, 1), logits=y)
    cross_entropy_mean = tf.reduce_mean(cross_entropy)
    # Total loss = mean cross entropy + L2 terms collected in inference().
    loss = cross_entropy_mean + tf.add_n(tf.get_collection('losses'))

    # Staircase decay: the learning rate drops by LEARNING_RATE_DECAY once per epoch.
    learning_rate = tf.train.exponential_decay(
        LEARNING_RATE_BASE, global_step=global_step,
        decay_steps=mnist.train.num_examples // BATCH_SIZE,
        decay_rate=LEARNING_RATE_DECAY, staircase=True)
    train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss, global_step=global_step)

    # Group the gradient update and the moving-average update into one op.
    with tf.control_dependencies([train_step, variable_averages_op]):
        train_op = tf.no_op(name='train')

    # Accuracy on the current training batch (dropout is active because train=True).
    correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

    saver = tf.train.Saver()  # created for checkpointing, but this script never calls saver.save()

    with tf.Session() as sess:
        tf.global_variables_initializer().run()
        for i in range(TRAINING_STEPS):
            xs, ys = mnist.train.next_batch(BATCH_SIZE)
            # next_batch returns flat 784-element vectors; reshape them to images.
            reshaped_xs = np.reshape(xs, (BATCH_SIZE, IMAGE_SIZE, IMAGE_SIZE, NUM_LAYERS))
            _, loss_value, accu, step = sess.run(
                [train_op, loss, accuracy, global_step], feed_dict={x: reshaped_xs, y_: ys})
            if i % 1000 == 0:
                print('step', step, 'loss is', loss_value)
                print('step', step, 'accuracy is', accu)

def main(argv=None):
    # Directory where the MNIST files are read from; adjust the path for your machine.
    mnist = input_data.read_data_sets("C:/Users/xuwei/Desktop", one_hot=True)
    train(mnist)


if __name__ == '__main__':
    main()

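Training as a whole is fairly quick, and after 10,000 steps the accuracy is around 98% (this is the value printed by the script, which is measured on the current training batch).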
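For reference, the learning rate over those 10,000 steps can be reproduced by hand. This is a sketch of the staircase exponential decay configured in the script, assuming the MNIST training split holds 55,000 examples, which gives 550 steps per decay with a batch size of 100; the function name is my own.

```python
def staircase_learning_rate(step, base=0.01, decay_rate=0.99, decay_steps=550):
    """Learning rate after `step` updates with staircase exponential decay."""
    # Integer division makes the rate drop once every `decay_steps` updates.
    return base * decay_rate ** (step // decay_steps)


for step in (0, 549, 550, 9999):
    print(step, staircase_learning_rate(step))
```

Under that assumption the rate only decays 18 times during training, so it stays fairly close to the base value of 0.01 throughout.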