【项目小结】爬虫学习进阶:获取百度指数历史数据

目录

序言

问题描述

问题解决

登录百度账号

接口参数说明以及注意事项

参数word

参数startDate与endDate

参数area

JS逆向获取解密逻辑

源码

baiduindex_manage.py

baiduindex_config.py

baiduindex_index.py

baiduindex_utils.py

结语

序言

前排致谢longxiaofei@github的repository: spider-BaiduIndex

前几天前室友yy询问笔者是否做过百度指数的爬虫,笔者没有尝试过,随即打开百度指数的网站做了一些分析,发现呈现数据的canvas画布上的数据都被加密了(Figure 1)

考虑到之前在网易云音乐爬虫编写上有过一些JS逆向解密的经验,正好也有一段时间没有写点爬虫了,并不想用借助selenium驱动浏览器对canvas画布上的折线图进行图像识别来获取数据,想借这个机会再试试JS逆向,可是百度的JS实在是又臭又长,熬了一夜再加整了一天也没搞清楚究竟是在哪里发生解密的(PS:笔者很想知道这种解密JS代码的定位到底有什么比较高效率的方法,比如通过断点调试什么的)。

无奈在同性交友网上找找有没有人做过类似的事情,于是找到了spider-BaiduIndex,笔者一直觉得像这种需要编写解密逻辑的爬虫时效性是很差的,只要稍微修改一下密钥或者加密方法就完全不可行了,该repository截至2020-07-29依然可行。但是longxiaofei@github并没有在README里详细描述爬取思路,笔者在借鉴该脚本后,在本文中将详细解析百度指数爬取的思路,并对spider-BaiduIndex进行一定程度的完善。

声明:如有侵权,私信删除!

问题描述

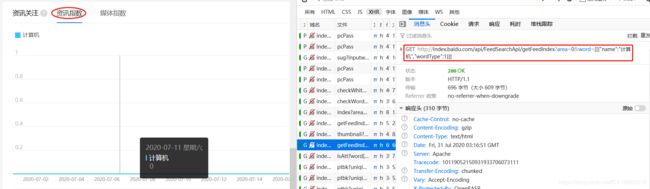

事实上百度指数搜索结果页面上除了搜索指数,媒体指数与资讯指数三张canvas图表上的数据存在被加密的情况(Figure 2 ~ Figure 7)

其他的数据如地域分布以及性别年龄兴趣分布,包括媒体指数具体的新闻来源信息都是没有被加密的(Figure 8 ~ Figure 10),在爬虫获取上是没有太大障碍的,因此不在本文的涉及范围内

以下列出上述提到的各个接口的URL,以及接口需要提交的参数信息示例,本文主要是对前三个接口(搜索指数:API_SEARCH_INDEX,媒体指数:API_NEWS_INDEX,资讯指数:API_FEEDSEARCH_INDEX)数据获取并解密过程的详细说明,文末附上完整源码

API_SEARCH_INDEX = "http://index.baidu.com/api/SearchApi/index?{}".format # 搜索指数查询接口

API_NEWS_INDEX = "http://index.baidu.com/api/NewsApi/getNewsIndex?{}".format # 媒体指数查询接口

API_FEEDSEARCH_INDEX = "http://index.baidu.com/api/FeedSearchApi/getFeedIndex?{}".format # 资讯指数查询接口

API_NEWS_SOURCE = "http://index.baidu.com/api/NewsApi/checkNewsIndex?{}".format # 新闻来源查询接口: 媒体指数数据来源

API_SEARCH_THUMBNAIL = "http://index.baidu.com/api/SearchApi/thumbnail?{}".format # 搜索指数缩略图: 目前我不确定这个数据是用来做什么的, 我猜是搜索指数在很长一段时间内的概况, 因为并不能与Searchapi/index?接口得到的结果匹配上, 而且其指数数据量有几百天, 不太清楚具体是什么

API_INDEX_BY_REGION = "http://index.baidu.com/api/SearchApi/region?{}".format # 搜索指数分地区情况统计

API_INDEX_BY_SOCIAL = "http://index.baidu.com/api/SocialApi/baseAttributes?{}".format # 搜索指数分年龄性别兴趣统计

### API_SEARCH_INDEX参数列表

KWARGS_SEARCH_INDEX = {

"word": json.dumps([[{"name":"围棋","wordType":1}]]), # word: json字符串为二维列表, 第一维是可以比较多组关键词(目前至多5组), 第二维是组合关键词(我理解是指数相加)

"startDate": "2020-01-01", # startDate: 起始日期(包含该日)

"endDate": "2020-06-30", # endDate: 中止日期(包含该日)

"area": 0, # area: 区域编码, 默认0指统计全国指数, 具体省份编号见本文档CODE2PROVINCE

} # * Tips: 可以不传入startDate与endDate而改为days参数, 即获取最近days天的指数

### API_NEWS_INDEX参数列表

KWARGS_NEWS_INDEX = {

"word": json.dumps([[{"name":"围棋","wordType":1}]]), # word: json字符串为二维列表, 第一维是可以比较多组关键词(目前至多5组), 第二维是组合关键词(我理解是指数相加)

"startDate": "2020-01-01", # startDate: 起始日期(包含该日)

"endDate": "2020-06-30", # endDate: 中止日期(包含该日)

"area": 0, # area: 区域编码, 默认0指统计全国指数, 具体省份编号见本文档CODE2PROVINCE

} # * Tips: 可以不传入startDate与endDate而改为days参数, 即获取最近days天的指数

### API_FEEDSEARCH_INDEX参数列表

KWARGS_FEEDSEARCH_INDEX = {

"word": json.dumps([[{"name":"围棋","wordType":1}]]), # word: json字符串为二维列表, 第一维是可以比较多组关键词(目前至多5组), 第二维是组合关键词(我理解是指数相加)

"startDate": "2020-01-01", # startDate: 起始日期(包含该日)

"endDate": "2020-06-30", # endDate: 中止日期(包含该日)

"area": 0, # area: 区域编码, 默认0指统计全国指数, 具体省份编号见本文档CODE2PROVINCE

} # * Tips: 可以不传入startDate与endDate而改为days参数, 即获取最近days天的指数

### API_NEWS_SOURCE参数列表

KWARGS_NEWS_SOURCE = {

"dates[]": "2020-07-02,2020-07-04", # dates[]: 逗号拼接的%Y-%m-%d格式的日期字符串

"type": "day", # type: 默认按日获取

"words": "围棋", # words: 关键词, 该接口应该只能支持单关键词的查询

}

### API_SEARCH_THUMBNAIL参数列表

KWARGS_SEARCH_THUMBNAIL = {

"word": json.dumps([[{"name":"围棋","wordType":1}]]), # word: json字符串为二维列表, 第一维是可以比较多组关键词(目前至多5组), 第二维是组合关键词(我理解是指数相加)

"area": 0, # area: 区域编码, 默认0指统计全国指数, 具体省份编号见本文档CODE2PROVINCE

}

### API_INDEX_BY_REGION参数列表

KWARGS_INDEX_BY_REGION = {

"region": 0, # region: 这个region很可能是可以既可以指省份, 也可以指华东华北这样的大区域的

"word": "围棋,象棋", # word: 多关键词请使用逗号分隔

"startDate": "2020-06-30", # startDate: 起始日期(包含该日)

"endDate": "2020-07-30", # endDate: 中止日期(包含该日)

"days": "", # days: 默认空字符串

}

### API_INDEX_BY_SOCIAL参数列表

KWARGS_INDEX_BY_SOCIAL = {

"wordlist[]": "围棋,象棋", # wordlist[]: 多关键词请使用逗号分隔

}

问题解决

解决获取三种指数问题,本文分以下几点展开:

- 登录百度账号

- 接口参数说明以及注意事项

- JS逆向获取解密逻辑

登录百度账号

百度指数的获取是需要登录百度账号的,为了避开爬虫登录百度账号的问题,我们直接从百度首页进行登录后,随便选取一个域名为www.baidu.com的数据包,取得其中请求头的Cookie信息即可(Figure 11)

为了验证Cookie是否可用,可以使用以下代码进行测试,代码逻辑是通过携带Cookie信息访问百度首页以确定用户名图标,退出登录按钮以及登录按钮是否存在来进行判定,但是随着百度首页的更新可能需要有所修正,之所以选取了三个元素也是增强判定的可信度

import math

import json

import requests

from bs4 import BeautifulSoup

from datetime import datetime,timedelta

from baiduindex_config import *

HEADERS = {

"Host": "index.baidu.com",

"Connection": "keep-alive",

"X-Requested-With": "XMLHttpRequest",

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:78.0) Gecko/20100101 Firefox/78.0",

}

URL_BAIDU = "https://www.baidu.com/" # 百度首页

def request_with_cookies(url,cookies,timeout=30): # 重写requests.get方法: 百度指数爬虫中大部分request请求都需要设置cookies后访问

headers = HEADERS.copy() # 使用config中默认的伪装头

headers["Cookie"] = cookies # 设置cookies

response = requests.get(url,headers=headers,timeout=timeout) # 发起请求

if response.status_code!=200: raise requests.Timeout # 请求异常: 一般为超时异常

return response # 返回响应内容

def is_cookies_valid(cookies): # 检查cookies是否可用: 通过访问百度首页并检查相关元素是否存在

response = request_with_cookies(URL_BAIDU,cookies) # 使用cookie请求百度首页, 获取响应内容

html = response.text # 获取响应的页面源代码

with open("1.html","w") as f:

f.write(html)

soup = BeautifulSoup(html,"lxml") # 解析页面源代码

flag1 = soup.find("a",class_="quit") is not None # "退出登录"按钮(a标签)是否存在

flag2 = soup.find("span",class_="user-name") is not None # 用户名(span标签)是否存在

flag3 = soup.find("a",attrs={"name":"tj_login"}) is None # "登录"按钮(a标签)是否存在

return flag3 and (flag1 or flag2) # cookies可用判定条件: 登录按钮不存在且用户名与退出登录按钮至少存在一个

接口参数说明以及注意事项

通过简单抓包观察可以发现,搜索指数:API_SEARCH_INDEX,媒体指数:API_NEWS_INDEX,资讯指数:API_FEEDSEARCH_INDEX的参数都是相同的

import json

KWARGS_SEARCH_INDEX = {

"word": json.dumps([[{"name":"围棋","wordType":1}]]), # word: json字符串为二维列表, 第一维是可以比较多组关键词(目前至多5组), 第二维是组合关键词(我理解是指数相加)

"startDate": "2020-01-01", # startDate: 起始日期(包含该日)

"endDate": "2020-06-30", # endDate: 中止日期(包含该日)

"area": 0, # area: 区域编码, 默认0指统计全国指数, 具体省份编号见本文档CODE2PROVINCE

} 以下分别对每个参数的说明以及注意点:

参数word

参数word是一个json格式的二元列表,因为百度指数支持多关键词指数查询以及不同关键词之间的对比查询(Figure 12),目前支持至多5组关键词的对比,每组关键词的数量不超过3,因此需要对传入的关键词组进行有效性检查

MAX_KEYWORD_GROUP_NUMBER = 5 # 最多可以将MAX_KEYWORD_GROUP_NUMBER组关键词进行比较

MAX_KEYWORD_GROUP_SIZE = 3 # 最多可以将MAX_KEYWORD_GROUP_SIZE个关键词进行组合

def is_keywords_valid(keywords): # 检查关键词集合是否合法: 满足最大的关键词数量限制, keywords为二维列表

flag1 = len(keywords)<=MAX_KEYWORD_GROUP_NUMBER # MAX_KEYWORD_GROUP_NUMBER

flag2 = max(map(len,keywords))<=MAX_KEYWORD_GROUP_SIZE # MAX_KEYWORD_GROUP_SIZE

return flag1 and flag2

is_keywords_valid([['拳皇','街霸','铁拳'],['英雄联盟'],['围棋'],['崩坏'],['明日方舟']])此外并非每个关键词百度指数都有收录,通过抓包可以看到在指数查询前会有对该关键词的存在性进行检查的接口调用(Figure 13),可以查询的关键词(如计算机)与无法查询的关键词(如囚生CY)如下所示(Figure 14 & Figure 15)

由上图发现可以查询的关键词响应结果的result字段为空列表,而不可查询的关键词响应结果的result字段是非空的,通过这个规律我们可以对每个关键词进行预筛选(注意一定要在登录状态下访问该check接口,否则返回结果的message字段将会是not login)

API_BAIDU_INDEX_EXIST = "http://index.baidu.com/api/AddWordApi/checkWordsExists?word={}".format # 百度指数关键词是否存在检查

def is_keyword_existed(keyword,cookies): # 检查需要查询百度指数的关键词是否存在: keyword为单个关键词字符串

response = request_with_cookies(API_BAIDU_INDEX_EXIST(keyword),cookies)

json_response = json.loads(response.text)

if json_response["message"]=="not login": # message=="not login"表明cookies已经失效

raise Exception("Cookies has been expired !")

flag1 = len(json_response["data"])==1 # 正常关键词响应结果的data字段里只有一个字段值: result

flag2 = len(json_response["data"]["result"])==0 # 且result字段值应该是空列表

#print(flag1)

#print(flag2)

return flag1 or flag2 # 二者满足其一即认为关键词有效

参数startDate与endDate

在Figure 2 Figure 4 Figure 6中我们看到请求参数并没有startDate与endDate,而是days,这是因为默认显示的指数时间跨度为最近30天,需要在百度指数页面上自定义设置后再抓包就可以看到这两个请求参数(Figure 16)

这里需要注意的是,时间跨度的天数超过366天(截至20200731测试结果)后,指数的折现图将不再按照每天展示,而更改为每周显示(Figure 17 & Figure 18)

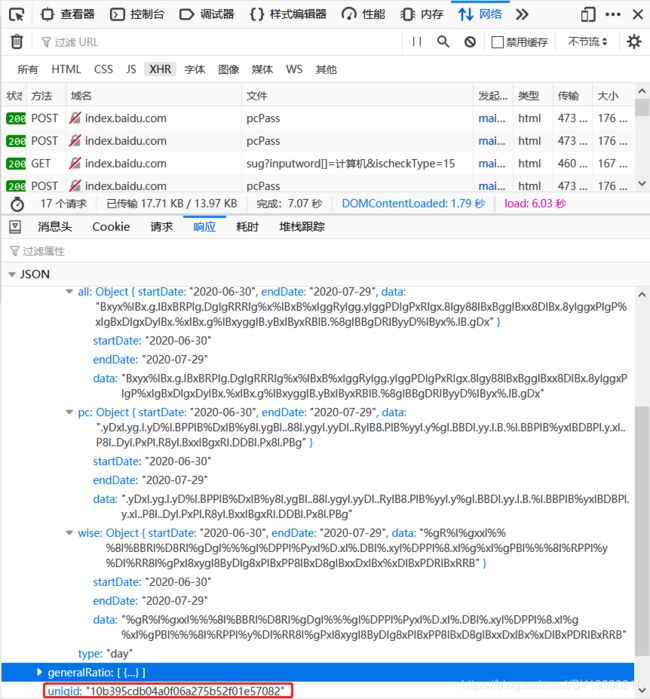

指数数据是按日或按周可以通过响应结果中的type字段看出,如果是按周,则type字段值将为week(Figure 18)

出于可适性考虑笔者建议不要使得时间跨度超过一年(366天),使用按日的数据总是要比按周的要更划算的。

参数area

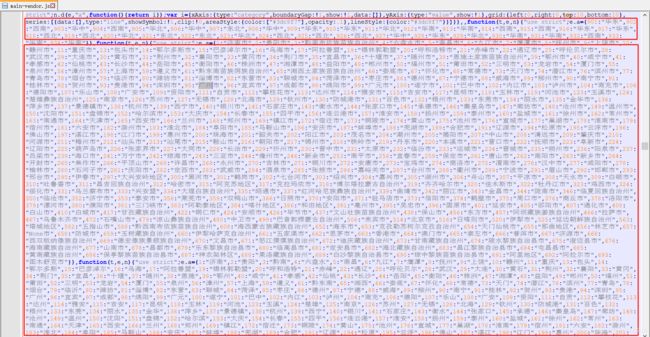

参数area的默认值为0,表示统计全国的指数,也可以分地区考虑,通过查询文件名开头为main_vendor的JS文件可以找到全国各个区域,各个省份,各个城市的编号(Figure 19 ~ Figure 21)

简单点直接从JS里复制下来用就可以了

# 1. 百度指数js常用变量

## 1.1 省份区域索引表: CODE2AREA

## - CODE2PROVINCE来源: main-vendor.xxxxxxxxxxxxxxxxxxx.js源码中直接复制可得

## - js源码URL: e.g. http://index.baidu.com/v2/static/js/main-vendor.dbf1ed721cfef2e2755d.js

CODE2AREA = {

901:"华东",902:"西南",903:"华中",904:"西南",905:"华北",

906:"华中",907:"东北",908:"华中",909:"华东",910:"华东",

911:"华北",912:"华南",913:"华南",914:"西南",915:"西南",

916:"华东",917:"华东",918:"西北",919:"西北",920:"华北",

921:"东北",922:"东北",923:"华北",924:"西北",925:"西北",

926:"西北",927:"华中",928:"华东",929:"华北",930:"华南",

931:"华南",932:"西南",933:"华南",934:"华南",

}

## 1.2 省份编号索引表: CODE2PROVINCE, PROVINCE2CODE

## - CODE2PROVINCE来源: main-vendor.xxxxxxxxxxxxxxxxxxx.js源码中直接复制可得

## - js源码URL: e.g. http://index.baidu.com/v2/static/js/main-vendor.dbf1ed721cfef2e2755d.js

CODE2PROVINCE = {

901:"山东",902:"贵州",903:"江西",904:"重庆",905:"内蒙古",

906:"湖北",907:"辽宁",908:"湖南",909:"福建",910:"上海",

911:"北京",912:"广西",913:"广东",914:"四川",915:"云南",

916:"江苏",917:"浙江",918:"青海",919:"宁夏",920:"河北",

921:"黑龙江",922:"吉林",923:"天津",924:"陕西",925:"甘肃",

926:"新疆",927:"河南",928:"安徽",929:"山西",930:"海南",

931:"台湾",932:"西藏",933:"香港",934:"澳门",

}

PROVINCE2CODE = {province:str(code) for code,province in CODE2PROVINCE.items()}

## 1.3 城市编号索引表: CODE2CITY, CITY2CODE

## - CODE2PROVINCE来源: main-vendor.xxxxxxxxxxxxxxxxxxx.js源码中直接复制可得

## - js源码URL: e.g. http://index.baidu.com/v2/static/js/main-vendor.dbf1ed721cfef2e2755d.js

CODE2CITY = {

1:"济南",2:"贵阳",3:"黔南",4:"六盘水",5:"南昌",

6:"九江",7:"鹰潭",8:"抚州",9:"上饶",10:"赣州",

11:"重庆",13:"包头",14:"鄂尔多斯",15:"巴彦淖尔",16:"乌海",

17:"阿拉善盟",19:"锡林郭勒盟",20:"呼和浩特",21:"赤峰",22:"通辽",

25:"呼伦贝尔",28:"武汉",29:"大连",30:"黄石",31:"荆州",

32:"襄阳",33:"黄冈",34:"荆门",35:"宜昌",36:"十堰",

37:"随州",38:"恩施",39:"鄂州",40:"咸宁",41:"孝感",

42:"仙桃",43:"长沙",44:"岳阳",45:"衡阳",46:"株洲",

47:"湘潭",48:"益阳",49:"郴州",50:"福州",51:"莆田",

52:"三明",53:"龙岩",54:"厦门",55:"泉州",56:"漳州",

57:"上海",59:"遵义",61:"黔东南",65:"湘西",66:"娄底",

67:"怀化",68:"常德",73:"天门",74:"潜江",76:"滨州",

77:"青岛",78:"烟台",79:"临沂",80:"潍坊",81:"淄博",

82:"东营",83:"聊城",84:"菏泽",85:"枣庄",86:"德州",

87:"宁德",88:"威海",89:"柳州",90:"南宁",91:"桂林",

92:"贺州",93:"贵港",94:"深圳",95:"广州",96:"宜宾",

97:"成都",98:"绵阳",99:"广元",100:"遂宁",101:"巴中",

102:"内江",103:"泸州",104:"南充",106:"德阳",107:"乐山",

108:"广安",109:"资阳",111:"自贡",112:"攀枝花",113:"达州",

114:"雅安",115:"吉安",117:"昆明",118:"玉林",119:"河池",

123:"玉溪",124:"楚雄",125:"南京",126:"苏州",127:"无锡",

128:"北海",129:"钦州",130:"防城港",131:"百色",132:"梧州",

133:"东莞",134:"丽水",135:"金华",136:"萍乡",137:"景德镇",

138:"杭州",139:"西宁",140:"银川",141:"石家庄",143:"衡水",

144:"张家口",145:"承德",146:"秦皇岛",147:"廊坊",148:"沧州",

149:"温州",150:"沈阳",151:"盘锦",152:"哈尔滨",153:"大庆",

154:"长春",155:"四平",156:"连云港",157:"淮安",158:"扬州",

159:"泰州",160:"盐城",161:"徐州",162:"常州",163:"南通",

164:"天津",165:"西安",166:"兰州",168:"郑州",169:"镇江",

172:"宿迁",173:"铜陵",174:"黄山",175:"池州",176:"宣城",

177:"巢湖",178:"淮南",179:"宿州",181:"六安",182:"滁州",

183:"淮北",184:"阜阳",185:"马鞍山",186:"安庆",187:"蚌埠",

188:"芜湖",189:"合肥",191:"辽源",194:"松原",195:"云浮",

196:"佛山",197:"湛江",198:"江门",199:"惠州",200:"珠海",

201:"韶关",202:"阳江",203:"茂名",204:"潮州",205:"揭阳",

207:"中山",208:"清远",209:"肇庆",210:"河源",211:"梅州",

212:"汕头",213:"汕尾",215:"鞍山",216:"朝阳",217:"锦州",

218:"铁岭",219:"丹东",220:"本溪",221:"营口",222:"抚顺",

223:"阜新",224:"辽阳",225:"葫芦岛",226:"张家界",227:"大同",

228:"长治",229:"忻州",230:"晋中",231:"太原",232:"临汾",

233:"运城",234:"晋城",235:"朔州",236:"阳泉",237:"吕梁",

239:"海口",241:"万宁",242:"琼海",243:"三亚",244:"儋州",

246:"新余",253:"南平",256:"宜春",259:"保定",261:"唐山",

262:"南阳",263:"新乡",264:"开封",265:"焦作",266:"平顶山",

268:"许昌",269:"永州",270:"吉林",271:"铜川",272:"安康",

273:"宝鸡",274:"商洛",275:"渭南",276:"汉中",277:"咸阳",

278:"榆林",280:"石河子",281:"庆阳",282:"定西",283:"武威",

284:"酒泉",285:"张掖",286:"嘉峪关",287:"台州",288:"衢州",

289:"宁波",291:"眉山",292:"邯郸",293:"邢台",295:"伊春",

297:"大兴安岭",300:"黑河",301:"鹤岗",302:"七台河",303:"绍兴",

304:"嘉兴",305:"湖州",306:"舟山",307:"平凉",308:"天水",

309:"白银",310:"吐鲁番",311:"昌吉",312:"哈密",315:"阿克苏",

317:"克拉玛依",318:"博尔塔拉",319:"齐齐哈尔",320:"佳木斯",322:"牡丹江",

323:"鸡西",324:"绥化",331:"乌兰察布",333:"兴安盟",334:"大理",

335:"昭通",337:"红河",339:"曲靖",342:"丽江",343:"金昌",

344:"陇南",346:"临夏",350:"临沧",352:"济宁",353:"泰安",

356:"莱芜",359:"双鸭山",366:"日照",370:"安阳",371:"驻马店",

373:"信阳",374:"鹤壁",375:"周口",376:"商丘",378:"洛阳",

379:"漯河",380:"濮阳",381:"三门峡",383:"阿勒泰",384:"喀什",

386:"和田",391:"亳州",395:"吴忠",396:"固原",401:"延安",

405:"邵阳",407:"通化",408:"白山",410:"白城",417:"甘孜",

422:"铜仁",424:"安顺",426:"毕节",437:"文山",438:"保山",

456:"东方",457:"阿坝",466:"拉萨",467:"乌鲁木齐",472:"石嘴山",

479:"凉山",480:"中卫",499:"巴音郭楞",506:"来宾",514:"北京",

516:"日喀则",520:"伊犁",525:"延边",563:"塔城",582:"五指山",

588:"黔西南",608:"海西",652:"海东",653:"克孜勒苏柯尔克孜",654:"天门仙桃",

655:"那曲",656:"林芝",657:"None",658:"防城",659:"玉树",

660:"伊犁哈萨克",661:"五家渠",662:"思茅",663:"香港",664:"澳门",

665:"崇左",666:"普洱",667:"济源",668:"西双版纳",669:"德宏",

670:"文昌",671:"怒江",672:"迪庆",673:"甘南",674:"陵水黎族自治县",

675:"澄迈县",676:"海南",677:"山南",678:"昌都",679:"乐东黎族自治县",

680:"临高县",681:"定安县",682:"海北",683:"昌江黎族自治县",684:"屯昌县",

685:"黄南",686:"保亭黎族苗族自治县",687:"神农架",688:"果洛",689:"白沙黎族自治县",

690:"琼中黎族苗族自治县",691:"阿里",692:"阿拉尔",693:"图木舒克",

}

CITY2CODE = {city:str(code) for code,city in CODE2CITY.items()}例如实际使用中将area参数替换成901就可以获得山东省的指数数据。

JS逆向获取解密逻辑

最后就是应当如何处理Figure 3 Figure 5 Figure 7中红框里的加密数据了,我们再重新仔细看一遍Figure 18

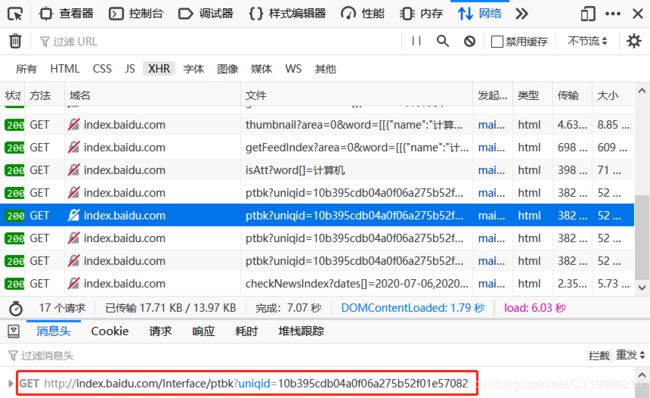

红框里的uniqid字段非常关键,现在我们还不能知道这个字段究竟是用于做什么的。

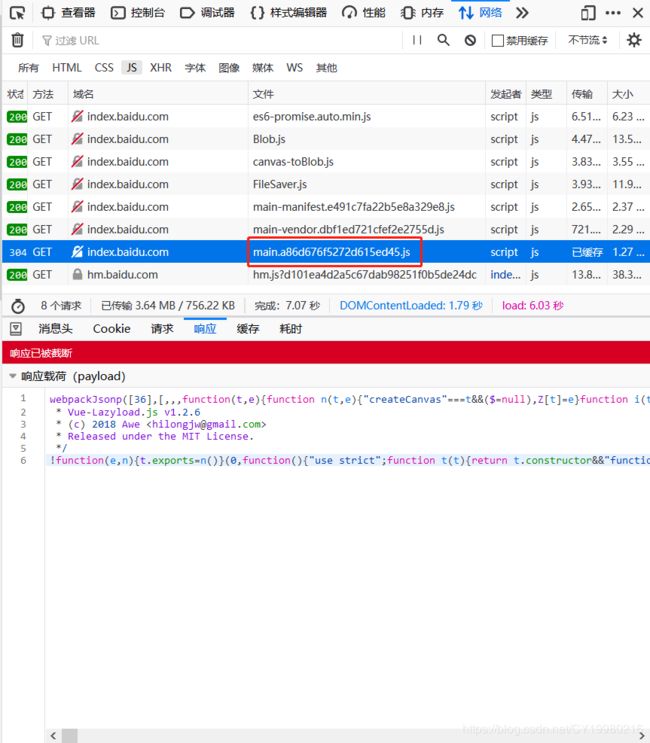

这里可以看到加密数据显然不可能是通用的标准加密算法得到的,因为显然加密结果并非随机,加密结果中频繁且规律性地出现相同字符。笔者起初试图在JS中找到解密算法的逻辑,但是百度指数的JS实在是又臭又长,没有指向性地去读JS实在是非人地折磨,后来又想通过找规律的方法试出这个加密算法,又无所得。最后终于还是在文件名以main.开头的JS文件中找到了加密逻辑(Figure 21 & Figure 22)

其实笔者得出一个小经验,如果想在JS里找到加解密的逻辑,先试试搜索关键词encrypt和decrypt,如果写JS的码农还是个人(假设他不能是个狗),他应该不会给加解密函数随便起个没意义的名字(不过网易云爬虫里面涉及加解密的那部分还真是用abcdef来命名加解密函数的,不过好在网易云的JS很短,很容易就查到在哪里加解密了)。

言归正传,Figure 22划线部分即为解密逻辑,function(t,e)中变量t显然是Figure 3 Figure 5 Figure 7里那一长串的字符,变量e则是解密的密钥,可以将划线部分用python代码复现

def decrypt_index(key,data): # 指数数据解密

cipher2plain = {} # 密文明文映射字典

plain_chars = [] # 解密得到的明文字符

for i in range(len(key)//2): cipher2plain[key[i]] = key[len(key)//2+i]

for i in range(len(data)): plain_chars.append(cipher2plain[data[i]])

index_list = "".join(plain_chars).split(",") # 所有明文字符拼接后按照逗号分隔

return index_list

可以看出密钥key其实可以转化为一个映射字典,可以将data里的每个字符映射成实际的数据,其实就是一个非常简单的映射加密(想不到百度竟然都不用标准的加密算法来加密,实在是懒得不行)。问题是如何取得密钥key?

这时候我们一个个检查XHR中的抓包结果,终于可以在一个文件名以ptbk?uniqid开头的数据包中找到线索(Figure 23 & Figure 24)

可以看到该数据包的请求用到了Figure 20中的uniqid,对比Figure 24中的响应data与Figure 20中那些加密后的字符串,发现加密字符串中的字符无一例外都可以在Figure 24中的响应的data中找到。有理由相信这个data就是可以用于解密的密钥

print(decrypt_index("RN7.xgl8EPKy%BD4.%209,8-5+3716","""Bxyx%lBx.g.lBxBRPlg.DglgRRRlg%x%lBxB%xlggRylgg.ylggPDlgPxRlgx.8lgy88lBxBgglBxx8DlBx.8ylggxPlgP%xlgBxDlgxDylBx.%xlBx.g%lBxygglB.yBxlByxRBlB.%8glBBgDRlByyD%lByx%.lB.gDx"""))

# 输出

"""

['10307', '10292', '10145', '9269', '9444', '9707', '10170', '9943', '9923', '9956', '9504', '9028', '9388', '10199', '10086', '10283', '9905', '9570', '9106', '9063', '10270', '10297', '10399', '12310', '13041', '12789', '11964', '13367', '13072', '12960']

"""至此,百度指数爬虫大部分的问题要点已经解决。

源码

以下四个py文件置于同一目录即可,运行第一个baiduindex_manage.py即可

baiduindex_manage.py

# -*- coding: UTF-8 -*-

# @author: caoyang

# @email: [email protected]

from baiduindex_index import Index

from baiduindex_utils import *

cookies = """百度首页登录百度账号后,抓取域名为www.baidu.com的数据包并复制其cookies粘贴到此处即可"""

keywords = [["拳皇","街霸"],["英雄联盟","绝地求生"],["围棋","象棋"]]

start_date = "2020-01-01"

end_date = "2020-06-30"

area = 0

index = Index(

keywords=keywords,

start_date=start_date,

end_date=end_date,

cookies=cookies,

area=0,

)

for data in index.get_index(index_name="search"):

print(data)

input("暂停...")

for data in index.get_index(index_name="news"):

print(data)

input("暂停...")

for data in index.get_index(index_name="feedsearch"):

print(data)

baiduindex_config.py

# -*- coding: UTF-8 -*-

# @author: caoyang

# @email: [email protected]

import json

# 0. 全局默认变量

MAX_KEYWORD_GROUP_NUMBER = 5 # 最多可以将MAX_KEYWORD_GROUP_NUMBER组关键词进行比较

MAX_KEYWORD_GROUP_SIZE = 3 # 最多可以将MAX_KEYWORD_GROUP_SIZE个关键词进行组合

MAX_DATE_LENGTH = 360 # 日期区间长度应当小于366, 取个整数就用360天即可

########################################################################

# 1. 百度指数js常用变量

## 1.1 省份区域索引表: CODE2AREA

## - CODE2PROVINCE来源: main-vendor.xxxxxxxxxxxxxxxxxxx.js源码中直接复制可得

## - js源码URL: e.g. http://index.baidu.com/v2/static/js/main-vendor.dbf1ed721cfef2e2755d.js

CODE2AREA = {

901:"华东",902:"西南",903:"华中",904:"西南",905:"华北",

906:"华中",907:"东北",908:"华中",909:"华东",910:"华东",

911:"华北",912:"华南",913:"华南",914:"西南",915:"西南",

916:"华东",917:"华东",918:"西北",919:"西北",920:"华北",

921:"东北",922:"东北",923:"华北",924:"西北",925:"西北",

926:"西北",927:"华中",928:"华东",929:"华北",930:"华南",

931:"华南",932:"西南",933:"华南",934:"华南",

}

## 1.2 省份编号索引表: CODE2PROVINCE, PROVINCE2CODE

## - CODE2PROVINCE来源: main-vendor.xxxxxxxxxxxxxxxxxxx.js源码中直接复制可得

## - js源码URL: e.g. http://index.baidu.com/v2/static/js/main-vendor.dbf1ed721cfef2e2755d.js

CODE2PROVINCE = {

901:"山东",902:"贵州",903:"江西",904:"重庆",905:"内蒙古",

906:"湖北",907:"辽宁",908:"湖南",909:"福建",910:"上海",

911:"北京",912:"广西",913:"广东",914:"四川",915:"云南",

916:"江苏",917:"浙江",918:"青海",919:"宁夏",920:"河北",

921:"黑龙江",922:"吉林",923:"天津",924:"陕西",925:"甘肃",

926:"新疆",927:"河南",928:"安徽",929:"山西",930:"海南",

931:"台湾",932:"西藏",933:"香港",934:"澳门",

}

PROVINCE2CODE = {province:str(code) for code,province in CODE2PROVINCE.items()}

## 1.3 城市编号索引表: CODE2CITY, CITY2CODE

## - CODE2PROVINCE来源: main-vendor.xxxxxxxxxxxxxxxxxxx.js源码中直接复制可得

## - js源码URL: e.g. http://index.baidu.com/v2/static/js/main-vendor.dbf1ed721cfef2e2755d.js

CODE2CITY = {

1:"济南",2:"贵阳",3:"黔南",4:"六盘水",5:"南昌",

6:"九江",7:"鹰潭",8:"抚州",9:"上饶",10:"赣州",

11:"重庆",13:"包头",14:"鄂尔多斯",15:"巴彦淖尔",16:"乌海",

17:"阿拉善盟",19:"锡林郭勒盟",20:"呼和浩特",21:"赤峰",22:"通辽",

25:"呼伦贝尔",28:"武汉",29:"大连",30:"黄石",31:"荆州",

32:"襄阳",33:"黄冈",34:"荆门",35:"宜昌",36:"十堰",

37:"随州",38:"恩施",39:"鄂州",40:"咸宁",41:"孝感",

42:"仙桃",43:"长沙",44:"岳阳",45:"衡阳",46:"株洲",

47:"湘潭",48:"益阳",49:"郴州",50:"福州",51:"莆田",

52:"三明",53:"龙岩",54:"厦门",55:"泉州",56:"漳州",

57:"上海",59:"遵义",61:"黔东南",65:"湘西",66:"娄底",

67:"怀化",68:"常德",73:"天门",74:"潜江",76:"滨州",

77:"青岛",78:"烟台",79:"临沂",80:"潍坊",81:"淄博",

82:"东营",83:"聊城",84:"菏泽",85:"枣庄",86:"德州",

87:"宁德",88:"威海",89:"柳州",90:"南宁",91:"桂林",

92:"贺州",93:"贵港",94:"深圳",95:"广州",96:"宜宾",

97:"成都",98:"绵阳",99:"广元",100:"遂宁",101:"巴中",

102:"内江",103:"泸州",104:"南充",106:"德阳",107:"乐山",

108:"广安",109:"资阳",111:"自贡",112:"攀枝花",113:"达州",

114:"雅安",115:"吉安",117:"昆明",118:"玉林",119:"河池",

123:"玉溪",124:"楚雄",125:"南京",126:"苏州",127:"无锡",

128:"北海",129:"钦州",130:"防城港",131:"百色",132:"梧州",

133:"东莞",134:"丽水",135:"金华",136:"萍乡",137:"景德镇",

138:"杭州",139:"西宁",140:"银川",141:"石家庄",143:"衡水",

144:"张家口",145:"承德",146:"秦皇岛",147:"廊坊",148:"沧州",

149:"温州",150:"沈阳",151:"盘锦",152:"哈尔滨",153:"大庆",

154:"长春",155:"四平",156:"连云港",157:"淮安",158:"扬州",

159:"泰州",160:"盐城",161:"徐州",162:"常州",163:"南通",

164:"天津",165:"西安",166:"兰州",168:"郑州",169:"镇江",

172:"宿迁",173:"铜陵",174:"黄山",175:"池州",176:"宣城",

177:"巢湖",178:"淮南",179:"宿州",181:"六安",182:"滁州",

183:"淮北",184:"阜阳",185:"马鞍山",186:"安庆",187:"蚌埠",

188:"芜湖",189:"合肥",191:"辽源",194:"松原",195:"云浮",

196:"佛山",197:"湛江",198:"江门",199:"惠州",200:"珠海",

201:"韶关",202:"阳江",203:"茂名",204:"潮州",205:"揭阳",

207:"中山",208:"清远",209:"肇庆",210:"河源",211:"梅州",

212:"汕头",213:"汕尾",215:"鞍山",216:"朝阳",217:"锦州",

218:"铁岭",219:"丹东",220:"本溪",221:"营口",222:"抚顺",

223:"阜新",224:"辽阳",225:"葫芦岛",226:"张家界",227:"大同",

228:"长治",229:"忻州",230:"晋中",231:"太原",232:"临汾",

233:"运城",234:"晋城",235:"朔州",236:"阳泉",237:"吕梁",

239:"海口",241:"万宁",242:"琼海",243:"三亚",244:"儋州",

246:"新余",253:"南平",256:"宜春",259:"保定",261:"唐山",

262:"南阳",263:"新乡",264:"开封",265:"焦作",266:"平顶山",

268:"许昌",269:"永州",270:"吉林",271:"铜川",272:"安康",

273:"宝鸡",274:"商洛",275:"渭南",276:"汉中",277:"咸阳",

278:"榆林",280:"石河子",281:"庆阳",282:"定西",283:"武威",

284:"酒泉",285:"张掖",286:"嘉峪关",287:"台州",288:"衢州",

289:"宁波",291:"眉山",292:"邯郸",293:"邢台",295:"伊春",

297:"大兴安岭",300:"黑河",301:"鹤岗",302:"七台河",303:"绍兴",

304:"嘉兴",305:"湖州",306:"舟山",307:"平凉",308:"天水",

309:"白银",310:"吐鲁番",311:"昌吉",312:"哈密",315:"阿克苏",

317:"克拉玛依",318:"博尔塔拉",319:"齐齐哈尔",320:"佳木斯",322:"牡丹江",

323:"鸡西",324:"绥化",331:"乌兰察布",333:"兴安盟",334:"大理",

335:"昭通",337:"红河",339:"曲靖",342:"丽江",343:"金昌",

344:"陇南",346:"临夏",350:"临沧",352:"济宁",353:"泰安",

356:"莱芜",359:"双鸭山",366:"日照",370:"安阳",371:"驻马店",

373:"信阳",374:"鹤壁",375:"周口",376:"商丘",378:"洛阳",

379:"漯河",380:"濮阳",381:"三门峡",383:"阿勒泰",384:"喀什",

386:"和田",391:"亳州",395:"吴忠",396:"固原",401:"延安",

405:"邵阳",407:"通化",408:"白山",410:"白城",417:"甘孜",

422:"铜仁",424:"安顺",426:"毕节",437:"文山",438:"保山",

456:"东方",457:"阿坝",466:"拉萨",467:"乌鲁木齐",472:"石嘴山",

479:"凉山",480:"中卫",499:"巴音郭楞",506:"来宾",514:"北京",

516:"日喀则",520:"伊犁",525:"延边",563:"塔城",582:"五指山",

588:"黔西南",608:"海西",652:"海东",653:"克孜勒苏柯尔克孜",654:"天门仙桃",

655:"那曲",656:"林芝",657:"None",658:"防城",659:"玉树",

660:"伊犁哈萨克",661:"五家渠",662:"思茅",663:"香港",664:"澳门",

665:"崇左",666:"普洱",667:"济源",668:"西双版纳",669:"德宏",

670:"文昌",671:"怒江",672:"迪庆",673:"甘南",674:"陵水黎族自治县",

675:"澄迈县",676:"海南",677:"山南",678:"昌都",679:"乐东黎族自治县",

680:"临高县",681:"定安县",682:"海北",683:"昌江黎族自治县",684:"屯昌县",

685:"黄南",686:"保亭黎族苗族自治县",687:"神农架",688:"果洛",689:"白沙黎族自治县",

690:"琼中黎族苗族自治县",691:"阿里",692:"阿拉尔",693:"图木舒克",

}

CITY2CODE = {city:str(code) for code,city in CODE2CITY.items()}

## 1.4 搜索指数的搜索来源: 注意媒体指数与资讯指数是没有该选项的

SEARCH_MODE = ["all","pc","wise"] # 移动+PC, PC, 移动

########################################################################

# 2. 爬虫伪装配置

## 2.1 请求头伪装配置

HEADERS = {

"Host": "index.baidu.com",

"Connection": "keep-alive",

"X-Requested-With": "XMLHttpRequest",

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:78.0) Gecko/20100101 Firefox/78.0",

}

########################################################################

# 3. 常用URL与API变量

## 3.1 常用URL

URL_BAIDU = "https://www.baidu.com/" # 百度首页

URL_BAIDU_INDEX = "https://index.baidu.com/v2/index.html#/" # 百度指数首页

## 3.2 简易API接口: 只需要提供一个参数

API_BAIDU_INDEX_ENGINE = "http://index.baidu.com/v2/main/index.html#/trend/{0}?words={0}".format # 百度指数搜索引擎接口

API_BAIDU_INDEX_EXIST = "http://index.baidu.com/api/AddWordApi/checkWordsExists?word={}".format # 百度指数关键词是否存在检查

API_BAIDU_INDEX_KEY = "http://index.baidu.com/Interface/ptbk?uniqid={}".format # 指数密钥请求接口

## 3.3 复杂API接口: 提供多元不确定的参数

API_SEARCH_INDEX = "http://index.baidu.com/api/SearchApi/index?{}".format # 搜索指数查询接口

API_NEWS_INDEX = "http://index.baidu.com/api/NewsApi/getNewsIndex?{}".format # 媒体指数查询接口

API_FEEDSEARCH_INDEX = "http://index.baidu.com/api/FeedSearchApi/getFeedIndex?{}".format # 资讯指数查询接口

API_NEWS_SOURCE = "http://index.baidu.com/api/NewsApi/checkNewsIndex?{}".format # 新闻来源查询接口: 媒体指数数据来源

API_SEARCH_THUMBNAIL = "http://index.baidu.com/api/SearchApi/thumbnail?{}".format # 搜索指数缩略图: 目前我不确定这个数据是用来做什么的, 我猜是搜索指数在很长一段时间内的概况, 因为并不能与Searchapi/index?接口得到的结果匹配上, 而且其指数数据量有几百天, 不太清楚具体是什么

API_INDEX_BY_REGION = "http://index.baidu.com/api/SearchApi/region?{}".format # 搜索指数分地区情况统计

API_INDEX_BY_SOCIAL = "http://index.baidu.com/api/SocialApi/baseAttributes?{}".format # 搜索指数分年龄性别兴趣统计

## 3.4. 复杂API接口参数列表示例

### API_SEARCH_INDEX参数列表

KWARGS_SEARCH_INDEX = {

"word": json.dumps([[{"name":"围棋","wordType":1}]]), # word: json字符串为二维列表, 第一维是可以比较多组关键词(目前至多5组), 第二维是组合关键词(我理解是指数相加)

"startDate": "2020-01-01", # startDate: 起始日期(包含该日)

"endDate": "2020-06-30", # endDate: 中止日期(包含该日)

"area": 0, # area: 区域编码, 默认0指统计全国指数, 具体省份编号见本文档CODE2PROVINCE

} # * Tips: 可以不传入startDate与endDate而改为days参数, 即获取最近days天的指数

### API_NEWS_INDEX参数列表

KWARGS_NEWS_INDEX = {

"word": json.dumps([[{"name":"围棋","wordType":1}]]), # word: json字符串为二维列表, 第一维是可以比较多组关键词(目前至多5组), 第二维是组合关键词(我理解是指数相加)

"startDate": "2020-01-01", # startDate: 起始日期(包含该日)

"endDate": "2020-06-30", # endDate: 中止日期(包含该日)

"area": 0, # area: 区域编码, 默认0指统计全国指数, 具体省份编号见本文档CODE2PROVINCE

} # * Tips: 可以不传入startDate与endDate而改为days参数, 即获取最近days天的指数

### API_FEEDSEARCH_INDEX参数列表

KWARGS_FEEDSEARCH_INDEX = {

"word": json.dumps([[{"name":"围棋","wordType":1}]]), # word: json字符串为二维列表, 第一维是可以比较多组关键词(目前至多5组), 第二维是组合关键词(我理解是指数相加)

"startDate": "2020-01-01", # startDate: 起始日期(包含该日)

"endDate": "2020-06-30", # endDate: 中止日期(包含该日)

"area": 0, # area: 区域编码, 默认0指统计全国指数, 具体省份编号见本文档CODE2PROVINCE

} # * Tips: 可以不传入startDate与endDate而改为days参数, 即获取最近days天的指数

### API_NEWS_SOURCE参数列表

KWARGS_NEWS_SOURCE = {

"dates[]": "2020-07-02,2020-07-04", # dates[]: 逗号拼接的%Y-%m-%d格式的日期字符串

"type": "day", # type: 默认按日获取

"words": "围棋", # words: 关键词, 该接口应该只能支持单关键词的查询

}

### API_SEARCH_THUMBNAIL参数列表

KWARGS_SEARCH_THUMBNAIL = {

"word": json.dumps([[{"name":"围棋","wordType":1}]]), # word: json字符串为二维列表, 第一维是可以比较多组关键词(目前至多5组), 第二维是组合关键词(我理解是指数相加)

"area": 0, # area: 区域编码, 默认0指统计全国指数, 具体省份编号见本文档CODE2PROVINCE

}

### API_INDEX_BY_REGION参数列表

KWARGS_INDEX_BY_REGION = {

"region": 0, # region: 这个region很可能是可以既可以指省份, 也可以指华东华北这样的大区域的

"word": "围棋,象棋", # word: 多关键词请使用逗号分隔

"startDate": "2020-06-30", # startDate: 起始日期(包含该日)

"endDate": "2020-07-30", # endDate: 中止日期(包含该日)

"days": "", # days: 默认空字符串

}

### API_INDEX_BY_SOCIAL参数列表

KWARGS_INDEX_BY_SOCIAL = {

"wordlist[]": "围棋,象棋", # wordlist[]: 多关键词请使用逗号分隔

}

baiduindex_index.py

# -*- coding: UTF-8 -*-

# @author: caoyang

# @email: [email protected]

import json

import requests

from urllib.parse import urlencode

from datetime import datetime,timedelta

from baiduindex_utils import *

from baiduindex_config import *

class Index(object):

def __init__(self,keywords,start_date,end_date,cookies,area=0):

# 1. 参数校验

print("正在检查参数可行性...")

assert is_cookies_valid(cookies),"cookies不可用!" # 检查cookie是否可用

print(" - cookies检测可用!")

assert is_keywords_valid(keywords),"keywords集合不合规范!" # 检查keywords集合是否符合规范

print(" - keywords集合符合规范!")

print(" - 检查每个keyword是否存在可查询的指数...")

for i in range(len(keywords)): # 检查每个keyword是否有效

for j in range(len(keywords[i])):

flag = is_keyword_existed(keywords[i][j],cookies)

print(" + 关键词'{}'有效性: {}".format(keywords[i][j],flag))

if not flag: keywords[i][j] = str() # 置空无效关键词

keywords = list(filter(None,map(lambda x: list(filter(None,x)),keywords))) # 删除无效关键词与无效关键词组

print(" - 删除无效关键词后的keywords集合: {}".format(keywords))

assert days_duration(start_date,end_date)+1<=MAX_DATE_LENGTH,"start_date与end_date间隔不在可行范围内: 0~{}".format(MAX_DATE_LENGTH)

print(" - 日期间隔符合规范!")

assert int(area)==0 or int(area) in CODE2PROVINCE,"未知的area!" # 检查area是否在CODE2PROVINCE中, 0为全国

print(" - area编号可以查询!")

print("参数校验通过!")

# 2. 类构造参数

self.keywords = keywords # 关键词: 二维列表: [["拳皇","街霸"],["英雄联盟","绝地求生"],["围棋","象棋"]]

self.start_date = start_date # 起始日期: yyyy-MM-dd

self.end_date = end_date # 终止日期: yyyy-MM-dd

self.cookies = cookies # cookie信息

self.area = area # 地区编号: 整型数, 默认值全国(0)

# 3. 类常用参数

self.index_names = ["search","news","feedsearch"] # 被加密的三种指数: 搜索指数, 媒体指数, 资讯指数

def get_decrypt_key(self,uniqid): # 根据uniqid请求获得解密密钥

response = request_with_cookies(API_BAIDU_INDEX_KEY(uniqid),self.cookies)

json_response = json.loads(response.text)

decrypt_key = json_response["data"]

return decrypt_key

def get_encrypt_data(self,api_name="search"): # 三个API接口(搜索指数, 媒体指数, 资讯指数)获取加密响应的方式: api_name取值范围{"search","news","feedsearch"}

word_list = [[{"name":keyword,"wordType":1} for keyword in group] for group in self.keywords]

assert api_name in self.index_names,"Expect param 'api_name' in {} but got {} .".format(self.index_names,api_name)

if api_name=="search": # 搜索指数相关参数

field_name = "userIndexes"

api = API_SEARCH_INDEX

kwargs = KWARGS_SEARCH_INDEX

if api_name=="news": # 媒体指数相关参数

field_name = "index"

api = API_NEWS_INDEX

kwargs = KWARGS_NEWS_INDEX

if api_name=="feedsearch": # 资讯指数相关参数

field_name = "index"

api = API_FEEDSEARCH_INDEX

kwargs = KWARGS_FEEDSEARCH_INDEX

query_string = kwargs.copy()

query_string["word"] = json.dumps(word_list)

query_string["startDate"] = self.start_date

query_string["endDate"] = self.end_date

query_string["area"] = self.area

query_string = urlencode(query_string)

response = request_with_cookies(api(query_string),self.cookies)

json_response = json.loads(response.text)

uniqid = json_response["data"]["uniqid"]

encrypt_data = []

for data in json_response["data"][field_name]: encrypt_data.append(data)

return encrypt_data,uniqid

def get_index(self,index_name="search"): # 获取三大指数: 搜索指数, 媒体指数, 资讯指数

index_name = index_name.lower().strip()

assert index_name in self.index_names,"Expect param 'index_name' in {} but got {} .".format(self.index_names,index_name)

encrypt_data,uniqid = self.get_encrypt_data(api_name=index_name)# 获取加密数据与密钥请求参数

decrypt_key = self.get_decrypt_key(uniqid) # 获取解密密钥

if index_name=="search": # 搜索指数区别于媒体指数与资讯指数, 搜索指数分是分SEARCH_MODE的

for data in encrypt_data: # 遍历每个数据

for mode in SEARCH_MODE: # 遍历所有搜索方式解密: ["all","pc","wise"]

data[mode]["data"] = decrypt_index(decrypt_key,data[mode]["data"])

keyword = str(data["word"])

for mode in SEARCH_MODE: # 遍历所有搜索方式格式化: ["all","pc","wise"]

_start_date = datetime.strptime(data[mode]["startDate"],"%Y-%m-%d")

_end_date = datetime.strptime(data[mode]["endDate"],"%Y-%m-%d")

dates = [] # _start_date到_end_date(包头包尾)的日期列表

while _start_date<=_end_date: # 遍历_start_date到_end_date所有日期

dates.append(_start_date)

_start_date += timedelta(days=1)

index_list = data[mode]["data"]

if len(index_list)==len(dates): print("指数数据长度与日期长度相同!")

else: print("警告: 指数数据长度与日期长度不一致, 指数一共有{}个, 日期一共有{}个, 可能是因为日期跨度太大!".format(len(index_list),len(dates)))

for i in range(len(dates)): # 遍历所有日期

try: index_data = index_list[i] # 存在可能日期数跟index数匹配不上的情况

except IndexError: index_data = "" # 如果意外匹配不上就置空好了

output_data = {

"keyword": [keyword_info["name"] for keyword_info in json.loads(keyword.replace("'",'"'))],

"type": mode, # 标注是哪种设备的搜索指数

"date": dates[i].strftime('%Y-%m-%d'),

"index": index_data if index_data else "0", # 指数信息

}

yield output_data

if index_name in ["news","feedsearch"]:

for data in encrypt_data:

data["data"] = decrypt_index(decrypt_key,data["data"]) # 将data字段替换成解密后的结果

keyword = str(data["key"])

_start_date = datetime.strptime(data["startDate"],"%Y-%m-%d")

_end_date = datetime.strptime(data["endDate"],"%Y-%m-%d")

dates = [] # _start_date到_end_date(包头包尾)的日期列表

while _start_date<=_end_date: # 遍历_start_date到_end_date所有日期

dates.append(_start_date)

_start_date += timedelta(days=1)

index_list = data["data"]

if len(index_list)==len(dates): print("指数数据长度与日期长度相同!")

else: print("警告: 指数数据长度与日期长度不一致, 指数一共有{}个, 日期一共有{}个, 可能是因为日期跨度太大!".format(len(index_list),len(dates)))

for i in range(len(dates)): # 遍历所有日期

try: index_data = index_list[i] # 存在可能日期数跟index数匹配不上的情况

except IndexError: index_data = "" # 如果意外匹配不上就置空好了

output_data = {

"keyword": [keyword_info["name"] for keyword_info in json.loads(keyword.replace("'",'"'))],

"date": dates[i].strftime('%Y-%m-%d'),

"index": index_data if index_data else "0", # 没有指数对应默认为0

}

yield output_data

if __name__ == "__main__":

pass

baiduindex_utils.py

# -*- coding: UTF-8 -*-

# @author: caoyang

# @email: [email protected]

# 百度指数: 工具函数

import math

import json

import requests

from bs4 import BeautifulSoup

from datetime import datetime,timedelta

from baiduindex_config import *

def request_with_cookies(url,cookies,timeout=30): # 重写requests.get方法: 百度指数爬虫中大部分request请求都需要设置cookies后访问

headers = HEADERS.copy() # 使用config中默认的伪装头

headers["Cookie"] = cookies # 设置cookies

response = requests.get(url,headers=headers,timeout=timeout) # 发起请求

if response.status_code!=200: raise requests.Timeout # 请求异常: 一般为超时异常

return response # 返回响应内容

def is_cookies_valid(cookies): # 检查cookies是否可用: 通过访问百度首页并检查相关元素是否存在

response = request_with_cookies(URL_BAIDU,cookies) # 使用cookie请求百度首页, 获取响应内容

html = response.text # 获取响应的页面源代码

with open("1.html","w") as f:

f.write(html)

soup = BeautifulSoup(html,"lxml") # 解析页面源代码

flag1 = soup.find("a",class_="quit") is not None # "退出登录"按钮(a标签)是否存在

flag2 = soup.find("span",class_="user-name") is not None # 用户名(span标签)是否存在

flag3 = soup.find("a",attrs={"name":"tj_login"}) is None # "登录"按钮(a标签)是否存在

return flag3 and (flag1 or flag2) # cookies可用判定条件: 登录按钮不存在且用户名与退出登录按钮至少存在一个

def is_keywords_valid(keywords): # 检查关键词集合是否合法: 满足最大的关键词数量限制, keywords为二维列表

flag1 = len(keywords)<=MAX_KEYWORD_GROUP_NUMBER # MAX_KEYWORD_GROUP_NUMBER

flag2 = max(map(len,keywords))<=MAX_KEYWORD_GROUP_SIZE # MAX_KEYWORD_GROUP_SIZE

return flag1 and flag2

def is_keyword_existed(keyword,cookies): # 检查需要查询百度指数的关键词是否存在: keyword为单个关键词字符串

response = request_with_cookies(API_BAIDU_INDEX_EXIST(keyword),cookies)

json_response = json.loads(response.text)

if json_response["message"]=="not login": # message=="not login"表明cookies已经失效

raise Exception("Cookies has been expired !")

flag1 = len(json_response["data"])==1 # 正常关键词响应结果的data字段里只有一个字段值: result

flag2 = len(json_response["data"]["result"])==0 # 且result字段值应该是空列表

#print(flag1)

#print(flag2)

return flag1 or flag2 # 二者满足其一即认为关键词有效

def days_duration(start_date,end_date,format="%Y-%m-%d"): # 计算两个日期之间的间隔: 包头不包尾

_start_date = datetime.strptime(start_date,format)

_end_date = datetime.strptime(end_date,format)

return (_end_date-_start_date).days

def split_datetime_block(start_date,end_date,format="%Y-%m-%d",size=360):# 给定起止日期按照size进行划分成一个个单独的块: 包头包尾

blocks = []

_start_date = datetime.strptime(start_date,format)

_end_date = datetime.strptime(end_date,format)

while True:

temp_date = _start_date+timedelta(days=size)

if temp_date>_end_date:

blocks.append((_start_date,_end_date))

break

blocks.append((_start_date,temp_date))

_start_date = temp_date+timedelta(days=1)

return blocks

def decrypt_index(key,data): # 指数数据解密

cipher2plain = {} # 密文明文映射字典

plain_chars = [] # 解密得到的明文字符

for i in range(len(key)//2): cipher2plain[key[i]] = key[len(key)//2+i]

for i in range(len(data)): plain_chars.append(cipher2plain[data[i]])

index_list = "".join(plain_chars).split(",") # 所有明文字符拼接后按照逗号分隔

return index_list

if __name__=="__main__":

print(decrypt_index("Yo%R68P0l1.,X3I-430,7251+.6%89","""l,8I%6l,oP36lIRRI6PR,,86P%IoP6P0,oI6PRlP%6l0Il%6l,,0o6l,3oR6l8ll%6l80R%6l,o3o6lIoR86l3,3R6PR%%,6PRI8R6P%,,R6PlPPo6PRIPR6PPPR,6P%R%,6P08P86PP%o06Po,oR6P30,%6%R%ll6PI0o36%R%l36%ll0o6%PloP6%lPPo6%lR%06%%l3l6%R%II6P,8806%Rl%I6P8oR06P8PIR6%o,,%6Po0,l6P8PP,6%RP8o6%l0%l6%0RlP6%,RIP6%0l,P6%oo3o6%ol306%P,l,6%PloP6%l,PP6%0PR%6%8lI36oloII6o8oPo6oP%R06%80,P6%0PP%6%oPII6%%3P%6%%%l,6%%8%06%ll306P3%%P6%PPl36PoPo%6l,PPl6l%o8%6l8o%,6lo%I06lo%Ro6loRP36lo8RR6l00o36l,I386lII%,6l3,P86Plo086lII%%6l38,P6l3P,%6l3l,P6l3Rl36l830P6l03%86lo0086lR30,6llIP06lP88l6l%%oR6l8I%,6lo0RR6l8%%06loIo06l%%%06l0RR,6l%I,,6PPR%06l33086l,o%o6l%0II6l%lRI6llo3,6l%oIl63ooP6lRIll6ll3R%6l%P0I6loP,R6l0%ol6lP3oP6lRoIo6lRP386lRo3P6lRo0I6lRo%36l%I,I6I%o06I,R36IR0o6lRIlo63I3o638IR6IPoR6lRR886lR0I36lR3RR6IPI%6I8086ll,3o6llRRo6lR80o6lR,Pl6lll%%6llRoP6lR0oo6lRol36lR3,l63,lI638I%6lRPR%63Ro06lRII363oo363P,o630,I6I,,I63,,l63%I063P3363Pl,63Rl863%8l6803R6,33%683I%63I,06338063IPP6I00R6lR33R63P3l6,3o368,3R6880063PIR633lo63oPR63%%l63%,R6IRl%6I3Pl6lR3,o63,,I6IR306IlRl6Il,I6I,Io6I33l6lR%oR6I33P6lR,,36lR%l36lR3806lR8P,6ll,Il6llR3I6lll806ll0%36ll0ol630l06lR0o36I%,l6I,R86lP%%o6lRP%06Io006I8306IoPR63PlI68880638I%6I,0o6I,,86IP0o63I00633%%6ll,I06Ilo86I3,I6lRl006llP%%6ll8oP6ll8,86lPP3o6lPI%36lP0l%6I,l36lR38I6lR80,6ll%,R6llRoR6I8006lRRR36lRIoP6I30o6I8036lR33R6lRRRP6lRlPI6lRR8R6I8386lR,3l6lll%P6lPlR%6lP0RR6l%8,06loR,o6loR%I6l%3P06l%P306llIlI6lRoP363,PR6I,P%6I00%6llRPI6I,8R63P8l63I,R63I08630%I63RP863IPR63,0l68I,363ll0688PI68IP8630%068,o868,%868I%o63l0I630o863PI3633336838,6838,63R8P68,I3688o0680,o6888368RP%6,oo%680,l68Po36,,IP68ll868P%068o%,680o36,P%R6,oo,68RoP68%3R6,I,l68%P368R086,8Io6,lI86,,oI6,IlI6,l%o60,006,P3I6088l6,l8l6,,l%60,%06,oPo60IIo60I,R6,o,o6,8ol6,0l%6,%%P6,P,R6,lo36,R8I6,lII6,ll86,RR86,0336,8o,68R086,,8060IoR68RPP6,IPR68l8368olo68,%o68Po068RlR6,,036,8o06,o3R68R00630oI63,30680,06888%68%0%6,3%86,33o6,,386,,lI6,o8,6,0,R6,olo6,IIl68I%%63PP863PR%680Po6,8oP6,I%R6,00R6,l8o68%8o608ol6,R8,6033I60%Io60%IP60P3P60R,l608Ro60o0860PoP60l,,60%P060Pll60PIl60%8360,o360o8l60R8l6oIRo6o3lI6ol,P6oP,I6oo306o0Io6,l0368ol%6llI306lP,%,6loPll6lPP3P6l%lo36l,RPR6l3o306l8%8%6l3Po06l,00R6l%Ro36lP3RR6loR,I6%,0I6%RRI6%%R,603Rl6%oRI6%l386%Pl86080o63I,o6lRlI86lRo8l6lR38%608%l6lllR,68P8,6033360,8l60PI36o3IP6oII,60IP86,03l6,,0R68%lo683Il68R,068%,%68Plo6,88I60P%P6ooo,6oloI6oP8I60R0360o8o600I8608l,6,R8%6,,38603R860IR36,%l%6,%P068lo3680Po68%oR68o%%688o368,%368%%o6,,8I6oo3,6%IIP6%,PI6%0%l6%o0%6%o,o6%%306%P0P6%%RP6%I306o08I6o,l36oIPP6%3886o%I36o8%%6o08P6oo,%6o,0R6o,8,60PPP600Ro60%oP60R,I60%II600P,60l886o0o86o%%36%08l6oRlI6o%Io6oP,06oPR06oPlo608%360PP%6o0IR6oPIP6%00060ll06%olR6%%Pl6%%RR6%l8R6%ll,6%l836%PI36%ool6%o836%oR36%0ll6%%0R6%%I%6%%386%%lR6%%PP"""))

结语

文末再次鸣谢longxiaofei@github的repository: spider-BaiduIndex,本文的代码主要以此基础进行地相关改进与完善。

分享学习,共同进步。