Setting Up and Using Hadoop

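Contents

- 1. Requirements Analysis (Use Case)

- 2. Environment

- 3. Project Results

- 4. Setup Steps

- 4.1 Change the hostnames

- 4.2 Edit /etc/hosts to map hostnames to IP addresses

- 4.3 Stop the firewall (on all three nodes)

- 4.4 Install and configure NTP for time synchronization

- 4.5 Configure passwordless SSH login (only from the master to the slaves)

- 4.6 Upload the installation packages to the server

- 4.7 Install and configure the JDK

- 4.8 Install Hadoop

- 4.9 Edit the Hadoop configuration files

- 4.10 Copy the configured environment to the slave nodes

- 4.11 Format the Hadoop NameNode

- 4.12 Start the cluster

- 4.13 Verify the startup

- 5. Usage

- 5.1 List directory contents (add -R to list recursively)

- 5.2 Create a directory

- 5.3 Create a file and write content to it

- 5.4 Delete a file (for example, the file test)

- 5.5 Upload a local file to HDFS

- 5.6 Download a file from HDFS to the local filesystem

- 5.7 Copy a file

- 5.8 Move a file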

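1. Requirements Analysis (Use Case)

As data volumes grow explosively, processing on a single local machine can no longer keep up. A solution is needed, and this guide recommends the big data platform Hadoop.

Big data has four defining characteristics: large volume, wide variety, high velocity, and high value.

- The data volume is large and hard to process, but the value it holds is correspondingly large;

- The data comes in many varieties and is more individualized; handling each data source in a way suited to it gives more precise results;

- The data must be processed promptly, in pursuit of a better user experience;

- Data has become a new resource: holding data amounts to holding considerable wealth.

Given this situation, a Hadoop deployment is proposed:

Hadoop is an open-source software platform from Apache. It can be extended to fit your own requirements, is highly reusable, and is inexpensive to adopt. Its core capability is distributed processing of massive data sets across a cluster of servers, driven by user-defined business logic.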

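2. Environment

| No. | Hostname | IP address | Role |
| --- | --- | --- | --- |
| 1 | master-yly | 192.168.91.134 | Master node |
| 2 | slave01-yly | 192.168.91.135 | Slave node |
| 3 | slave02-yly | 192.168.91.136 | Slave node |

Operating system: CentOS 7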

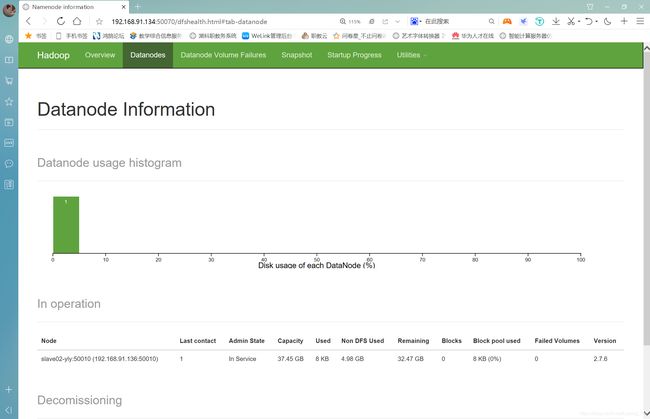

3. Project Results

4. Setup Steps

4.1 Change the hostnames

master-yly:

[root@localhost ~]# hostnamectl set-hostname master-yly

[root@localhost ~]# bash

slave01-yly:

[root@localhost ~]# hostnamectl set-hostname slave01-yly

[root@localhost ~]# bash

slave02-yly:

[root@localhost ~]# hostnamectl set-hostname slave02-yly

[root@localhost ~]# bash

4.2 Edit /etc/hosts to map hostnames to IP addresses

master-yly:

[root@master-yly ~]# vim /etc/hosts

192.168.91.134 master-yly

192.168.91.135 slave01-yly

192.168.91.136 slave02-yly

slave01-yly:

[root@slave01-yly ~]# vim /etc/hosts

192.168.91.134 master-yly

192.168.91.135 slave01-yly

192.168.91.136 slave02-yly

slave02-yly:

[root@slave02-yly ~]# vim /etc/hosts

192.168.91.134 master-yly

192.168.91.135 slave01-yly

192.168.91.136 slave02-yly
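Because the same three mappings go into /etc/hosts on every node, the edit can also be scripted. The sketch below is one possible way to do that and is not part of the original procedure. `add_host_entry` and the `HOSTS_FILE` override are hypothetical names introduced here. The function appends a mapping only when the hostname is not already present.

```shell
#!/bin/sh
# Append "IP hostname" to a hosts file unless that hostname is already mapped.
# HOSTS_FILE (hypothetical override) defaults to /etc/hosts.
add_host_entry() {
    ip="$1"
    name="$2"
    file="${HOSTS_FILE:-/etc/hosts}"
    # Look for the hostname as a whole field (column 2 or later) on a non-comment line.
    if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file" 2>/dev/null; then
        echo "skip: $name already present in $file"
    else
        printf '%s %s\n' "$ip" "$name" >> "$file"
        echo "added: $ip $name"
    fi
}
```

Run as root on each node, for example `add_host_entry 192.168.91.134 master-yly`, then repeat for the two slaves. Pointing `HOSTS_FILE` at a scratch copy lets you try the function without touching the real file.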

4.3 Stop the firewall (on all three nodes)

[root@master-yly ~]# systemctl stop firewalld

[root@slave01-yly ~]# systemctl stop firewalld

[root@slave02-yly ~]# systemctl stop firewalld

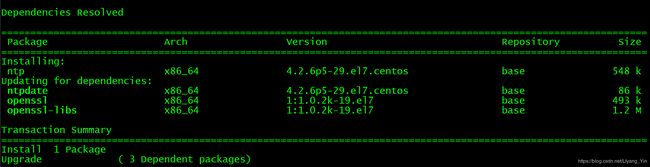
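`systemctl stop` only lasts until the next reboot. If the firewall is meant to stay off, as the rest of this guide assumes, it probably needs to be disabled at boot as well. A minimal sketch, to be run as root on each of the three nodes; the function name `firewall_off` is hypothetical:

```shell
#!/bin/sh
# Stop firewalld now, keep it from starting at boot, then report its state.
firewall_off() {
    systemctl stop firewalld
    systemctl disable firewalld
    # is-active prints the unit state and exits non-zero when the unit is not
    # running, so the exit status is ignored here.
    state="$(systemctl is-active firewalld || true)"
    echo "firewalld is $state"
}
```

After calling `firewall_off`, the expected report on a node where the stop succeeded is `firewalld is inactive`.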

4.4 Install and configure NTP for time synchronization

master-yly:

[root@master-yly ~]# yum install -y ntp

# Edit the configuration file and append the following two lines at the end:

[root@master-yly ~]# vim /etc/ntp.conf

server 127.127.1.0

fudge 127.127.1.0 stratum 10

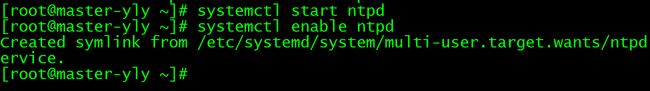

# Start the service and enable it at boot:

[root@master-yly ~]# systemctl start ntpd

[root@master-yly ~]# systemctl enable ntpd

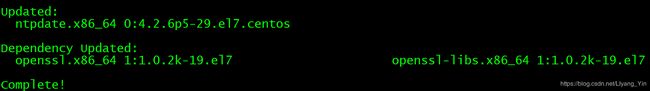

slave01-yly:

[root@slave01-yly ~]# yum install -y ntpdate

[root@slave01-yly ~]# ntpdate master-yly


slave02-yly:

[root@slave02-yly ~]# yum install -y ntpdate

[root@slave02-yly ~]# ntpdate master-yly


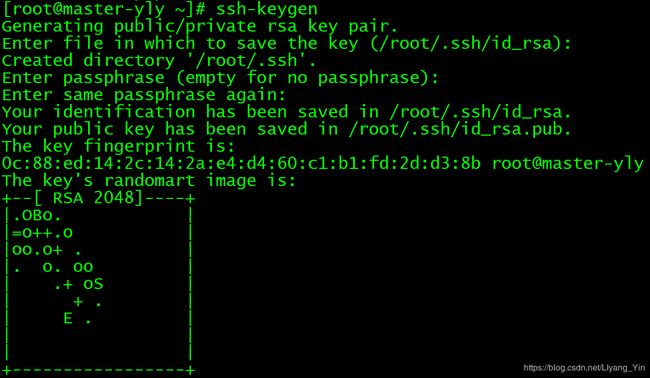

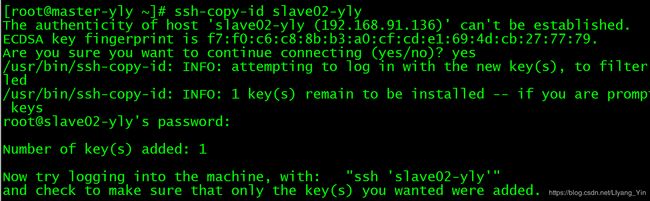

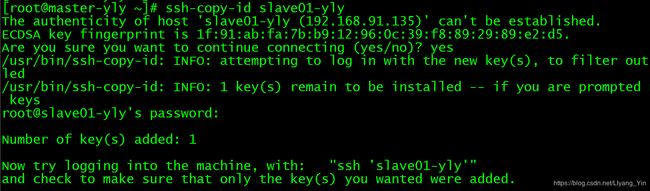
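A single `ntpdate` run sets the clock once, and the slaves may drift again afterwards. One common approach, shown here only as a sketch, is a cron entry that repeats the sync. The helper name `ntp_cron_line`, the 30-minute default, and the `/usr/sbin/ntpdate` path are assumptions; check the path with `command -v ntpdate` before relying on it.

```shell
#!/bin/sh
# Print a crontab entry that re-syncs this host against an NTP server
# every N minutes (default 30). The ntpdate path is an assumption.
ntp_cron_line() {
    server="$1"
    minutes="${2:-30}"
    printf '*/%s * * * * /usr/sbin/ntpdate %s >/dev/null 2>&1\n' "$minutes" "$server"
}
```

To install the entry on a slave while keeping any existing crontab lines: `(crontab -l 2>/dev/null; ntp_cron_line master-yly 30) | crontab -`.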

4.5 Configure passwordless SSH login (only from the master to the slaves)

[root@master-yly ~]# ssh-keygen

[root@master-yly ~]# ssh-copy-id master-yly

[root@master-yly ~]# ssh-copy-id slave02-yly

[root@master-yly ~]# ssh-copy-id slave01-yly

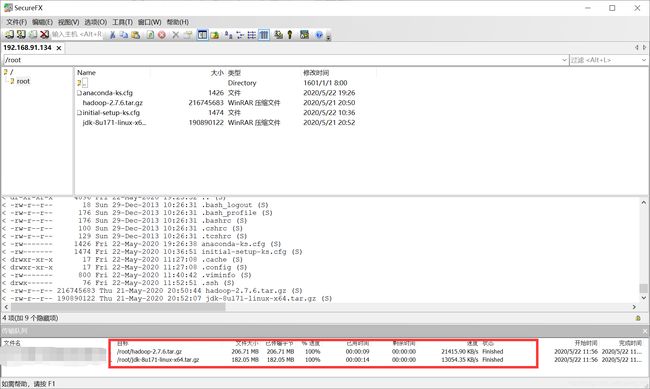

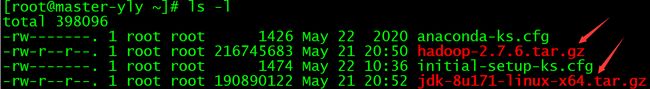
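Before moving on, it can help to confirm that key-based login really works for every host, since the Hadoop start scripts rely on it. The following is a sketch with a hypothetical function name, `check_passwordless_ssh`. It uses the standard ssh options `BatchMode` and `ConnectTimeout` so that a missing key produces a failure instead of a password prompt.

```shell
#!/bin/sh
# Report whether key-based login from this node works for each given host.
# BatchMode=yes makes ssh fail instead of prompting for a password.
check_passwordless_ssh() {
    failed=0
    for host in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true 2>/dev/null; then
            echo "ok: $host"
        else
            echo "FAILED: $host"
            failed=1
        fi
    done
    return "$failed"
}
```

On the master, `check_passwordless_ssh master-yly slave01-yly slave02-yly` should print one `ok:` line per host once the keys have been copied.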

4.6 Upload the installation packages to the server
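The original gives no commands for this step. One possible way to do it, assuming the two archives used later in this guide were downloaded to a workstation with `scp` available, is sketched below. The function name `upload_packages` is hypothetical, and the target `/root/` is an assumption based on the later `mv` commands being run from root's home directory.

```shell
#!/bin/sh
# Copy installation archives to a remote directory after checking that
# every local file exists. Usage: upload_packages TARGET FILE...
upload_packages() {
    target="$1"
    shift
    for archive in "$@"; do
        if [ ! -f "$archive" ]; then
            echo "missing: $archive" >&2
            return 1
        fi
    done
    scp "$@" "$target"
}
```

Example call from the directory holding the downloads: `upload_packages root@192.168.91.134:/root/ hadoop-2.7.6.tar.gz jdk-8u171-linux-x64.tar.gz`.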

4.7 Install and configure the JDK

# Create a directory to hold the Hadoop-related installation packages

[root@master-yly ~]# mkdir /opt/hadoop

# Move the installation packages into the new directory

[root@master-yly ~]# mv hadoop-2.7.6.tar.gz /opt/hadoop/

[root@master-yly ~]# mv jdk-8u171-linux-x64.tar.gz /opt/hadoop/

[root@master-yly ~]# cd /opt/hadoop/

# Extract the JDK archive

[root@master-yly hadoop]# tar -zxvf jdk-8u171-linux-x64.tar.gz

# Edit /etc/profile to set the environment variables

[root@master-yly hadoop]# vim /etc/profile

export JAVA_HOME=/opt/hadoop/jdk1.8.0_171

export PATH=$PATH:$JAVA_HOME/bin

# Reload the environment so the new variables take effect

[root@master-yly hadoop]# source /etc/profile

# Verify the installation

[root@master-yly hadoop]# java -version

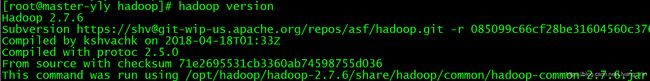

4.8 Install Hadoop

# Extract the Hadoop archive

[root@master-yly hadoop]# tar -zxvf hadoop-2.7.6.tar.gz

# Edit /etc/profile to set the environment variables

[root@master-yly hadoop]# vim /etc/profile

export HADOOP_HOME=/opt/hadoop/hadoop-2.7.6

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

# Reload the environment so the new variables take effect

[root@master-yly hadoop]# source /etc/profile

# Verify the installation

[root@master-yly hadoop]# hadoop version
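As an alternative to editing /etc/profile by hand twice, the JDK and Hadoop variables can be kept together in one drop-in file. This is a sketch, not the method used in this guide. The function name `write_hadoop_profile` and the file name `/etc/profile.d/hadoop.sh` are assumptions, relying on CentOS 7 sourcing `/etc/profile.d/*.sh` from /etc/profile at login.

```shell
#!/bin/sh
# Write the JDK and Hadoop environment variables to a single drop-in file.
# The default output path is an assumed name, not one used by the guide.
write_hadoop_profile() {
    out="${1:-/etc/profile.d/hadoop.sh}"
    cat > "$out" <<'EOF'
export JAVA_HOME=/opt/hadoop/jdk1.8.0_171
export HADOOP_HOME=/opt/hadoop/hadoop-2.7.6
export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
EOF
}
```

After running `write_hadoop_profile` as root, `source /etc/profile.d/hadoop.sh` applies the variables to the current shell. If you take this route, the drop-in file is what has to be copied to the slaves later, in place of /etc/profile.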

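4.9 Edit the Hadoop configuration files

Note: the configuration files are in /opt/hadoop/hadoop-2.7.6/etc/hadoop/.

# Edit core-site.xml

[root@master-yly hadoop]# cd hadoop-2.7.6/etc/hadoop/

[root@master-yly hadoop]# vim core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master-yly:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/hadoop/hadoop-2.7.6/hdfs</value>
  </property>
</configuration>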

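# Edit hdfs-site.xml

[root@master-yly hadoop]# vim hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>slave02-yly:9001</value>
  </property>
</configuration>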

# Edit mapred-site.xml

[root@master-yly hadoop]# cp mapred-site.xml.template mapred-site.xml

[root@master-yly hadoop]# vim mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

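# Edit yarn-site.xml

[root@master-yly hadoop]# vim yarn-site.xml

<configuration>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>master-yly</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>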

# Edit the slaves file

[root@master-yly hadoop]# vim slaves

master-yly

slave01-yly

slave02-yly

# Edit hadoop-env.sh

[root@master-yly hadoop]# vim hadoop-env.sh

export JAVA_HOME=/opt/hadoop/jdk1.8.0_171
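Each of the four *-site.xml files follows the same pattern: a `<configuration>` element holding `<property>` entries, each with a `<name>` and a `<value>`. The sketch below generates such a file from name/value pairs, which may be handy when the same settings have to be reproduced on another cluster. `write_site_xml` is a hypothetical helper, not a Hadoop tool. It writes values as given, so they must not contain XML special characters.

```shell
#!/bin/sh
# Write a Hadoop *-site.xml file from name/value pairs.
# Usage: write_site_xml OUTPUT_FILE NAME VALUE [NAME VALUE ...]
write_site_xml() {
    out="$1"
    shift
    {
        printf '%s\n' '<?xml version="1.0" encoding="UTF-8"?>'
        printf '%s\n' '<configuration>'
        # Consume the remaining arguments two at a time.
        while [ "$#" -ge 2 ]; do
            printf '  <property>\n'
            printf '    <name>%s</name>\n' "$1"
            printf '    <value>%s</value>\n' "$2"
            printf '  </property>\n'
            shift 2
        done
        printf '%s\n' '</configuration>'
    } > "$out"
}
```

For example, the core-site.xml settings from this section would be written with `write_site_xml core-site.xml fs.defaultFS hdfs://master-yly:9000 hadoop.tmp.dir /opt/hadoop/hadoop-2.7.6/hdfs`.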

4.10 Copy the configured environment to the slave nodes

# Copy the JDK to the slave nodes

[root@master-yly ~]# scp -r /opt/hadoop/jdk1.8.0_171/ slave01-yly:/opt/hadoop/

[root@master-yly ~]# scp -r /opt/hadoop/jdk1.8.0_171/ slave02-yly:/opt/hadoop/

# Copy Hadoop to the slave nodes

[root@master-yly ~]# scp -r /opt/hadoop/hadoop-2.7.6/ slave01-yly:/opt/hadoop/

[root@master-yly ~]# scp -r /opt/hadoop/hadoop-2.7.6/ slave02-yly:/opt/hadoop/

# Copy the environment variables to the slave nodes

[root@master-yly hadoop]# scp /etc/profile slave01-yly:/etc/

[root@master-yly hadoop]# scp /etc/profile slave02-yly:/etc/
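The per-host commands above can be folded into a loop, which makes it harder to skip a node by accident. This is a sketch under the assumption that passwordless SSH from the master is already working. `SLAVES` and `distribute_to_slaves` are hypothetical names. It also creates /opt/hadoop on each slave first, since nothing earlier in the guide creates that directory there.

```shell
#!/bin/sh
# Copy the JDK, Hadoop and /etc/profile from the master to each slave.
SLAVES="slave01-yly slave02-yly"

distribute_to_slaves() {
    for slave in $SLAVES; do
        # Make sure the target directory exists before copying into it.
        ssh "$slave" "mkdir -p /opt/hadoop" || return 1
        scp -r /opt/hadoop/jdk1.8.0_171 /opt/hadoop/hadoop-2.7.6 "$slave:/opt/hadoop/" || return 1
        scp /etc/profile "$slave:/etc/" || return 1
    done
}
```

Call `distribute_to_slaves` on the master. The loop stops at the first failed copy, so a non-zero exit status means at least one slave is incomplete.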

4.11 Format the Hadoop NameNode

[root@master-yly hadoop]# hdfs namenode -format
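Formatting is meant to be done once. Running it again on a NameNode that already holds metadata assigns a new cluster ID, after which the existing DataNodes typically refuse to register. A guard like the one below can prevent an accidental re-format. It is a sketch with hypothetical function names, and it assumes `dfs.namenode.name.dir` is left at its default, which places the metadata under `dfs/name` inside `hadoop.tmp.dir`.

```shell
#!/bin/sh
# Succeed if the given NameNode metadata directory has already been formatted.
# A formatted directory contains current/VERSION.
namenode_is_formatted() {
    name_dir="$1"
    [ -f "$name_dir/current/VERSION" ]
}

# Format only when no existing metadata is found.
format_if_needed() {
    name_dir="$1"
    if namenode_is_formatted "$name_dir"; then
        echo "already formatted: $name_dir"
    else
        hdfs namenode -format
    fi
}
```

With the settings from section 4.9, the call would be `format_if_needed /opt/hadoop/hadoop-2.7.6/hdfs/dfs/name`.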

4.12 Start the cluster

[root@master-yly hadoop]# start-all.sh

4.13 Verify the startup

# master-yly:

[root@master-yly ~]# jps

16626 DataNode

16916 ResourceManager

16468 NameNode

17048 NodeManager

18095 Jps

# slave01-yly:

[root@slave01-yly ~]# jps

16993 NodeManager

16823 DataNode

17320 Jps

# slave02-yly:

[root@slave02-yly ~]# jps

48538 NodeManager

48906 Jps

48204 DataNode

48367 SecondaryNameNode
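Reading the `jps` listings by eye works for three nodes, but the comparison is easy to automate. The sketch below reads `jps` output on standard input and reports any expected daemon that is absent. `missing_daemons` is a hypothetical helper, and the expected daemon names per node are taken from the sample output above.

```shell
#!/bin/sh
# Read `jps` output on stdin and print each expected daemon that is not listed.
# Returns non-zero if anything is missing.
missing_daemons() {
    # Keep only the process-name column of the jps output.
    running="$(awk '{ print $2 }')"
    status=0
    for daemon in "$@"; do
        # -x matches whole lines, so NameNode does not match SecondaryNameNode.
        if ! printf '%s\n' "$running" | grep -qx "$daemon"; then
            echo "missing: $daemon"
            status=1
        fi
    done
    return "$status"
}
```

On the master: `jps | missing_daemons NameNode DataNode ResourceManager NodeManager`. On slave02-yly the expected list would be `DataNode NodeManager SecondaryNameNode`. No output and a zero exit status mean every listed daemon is running.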

5、项目使用说明

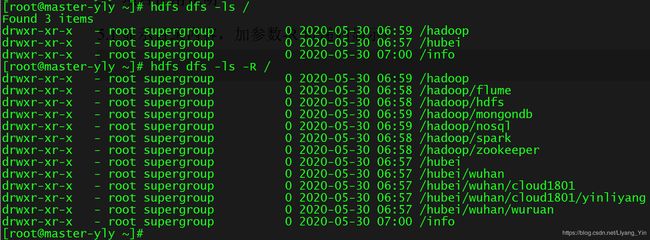

5.1显示目录内容,加参数-R可递归显示

[root@master-yly ~]# hdfs dfs -ls -R /

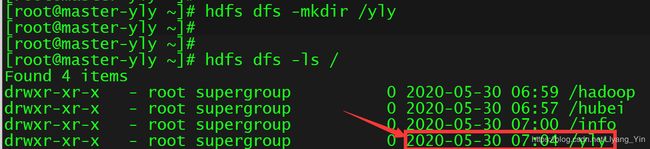

5.2 Create a directory

[root@master-yly ~]# hdfs dfs -mkdir /yly

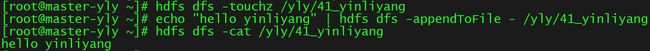

5.3 Create a file and write content to it

[root@master-yly ~]# hdfs dfs -touchz /yly/41_yinliyang

[root@master-yly ~]# echo "hello yinliyang" | hdfs dfs -appendToFile - /yly/41_yinliyang

[root@master-yly ~]# hdfs dfs -cat /yly/41_yinliyang

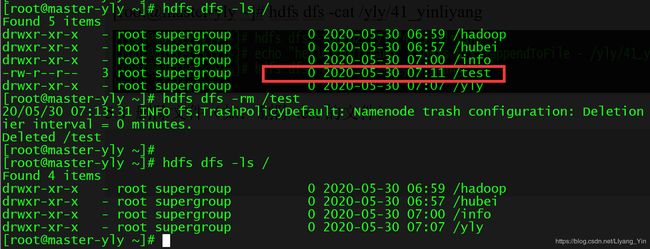

5.4 Delete a file (for example, the file test)

[root@master-yly ~]# hdfs dfs -rm /test

5.5 Upload a local file to HDFS

[root@master-yly ~]# hdfs dfs -put test /yly

5.6 Download a file from HDFS to the local filesystem

[root@master-yly ~]# hdfs dfs -get /yly/test /home/

5.7 Copy a file

[root@master-yly ~]# hdfs dfs -cp /yly/41_yinliyang /hadoop

5.8 Move a file

[root@master-yly ~]# hdfs dfs -mv /hadoop/41_yinliyang /info
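The copy and move commands above behave differently depending on whether the destination already exists as a directory. If /hadoop does not exist, `-cp` creates a file with that name instead of copying into a directory, and the later `-mv` from /hadoop/41_yinliyang then has nothing to move. Assuming the intent is to copy and move into directories, a small guard can create them first. `ensure_hdfs_dir` is a hypothetical helper built on the standard `hdfs dfs -test -d` and `hdfs dfs -mkdir -p` commands.

```shell
#!/bin/sh
# Create an HDFS directory only if it does not exist yet.
# `hdfs dfs -test -d PATH` exits 0 when PATH is an existing directory.
ensure_hdfs_dir() {
    if hdfs dfs -test -d "$1"; then
        echo "exists: $1"
    else
        hdfs dfs -mkdir -p "$1" && echo "created: $1"
    fi
}
```

Running `ensure_hdfs_dir /hadoop` and `ensure_hdfs_dir /info` before sections 5.7 and 5.8 makes both commands place the file inside the named directory.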