[OpenCV Notes] Tracking Moving Objects with Pyramidal Optical Flow

For the theory, see https://blog.csdn.net/zouxy09/article/details/8683859

Environment: OpenCV 3.3 + VS2017

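In short, optical flow is the instantaneous velocity of a pixel at a given moment. With this velocity you can estimate where a pixel from the previous frame ends up in the next frame.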

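The link above covers five ways of computing optical flow. The pyramidal method comes from the paper "Pyramidal Implementation of the Lucas Kanade Feature Tracker: Description of the Algorithm", which explains the principle, the mathematical derivation, and a pseudocode implementation in detail, and is a great help in understanding the method.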
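To make the core step concrete, here is a minimal sketch of the Lucas-Kanade solve for a single window at a single pyramid level: the image derivatives inside the window are accumulated into a 2x2 system, which is then solved for the flow (u, v). The `Gradient` and `Flow` types, the function name, and the `minDet` cutoff are hypothetical names for this illustration, not OpenCV API, and the sketch leaves out the pyramid and the iterative refinement that the paper describes.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical helper types for this sketch (not OpenCV types).
struct Gradient { float ix, iy, it; };    // spatial (ix, iy) and temporal (it) derivatives at one pixel
struct Flow { float u, v; bool valid; };  // estimated velocity and whether the system was solvable

// Solve the 2x2 Lucas-Kanade normal equations for one window:
//   [sum ix*ix  sum ix*iy] [u]     [sum ix*it]
//   [sum ix*iy  sum iy*iy] [v] = - [sum iy*it]
// minDet is an assumed cutoff for rejecting windows without enough texture.
Flow solveLucasKanade(const std::vector<Gradient>& window, float minDet = 1e-6f)
{
    assert(!window.empty());
    float a = 0, b = 0, c = 0, p = 0, q = 0;
    for (const Gradient& g : window) {
        a += g.ix * g.ix;
        b += g.ix * g.iy;
        c += g.iy * g.iy;
        p += g.ix * g.it;
        q += g.iy * g.it;
    }
    const float det = a * c - b * b;
    if (std::fabs(det) < minDet)
        return {0, 0, false};  // gradients all point the same way: the flow is ambiguous
    return {(-c * p + b * q) / det, (b * p - a * q) / det, true};
}
```

A window whose gradients all point in one direction (a straight edge, for example) gives a determinant of zero and is reported as invalid. Rejecting such poorly conditioned windows is the same kind of bad-point filtering that the article attributes to minEigThreshold.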

In OpenCV 3.3, pyramidal optical flow is wrapped in the function calcOpticalFlowPyrLK:

    void calcOpticalFlowPyrLK( InputArray prevImg, InputArray nextImg,
                               InputArray prevPts, InputOutputArray nextPts,
                               OutputArray status, OutputArray err,
                               Size winSize = Size(21,21), int maxLevel = 3,
                               TermCriteria criteria = TermCriteria(TermCriteria::COUNT+TermCriteria::EPS, 30, 0.01),
                               int flags = 0, double minEigThreshold = 1e-4 );

prevImg and nextImg are two adjacent frames;

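prevPts holds the coordinates of the points in the previous frame that need to be matched, i.e. the points to track; nextPts receives the coordinates of the matching points found in the next frame;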

status flags whether the flow for each point was found successfully, and its elements must be of type uchar; err records the error for each point;
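Since status marks which points were actually tracked, it is worth dropping the failed ones before using nextPts. The following is a minimal sketch of that filtering step using only the standard library; `Pt`, `Match`, and `keepTracked` are hypothetical names for this illustration, with `Pt` standing in for cv::Point2f.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Pt { float x, y; };     // hypothetical stand-in for cv::Point2f
struct Match { Pt from, to; }; // a point and its tracked position in the next frame

// Keep only the point pairs whose status entry is non-zero (flow found).
// The three vectors are expected to have the same length, one entry per input point.
std::vector<Match> keepTracked(const std::vector<Pt>& prevPts,
                               const std::vector<Pt>& nextPts,
                               const std::vector<unsigned char>& status)
{
    assert(prevPts.size() == nextPts.size() && nextPts.size() == status.size());
    std::vector<Match> tracked;
    for (std::size_t i = 0; i < status.size(); ++i) {
        if (status[i])
            tracked.push_back({prevPts[i], nextPts[i]});
    }
    return tracked;
}
```

With three input points and a status of {1, 0, 1}, the result contains the first and third pairs only; the second point's entry in nextPts is ignored because its flow was not found.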

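winSize is the size of the search window at each pyramid level: the larger its area, the more accurate the result, but the longer it takes. maxLevel is the number of pyramid levels; according to the paper, 3 to 4 levels are enough. The default here is 3, which makes 4 levels in practice once the base level is counted, and it usually does not need changing;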
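A small sketch can show why a few levels are enough. It lists the image size at every level and how large a motion looks at a given level, assuming each level halves the previous one and rounds odd sizes up. `LevelSize`, `pyramidSizes`, and `motionAtLevel` are hypothetical names for this illustration.

```cpp
#include <cassert>
#include <vector>

struct LevelSize { int width, height; }; // hypothetical helper type

// Image size at each pyramid level, from level 0 (full resolution) up to maxLevel.
// Assumes each level halves the previous one, rounding odd sizes up.
std::vector<LevelSize> pyramidSizes(int width, int height, int maxLevel)
{
    assert(maxLevel >= 0);
    std::vector<LevelSize> sizes;
    for (int level = 0; level <= maxLevel; ++level) {
        sizes.push_back({width, height});
        width = (width + 1) / 2;
        height = (height + 1) / 2;
    }
    return sizes;
}

// A motion of `pixels` at full resolution shrinks by a factor of 2 per level.
double motionAtLevel(double pixels, int level)
{
    assert(level >= 0);
    return pixels / (1 << level);
}
```

For a 640x480 frame and maxLevel = 3 this gives four images, the smallest being 80x60, and a 16-pixel motion at full resolution appears as only 2 pixels at the coarsest level, well inside a 21x21 window.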

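criteria is the termination condition for the iteration; to change it, declare your own TermCriteria object, set its type, iteration count, and desired accuracy, and pass it in;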
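The default criteria combines two limits, COUNT and EPS: the iteration stops as soon as either the iteration budget is used up or the update becomes smaller than the requested accuracy. The sketch below illustrates that combined rule on a generic iteration; `StopRule` and `iterate` are hypothetical names, and this is not how OpenCV implements the check internally.

```cpp
#include <cassert>
#include <cmath>
#include <functional>

// Hypothetical mirror of a COUNT + EPS rule: stop after maxCount iterations,
// or earlier once an update changes the estimate by less than epsilon.
struct StopRule { int maxCount; double epsilon; };

// Repeats x = step(x) until one of the two limits is hit.
// Returns the number of iterations actually performed.
int iterate(double& x, const std::function<double(double)>& step, StopRule rule)
{
    assert(rule.maxCount > 0);
    int used = 0;
    while (used < rule.maxCount) {
        const double next = step(x);
        const double change = std::fabs(next - x);
        x = next;
        ++used;
        if (change < rule.epsilon)
            break; // accuracy reached before the iteration budget ran out
    }
    return used;
}
```

Lowering the count caps the time spent per point, while lowering epsilon asks for more refinement when the budget allows it. The defaults of 30 and 0.01 in the signature above set both limits at once.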

flags and minEigThreshold relate to the error measure and to filtering out bad points respectively, and usually do not need changing.

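Below is the C++ code I wrote while practicing motion tracking with OpenCV, offered for reference. I am a programming novice and the code is a bit messy, so please bear with me.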

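    #include "stdafx.h"
    #include "opencv2/video/tracking.hpp"
    #include "opencv2/imgproc.hpp"
    #include "opencv2/videoio.hpp"
    #include "opencv2/highgui.hpp"
    // The names of the next two headers are missing in the source;
    // <iostream> and <vector> are assumed from what the code uses.
    #include <iostream>
    #include <vector>

    using namespace cv;
    using namespace std;

    #define WINDOW_CAP "Motion capture"
    #define WINDOW_PARAM "Parameters"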

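    Mat curFrame;
    Mat curGrayFrame, prevGrayFrame; // frames are converted to grayscale before processing
    Mat ROIFrame, newFrame;          // newFrame is used to draw the drag rectangle; ROIFrame is the region of interest being processed
    vector<uchar> status;            // per-point flag: was the flow found?
    vector<float> err;               // per-point error

    /* Variables used by the trackbar events */
    int nTrackLevel = 1; // a point is marked in green only if it moved at least this far between two frames
    int nPlay = 0;       // whether the video is playing
    int nPyrLevel = 3;   // number of pyramid levels
    int nWinSize = 21;   // search window size for pyramidal optical flow

    enum DIRECTION {
        KEEP = 0,
        OTHER_DIRECTIONS = 1
    };

    float getDistance(Point pointO, Point pointA);
    void drawLine(Mat img, Point SourceP, Point TargetP, DIRECTION type);
    DIRECTION getDirection(Point SourceP, Point TargetP);

    /* The next five declarations are for drawing the rectangle */
    Rect g_rectangle;
    bool g_bDrawingBox = false;
    bool g_bDrawFinished = false;
    void on_MouseHandle(int event, int x, int y, int flags, void* param);
    void DrawRectangle(Mat& img, Rect box);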

    int main()
    {
        VideoCapture cap;
        cap.open("剪辑视频-敦刻尔克.avi");
        if (!cap.isOpened())
        {
            cout << "Failed to open the video";
            return -1;
        }
        namedWindow(WINDOW_CAP);
        namedWindow(WINDOW_PARAM);
        createTrackbar("Sensitivity", WINDOW_PARAM, &nTrackLevel, 15);
        createTrackbar("Pause/Play", WINDOW_CAP, &nPlay, 1);
        createTrackbar("Pyramid levels", WINDOW_PARAM, &nPyrLevel, 3);
        createTrackbar("Window size", WINDOW_PARAM, &nWinSize, 30);
        setMouseCallback(WINDOW_CAP, on_MouseHandle, (void*)&newFrame);
        cap >> curFrame;
        if (curFrame.empty())
            return -1; // nothing to show if the first frame could not be read
        imshow(WINDOW_CAP, curFrame);

        while (!curFrame.empty())
        {
            curFrame.copyTo(newFrame);
            DrawRectangle(newFrame, g_rectangle);
            if (nPlay)
            {
                cap >> curFrame;
                if (curFrame.empty())
                    break;
                /* Clamp the rectangle to the frame so that taking the ROI cannot go out of bounds */
                Rect roi = g_rectangle & Rect(0, 0, curFrame.cols, curFrame.rows);
                ROIFrame = (g_bDrawFinished && roi.area() > 0) ? curFrame(roi) : Mat();
                vector<Point2f> points[2];
                /* Processing every pixel would make even one frame very slow, so sample a point every 10 pixels */
                for (int i = 0; i < curFrame.cols; i += 10)
                    for (int j = 0; j < curFrame.rows; j += 10)
                    {
                        points[0].push_back(Point2f((float)i, (float)j));
                    }
                cvtColor(curFrame, curGrayFrame, COLOR_BGR2GRAY);
                if (!prevGrayFrame.empty() && !ROIFrame.empty())
                {
                    /* Run the sparse optical flow on the GPU to get the tracked positions; drawing the marks is still done on the CPU */
                    /* At startup, initializing the GPU noticeably takes about half a second */
                    UMat UprevGrayFrame, UcurGrayFrame;
                    prevGrayFrame.getUMat(ACCESS_READ).copyTo(UprevGrayFrame);
                    curGrayFrame.getUMat(ACCESS_READ).copyTo(UcurGrayFrame);
                    /* The trackbar can go down to 0, so keep the window at least 3 pixels wide */
                    int win = nWinSize < 3 ? 3 : nWinSize;
                    calcOpticalFlowPyrLK(UprevGrayFrame, UcurGrayFrame,
                        points[0], points[1], status, err, Size(win, win), nPyrLevel);
                    Point2f origin((float)roi.x, (float)roi.y);
                    for (size_t i = 0; i < points[1].size(); i++)
                    {
                        float distance = getDistance(points[0][i], points[1][i]);
                        /* Adjacent frames can only differ by small motion, so < 10 filters out noise */
                        /* It is unclear where the large-displacement noise comes from; skipping points
                           whose status is 0 (flow not found) removes one likely source */
                        if (status[i] && distance >= nTrackLevel && distance < 10)
                        {
                            DIRECTION dir = getDirection(points[0][i], points[1][i]);
                            /*
                            Drawing positions are relative to the ROI, but the coordinates
                            obtained are in the whole curFrame, so the origin of the
                            rectangle must be subtracted to get relative values
                            */
                            drawLine(ROIFrame, points[0][i] - origin, points[1][i] - origin, dir);
                        }
                    }
                }
                curGrayFrame.copyTo(prevGrayFrame);
            }
            imshow(WINDOW_CAP, newFrame);
            waitKey(42); // about 24 frames per second
        }
        waitKey();
        return 0;
    }

    void drawLine(Mat img, Point SourceP, Point TargetP, DIRECTION type)
    {
        switch (type)
        {
        case OTHER_DIRECTIONS:
            line(img, SourceP, TargetP, Scalar(0, 255, 0), 2);
            break;
        /* KEEP is not used here */
        case KEEP:
            circle(img, SourceP, 2, Scalar(0, 255, 0), -1, 8);
            break;
        default:
            break;
        }
    }

    /* Euclidean distance between two points */
    float getDistance(Point pointO, Point pointA)
    {
        float dx = (float)(pointO.x - pointA.x);
        float dy = (float)(pointO.y - pointA.y);
        return sqrtf(dx * dx + dy * dy);
    }

    /* KEEP if the point did not move, OTHER_DIRECTIONS otherwise */
    DIRECTION getDirection(Point SourceP, Point TargetP)
    {
        if (SourceP.x == TargetP.x && SourceP.y == TargetP.y)
            return DIRECTION::KEEP;
        return DIRECTION::OTHER_DIRECTIONS;
    }

    void on_MouseHandle(int event, int x, int y, int flags, void* param)
    {
        Mat& image = *(Mat*)param;
        switch (event)
        {
        case EVENT_MOUSEMOVE:
        {
            if (g_bDrawingBox)
            {
                g_rectangle.width = x - g_rectangle.x;
                g_rectangle.height = y - g_rectangle.y;
            }
        }
        break;
        case EVENT_LBUTTONDOWN:
        {
            g_bDrawFinished = false;
            g_bDrawingBox = true;
            g_rectangle = Rect(x, y, 0, 0);
        }
        break;
        case EVENT_LBUTTONUP:
        {
            g_bDrawingBox = false;
            g_bDrawFinished = true;
            if (g_rectangle.width < 0)
            {
                g_rectangle.x += g_rectangle.width;
                g_rectangle.width *= -1;
            }
            if (g_rectangle.height < 0)
            {
                g_rectangle.y += g_rectangle.height;
                g_rectangle.height *= -1;
            }
            DrawRectangle(image, g_rectangle);
        }
        break;
        }
    }

void DrawRectangle(Mat& img, Rect box)

{

rectangle(img, box.tl(), box.br(), Scalar(225,

105, 65), 2);

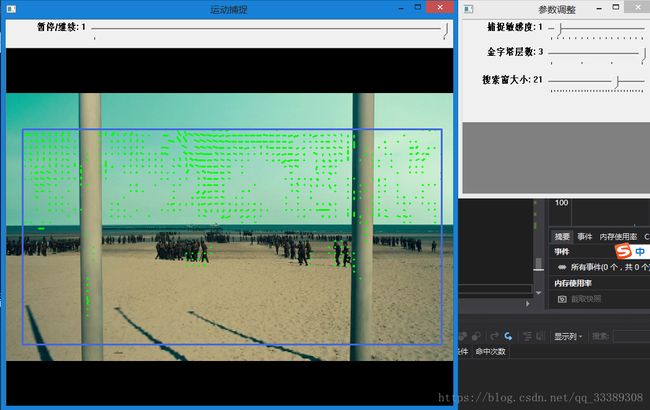

} 以下是效果图:视频中间走动的士兵成功地识别出来并且做了标记,由于镜头有远近拉伸,故天空和栏杆也有运动标记。

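At first I computed the optical flow on the CPU and it stuttered noticeably, processing only 5-6 frames per second. After searching on Baidu I found that GPU computation in OpenCV 3 is very convenient: declare a Mat as a UMat and the program runs the computation on the GPU automatically (through OpenCL, when a suitable device is available), and converting between Mat and UMat is just as easy. After switching the optical flow computation to the GPU the speedup was significant: with the same parameters it reaches more than 30 frames per second.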
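To put numbers like these on a firmer footing, the per-frame work can be timed directly. The following is a minimal sketch using std::chrono; `measureFps` and its `processFrame` parameter are hypothetical names, and the callable is meant to wrap whatever one iteration of the tracking loop does.

```cpp
#include <cassert>
#include <chrono>

// Runs processFrame() `frames` times and returns the average frames per second.
// processFrame stands for one iteration of the per-frame work (for example,
// reading a frame and computing the optical flow).
template <typename Work>
double measureFps(Work&& processFrame, int frames)
{
    assert(frames > 0);
    using Clock = std::chrono::steady_clock;
    const Clock::time_point start = Clock::now();
    for (int i = 0; i < frames; ++i)
        processFrame();
    const std::chrono::duration<double> elapsed = Clock::now() - start;
    // Guard against a zero reading when the work is too short to measure.
    return elapsed.count() > 0.0 ? frames / elapsed.count() : 0.0;
}
```

Since GPU initialization takes about half a second on the first call, it is probably fairer to process a few frames before starting the measurement, so that the startup cost does not drag down the average.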