支持向量机分类中的SMO算法以及Python实现

上一篇博客讲到支持向量机分类,而本文将介绍支持向量机分类最常用的学习算法序列最小最优化(SMO)。SMO算法是分解方法(decomposition method)的一种极端情况,即每次迭代的工作子集(working set)只含有两个变量。SMO算法存在多种不同的WSS(working set selection)启发式搜索准则。本文主要讲Platt(1999)年首次提出的SMO算法以及Fan(2005)提出的一个WSS准则。另外,SMO具体应用到支持向量机分类和回归时存在细小差别,但核心思想是一致的。本文暂只讲SMO应用于支持向量机分类的情况。

核心思想

每次更新两个拉格朗日乘子直到所有的拉格朗日乘子都满足KKT条件

算法流程

本算法基于Platt(1999)首次提出的SMO算法。

- Input: 训练数据集(X,y),精度epsilon,最大迭代次数maxstep, 惩罚因子C,核函数及其参数

- Output: 所有样本对应的拉格朗日乘子,阈值b

- Step1:初始化样本的拉格朗日乘子及误差,计算核内积矩阵

- Step2:挑选alpha1。具体先搜索间隔边界上的支持向量( 0<α<C 0 < α < C ),若不满足KKT条件则选中。若间隔边界上的样本均满足KKT条件,则搜索整个数据集。若整个数据集样本在精度epsilon范围内均满足KKT条件,算法终止,反之转步骤3

- Step3: 挑选alpha2。从非边界支持向量的样本中,挑选出使得|E1-E2|(E为预测误差)最大的alpha,若不存在则随机选择一个与第一个不同的样本的alpha

- Step4:更新alpha1, alpha2,对应的预测误差及阈值b。转步骤2

注:具体更新公式比较复杂,参考李航《统计学习方法》

启发式搜索

每个迭代,如何挑选最合适的两个变量alpha是至关重要的。本文另外实现了Rong-En Fan等提出的WSS准则(文中WSS3),经初步测试效果比Platt(1999)提出的启发式搜索方法预测准确率更高。下面简单介绍下(代码在文末):

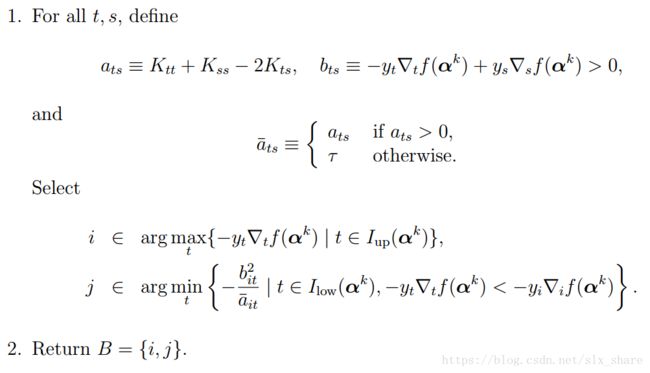

该准测核心是根据梯度从正类负类中各挑选一个alpha组成“violating pair”(违背最严重的一对alpha)作为“working set”。 如何描述违背最严重?WSS的判定标准是选择的working set构成的子问题的优化函数的值最小:

其中 −b2ita¯it − b i t 2 a ¯ i t 就是子问题的解。式中K是指核内积。 ▽tf(αk) ▽ t f ( α k ) 是梯度,值为 Qα−e Q α − e ( Q=yiyjKij,e为元素全部为1的向量 Q = y i y j K i j , e 为 元 素 全 部 为 1 的 向 量 )

(下文代码根据Fan的伪码书写,用Q替代了K,本质不变不影响子问题解的大小比较,详细可看论文)

代码

"""

支持向量机

学习方法:不等式约束的最优化问题,求解凸二次规划的最优化算法, 本程序采用序列最小最优化算法(SOM)

"""

import numpy as np

import math

class SVM:

def __init__(self, epsilon=1e-5, maxstep=500, C=1.0, kernel_option=True, gamma=None):

self.epsilon = epsilon

self.maxstep = maxstep

self.C = C

self.kernel_option = kernel_option # 是否选择核函数

self.gamma = gamma # 高斯核参数

self.kernel_arr = None # n*n 存储核内积

self.X = None # 训练数据集

self.y = None # 类标记值,是计算w,b的参数,故存入模型中

self.alpha_arr = None # 1*n 存储拉格朗日乘子, 每个样本对应一个拉格朗日乘子

self.b = 0 # 阈值b, 初始化为0

self.err_arr = None # 1*n 记录每个样本的预测误差

self.N = None

def init_param(self, X_data, y_data):

# 初始化参数, 包括核内积矩阵、alpha和预测误差

self.N = X_data.shape[0]

self.X = X_data

self.y = y_data

if self.gamma is None:

self.gamma = 1.0 / X_data.shape[1]

self.cal_kernel(X_data)

self.alpha_arr = np.zeros(self.N)

self.err_arr = - self.y # 将拉格朗日乘子全部初始化为0,则相应的预测值初始化为0,预测误差就是-y_data

return

def _gaussian_dot(self, x1, x2):

# 计算两个样本之间的高斯内积

return math.exp(-self.gamma * np.square(x1 - x2).sum())

def cal_kernel(self, X_data):

# 计算核内积矩阵

if self.kernel_option:

self.kernel_arr = np.ones((self.N, self.N))

for i in range(self.N):

for j in range(i + 1, self.N):

self.kernel_arr[i, j] = self._gaussian_dot(X_data[i], X_data[j])

self.kernel_arr[j, i] = self.kernel_arr[i, j]

else:

self.kernel_arr = X_data @ X_data.T # 不使用高斯核,线性分类器

return

def select_second_alpha(self, ind1):

# 挑选第二个变量alpha, 返回索引

E1 = self.err_arr[ind1]

ind2 = None

max_diff = 0 # 初始化最大的|E1-E2|

candidate_alpha_inds = np.nonzero(self.err_arr)[0] # 存在预测误差的样本作为候选样本

if len(candidate_alpha_inds) > 1:

for i in candidate_alpha_inds:

if i == ind1:

continue

tmp = abs(self.err_arr[i] - E1)

if tmp > max_diff:

max_diff = tmp

ind2 = i

if ind2 is None: # 随机选择一个不与ind1相等的样本索引

ind2 = ind1

while ind2 == ind1:

ind2 = np.random.choice(self.N)

return ind2

def update(self, ind1, ind2):

# 更新挑选出的两个样本的alpha、对应的预测值及误差和阈值b

old_alpha1 = self.alpha_arr[ind1]

old_alpha2 = self.alpha_arr[ind2]

y1 = self.y[ind1]

y2 = self.y[ind2]

if y1 == y2:

L = max(0.0, old_alpha2 + old_alpha1 - self.C)

H = min(self.C, old_alpha2 + old_alpha1)

else:

L = max(0.0, old_alpha2 - old_alpha1)

H = min(self.C, self.C + old_alpha2 - old_alpha1)

if L == H:

return 0

E1 = self.err_arr[ind1]

E2 = self.err_arr[ind2]

K11 = self.kernel_arr[ind1, ind1]

K12 = self.kernel_arr[ind1, ind2]

K22 = self.kernel_arr[ind2, ind2]

# 更新alpha2

eta = K11 + K22 - 2 * K12

if eta <= 0:

return 0

new_unc_alpha2 = old_alpha2 + y2 * (E1 - E2) / eta # 未经剪辑的alpha2

if new_unc_alpha2 > H:

new_alpha2 = H

elif new_unc_alpha2 < L:

new_alpha2 = L

else:

new_alpha2 = new_unc_alpha2

# 更新alpha1

if abs(old_alpha2 - new_alpha2) < self.epsilon * (

old_alpha2 + new_alpha2 + self.epsilon): # 若alpha2更新变化很小,则忽略本次更新

return 0

new_alpha1 = old_alpha1 + y1 * y2 * (old_alpha2 - new_alpha2)

self.alpha_arr[ind1] = new_alpha1

self.alpha_arr[ind2] = new_alpha2

# 更新阈值b

new_b1 = -E1 - y1 * K11 * (new_alpha1 - old_alpha1) - y2 * K12 * (new_alpha2 - old_alpha2) + self.b

new_b2 = -E2 - y1 * K12 * (new_alpha1 - old_alpha1) - y2 * K22 * (new_alpha2 - old_alpha2) + self.b

if 0 < new_alpha1 < self.C:

self.b = new_b1

elif 0 < new_alpha2 < self.C:

self.b = new_b2

else:

self.b = (new_b1 + new_b2) / 2

# 更新对应的预测误差

self.err_arr[ind1] = np.sum(self.y * self.alpha_arr * self.kernel_arr[ind1, :]) + self.b - y1

self.err_arr[ind2] = np.sum(self.y * self.alpha_arr * self.kernel_arr[ind2, :]) + self.b - y2

return 1

def satisfy_kkt(self, y, err, alpha):

# 在精度范围内判断是否满足KTT条件

r = y * err

# r<0,则yg<1, alpha=C则符合;r>0,则yg>1, alpha=0则符合

if (r < -self.epsilon and alpha < self.C) or (r > self.epsilon and alpha > 0):

return False

return True

def fit(self, X_data, y_data):

# 训练主函数

self.init_param(X_data, y_data)

# 启发式搜索第一个alpha时,当间隔边界上的支持向量全都满足KKT条件时,就搜索整个数据集。

# 整个训练过程需要在边界支持向量与所有样本集之间进行切换搜索,以防止无法收敛

entire_set = True

step = 0

change_pairs = 0

while step < self.maxstep and (change_pairs > 0 or entire_set): # 当搜寻全部样本,依然没有改变,则停止迭代

step += 1

change_pairs = 0

if entire_set: # 搜索整个样本集

for ind1 in range(self.N):

if not self.satisfy_kkt(y_data[ind1], self.err_arr[ind1], self.alpha_arr[ind1]):

ind2 = self.select_second_alpha(ind1)

change_pairs += self.update(ind1, ind2)

else: # 搜索间隔边界上的支持向量(bound_search)

bound_inds = np.where((0 < self.alpha_arr) & (self.alpha_arr < self.C))[0]

for ind1 in bound_inds:

if not self.satisfy_kkt(y_data[ind1], self.err_arr[ind1], self.alpha_arr[ind1]):

ind2 = self.select_second_alpha(ind1)

change_pairs += self.update(ind1, ind2)

if entire_set: # 当前是对整个数据集进行搜索,则下一次搜索间隔边界上的支持向量

entire_set = False

elif change_pairs == 0:

entire_set = True # 当前是对间隔边界上的支持向量进行搜索,若未发生任何改变,则下一次搜索整个数据集

return

def predict(self, x):

# 预测x的类别

if self.kernel_option:

kernel = np.array([self._gaussian_dot(x, sample) for sample in self.X])

g = np.sum(self.y * self.alpha_arr * kernel)

else:

g = np.sum(self.alpha_arr * self.y * (np.array([x]) @ self.X.T)[0])

return np.sign(g + self.b)

if __name__ == "__main__":

from sklearn.datasets import load_digits

data = load_digits(n_class=2)

X_data = data['data']

y_data = data['target']

inds = np.where(y_data == 0)[0]

y_data[inds] = -1

from machine_learning_algorithm.cross_validation import validate

g = validate(X_data, y_data)

for item in g:

X_train, y_train, X_test, y_test = item

S = SVM(kernel_option=False, maxstep=1000, epsilon=1e-6, C=1.0)

S.fit(X_train, y_train)

score = 0

for X, y in zip(X_test, y_test):

if S.predict(X) == y:

score += 1

print(score / len(y_test))

Fan等的WSS代码

其他部分相同(除了添加了矩阵Q)

def get_working_set(self, y_data):

# 挑选两个变量alpha, 返回索引

ind1 = -1

ind2 = -1

max_grad = - float('inf')

min_grad = float('inf')

# 挑选第一个alpha, 正类

for i in range(self.N):

if (y_data[i] == 1 and self.alpha_arr[i] < self.C) or (y_data[i] == -1 and self.alpha_arr[i] > 0):

tmp = -y_data[i] * self.grad[i]

if tmp >= max_grad:

ind1 = i

max_grad = tmp

# 挑选第二个alpha, 负类

ab_obj = float('inf')

for i in range(self.N):

if (y_data[i] == 1 and self.alpha_arr[i] > 0) or (y_data[i] == -1 and self.alpha_arr[i] < self.C):

tmp = y_data[i] * self.grad[i]

b = max_grad + tmp

if -tmp < min_grad:

min_grad = -tmp

if b > 0:

a = self.Q[ind1][ind1] + self.Q[i][i] - 2 * y_data[ind1] * y_data[i] * \

self.Q[ind1][i]

if a <= 0:

a = 1e-12

if - b ** 2 / a < ab_obj:

ind2 = i

ab_obj = - b ** 2 / a

if max_grad - min_grad >= self.epsilon: # 收敛条件

return ind1, ind2

return -1, -1

def update(self, ind1, ind2):

# 更新挑选出的两个样本的alpha、对应的预测值及误差和阈值b

old_alpha1 = self.alpha_arr[ind1]

old_alpha2 = self.alpha_arr[ind2]

y1 = self.y[ind1]

y2 = self.y[ind2]

a = self.Q[ind1][ind1] + self.Q[ind2][ind2] - 2 * y1 * y2 * self.Q[ind1][ind2]

if a <= 0:

a = 1e-12

b = -y1 * self.grad[ind1] + y2 * self.grad[ind2]

new_alpha1 = old_alpha1 + y1 * b / a

# 剪辑

s = y1 * old_alpha1 + y2 * old_alpha2

if new_alpha1 > self.C:

new_alpha1 = self.C

if new_alpha1 < 0:

new_alpha1 = 0

new_alpha2 = y2 * (s - y1 * new_alpha1)

if new_alpha2 > self.C:

new_alpha2 = self.C

if new_alpha2 < 0:

new_alpha2 = 0

new_alpha1 = y1 * (s - y2 * new_alpha2)

self.alpha_arr[ind1] = new_alpha1

self.alpha_arr[ind2] = new_alpha2

# 更新梯度

delta1 = new_alpha1 - old_alpha1

delta2 = new_alpha2 - old_alpha2

for i in range(self.N):

self.grad[i] += self.Q[i][ind1] * delta1 + self.Q[i][ind2] * delta2

return

def fit(self, X_data, y_data):

# 训练主函数

self.init_param(X_data, y_data)

step = 0

while step < self.maxstep:

step += 1

ind1, ind2 = self.get_working_set(y_data)

if ind2 == -1:

break

self.update(ind1, ind2)

# 计算阈值b

alpha0_inds = set(np.where(self.grad == 0)[0])

alphaC_inds = set(np.where(self.grad == self.C)[0])

alpha_inds = set(range(self.N)) - alphaC_inds - alpha0_inds

label_inds1 = set(np.where(y_data == 1)[0])

r1_inds = list(label_inds1 & alpha_inds)

if r1_inds:

r1 = self.grad[r1_inds].sum()

else:

min_r1 = self.grad[list(alpha0_inds & label_inds1)].min()

max_r1 = self.grad[list(alphaC_inds & label_inds1)].max()

r1 = (min_r1 + max_r1) / 2

label_inds2 = set(np.where(y_data == -1)[0])

r2_inds = list(label_inds1 & alpha_inds)

if r2_inds:

r2 = self.grad[r2_inds].sum()

else:

min_r2 = self.grad[list(alpha0_inds & label_inds2)].min()

max_r2 = self.grad[list(alphaC_inds & label_inds2)].max()

r2 = (min_r2 + max_r2) / 2

self.b = (r2 - r1) / 2

return我的GitHub

注:如有不当之处,请指正。