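- [Practical Application] Optimizers in Deep Learning

YuanDaima2048

Deep learning tool usage, PyTorch, deep learning, artificial intelligence, machine learning, Python, optimizers

Article overview: YuanDaiMa2048 blog article index. Optimizers in Deep Learning: 1. Stochastic Gradient Descent (SGD); 2. Momentum; 3. Adaptive Gradient (Adagrad); 4. Adaptive Moment Estimation (Adam); 5. RMSprop; Summary; Other. Introduction: In deep learning, an optimizer updates the model's parameters to minimize the loss function. There are many common optimization algorithms; below are several mainstream optimizers with their characteristics, principles, and PyTorch implementations: 1. Stochastic Gradient Descent (SGD). Principle: SGD works by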
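The update rules named in this outline can be compared on a toy problem. The following is a dependency-free sketch, not PyTorch's implementation: it minimizes an assumed one-dimensional loss f(x) = (x - 3)^2 with plain SGD, SGD with momentum (velocity accumulated the way torch.optim.SGD does when dampening is 0), and Adam, which combines a momentum-style first moment with an RMSprop-style second moment. The learning rates and step counts are illustrative choices; Adagrad is omitted for brevity.

```python
import math

def grad(x):
    """Gradient of the toy loss f(x) = (x - 3)^2 (an assumption for illustration)."""
    return 2.0 * (x - 3.0)

def sgd(x, lr=0.1, steps=200):
    for _ in range(steps):
        x -= lr * grad(x)                      # step against the gradient
    return x

def sgd_momentum(x, lr=0.1, momentum=0.9, steps=500):
    v = 0.0
    for _ in range(steps):
        v = momentum * v + grad(x)             # velocity: decaying sum of past gradients
        x -= lr * v
    return x

def adam(x, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g        # first moment: running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g    # second moment: running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)           # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)  # adaptively scaled step
    return x

for name, opt in [("SGD", sgd), ("Momentum", sgd_momentum), ("Adam", adam)]:
    print(f"{name}: x = {opt(0.0):.4f}")
```

All three should end near the minimum at x = 3; the point of the comparison is how each rule turns the same gradient into a step.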

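- Personal study notes 7-6: Dive into Deep Learning (PyTorch edition), Li Mu

浪子L

Deep learning, deep learning notes, computer vision, Python, artificial intelligence, neural networks, PyTorch

#AI# #DeepLearning# #SemanticSegmentation# #ComputerVision# #NeuralNetworks# Computer vision. 13.11 Fully Convolutional Networks. A fully convolutional network (FCN) uses a convolutional neural network to transform image pixels into pixel classes. This is achieved through the transposed convolution it introduces: the output class predictions correspond one-to-one with the input image at the pixel level, and the output along the channel dimension is the class prediction for the pixel at that position. 13.11.1 Constructing the Model. Below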
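To make the transposed convolution concrete, here is a minimal single-channel 1-D version in plain Python (an illustration under simplifying assumptions, not the PyTorch or D2L code). Each input element scatters a scaled copy of the kernel into the output at stride steps, which is how a transposed convolution enlarges its input. The output length follows (n - 1) * stride - 2 * padding + kernel_size, which is PyTorch's documented formula for ConvTranspose layers when dilation is 1 and output_padding is 0. The kernel_size=64, stride=32, padding=16 example shows a setting that upsamples exactly 32x.

```python
def conv_transpose1d(x, kernel, stride=1, padding=0):
    """Single-channel 1-D transposed convolution (no dilation, no output_padding)."""
    k = len(kernel)
    full_len = (len(x) - 1) * stride + k          # length before cropping the padding
    full = [0.0] * full_len
    for i, xi in enumerate(x):
        for j, wj in enumerate(kernel):
            full[i * stride + j] += xi * wj       # scatter x[i] * kernel into the output
    # In a transposed convolution, `padding` removes that many elements from each end.
    return full[padding:full_len - padding]

def conv_transpose_out_len(n, kernel_size, stride=1, padding=0):
    return (n - 1) * stride - 2 * padding + kernel_size

print(conv_transpose1d([1, 2], [1, 1, 1], stride=2))                    # [1.0, 1.0, 3.0, 2.0, 2.0]
print(conv_transpose_out_len(10, kernel_size=64, stride=32, padding=16))  # 320
```

Overlapping kernel copies add up (the middle 3.0 above), which is one reason transposed convolutions can produce checkerboard patterns when stride and kernel size do not divide evenly.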

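- [Environment Setup] Configuring an MMTracking environment

xuanyu22

Environment setup, machine learning, neural networks, deep learning, Python

Version v0.14.0. Installing torch: the numpy version must not be too high, or conflicts will occur later during installation. Install numpy first, because installing PyTorch automatically pulls in a newer numpy: `conda install numpy=1.21.5`. MMTracking supports only a limited range of torch versions, so you need to find a suitable one: conda install pytorch==1.11.0 torchvision==0.12.0 cudatoolkit=10.2 -c pytor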

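- A map of structural similarity index measurement in Python (PyTorch), MATLAB, Rust, and C++

亚图跨际

Python interdisciplinary algorithms, quantitative assessment of image compression quality, low-resolution multispectral imaging, peak signal-to-noise ratio, end-to-end optimized image compression, surgical robots, real-time differentiable rendering of real 3D scenes, 3D reconstruction and visualization

Key points: quantitatively assessing image compression quality; super-resolution from low-resolution multispectral and high-resolution images; analyzing image quality with image indices, the multi-scale structural similarity index, the spectral angle mapper, visual information fidelity, and other metrics; peak signal-to-noise ratio and structural similarity index measurement; structural similarity for image classification; approximate similarity algorithms for PNG and JPEG images; image compression, video compression, end-to-end optimized image compression, neural image compression, GPU-accelerated image compression; depth estimation for surgical robots, 3D reconstruction and visualization; inference with image super-resolution models; real-time differentiable rendering of real 3D scenes; MATLAB structure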
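PSNR and SSIM are the two metrics this map keeps returning to. The sketch below computes both for flat lists of 8-bit pixel values in plain Python. Note the simplification: this SSIM evaluates the formula once over the whole image, whereas standard SSIM averages the same formula over local (typically Gaussian-weighted) windows, so its numbers will differ from library implementations. The sample pixel values are made up.

```python
import math

def psnr(x, y, max_val=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    mse = sum((a - b) ** 2 for a, b in zip(x, y)) / len(x)
    if mse == 0:
        return math.inf                      # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)

def global_ssim(x, y, max_val=255.0):
    """SSIM formula evaluated once over the whole image (no sliding window)."""
    n = len(x)
    c1 = (0.01 * max_val) ** 2               # stabilizing constants (K1 = 0.01, K2 = 0.03)
    c2 = (0.03 * max_val) ** 2
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))

ref = [52, 55, 61, 59, 79, 61, 76, 61]
noisy = [54, 55, 60, 59, 80, 62, 75, 61]
print(round(psnr(ref, noisy), 2), round(global_ssim(ref, noisy), 4))
```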

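- [Deep Learning] An OOM problem during training that was really hard to track down

weixin_40293999

Deep learning, deep learning, artificial intelligence

Symptom: Has anyone run into this problem on Ubuntu? During training, SSH stops working; the machine still answers ping and hasn't frozen, but on the Ubuntu PC itself the monitor, mouse, and everything else stop responding. The only option is to reboot. Cause: an OOM of 95 GB, damn!!!! PyTorch blew through memory, then journald hung, and after the watchdog killed journald, the system crashed. With a memory blowup of this size, the machine generally stalls for a long time even if the process is OOM-killed; that really does happen. Can you configure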

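- The difference between nn.Conv1d and nn.Linear in PyTorch

迪三

#NN_Layer, neural networks

That is, the difference between a 1-D convolutional layer and a fully connected layer. nn.Conv1d and nn.Linear are both PyTorch layers, but they serve different purposes; the main difference lies in how they process input data and what kind of operation they perform. nn.Conv1d captures local patterns in sequence data by applying a sliding filter, which suits data with temporal or sequential structure. nn.Linear connects every input to every output to capture global relationships, which suits tasks that treat the input as a whole. 1. Dimensions and input: nn.Conv1d (1-D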
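The contrast drawn here, a small kernel sliding along the sequence versus every input wired to every output, shows up clearly in a plain-Python sketch of the two operations for a single channel (illustrative only; PyTorch's layers add batch and channel dimensions). Like PyTorch's convolution layers, conv1d below computes a cross-correlation, i.e. the kernel is not flipped. The parameter-count helpers use the standard formulas: out_channels * in_channels * kernel_size (+ out_channels biases) for Conv1d and out_features * in_features (+ out_features) for Linear.

```python
def conv1d(x, w, b=0.0):
    """One input/output channel, stride 1, no padding: the same kernel w slides along x."""
    k = len(w)
    return [sum(x[i + j] * w[j] for j in range(k)) + b for i in range(len(x) - k + 1)]

def linear(x, W, b):
    """Fully connected: each output is a weighted sum over *all* inputs."""
    return [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(W, b)]

def conv1d_params(in_channels, out_channels, kernel_size, bias=True):
    return out_channels * in_channels * kernel_size + (out_channels if bias else 0)

def linear_params(in_features, out_features, bias=True):
    return out_features * in_features + (out_features if bias else 0)

print(conv1d([1, 2, 3, 4], [1, -1]))                     # local differences: [-1.0, -1.0, -1.0]
print(linear([1, 2], [[1, 0], [1, 1]], [0, 1]))          # [1, 4]
print(conv1d_params(16, 32, 3), linear_params(16, 32))   # 1568 544
```

Note that the Conv1d parameter count does not depend on the sequence length, while a Linear layer applied to a flattened sequence grows with it.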

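- Upsampling, downsampling, and channel fusion in images (up-sample, down-sample, channel fusion)

迪三

#ImageProcessing_PyTorch, computer vision, deep learning, artificial intelligence

Preface: Taking conv2d (i.e., images) as an example, input data in PyTorch is a tensor with the layout [N, C, H, W]. The first dimension, N, is the number of images, e.g. a batch containing N images. The second dimension, C, is the number of channels: if the model input is a color image, usually a three-channel RGB image, then C = 3; if it is black-and-white, i.e. grayscale, there is only one channel, so C = 1. The third dimension, H, is the image height: H equals the number of pixel rows. The fourth dimension, W, is the image width: W equals the number of pixel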
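The three operations in this title can be sketched in plain Python on nested lists laid out as [C][H][W] (one image, batch dimension omitted). This is an illustration with hypothetical helper names, not PyTorch code: the output-size formula is the standard one for a strided convolution with dilation 1 (stride 2 downsamples), nearest-neighbor upsampling repeats each pixel, and a 1x1 convolution fuses channels by taking a weighted sum across C at every pixel.

```python
def conv_out_size(size, kernel_size, stride=1, padding=0):
    """Output height/width of a convolution with dilation 1; stride 2 roughly halves it."""
    return (size + 2 * padding - kernel_size) // stride + 1

def upsample_nearest(channel, factor=2):
    """Nearest-neighbor upsampling of one H x W channel by an integer factor."""
    return [[v for v in row for _dx in range(factor)]
            for row in channel for _dy in range(factor)]

def fuse_channels_1x1(image, weights):
    """1x1 convolution: out[o][h][w] = sum over c of weights[o][c] * image[c][h][w]."""
    n_c, n_h, n_w = len(image), len(image[0]), len(image[0][0])
    return [[[sum(weights[o][c] * image[c][h][w] for c in range(n_c)) for w in range(n_w)]
             for h in range(n_h)] for o in range(len(weights))]

print(conv_out_size(224, kernel_size=3, stride=2, padding=1))   # 112
print(upsample_nearest([[1, 2], [3, 4]]))
rgb = [[[1, 2]], [[3, 4]], [[5, 6]]]           # C=3, H=1, W=2
print(fuse_channels_1x1(rgb, [[1, 1, 1]]))    # one output channel: [[[9, 12]]]
```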

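- Deep learning: how to view the parameters in a .pth file

奥利给少年

Deep learning, artificial intelligence

A .pth file is a PyTorch model weight file; it usually contains the parameters of a trained model. To inspect or use such a file, follow these steps: 1. Make sure you have the model definition. You need the code of the model that was used when the .pth file was created, which means you need the model's class definition and architecture. 2. Load the model weights with PyTorch's load_state_dict method. Here is how:

```python
import torch
import torch.nn as nn
# Define the model structure
```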

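- Five minutes a day to master deep learning with PyTorch: the model-parameter optimizer torch.optim

幻风_huanfeng

Deep learning framework PyTorch, deep learning, PyTorch, artificial intelligence, neural networks, machine learning, optimization algorithms

Key points of this article: In machine learning and deep learning, we minimize (or maximize) the loss function by modifying parameters, and an optimization algorithm is a strategy for updating the model parameters. PyTorch provides the optimizer package optim: we call one of its prepackaged optimization algorithms, pass it the neural network's parameters, and it optimizes the model. This article covers step 6 of the learning roadmap (optimizers); see the reference link for the PyTorch learning roadmap. Stochastic gradient descent: in deep learning and machine learning, gradient descent is the most commonly used parameter update method; its formula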
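The article's formula is cut off here. For reference, the standard form of the gradient descent update is shown below; this is the textbook rule, not a quotation from the article. θ denotes the parameters, η the learning rate, and L the loss, which in stochastic or mini-batch gradient descent is evaluated on a sampled batch. torch.optim.SGD applies this update in its step() method (with momentum and weight decay left at zero) once loss.backward() has filled in the gradients.

$$
\theta_{t+1} = \theta_t - \eta \, \nabla_{\theta} L(\theta_t)
$$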

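- [Deep Learning][OnnxRuntime][Python] A detailed tutorial on model conversion, environment setup, and model deployment

牙牙要健康

Deep learning, ONNX, onnxruntime, deep learning, Python, artificial intelligence

Note: the blogger drew on posts by many experts and verified that the steps work; these notes are shared to invite everyone to learn and discuss together. Table of contents: Preface; Model conversion: PyTorch to ONNX; Setting up the dependency environment on Windows; Calling an ONNX model with onnxruntime; ONNX Runtime inference core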

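- The world has long suffered under NVIDIA! PyTorch officially accelerates inference without CUDA: is the Triton era coming?

诗者才子酒中仙

IoT/Internet/AI/Other, PyTorch, artificial intelligence, Python

When training, fine-tuning, and running inference on large language models (LLMs), using NVIDIA GPUs and CUDA is the common practice. Machine learning programming and computing more broadly also relies heavily on CUDA, and models accelerated with it can achieve substantial performance gains. CUDA dominates accelerated computing and has become one of NVIDIA's most important moats, but other efforts are emerging to challenge it, such as OpenAI's Triton, which has certain advantages in usability, memory overhead, and building AI compiler stacks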

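- Installing PyTorch (Windows)

m0_62244898

Windows, artificial intelligence

(1) Download PyCharm (PyCharm: the Python IDE for Professional Developers by JetBrains). (2) Download Anaconda (Anaconda | The World's Most Popular Data Science Platform). (3) Create a new environment named torch: `conda create -n torch -y` (4) Enter the new environment: `conda activate torch` (5) Add the Tsinghua mirror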

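- Deep learning for beginners: handwritten digit recognition with PyTorch

AI_Guru人工智能

Deep learning, PyTorch, artificial intelligence

Deep learning, a branch of machine learning, has achieved remarkable results in recent years in fields such as image recognition and natural language processing. Among the many deep learning frameworks, PyTorch is widely liked for its dynamic computation graphs, ease of use, and flexibility. This article walks you through implementing a basic handwritten digit recognition model with PyTorch. Introduction to handwritten digit recognition: handwritten digit recognition is a classic problem in computer vision; the goal is for a computer to recognize and understand images of handwritten digits. This problem is often used as an introductory deep learning exercise because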

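- [ShuQiHere] A TensorFlow and PyTorch GPU configuration tutorial even beginners can follow

ShuQiHere

TensorFlow, PyTorch, artificial intelligence

[ShuQiHere] In deep learning, using a GPU is crucial for speeding up model training. However, many beginners find it confusing to specify GPU training in TensorFlow and PyTorch. In this article, I explain in detail how to specify GPU training in these two mainstream deep learning frameworks, keeping every step simple and easy to understand; follow along and you'll be up and running in no time! 1. Install the required libraries. First, make sure you have installed TensorFlow or PyTorch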

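- How to fix ModuleNotFoundError: No module named 'torch'

梅菊林

Solutions to various problems, programming languages

The `ModuleNotFoundError: No module named 'torch'` error is the exception Python raises when it tries to import a module named torch and cannot find it. torch is the core library of the PyTorch deep learning framework; if it is not installed in your Python environment, you will get this error when you try to import it. Table of contents: The error; Cause; Solution. The error: when you try to run the following command in a Python script or interactive session: `import torch` If Py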
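A frequent cause of this error is that the interpreter running the script is not the one torch was installed into (for example, a different conda environment). The standard-library sketch below reports which interpreter is running and whether it can locate a given module without importing it; importlib.util.find_spec returns None when a top-level module cannot be found.

```python
import importlib.util
import sys

def module_available(name):
    """True if `name` can be found by *this* interpreter (checked without importing it)."""
    return importlib.util.find_spec(name) is not None

if __name__ == "__main__":
    print("Interpreter:", sys.executable)
    for name in ("torch", "json"):
        print(f"{name}: {'found' if module_available(name) else 'NOT found'}")
```

If torch shows as NOT found, install it with the interpreter printed on the first line (for example via that interpreter's `python -m pip`), or switch your IDE to the environment where it is installed.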

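- Uses of item() and items() in Python

~|Bernard|

Deep learning FAQ summary, Python, PyTorch, deep learning

item(). Difference 1: during PyTorch training, .item() is commonly used, e.g. loss.item(). A quick test shows the difference:

```python
import torch

x = torch.randn(2, 2)
print(x)
print(x[1, 1])
print(x[1, 1].item())
```

Output:

```
tensor([[-2.0743,  0.1675],
        [ 0.7016, -0.6779]])
tensor(-0.6779)
```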
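The excerpt stops before the items() half of the title. items() is a dict method (unrelated to tensors) that returns a view of (key, value) pairs, which is handy for logging several metrics at once. The metric names and values below are made up for illustration; in a training loop they would typically come from calls like loss.item().

```python
# Hypothetical per-epoch metrics; in practice the values might come from loss.item().
metrics = {"train_loss": 0.52, "val_loss": 0.61, "val_acc": 0.83}

for name, value in metrics.items():   # items() yields (key, value) pairs in insertion order
    print(f"{name}: {value:.2f}")

pairs = list(metrics.items())
```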

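- Installing the GPU version of PyTorch

普通攻击往后拉

Python tips, neural network basic models, key points

Because I reinstall my system often, my computer's environment has to be reconfigured frequently, and CUDA-enabled torch in particular is fairly hard to install, so here is a record of how I install the GPU version of torch. 1) Install the CUDA Toolkit. It can be seen as the foundation of all CUDA computing on NVIDIA cards. It is usually installed automatically with driver updates, but incompletely, so you still need to install the toolkit. There is no need to check the existing version first, since the download will overwrite the existing CUDA anyway. There are two download sites: the domestic (China) site only offers the latest toolkit, but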

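- Easy upgrade: installing and configuring Ollama + OpenWebUI [AIStarter]

ai_xiaogui

AI art, AI software, artificial intelligence, AI writing, AIStarter

Ollama is an open-source project for running large language models locally, and OpenWebUI provides a convenient front-end interface for managing and monitoring these models. This article guides you through updating both tools and completing the configuration. Preparation: make sure Git and a Python environment are installed on your system, and install the necessary dependencies, such as TensorFlow or PyTorch. Update steps: Clone the projects: use the Git command-line tool to clone the latest Ollama and OpenWebUI repositories locally. Update the code: make sure you are us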

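- conda environment management

Johnson0722

Python, Python, conda, environment management

Anaconda uses the Conda package management system, which makes it convenient to configure and manage environments containing toolkits of different versions and functions. Here are the basic commands for managing environments with conda. Create an environment: create a Python environment named test with Python 3.7.3, and install pytorch in it: `conda create --name test python=3.7.3 pytorch` List all environments on the system: the different environments installed by the user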

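- R-Drop: a PyTorch implementation

warpin

Deep learning, deep learning, PyTorch

A PyTorch implementation of R-Drop that can be used to train classification models.

```python
# -*- coding: utf-8 -*-
"""
Description: An implementation of R-Drop (https://arxiv.org/pdf/2106.14448.pdf).
Authors: lihp
CreateDate: 2021/8/24
"""
from torch import nn
from torch.nn import functional
```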
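As a rough guide to what such an implementation computes: R-Drop runs the same input through the model twice with dropout active, producing two predictive distributions P1 and P2, and adds a symmetric KL term to the usual negative log-likelihood, i.e. NLL(P1) + NLL(P2) + alpha * (KL(P1||P2) + KL(P2||P1)) / 2. The plain-Python sketch below evaluates that loss for one example from two sets of logits. The function names, the logits, and the alpha value are illustrative assumptions, and the weighting or averaging in the linked implementation may differ.

```python
import math

def softmax(logits):
    m = max(logits)                               # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def kl(p, q):
    """KL(p || q) for two discrete distributions with no zero entries."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

def r_drop_loss(logits1, logits2, label, alpha=1.0):
    p1, p2 = softmax(logits1), softmax(logits2)
    nll = -math.log(p1[label]) - math.log(p2[label])   # cross-entropy for both passes
    sym_kl = 0.5 * (kl(p1, p2) + kl(p2, p1))           # consistency between the two passes
    return nll + alpha * sym_kl

# Under dropout, two forward passes of the same input give slightly different logits.
print(r_drop_loss([2.0, 0.5, -1.0], [1.8, 0.7, -0.9], label=0, alpha=4.0))
```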

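- Transformer model: implementing word embeddings

Galaxy.404

Transformer, transformer, deep learning, artificial intelligence, embedding

Preface: I have recently been studying the Transformer. After learning the theory, I moved on to the code; these are notes taken while following a video by a Bilibili uploader. Video link: 19. Transformer model Encoder principles explained in depth, with a line-by-line PyTorch implementation_Bilibili. Main text: first, import the required packages:

```python
import torch
import numpy as np
import torch.nn as nn
import torch.nn.functional as F
```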
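Word embedding itself is a table lookup: each token index selects a row of a (vocab_size x d_model) weight matrix, which is what nn.Embedding does. The plain-Python sketch below pads variable-length index sequences with 0 to a common length and then looks up each index. The vocabulary size, model dimension, and the use of 0 as the padding index are illustrative assumptions, and the random table stands in for learned weights.

```python
import random

def pad_sequences(seqs, pad_id=0):
    """Right-pad every index sequence with pad_id to the length of the longest one."""
    max_len = max(len(s) for s in seqs)
    return [s + [pad_id] * (max_len - len(s)) for s in seqs]

def embed(batch, table):
    """Replace each token index with its row from the embedding table."""
    return [[table[idx] for idx in seq] for seq in batch]

vocab_size, d_model = 8, 4
rng = random.Random(0)                      # fixed seed so the stand-in weights are repeatable
table = [[rng.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(vocab_size)]

src = pad_sequences([[2, 4], [3, 5, 6, 1]])  # -> [[2, 4, 0, 0], [3, 5, 6, 1]]
out = embed(src, table)                      # shape: batch 2 x seq_len 4 x d_model 4
print(len(out), len(out[0]), len(out[0][0]))
```

In PyTorch, passing padding_idx=0 to nn.Embedding additionally keeps that row out of gradient updates.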

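- How do you use PyTorch Metric Learning?

鱼儿也有烦恼

PyTorch, pytorch

Table of contents: How to use Pytorch-Metric-Learning? 1. What the 9 modules of the Pytorch-Metric-Learning library do: 1.1 Sampler module; 1.2 Miner module; 1.3 Loss module; 1.4 Reducer module; 1.5 Distance module; 1.6 Regularizer module; 1.7 Trainer module; 1.8 Tester module; 1.9 Utils module. 2. How to use the PyTorch Metric Learning library's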
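Of the modules listed, the Loss module is the easiest to illustrate. The sketch below is a plain-Python triplet margin loss, one classic metric-learning loss (the library includes a triplet-margin loss among its losses, but this is neither its code nor its API): an anchor should be closer to a positive example than to a negative one by at least a margin. Euclidean distance and margin=0.2 are illustrative choices.

```python
import math

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def triplet_margin_loss(anchor, positive, negative, margin=0.2):
    """max(0, d(a, p) - d(a, n) + margin): zero once the negative is far enough away."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)

print(triplet_margin_loss([0, 0], [0, 1], [3, 4]))    # 0.0: negative already far away
print(triplet_margin_loss([0, 0], [0, 1], [0, 1.1]))  # about 0.1: negative too close
```

Miners, in the library's terms, decide which (anchor, positive, negative) combinations from a batch are worth feeding into a loss like this one.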

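- Five minutes a day to master the deep learning framework PyTorch: getting a neural network model's parameters

幻风_huanfeng

Deep learning framework PyTorch, deep learning, PyTorch, neural networks, artificial intelligence, model parameters, Python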

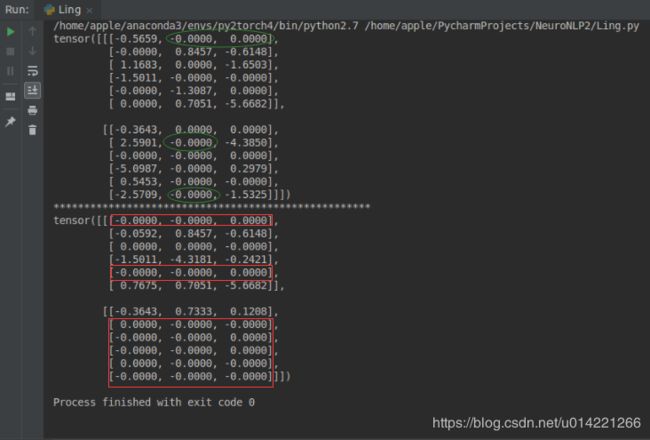

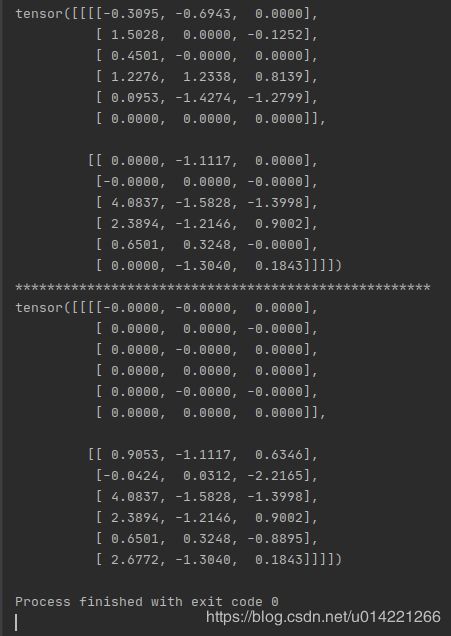

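Key points of this article: a neural network, once defined, consists of multiple layers, each with its own parameters. How do we get at these parameters? Below we introduce several ways to obtain a neural network model's parameters; this article is preparation for step 6 of the learning roadmap (optimizers). Getting all parameters (Parameters):

```python
from torch import nn

net = nn.Sequential(nn.Linear(4, 2), nn.Linear(2, 2))
print(list(net.parameters()))
```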
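For the two-layer network in this example, the parameter shapes can be worked out by hand: nn.Linear(in_features, out_features) stores a weight of shape (out_features, in_features) and a bias of shape (out_features,), and nn.Sequential names its children 0, 1, ..., so named_parameters() reports names like 0.weight. The plain-Python helper below reproduces that bookkeeping for a stack of Linear layers; it is a sketch for checking expectations, not a replacement for calling the PyTorch methods.

```python
def linear_stack_params(sizes):
    """(name, shape) pairs for nn.Sequential(nn.Linear(sizes[0], sizes[1]), nn.Linear(sizes[1], sizes[2]), ...)."""
    params = []
    for i, (n_in, n_out) in enumerate(zip(sizes, sizes[1:])):
        params.append((f"{i}.weight", (n_out, n_in)))   # weight is (out_features, in_features)
        params.append((f"{i}.bias", (n_out,)))
    return params

def numel(shape):
    n = 1
    for d in shape:
        n *= d
    return n

params = linear_stack_params([4, 2, 2])   # nn.Linear(4, 2) followed by nn.Linear(2, 2)
for name, shape in params:
    print(name, shape)
print("total:", sum(numel(s) for _, s in params))   # 8 + 2 + 4 + 2 = 16
```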

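- How do you convert a one-dimensional list into a 3-D tensor of shape (batch, sentence length, features)? python pytorch lstm programming AI

zhangfeng1133

Python, PyTorch, artificial intelligence, data mining

1. Introduction: if you want to convert a one-dimensional array into a format suitable as input to a deep learning model (such as an LSTM), you need to expand it into a three-dimensional tensor. This usually involves the dimensions batch size, sequence length, and number of features. Here are the steps for converting a one-dimensional array into this format:

### 1. Determine the dimensions

- **Batch size**: the number of samples you process at once.
- **Sequence length (Seq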
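The reshaping step can be sketched in plain Python: a flat list of batch * seq_len * features values is split row-major into nested lists of shape (batch, seq_len, features), with a check that the sizes match. The sizes used here are assumptions for illustration. With PyTorch, the equivalent is torch.tensor(data).reshape(batch, seq_len, features), and nn.LSTM expects this (batch, seq, feature) layout only when it is constructed with batch_first=True.

```python
def to_3d(data, batch, seq_len, features):
    """Row-major reshape of a flat list into [batch][seq_len][features] nested lists."""
    if len(data) != batch * seq_len * features:
        raise ValueError(f"{len(data)} values cannot fill shape ({batch}, {seq_len}, {features})")
    step = seq_len * features                      # number of values per sample
    return [
        [data[b * step + t * features: b * step + (t + 1) * features] for t in range(seq_len)]
        for b in range(batch)
    ]

x = to_3d(list(range(12)), batch=2, seq_len=3, features=2)
print(x)  # [[[0, 1], [2, 3], [4, 5]], [[6, 7], [8, 9], [10, 11]]]
```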

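- Five minutes a day to master the deep learning framework PyTorch: connecting nn's neural network layers

幻风_huanfeng

Deep learning framework PyTorch, deep learning, PyTorch, neural networks, artificial intelligence, machine learning, Python

Key points of this article: earlier we studied PyTorch's prepackaged neural network layers, such as fully connected layers, activation layers, and convolutional layers, which we can use directly. As the code shows, we used two nn.Linear() layers directly; the two linear layers are not combined, so in forward they are called one after the other. In practice we often combine several layers, which is more convenient and makes the code clearer. Here we introduce Sequential() and ModuleList(), which can combine multiple neural net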

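- Project training, part 14

qq_51946537

Project training, Python

Wrapping a PyTorch model as an interface. Since building, training, and evaluating the model have already been completed, the next step, per the project requirements, is to wrap the model as an interface that the backend can call directly. What I need is for the backend to simply run the system command `python prase.py -img <image>` and get the parsing result directly. The model accuracy measured earlier was all on batch-preprocessed images, whereas now the frontend only sends the image to be parsed (or its path), and the image is unprocessed, so feeding it in directly obviously won't give good results, and performance will also be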
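A minimal sketch of such a command-line entry point using the standard argparse module. The script name prase.py and the -img flag come from the text above; preprocess() and predict() are hypothetical placeholders for the article's own preprocessing and model code. The key idea is that a single image must go through the same preprocessing as the images used during evaluation, and that the result is printed in a form the backend can parse (here, one JSON line).

```python
import argparse
import json

def preprocess(path):
    # HYPOTHETICAL placeholder: apply the same resizing/normalization that the
    # batch-evaluated images went through. Here it only records the path.
    return {"path": path}

def predict(sample):
    # HYPOTHETICAL placeholder for running the trained PyTorch model (ideally loaded
    # once at startup rather than per request). Returns a dummy result here.
    return {"input": sample["path"], "result": None}

def build_parser():
    parser = argparse.ArgumentParser(description="Parse a single image.")
    parser.add_argument("-img", required=True, help="path of the image to parse")
    return parser

def main(argv=None):
    args = build_parser().parse_args(argv)   # argv=None means "use sys.argv"
    output = predict(preprocess(args.img))
    print(json.dumps(output))                # one JSON line is easy for the backend to parse
    return output
```

Calling main() from an `if __name__ == "__main__":` block lets the backend run the command described above. If requests are frequent, a long-running service that keeps the model in memory avoids reloading it on every call.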

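- Matrix multiplication in PyTorch

weixin_45694975

PyTorch, deep learning, neural networks

1. torch.bmm: input1 shape: (batch_size, seq1_len, emb_dim); input2 shape: (batch_size, emb_dim, seq2_len); output shape: (batch_size, seq1_len, seq2_len). Note: torch.bmm only works for matrix products of 3-D tensors, and it does not broadcast; for broadcasting batched matrix products, use torch.matmul. input1 shape: (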
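The shape rule above, (b, n, m) x (b, m, p) -> (b, n, p), amounts to one ordinary matrix product per batch element. The plain-Python sketch below spells that out with explicit shape checks (hypothetical helpers, not torch.bmm itself); like torch.bmm, it requires both inputs to have the same batch size and does not broadcast.

```python
def matmul(A, B):
    """Ordinary (n x m) @ (m x p) product of nested lists."""
    if len(A[0]) != len(B):
        raise ValueError("inner dimensions differ")
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def bmm(batch1, batch2):
    """(b, n, m) x (b, m, p) -> (b, n, p): one matmul per batch element."""
    if len(batch1) != len(batch2):
        raise ValueError("batch sizes differ")
    return [matmul(A, B) for A, B in zip(batch1, batch2)]

print(bmm([[[1, 2], [3, 4]]], [[[5, 6], [7, 8]]]))   # [[[19, 22], [43, 50]]]
```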

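- Summary of matrix multiplication in PyTorch

chenxi yan

PyTorch learning, PyTorch, matrices, deep learning

1. Element-wise (`*`): multiplies element by element, supports broadcasting, and is equivalent to torch.mul().

```python
import torch

a = torch.tensor([[1, 2], [3, 4]])
b = torch.tensor([[2, 3], [4, 5]])
c = a * b  # equivalent to torch.mul(a, b)
# tensor([[ 2,  6],
#         [12, 20]])
a * torch.tensor([1, 2])  # broadcasting, equivalent to torch.mul(a, torch.tensor
```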
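To see the element-wise product and the matrix product side by side on the same 2 x 2 values, here is a plain-Python sketch (no torch required). The broadcast case mirrors `a * torch.tensor([1, 2])` above: the length-2 row is reused for every row of a. The matrix product is included for contrast and corresponds to what torch.matmul(a, b), or a @ b, computes.

```python
a = [[1, 2], [3, 4]]
b = [[2, 3], [4, 5]]

# Element-wise (like a * b / torch.mul): multiply matching positions.
elementwise = [[x * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

# Broadcasting a row vector (like a * torch.tensor([1, 2])): reuse it for each row.
row = [1, 2]
broadcast = [[x * y for x, y in zip(ra, row)] for ra in a]

# Matrix product (like a @ b / torch.matmul): rows of a dotted with columns of b.
matprod = [[sum(ra[k] * b[k][j] for k in range(2)) for j in range(2)] for ra in a]

print(elementwise)  # [[2, 6], [12, 20]]
print(broadcast)    # [[1, 4], [3, 8]]
print(matprod)      # [[10, 13], [22, 29]]
```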

- Recommended open-source project: PyTorch-Metric-Learning

潘惟妍

pytorch-metric-learning: The easiest way to use deep metric learning in your application. Modular, flexible, and extensible. Written in PyTorch. Project address: https://gitcode.com/gh_mirrors/py/pytorc

- PyTorch 2.4 reports that fbgemm.dll cannot be found

bziyue

Python, PyTorch

#python/pytorch/problem log

```
>>> import torch
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "C:\Users\95416\AppData\Local\Programs\Python\Python312\Lib\site-packages\torch\__init__.py", line 148, in <module>
    raise err
OSE
```

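- An introduction to the jQuery keyboard events keydown, keypress, and keyup

107x

js, jquery, keydown, keypress, keyup

This article summarizes jQuery's keyboard events keydown, keypress, and keyup, for anyone who wants to learn about them.

1. First, you need to know: 1) keydown(): the keydown event fires when a key is pressed down. 2) keyup(): the keyup event fires when a key is released. The code is as follows:

```javascript
$('input').keyup(function(){
```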

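- Promises in AngularJS

bijian1013

JavaScript, AngularJS, Promise

1. Promise

A Promise is an interface for handling objects that, at some point in the future (mainly with asynchronous calls), will be returned from the server or have their properties filled in. At its core, a promise is an object with a then() function.

To show its advantages, let's look at an example that needs to fetch the user's current profile: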

var cu

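- C++: implementing a stack class with an array

CrazyMizzz

Data structures, C++

```cpp
#include <iostream>
#include <cassert>
using namespace std;

template <class T, int SIZE = 50>
class Stack {
private:
    T list[SIZE];  // array that stores the stack's elements
    int top;       // index of the top of the stack
public:
    Stack(
```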
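The C++ class above is cut off at its constructor. The same idea, a fixed-capacity array plus a top index that is -1 for an empty stack, looks like this as a short Python sketch. The -1 convention, the method names, and raising exceptions on overflow/underflow are assumptions, since the original constructor and methods are not shown.

```python
class ArrayStack:
    """Fixed-size stack backed by a preallocated list, mirroring `T list[SIZE]; int top;`."""

    def __init__(self, size=50):
        self._items = [None] * size   # storage for the elements
        self._top = -1                # index of the top element; -1 means empty

    def push(self, item):
        if self._top == len(self._items) - 1:
            raise OverflowError("stack is full")
        self._top += 1                # move the top index first, then store
        self._items[self._top] = item

    def pop(self):
        item = self.peek()
        self._top -= 1
        return item

    def peek(self):
        if self.is_empty():
            raise IndexError("stack is empty")
        return self._items[self._top]

    def is_empty(self):
        return self._top == -1

s = ArrayStack(size=3)
for x in (1, 2, 3):
    s.push(x)
print(s.pop(), s.peek())   # 3 2
```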

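- Similarities between Java and C

麦田的设计者

java, recursion, Scanner

Initialization code at software startup: loading user information. May 27, 2015

Learning Java from scratch, part 2

1. The three basic control structures of a language are sequence, selection, and iteration. Without further ado, a few points are worth noting:

a. Besides returning a value from the function, a return statement also ends the function: statements after return are not executed.

b. The for loop, compared with the whi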

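- Three implementation models for concurrent servers on Linux

被触发

linux

There are many server design techniques. By protocol, there are TCP servers and UDP servers; by processing method, there are iterative servers and concurrent servers.

1 Iterative and concurrent server models

In network programs, there are generally many clients per server, which places special demands on the server program that handles their requests.

The most commonly used server models today are:

· Iterative server: the server can respond to only one client request at a time

· Concurrent server: the serv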
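Python's standard socketserver module happens to offer both models from this list, which makes for a compact comparison: socketserver.TCPServer handles one connection at a time (an iterative server), while socketserver.ThreadingTCPServer handles each connection in its own thread (a concurrent server). The echo handler and the helper functions below are illustrative; binding to port 0 asks the OS for any free port.

```python
import socket
import socketserver
import threading

class EchoHandler(socketserver.StreamRequestHandler):
    def handle(self):
        # Read one line from the client and send it straight back.
        self.wfile.write(self.rfile.readline())

def start_server(concurrent=True):
    # TCPServer: iterative, one connection handled at a time.
    # ThreadingTCPServer: concurrent, each connection handled in its own thread.
    server_cls = socketserver.ThreadingTCPServer if concurrent else socketserver.TCPServer
    server = server_cls(("127.0.0.1", 0), EchoHandler)   # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server

def echo(port, message):
    with socket.create_connection(("127.0.0.1", port), timeout=5) as sock:
        sock.sendall(message + b"\n")
        with sock.makefile("rb") as reader:
            return reader.readline()
```

With the iterative server, a second client simply waits until the first connection's handler returns; with the threaded server, both are served at once.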

- Oracle database query commands

肆无忌惮_

Oracle, database

20140920

Single-table queries

```sql
-- Queries ************************************************************************************************************
-- Log in as the scott user
-- Describe the emp table
desc emp
```

- Ext: a floating window in the bottom-right corner

知了ing

JavaScript, ext

Method 1

```html
<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">
<html xmlns="http://www.w3.org/1999/
```

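- A brief discussion of key-value design in the Redis database

矮蛋蛋

redis

http://www.cnblogs.com/aidandan/

Original article: http://www.hoterran.info/redis_kv_design

Redis's rich data structures make designing for it very interesting. Unlike relational databases, where developers and DBAs need to communicate closely and review every SQL statement, and unlike memcached, which needs no DBA involvement, Redis calls for DBAs who are familiar with its data structures and understand the use cases.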

- Building an executable jar with Maven

alleni123

maven

http://stackoverflow.com/questions/574594/how-can-i-create-an-executable-jar-with-dependencies-using-maven

```xml
<build>
  <plugins>
    <plugin>
      <artifactId>maven-asse
```

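- The role of human resources in modern enterprises

百合不是茶

HR, enterprise management

// The role of human resources in enterprises. Why does HR exist, and what exactly does it do? Human resource management is a major innovation in management models. HR arose for the following reasons: international competition in the industrial era, risk control in modern markets, and so on. So HR is clearly an advantage in modern economic competition, and it offers obvious advantages in conglomerates (e.g. Hon Hai Group). I once personally went through Hon Hai Group's recruitment; at the time I only knew that HR handled a company's hiring. I was hired, and the people who trained us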

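- Linux startup configuration explained

bijian1013

linux

Linux has its own complete startup system; once you grasp the overall flow, the Linux boot process is no longer a mystery.

Before reading, it is recommended that you look at the attached figure first.

This article assumes the init tree configured in inittab is:

```
/etc/rc.d/rc0.d
/etc/rc.d/rc1.d
/etc/rc.d/rc2.d
/etc/rc.d/rc3.d
/etc/rc.d/rc4.d
/etc/rc.d/rc5.d
/etc
```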

- Spring AOP: schema-based implementation

bijian1013

java, spring, AOP

This example uses Spring 2.5.

1. AOP configuration file spring-aop.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<beans
    xmlns="http://www.springframework.org/schema/beans"
    xmln
```

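- [Gson 7] Gson's predefined type adapters

bit1129

gson

Gson provides a rich set of predefined type adapters, which specify how objects and strings are converted into each other when serializing objects to JSON strings and deserializing them back, for example:

DateTypeAdapter

```java
public final class DateTypeAdapter extends TypeAdapter<Date> {
    public static final TypeAdapterFacto
```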
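Gson is Java-only, but the idea of a type adapter (a pair of functions that says how one type is written to and read back from JSON) can be shown by analogy with Python's json module. In this sketch, dates are written as ISO-8601 strings under a marker key; the "__date__" marker is an arbitrary convention chosen for the example, not anything Gson's DateTypeAdapter does.

```python
import json
from datetime import date

def encode_date(obj):
    # "Write" side: json.dumps calls this for objects it cannot serialize natively.
    if isinstance(obj, date):
        return {"__date__": obj.isoformat()}
    raise TypeError(f"{type(obj).__name__} is not JSON serializable")

def decode_date(d):
    # "Read" side: json.loads calls this for every decoded JSON object.
    if set(d) == {"__date__"}:
        return date.fromisoformat(d["__date__"])
    return d

text = json.dumps({"name": "report", "created": date(2015, 5, 27)}, default=encode_date)
print(text)   # {"name": "report", "created": {"__date__": "2015-05-27"}}
back = json.loads(text, object_hook=decode_date)
```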

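- [Spark 88] Accumulating state in Spark Streaming (updateStateByKey)

bit1129

update

In real-world real-time computing, besides the data within a single time interval, you sometimes also need statistics over the relevant data produced across all intervals of the entire computation.

For example, when monitoring 404 requests in Nginx's access.log in real time, besides counting the occurrences within a given interval, you may also need to count how many 404s occurred over a whole day; that is, the 404 monitoring spans multiple time intervals.

Spark Streaming's solution is an accumulator. It works like this: you define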
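The core of updateStateByKey is a user-supplied update function that takes the new values seen for a key in the current batch plus that key's previous state (absent the first time) and returns the new state. The plain-Python simulation below applies such a function across a few micro-batches of status codes; it only mimics the semantics for illustration and is not Spark code. In PySpark, a function with the same (new_values, last_state) shape is what you pass to DStream.updateStateByKey, which also requires a checkpoint directory to be configured.

```python
from collections import defaultdict

def update_count(new_values, last_count):
    """Spark-style update function: new values for a key + previous state -> new state."""
    return sum(new_values) + (last_count or 0)   # last_count is None the first time a key is seen

def run_batches(batches, update_fn):
    state = {}
    for batch in batches:                        # each batch = one streaming interval
        grouped = defaultdict(list)
        for status in batch:
            grouped[status].append(1)            # map each record to (status, 1)
        for key in set(state) | set(grouped):    # keys without new data are updated too
            state[key] = update_fn(grouped.get(key, []), state.get(key))
        print(dict(sorted(state.items())))       # running totals after this interval
    return state

final = run_batches([["404", "200", "404"], ["200"], ["404"]], update_count)
```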

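- Quickly finding which process is writing a file on Linux with a shell script

ronin47

A file is being written by some process, and I want to find that process. The file keeps growing, but I can't tell who is writing to it, and lsof didn't find it either.

This is a fairly common problem and there are probably many solutions; here I'll suggest a fairly intuitive one.

On Linux, every file is stored on some block device and has a corresponding inode, so by probing vfs.write we can find out who keeps writing to a particular inode on a particular device.

Fortunately, the systemtap package ships with inodewatch.stp, located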
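The systemtap approach identifies a file by its device number and inode. A small Python helper can look both up with os.stat, with os.major and os.minor splitting the device number (Unix only). The assumption that inodewatch.stp takes the major number, minor number, and inode as its three arguments, in that order, should be checked against the header of the copy shipped with your systemtap version.

```python
import os
import sys

def stap_target(path):
    """Return (major, minor, inode) identifying `path` on its block device."""
    st = os.stat(path)
    return os.major(st.st_dev), os.minor(st.st_dev), st.st_ino

if __name__ == "__main__" and len(sys.argv) > 1:
    major, minor, ino = stap_target(sys.argv[1])
    # ASSUMED argument order for inodewatch.stp: major, minor, inode.
    print(f"stap inodewatch.stp {major:#x} {minor:#x} {ino}")
```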

- Java: two ways to find the first longest repeated substring

bylijinnan

java, algorithms

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

public class MaxPrefix {
    public static void main(String[] args) {
        String str = "abbdabcdabcx";
```
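The Java listing breaks off before either method appears. As one possible approach (a sketch, not necessarily either of the author's two methods), the longest repeated substring can be found by sorting all suffixes: any substring that occurs twice is a common prefix of two suffixes that end up adjacent in sorted order. The sketch allows occurrences to overlap and, among equally long candidates, returns the one that starts earliest; both are interpretation choices. Sorting full suffix strings is quadratic in memory, which is fine for short inputs only.

```python
def lcp(s, i, j):
    """Length of the common prefix of the suffixes starting at i and j."""
    n = 0
    while i + n < len(s) and j + n < len(s) and s[i + n] == s[j + n]:
        n += 1
    return n

def longest_repeated_substring(s):
    order = sorted(range(len(s)), key=lambda i: s[i:])      # suffix start positions, sorted
    length = max((lcp(s, a, b) for a, b in zip(order, order[1:])), default=0)
    if length == 0:
        return ""
    for i in range(len(s) - length + 1):                     # earliest start of that length
        sub = s[i:i + length]
        if s.find(sub, i + 1) != -1:                         # occurs again (overlap allowed)
            return sub
    return ""

print(longest_repeated_substring("abbdabcdabcx"))   # dabc
```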

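- Netty source code study: ServerBootstrap startup and event handling

bylijinnan

java, netty

Netty uses the multithreaded version of the Reactor pattern; it's recommended that you first read the following article to understand the Reactor pattern:

http://bylijinnan.iteye.com/blog/1992325

Netty's startup and event-handling flow basically follows that article,

and every operation it mentions has corresponding code in Netty.

In particular, the Acceptor in the Reactor pattern corresponds to Netty's ServerBo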
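A compact way to see the Reactor pattern described here: one event loop waits for readiness events and dispatches each to a handler, and an Acceptor handler accepts new connections and registers read handlers for them. The sketch below uses Python's selectors module and is single-threaded; Netty's ServerBootstrap splits this work across thread groups (a boss group accepting connections, worker groups handling I/O), so treat this only as an illustration of the pattern, not of Netty's classes. The class and function names are illustrative.

```python
import selectors
import socket
import threading

class Reactor:
    """Single-threaded reactor: one selector waits for events and dispatches them."""

    def __init__(self):
        self.selector = selectors.DefaultSelector()
        self.running = True

    def register(self, sock, handler):
        # The handler is stored as the registration's data and called when sock is readable.
        self.selector.register(sock, selectors.EVENT_READ, handler)

    def unregister(self, sock):
        self.selector.unregister(sock)

    def loop(self):
        while self.running:
            for key, _events in self.selector.select(timeout=0.1):
                key.data(key.fileobj)   # dispatch the event to its handler

class Acceptor:
    """Accepts new connections and registers an echo handler for each of them."""

    def __init__(self, reactor, listener):
        self.reactor = reactor
        listener.setblocking(False)
        reactor.register(listener, self.on_accept)

    def on_accept(self, listener):
        conn, _addr = listener.accept()
        conn.setblocking(False)
        self.reactor.register(conn, self.on_read)

    def on_read(self, conn):
        data = conn.recv(1024)
        if data:
            conn.sendall(data)          # echo back; adequate for small messages in a sketch
        else:                           # empty read: the client closed the connection
            self.reactor.unregister(conn)
            conn.close()

def serve_in_background():
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))     # port 0: let the OS pick a free port
    listener.listen()
    reactor = Reactor()
    Acceptor(reactor, listener)
    thread = threading.Thread(target=reactor.loop, daemon=True)
    thread.start()
    return reactor, listener, thread
```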

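- The lifecycle of servlets, filters, and listeners

cngolon

filter, listener, servlet, lifecycle

1. Servlet: when a servlet resource is requested for the first time, the servlet container creates an instance of the servlet, calls its init(ServletConfig config) method to do some initialization, and then calls its service method to handle the request. When the servlet resource is requested a second time, the container no longer creates an instance but calls its service method directly to handle the request; in other words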

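- Getting input element values with jmpopups

ctrain

JavaScript

jmpopups: getting the form in the popup layer

First, I have a div containing a form, hidden by default. When jmpopups is used, this hidden div pops up; in fact, jmpopups generates a copy of our markup.

When I try to read a text box in this form directly with `$('#form input[name=test1]').val();`, nothing is returned.

We have to look for the value in the markup generated by jmpopups: $(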

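- vi find and replace commands explained

daizj

linux, regular expressions, replace, find, vim

1. Finding

Find commands

`/pattern<Enter>`: search forward (downward) for strings matching pattern

`?pattern<Enter>`: search backward (upward) for strings matching pattern

After a find command, use these two keys to repeat the search quickly:

`n`: continue searching in the same direction

`N`: search in the opposite direction

String matching

pattern is the string to match, for example:

1: `/abc<En`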

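- Bundling a website's JS and CSS files

dcj3sjt126com

PHP, bundling

1. Why bundle with Smarty

Apache also has modules that bundle and compress static files such as JS and CSS, but this article does not bundle that way; instead, it bundles a website's JS and CSS files in combination with Smarty.

Why bundle at all? The main purpose is to manage your own code sensibly. If you view the source of many websites today, you'll find a large number of JS and CSS files in the page head, and possibly a large number of J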

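- PHP Yii: fixing "undefined offset" or "undefined index" errors

dcj3sjt126com

undefined

While developing with Yii, I wrote the following in my code:

`if($this->menuoption[2] === 'test')`, and when the program ran it reported `undefined offset: 2`. This kind of error is mainly due to the error reporting level in php.ini being set too high; on Windows, the error level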

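- Linux file formats (1): the sed tool

eksliang

linux, linux sed tool, sed tool, linux sed explained

When reposting, please cite the source:

http://eksliang.iteye.com/blog/2106082

Introduction

sed is a stream editor that processes one line at a time. While processing, it stores the current line in a temporary buffer called the "pattern space", then applies the sed commands to the buffer's contents; when it is done, it sends the buffer's contents to the screen. It then moves on to the next line, repeating until the end of the file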
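The pattern-space cycle described above (read a line, apply the commands to it, print the result, move on) can be mimicked in a few lines of Python for the common substitution command s/regex/replacement/, which replaces only the first match per line unless the g flag is given. This is an illustration only: it uses Python's re syntax, which differs from sed's basic regular expressions, and the function name is hypothetical.

```python
import io
import re

def sed_substitute(stream, pattern, replacement, global_flag=False):
    """Mimic `sed 's/pattern/replacement/[g]'`: process the input one line at a time."""
    regex = re.compile(pattern)
    out = []
    for line in stream:                       # read the next line...
        pattern_space = line.rstrip("\n")     # ...into the "pattern space"
        pattern_space = regex.sub(replacement, pattern_space, count=0 if global_flag else 1)
        out.append(pattern_space)             # "print" the pattern space, then continue
    return out

print(sed_substitute(io.StringIO("foo bar foo\nbaz\n"), r"foo", "qux"))   # ['qux bar foo', 'baz']
```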

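- Getting system permissions for an Android app

gqdy365

android

Quote:

How to give an Android application system permissions

The first method is simpler, but it requires building with make inside the Android system source tree:

1. In the manifest node of the application's AndroidManifest.xml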

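- HoverTree development log: CAPTCHA

hvt

.net, C#, asp.net, hovertree, webform

HoverTree is an open-source ASP.NET CMS that currently includes an article system, an image gallery, and a message board. The code is fully open; article pages are generated as static HTM pages, the message board supports moderation, articles can be published as HTML source, and uploaded images automatically get high-quality thumbnails. Since its release it has received support from many users, for which I'm grateful! The message board keeps receiving many helpful messages, but also quite a lot of spam, so I decided to add a CAPTCHA to the message submission page. If you search online for ASP.NET CAPTCHAs, there are a huge number of them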

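- JSON API: the Chinese translation of the standard guide to building APIs with JSON

justjavac

json

Translation: https://github.com/justjavac/json-api-zh_CN

If you and your team have ever argued about how to format sensible JSON responses, JSON API is your anti-bikeshedding weapon.

By following shared conventions, you can work more productively, take advantage of more general tooling, and focus on what matters: your application.

Clients built on JSON API can also make full use of caching,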
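For a sense of what the convention looks like, here is a sketch that builds a minimal single-resource response in the JSON API format: a top-level "data" member holding a resource object with "type", "id", and "attributes". The article type and its title are made up for illustration; the specification defines many more members (relationships, links, included, errors, and so on), and responses are served with the media type application/vnd.api+json.

```python
import json

def resource_document(res_type, res_id, attributes):
    """Minimal JSON API top-level document containing one resource object."""
    return {
        "data": {
            "type": res_type,
            "id": str(res_id),        # the spec represents resource ids as strings
            "attributes": attributes,
        }
    }

doc = resource_document("articles", 1, {"title": "JSON API paints my bikeshed!"})
print(json.dumps(doc, indent=2))
```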

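- Data structure notes, part 2

lx.asymmetric

Data structures, notes

Chapter 3: Stacks and Queues

I. Short-answer questions

1. In a circular queue, the front pointer points to the position before the front element.

2. In a circular queue with n cells, the queue holds n-1 elements when it is full.

3. Pushing an element onto a stack first moves the top-of-stack pointer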
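Answers 1 and 2 follow from a common textbook convention, which the sketch below makes concrete: front indexes the slot just before the first element, rear indexes the last element, the queue is empty when front == rear, and it counts as full when advancing rear would make it equal to front. One slot therefore always stays unused, so n cells hold at most n - 1 elements. This is one convention among several; others keep a separate element count and use all n cells.

```python
class CircularQueue:
    def __init__(self, n):
        self.buf = [None] * n
        self.n = n
        self.front = 0   # slot *before* the first element
        self.rear = 0    # slot holding the last element

    def is_empty(self):
        return self.front == self.rear

    def is_full(self):
        return (self.rear + 1) % self.n == self.front   # one slot is sacrificed

    def enqueue(self, x):
        if self.is_full():
            raise OverflowError("queue is full")
        self.rear = (self.rear + 1) % self.n
        self.buf[self.rear] = x

    def dequeue(self):
        if self.is_empty():
            raise IndexError("queue is empty")
        self.front = (self.front + 1) % self.n   # the first element sits right after front
        return self.buf[self.front]

    def __len__(self):
        return (self.rear - self.front) % self.n

q = CircularQueue(4)
for x in "abc":
    q.enqueue(x)
print(len(q), q.is_full())   # 3 True: 4 cells, n - 1 = 3 elements
```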

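- dstat: a monitoring tool on Linux

网络接口

linux

1) About the tool: dstat is meant to replace commands such as vmstat, iostat, netstat, nfsstat, and ifstat; it is an all-in-one system statistics tool. Compared with sysstat, dstat has a colored interface, so the data stands out and is easy to read when you watch performance manually. dstat also supports live refresh: for example, `dstat 3` collects data every three seconds, but the latest data is still refreshed on screen every second. Like sysstat,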

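- C for beginners: two-dimensional arrays and pointers

1140566087

two-dimensional arrays, c/c++, pointers

/*

Defining two-dimensional arrays and referencing their elements

Definition of a two-dimensional array:

When each element of an array has two subscripts, the array is called a two-dimensional array

(logically, the array is viewed as a table with rows and columns, i.e. a matrix);

Syntax:

type_name array_name[constant_expression_1][constant_expression_2]

Referencing elements of a two-dimensional array:

An element of a two-dimensional array must be referenced with two subscripts, in the following form:

For example:

int a[3][4]; reference: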
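C stores `int a[3][4]` in row-major order: the 12 ints are contiguous, row after row, so element a[i][j] sits i * 4 + j elements from the start, and its byte offset is that index times sizeof(int). This is also why, in C, a[i][j] is equivalent to *(*(a + i) + j): a + i steps over i whole rows of 4 ints. The Python sketch below computes these offsets; the 4-byte int is an assumption (typical, but platform-dependent).

```python
def element_index(i, j, cols):
    """Position of a[i][j] in the flattened row-major storage of an array with `cols` columns."""
    return i * cols + j

def byte_offset(i, j, cols, elem_size=4):    # elem_size=4 ASSUMES a 4-byte int
    return element_index(i, j, cols) * elem_size

ROWS, COLS = 3, 4
flat = list(range(ROWS * COLS))              # stand-in contents of int a[3][4]
a = [flat[r * COLS:(r + 1) * COLS] for r in range(ROWS)]
assert a[2][3] == flat[element_index(2, 3, COLS)]
print(element_index(2, 3, COLS), byte_offset(2, 3, COLS))   # 11 44
```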

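- 10 Spring 4.1 Essentials: Application Event

wiselyman

application

10.1 Application Event

Spring uses Application Events to provide a means of messaging between beans.

Messaging between beans is implemented in the following parts:

Extend the ApplicationEvent class to define your own event

Implement the ApplicationListener interface to listen for the event

Publish the message through the ApplicationContext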
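The three parts listed above (define an event, implement a listener, publish through the context) form an observer pattern. As a language-neutral illustration only, not Spring's API, here is that flow in Python: a hypothetical EventBus plays the role that the ApplicationContext's publishEvent plays in Spring, dispatching a published event to every listener registered for its type.

```python
from collections import defaultdict

class ApplicationEvent:
    def __init__(self, source):
        self.source = source

class DemoEvent(ApplicationEvent):          # part 1: a custom event type
    def __init__(self, source, msg):
        super().__init__(source)
        self.msg = msg

class EventBus:                             # HYPOTHETICAL stand-in for the publishing context
    def __init__(self):
        self._listeners = defaultdict(list)

    def add_listener(self, event_type, listener):   # part 2: register a listener
        self._listeners[event_type].append(listener)

    def publish_event(self, event):                  # part 3: publish
        for event_type, listeners in self._listeners.items():
            if isinstance(event, event_type):        # listeners also receive subclass events
                for listener in listeners:
                    listener(event)

received = []
bus = EventBus()
bus.add_listener(DemoEvent, lambda e: received.append(e.msg))
bus.publish_event(DemoEvent(source="demo", msg="hello"))
print(received)   # ['hello']
```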