【QLBD】Reproducing LSTM Sentiment Analysis Experiments (1): one-hot encoding

Note 1: Based on the blog posts by Su Jianlin (苏剑林) on the Scientific Spaces (科学空间) forum.

Note 2: This post records the details of reproducing the experiment, with the code revised where library updates required it.

Note 3: Python 3.5; Keras 2.0.9

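【QLBD】Reproducing LSTM Sentiment Analysis Experiments (1): one-hot encoding

【QLBD】Reproducing LSTM Sentiment Analysis Experiments (2): word segmentation & one-hot

【QLBD】Reproducing LSTM Sentiment Analysis Experiments (3): one embedding

【QLBD】Reproducing LSTM Sentiment Analysis Experiments (4): word embedding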

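The smallest unit of written Chinese is the character (字), and one level above it is the word (词). Text can therefore be represented with either characters or words as the basic unit. Using words requires word segmentation, which will be covered in a later post. With characters as the basic unit, one option is to represent each character with one-hot encoding.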

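The model tested in this post uses the one-hot representation: it works at the character level without word segmentation, truncates every sentence to 200 characters (shorter sentences are padded with blank positions, which become all-zero rows in the matrix), and feeds each sentence to an LSTM as a one-hot matrix for training and classification.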
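A minimal NumPy sketch of this representation, for illustration only: each character is looked up in a vocabulary, the sequence is cut to `maxlen`, and unused positions stay as all-zero rows. The function name `one_hot_matrix`, the four-character vocabulary and `maxlen=5` are made-up assumptions; the experiment below uses `maxlen = 200` and a vocabulary of 2417 characters.

```python
import numpy as np

def one_hot_matrix(text, vocab, maxlen):
    """Encode `text` character by character as a (maxlen, len(vocab)) one-hot matrix.

    Characters missing from `vocab` are skipped, the sequence is truncated
    to `maxlen`, and the remaining rows stay all-zero (the padding).
    """
    ids = [vocab[ch] for ch in text if ch in vocab][:maxlen]
    mat = np.zeros((maxlen, len(vocab)), dtype=np.float32)
    mat[np.arange(len(ids)), ids] = 1.0  # exactly one 1 per row for real characters
    return mat

# Hypothetical 4-character vocabulary; the index is the column in the matrix
vocab = {"很": 0, "好": 1, "不": 2, "差": 3}
m = one_hot_matrix("很好!", vocab, maxlen=5)  # "!" is not in vocab and is dropped
print(m.shape)        # (5, 4)
print(m.sum(axis=1))  # [1. 1. 0. 0. 0.]
```

Each row is one character position, so a sentence always becomes a matrix of the same shape regardless of its length.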

I won't go into the pros and cons of one-hot encoding here; instead, let's follow the experiment step by step through the code.

1. Importing modules

import numpy as np
import pandas as pd
from keras.utils import np_utils
from keras.models import Sequential
from keras.layers import Dense, Activation, Dropout
from keras.layers import LSTM
import sys

sys.setrecursionlimit(10000)  # raise the maximum recursion (stack) depth; the default is 1000, which caused an error here

2. Corpus

pos = pd.read_excel('E:/Coding/yuliao/pos.xls', header=None)  # 10677 documents
pos['label'] = 1
neg = pd.read_excel('E:/Coding/yuliao/neg.xls', header=None)  # 10428 documents
neg['label'] = 0
all_ = pd.concat([pos, neg], ignore_index=True)  # 21105 documents in total; DataFrame.append was removed in pandas 2.0

maxlen = 200  # number of characters kept per document

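min_count = 20  # characters occurring fewer times than this are dropped (the simplest form of dimensionality reduction)

content = ''.join(all_[0])  # concatenate the whole corpus into one string
abc = pd.Series(list(content)).value_counts()  # frequency of every character
abc = abc[abc >= min_count]  # drop rare characters
abc[:] = range(len(abc))  # renumber the characters 0-2416 in order; each integer stands for one character
word_set = set(abc.index)  # the vocabulary

def doc2num(s, maxlen):  # convert a text into a list of character ids, maxlen=200
    s = [i for i in s if i in word_set]  # keep only in-vocabulary characters
    s = s[:maxlen]  # truncate to 200 characters
    return list(abc[s])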
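For readers without pandas at hand, the vocabulary step can be sketched with `collections.Counter`. This is an illustrative equivalent, not the code used in the experiment: `build_vocab` and `doc_to_ids` are hypothetical names, and characters with equal counts may receive ids in a different order than with `value_counts()`.

```python
from collections import Counter

def build_vocab(texts, min_count):
    """Map every character seen at least `min_count` times to an integer id.

    Ids follow descending frequency, mirroring value_counts() followed by
    renumbering with range(len(...)).
    """
    counts = Counter("".join(texts))
    kept = [ch for ch, n in counts.most_common() if n >= min_count]
    return {ch: i for i, ch in enumerate(kept)}

def doc_to_ids(s, vocab, maxlen):
    """Drop out-of-vocabulary characters, then truncate to maxlen ids."""
    return [vocab[ch] for ch in s if ch in vocab][:maxlen]

texts = ["好好好", "不好", "不差"]
vocab = build_vocab(texts, min_count=2)  # 好 appears 4 times, 不 twice, 差 once
print(vocab)                            # {'好': 0, '不': 1}
print(doc_to_ids("不好差好", vocab, 3))   # [1, 0, 0]
```

As in `doc2num`, filtering happens before truncation, so rare characters do not use up any of the `maxlen` positions.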

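all_['doc2num'] = all_[0].apply(lambda s: doc2num(s, maxlen))  # convert every text into an id vector
## each text in all_ is now represented as: [8, 65, 795, 90, 152, 152, 289, 37, 22, 49, 12...

idx = list(range(len(all_)))  # list of row indices
np.random.shuffle(idx)  # shuffle the order of the documents
all_ = all_.loc[idx]  # rebuild the table in the shuffled order

# Build the arrays in the form Keras expects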

x = np.array(list(all_['doc2num']), dtype=object)  # one variable-length id list per document; newer NumPy requires dtype=object for ragged lists
y = np.array(list(all_['label']))
y = y.reshape((-1, 1))  # reshape the labels into a column

# x: array([list([8, 65, 795, 90, 152, 152, 289, 37, 22, 49, 125, 324, 64, 28, 38, 261, 1, 876, 74, 248, 43, 54, 175, 537, 213, 671, 0, 33, 713, 8, 373, 222, 1, 3, 10, 534, 29, 420, 261, 324, 64, 976, 832, 1, 120, 64, 15, 674, 106, 480, 70, 51, 169, 42, 28, 46, 95, 268, 83, 5, 51, 152, 42, 28, 0, 269, 41, 6, 256, 1, 3, 15, 10, 51, 152, 42, 28, 111, 333, 625, 211, 326, 54, 180, 74, 70, 122, 19, 19, 19, 8, 45, 24, 9, 373, 222, 0, 151, 47, 231, 534, 120, 64, 125, 72, 142, 32, 9, 4, 68, 0, 91, 70, 215, 4, 453, 353, 118, 8, 45, 24, 61, 824, 742, 1, 4, 194, 518, 0, 151, 47, 10, 54, 1206, 529, 1, 143, 921, 71, 31, 5, 603, 175, 276, 3, 691, 37, 731, 314, 372, 314, 3, 6, 17, 713, 12, 2227, 2215, 1, 109, 131, 0, 66, 8, 4, 403, 222, 824, 742, 25, 3, 10, 84, 0, 37, 4, 82, 175, 276, 49, 607, 5, 601, 81, 54, 462, 815, 0, 8, 57, 20, 32, 194, 745, 144]),
#            list([667, 426, 581, 635, 478, 196, 294, 140, 99, 1071, 1052, 0, 47, 127, 44, 4, 506, 129, 428, 559, 341, 382, 144, 489, 100, 667, 426, 581, 635, 478, 196, 294, 0, 622, 591, 129, 478, 265, 140, 99, 1150, 313, 0, 483, 5, 1154, 115, 323, 1432, 126, 290, 0, 40, 28, 6, 481, 165, 620, 154, 166, 166, 166, 166, 13, 20, 7, 458, 135, 7, 150, 187, 0, 33, 32, 3, 7, 257, 129, 881, 1036, 1, 1049, 504, 0, 114, 288, 178, 106, 129, 163, 458, 135, 192, 7, 150, 187, 74, 86]), ...], dtype=object)
# y: array([[0],
#           [0],
#           [1],
#           ...,
#           [0],
#           [0],
#           [1]], dtype=int64)

8. Modeling

# Build the model, maxlen=200
model = Sequential()
model.add(LSTM(128, input_shape=(maxlen, len(abc))))
model.add(Dropout(0.5))
model.add(Dense(1))
model.add(Activation('sigmoid'))
model.compile(loss='binary_crossentropy',
              optimizer='rmsprop',
              metrics=['accuracy'])

# The one-hot matrix of a single sentence has size maxlen*len(abc), which consumes a lot of memory

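# To allow testing on low-memory PCs, a generator is used to build the one-hot matrices,
# which are created only when they are actually needed
batch_size = 128  # batch size
train_num = 15000  # size of the training set

# Sentences shorter than 200 characters are padded with all-zero rows (maxlen=200), so each sentence becomes a 200*2417 matrix
gen_matrix = lambda z: np.vstack((np_utils.to_categorical(z, len(abc)), np.zeros((maxlen - len(z), len(abc)))))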
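A rough estimate of why a generator is needed, assuming 8-byte float64 entries (the default dtype of `np.zeros`) and the sizes used in this experiment: maxlen = 200, 2417 characters, 21105 documents and batches of 128. `onehot_bytes` is a hypothetical helper.

```python
def onehot_bytes(n_docs, maxlen, vocab_size, bytes_per_value=8):
    """Bytes needed to hold n_docs one-hot matrices of shape (maxlen, vocab_size)."""
    return n_docs * maxlen * vocab_size * bytes_per_value

GiB = 1024 ** 3
per_doc = onehot_bytes(1, 200, 2417)    # one sentence
full = onehot_bytes(21105, 200, 2417)   # the whole corpus at once
batch = onehot_bytes(128, 200, 2417)    # one batch from the generator
print(per_doc)               # 3867200
print(round(full / GiB, 1))  # 76.0
print(round(batch / GiB, 2)) # 0.46
```

So materializing every one-hot matrix at once would take roughly 76 GiB, whereas a single batch needs just under 0.5 GiB.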

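# Define the data generator
def data_generator(data, labels, batch_size):
    # consecutive index ranges, one per batch; ceiling division avoids an empty trailing batch
    n_batches = (len(data) + batch_size - 1) // batch_size
    batches = [range(batch_size*i, min(len(data), batch_size*(i+1))) for i in range(n_batches)]
    while True:  # Keras expects the generator to loop indefinitely
        for i in batches:
            # one-hot matrices are built only for the current batch
            xx, yy = np.array(list(map(gen_matrix, data[i]))), labels[i]
            yield (xx, yy)

# batches: [range(0, 128),
#           range(128, 256),
#           range(256, 384), ...]
# np_utils.to_categorical performs the one-hot encoding; np.vstack((a, b)) stacks matrices a and b vertically
# In Python 3, map() returns an iterator rather than a list, so it has to be wrapped as list(map(...))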
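The batch arithmetic behind the generator and the step counts can be checked on its own. This pure-Python sketch (the helpers `make_batches` and `naive_batch_count` are hypothetical) builds the same kind of index ranges using ceiling division: 15000 training samples give 118 batches, the last holding 24 samples, and the 6105 test samples give 48 batches. It also shows the edge case of counting batches as `int(n / batch_size) + 1`, which adds an empty range when n is an exact multiple of the batch size.

```python
import math

def make_batches(n_samples, batch_size):
    """Consecutive index ranges, one per mini-batch; the last may be shorter."""
    n_batches = math.ceil(n_samples / batch_size)
    return [range(batch_size * i, min(n_samples, batch_size * (i + 1)))
            for i in range(n_batches)]

def naive_batch_count(n_samples, batch_size):
    """Batch count computed as int(n / batch_size) + 1."""
    return int(n_samples / batch_size) + 1

train_batches = make_batches(15000, 128)
print(len(train_batches), len(train_batches[-1]))  # 118 24
print(len(make_batches(6105, 128)))                # 48
# Exact multiple: the naive count includes one extra, empty batch
print(naive_batch_count(256, 128), len(make_batches(256, 128)))  # 3 2
```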

10. Training

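model.fit_generator(data_generator(x[:train_num], y[:train_num], batch_size), steps_per_epoch=int(np.ceil(train_num / batch_size)), epochs=50)  # steps_per_epoch = 118
# Note: Keras 2 no longer uses the samples_per_epoch parameter. It uses steps_per_epoch instead, an integer giving the number of batches drawn per epoch: steps_per_epoch = ceil(train_num / batch_size) = ceil(15000 / 128) = 118. Likewise, nb_epoch has been renamed to epochs.

11. Model testing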

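model.evaluate_generator(data_generator(x[train_num:], y[train_num:], batch_size), steps=50)
# Note: Keras 2 no longer uses the val_samples parameter. It uses steps instead, the number of batches to draw from the generator. The 6105 test samples form ceil(6105 / 128) = 48 batches, so steps=50 (used for the result below) evaluates the first two batches twice; steps=48 would cover each test sample exactly once.

12. Results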

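The experiment ran on a GeForce 940MX GPU (a hard-up student's own setup, good enough to get by). Each epoch took about 155 s, and the model was trained for 50 epochs.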

Training-set accuracy: 0.9511; test-set accuracy: 0.88413771399910435.

The training log is as follows:

Epoch 1/50

118/118 [==============================] - 159s 1s/step - loss: 0.6897 - acc: 0.5360

Epoch 2/50

118/118 [==============================] - 155s 1s/step - loss: 0.6693 - acc: 0.6070

Epoch 3/50

118/118 [==============================] - 154s 1s/step - loss: 0.6687 - acc: 0.5774

Epoch 4/50

118/118 [==============================] - 154s 1s/step - loss: 0.6890 - acc: 0.5328

Epoch 5/50

118/118 [==============================] - 154s 1s/step - loss: 0.6694 - acc: 0.5874

Epoch 6/50

118/118 [==============================] - 155s 1s/step - loss: 0.6162 - acc: 0.6806

Epoch 7/50

118/118 [==============================] - 155s 1s/step - loss: 0.6257 - acc: 0.6446

Epoch 8/50

118/118 [==============================] - 155s 1s/step - loss: 0.6214 - acc: 0.6621

Epoch 9/50

118/118 [==============================] - 158s 1s/step - loss: 0.6214 - acc: 0.6476

Epoch 10/50

118/118 [==============================] - 155s 1s/step - loss: 0.6134 - acc: 0.6279

Epoch 11/50

118/118 [==============================] - 154s 1s/step - loss: 0.6257 - acc: 0.6110

Epoch 12/50

118/118 [==============================] - 155s 1s/step - loss: 0.5853 - acc: 0.6810

Epoch 13/50

118/118 [==============================] - 155s 1s/step - loss: 0.5562 - acc: 0.6740

Epoch 14/50

118/118 [==============================] - 155s 1s/step - loss: 0.5573 - acc: 0.6978

Epoch 15/50

118/118 [==============================] - 155s 1s/step - loss: 0.6018 - acc: 0.6500

Epoch 16/50

118/118 [==============================] - 155s 1s/step - loss: 0.5985 - acc: 0.6574

Epoch 17/50

118/118 [==============================] - 155s 1s/step - loss: 0.6494 - acc: 0.6494

Epoch 18/50

118/118 [==============================] - 155s 1s/step - loss: 0.5674 - acc: 0.6869

Epoch 19/50

118/118 [==============================] - 157s 1s/step - loss: 0.5080 - acc: 0.7618

Epoch 20/50

118/118 [==============================] - 155s 1s/step - loss: 0.5805 - acc: 0.7010

Epoch 21/50

118/118 [==============================] - 155s 1s/step - loss: 0.5836 - acc: 0.6854

Epoch 22/50

118/118 [==============================] - 155s 1s/step - loss: 0.5778 - acc: 0.7051

Epoch 23/50

118/118 [==============================] - 155s 1s/step - loss: 0.5744 - acc: 0.6682

Epoch 24/50

118/118 [==============================] - 154s 1s/step - loss: 0.5482 - acc: 0.6822

Epoch 25/50

118/118 [==============================] - 158s 1s/step - loss: 0.5583 - acc: 0.6722

Epoch 26/50

118/118 [==============================] - 155s 1s/step - loss: 0.5428 - acc: 0.7136

Epoch 27/50

118/118 [==============================] - 155s 1s/step - loss: 0.5123 - acc: 0.7660

Epoch 28/50

118/118 [==============================] - 155s 1s/step - loss: 0.5067 - acc: 0.7396

Epoch 29/50

118/118 [==============================] - 155s 1s/step - loss: 0.5952 - acc: 0.7206

Epoch 30/50

118/118 [==============================] - 156s 1s/step - loss: 0.7223 - acc: 0.4976

Epoch 31/50

118/118 [==============================] - 155s 1s/step - loss: 0.6380 - acc: 0.6132

Epoch 32/50

118/118 [==============================] - 158s 1s/step - loss: 0.4696 - acc: 0.7926

Epoch 33/50

118/118 [==============================] - 155s 1s/step - loss: 0.4085 - acc: 0.8298

Epoch 34/50

118/118 [==============================] - 155s 1s/step - loss: 0.3881 - acc: 0.8446

Epoch 35/50

118/118 [==============================] - 155s 1s/step - loss: 0.4269 - acc: 0.8049

Epoch 36/50

118/118 [==============================] - 155s 1s/step - loss: 0.4422 - acc: 0.8034

Epoch 37/50

118/118 [==============================] - 155s 1s/step - loss: 0.3943 - acc: 0.8330

Epoch 38/50

118/118 [==============================] - 157s 1s/step - loss: 0.3226 - acc: 0.8753

Epoch 39/50

118/118 [==============================] - 155s 1s/step - loss: 0.2895 - acc: 0.8893

Epoch 40/50

118/118 [==============================] - 155s 1s/step - loss: 0.2597 - acc: 0.9065

Epoch 41/50

118/118 [==============================] - 156s 1s/step - loss: 0.2800 - acc: 0.8984

Epoch 42/50

118/118 [==============================] - 159s 1s/step - loss: 0.2323 - acc: 0.9189

Epoch 43/50

118/118 [==============================] - 157s 1s/step - loss: 0.2111 - acc: 0.9266

Epoch 44/50

118/118 [==============================] - 157s 1s/step - loss: 0.1933 - acc: 0.9352

Epoch 45/50

118/118 [==============================] - 156s 1s/step - loss: 0.1822 - acc: 0.9379

Epoch 46/50

118/118 [==============================] - 156s 1s/step - loss: 0.1721 - acc: 0.9419

Epoch 47/50

118/118 [==============================] - 156s 1s/step - loss: 0.1632 - acc: 0.9490

Epoch 48/50

118/118 [==============================] - 156s 1s/step - loss: 0.1535 - acc: 0.9486

Epoch 49/50

118/118 [==============================] - 157s 1s/step - loss: 0.1489 - acc: 0.9511

Epoch 50/50

118/118 [==============================] - 156s 1s/step - loss: 0.1497 - acc: 0.9511

# Test: [0.31111064370070929, 0.88413771399910435]  # [loss, acc]

13. Predicting a single sentence

def predict_one(s): #单个句子的预测函数

s = gen_matrix(doc2num(s, maxlen))

s = s.reshape((1, s.shape[0], s.shape[1]))

return model.predict_classes(s, verbose=0)[0][0]comment = pd.read_excel('E:/Coding/comment/sum.xls') #载入待预测语料

comment = comment[comment['rateContent'].notnull()] #评论非空

comment['text'] = comment['rateContent'] #提取评论共11182篇#取一百篇用模型预测,太多耗时比较长

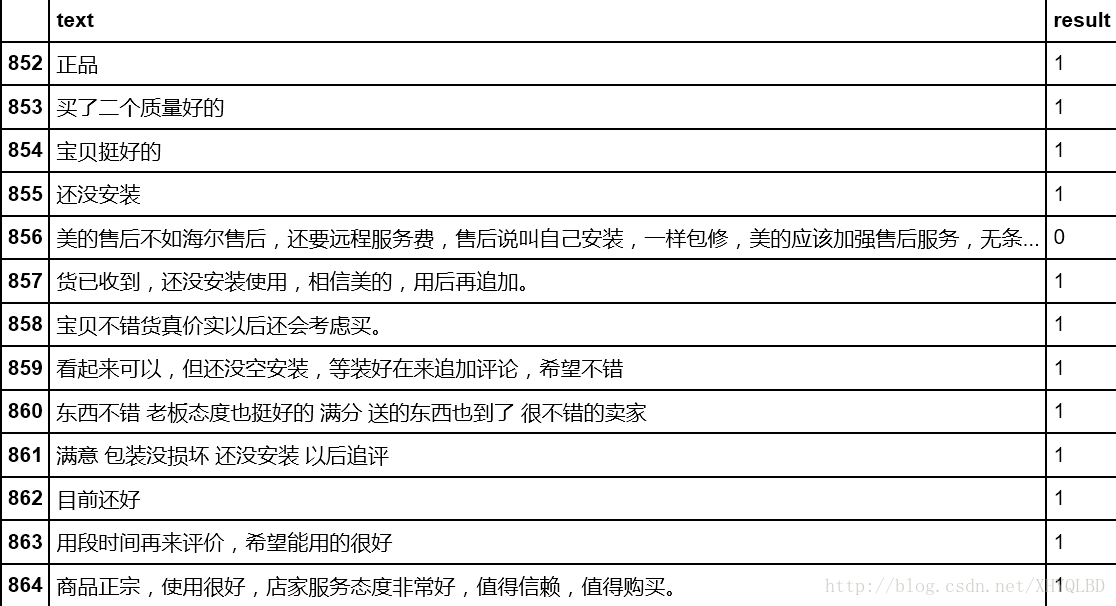

comment['text'][500:600].apply(lambda s: predict_one(s))#结果如图:
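`predict_classes` only exists on older Keras `Sequential` models and has been removed from newer TensorFlow/Keras releases. For a single sigmoid output, Keras 2.0.x implemented it as thresholding the predicted probability at 0.5, so the same labels can be obtained from `model.predict`. The sketch below shows only that thresholding step on made-up probabilities; `probs_to_classes` is a hypothetical helper.

```python
import numpy as np

def probs_to_classes(proba, threshold=0.5):
    """Turn sigmoid outputs of shape (n, 1) into 0/1 labels, as predict_classes did."""
    return (np.asarray(proba) > threshold).astype("int32")

# Hypothetical outputs of model.predict(...) for three sentences
proba = np.array([[0.91], [0.12], [0.50]])
print(probs_to_classes(proba)[:, 0])  # [1 0 0]
```

With the trained model, `predict_one` could then end with `return probs_to_classes(model.predict(s, verbose=0))[0][0]`.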

(End of Experiment 1)
