PyTorch Study Notes 11: Several Ways to Inspect a Model's Layers and Parameters

First, we create a simple two-layer model. The first layer is a `FlattenLayer`, which has no parameters, and the second is a `Linear` layer, which does.

```python
import torch
from torch import nn
from collections import OrderedDict

num_inputs = 784
num_outputs = 10

class FlattenLayer(nn.Module):
    def __init__(self):
        super(FlattenLayer, self).__init__()

    def forward(self, x):  # x shape: (batch, *, *, ...)
        # Keep the batch dimension and flatten everything else
        return x.view(x.shape[0], -1)

net = nn.Sequential(
    # Positional form (the layers would be named '0' and '1'):
    # FlattenLayer(),
    # nn.Linear(num_inputs, num_outputs)
    # Named form: the OrderedDict gives each layer a readable name
    OrderedDict([
        ('flatten', FlattenLayer()),
        ('linear', nn.Linear(num_inputs, num_outputs))])
)
```
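As a quick sanity check of the model above, here is a minimal sketch that runs a forward pass, assuming a hypothetical batch of four single-channel 28x28 inputs (MNIST-style, so 1 * 28 * 28 = 784). It shows `FlattenLayer` turning each sample into a 784-element vector before `Linear` maps it to 10 outputs. Because the layers were named through the `OrderedDict`, each one can also be reached as an attribute such as `net.flatten`.

```python
from collections import OrderedDict

import torch
from torch import nn

num_inputs, num_outputs = 784, 10

class FlattenLayer(nn.Module):
    def forward(self, x):
        # Keep the batch dimension, flatten all remaining dimensions
        return x.view(x.shape[0], -1)

net = nn.Sequential(OrderedDict([
    ('flatten', FlattenLayer()),
    ('linear', nn.Linear(num_inputs, num_outputs)),
]))

# Hypothetical input: 4 single-channel 28x28 images (1 * 28 * 28 = 784)
x = torch.randn(4, 1, 28, 28)

flat = net.flatten(x)  # named layers are also accessible as attributes
out = net(x)           # full forward pass: flatten -> linear

print(flat.shape)  # torch.Size([4, 784])
print(out.shape)   # torch.Size([4, 10])
```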

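1. `.state_dict()`

```python
# Print the whole state dict (an OrderedDict mapping names to tensors)
print(net.state_dict())

# Print only the keys
for name in net.state_dict():
    print(name)
```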

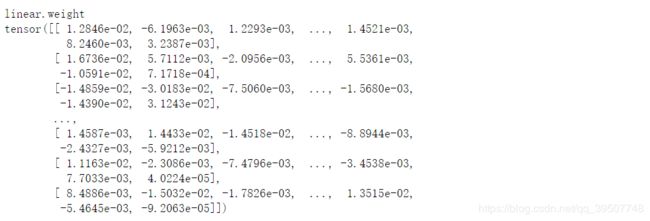
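`state_dict()` only contains layers that own parameters or buffers, so the parameter-free `FlattenLayer` contributes no keys. The sketch below rebuilds the same model and prints each key with its shape. The key prefix is the layer name, so the positional form in the commented-out lines would produce `1.weight` and `1.bias` instead of `linear.weight` and `linear.bias`. By default (`keep_vars=False`) the returned tensors are detached, so `requires_grad` is `False` and `.numpy()` can be called on them directly.

```python
from collections import OrderedDict

from torch import nn

class FlattenLayer(nn.Module):
    def forward(self, x):
        return x.view(x.shape[0], -1)

named_net = nn.Sequential(OrderedDict([
    ('flatten', FlattenLayer()),
    ('linear', nn.Linear(784, 10)),
]))
# Same layers added positionally: they are named '0' and '1'
positional_net = nn.Sequential(FlattenLayer(), nn.Linear(784, 10))

sd = named_net.state_dict()
for name, tensor in sd.items():
    # Tensors in a state_dict are detached by default (keep_vars=False)
    print(name, tuple(tensor.shape), tensor.requires_grad)
# linear.weight (10, 784) False
# linear.bias (10,) False

print(list(positional_net.state_dict().keys()))  # ['1.weight', '1.bias']

weight_np = sd['linear.weight'].numpy()  # works without .detach()
```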

2. `.named_parameters()`

```python
# A list of (name, Parameter) tuples
print(list(net.named_parameters()))

for name, param in net.named_parameters():
    print(name)
    print(param)
```
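Printing every full tensor quickly floods the console. A small sketch (rebuilding the same model) that prints only each parameter's name, shape and `requires_grad` flag, and sums `numel()` to get the total parameter count: 784 x 10 weights plus 10 biases gives 7850.

```python
from collections import OrderedDict

from torch import nn

class FlattenLayer(nn.Module):
    def forward(self, x):
        return x.view(x.shape[0], -1)

net = nn.Sequential(OrderedDict([
    ('flatten', FlattenLayer()),
    ('linear', nn.Linear(784, 10)),
]))

total = 0
for name, param in net.named_parameters():
    # Parameters are trainable by default, so requires_grad is True
    print(name, tuple(param.shape), param.requires_grad)
    total += param.numel()
# linear.weight (10, 784) True
# linear.bias (10,) True

print("total parameters:", total)  # total parameters: 7850
```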

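If you only want the values as a NumPy array, you cannot call `param.numpy()` directly, because the parameter has `requires_grad=True`; use `param.detach().numpy()` instead.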
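The sketch below (same model) shows the difference: calling `.numpy()` on a parameter that requires gradients raises a `RuntimeError`, while `.detach().numpy()` succeeds. The resulting array shares memory with the parameter, so later in-place updates to the weights (for example by an optimizer step) show up in it; calling `.copy()` gives an independent snapshot. The `zero_()` call is only there to simulate such an update.

```python
from collections import OrderedDict

import torch
from torch import nn

class FlattenLayer(nn.Module):
    def forward(self, x):
        return x.view(x.shape[0], -1)

net = nn.Sequential(OrderedDict([
    ('flatten', FlattenLayer()),
    ('linear', nn.Linear(784, 10)),
]))
weight = net.linear.weight

raised = False
try:
    weight.numpy()  # fails: the parameter requires grad
except RuntimeError as err:
    raised = True
    print("RuntimeError:", err)

shared = weight.detach().numpy()           # shares memory with the parameter
snapshot = weight.detach().numpy().copy()  # independent copy

with torch.no_grad():
    weight.zero_()  # simulated in-place update, like an optimizer step

# shared reflects the update (all zeros); snapshot keeps the old values
print(shared.max(), snapshot.max())
```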

We can also retrieve a parameter's name and value like this:

```python
params = list(net.named_parameters())
print(params[0][0])       # name
print(params[0][1].data)  # data
```
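Indexing with `params[0][0]` depends on the order in which parameters were registered. A sketch (same model) that instead turns `named_parameters()` into a dict and looks parameters up by name. It uses `.detach()` rather than `.data`: both return a tensor that shares storage and is not tracked by autograd, but `.detach()` is generally preferred because autograd can still detect in-place changes made through it, which `.data` hides.

```python
from collections import OrderedDict

from torch import nn

class FlattenLayer(nn.Module):
    def forward(self, x):
        return x.view(x.shape[0], -1)

net = nn.Sequential(OrderedDict([
    ('flatten', FlattenLayer()),
    ('linear', nn.Linear(784, 10)),
]))

# Each item is a (name, Parameter) tuple, so dict() maps name -> Parameter
params = dict(net.named_parameters())
print(list(params))  # ['linear.weight', 'linear.bias']

bias = params['linear.bias'].detach()  # look up by name instead of position
print(bias.shape)  # torch.Size([10])
```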

3. `.modules()`

```python
# Recursively yields every module, starting with net itself
print(list(net.modules()))

for layer in net.modules():
    print(layer)
```

Output:

```text
[Sequential(
  (flatten): FlattenLayer()
  (linear): Linear(in_features=784, out_features=10, bias=True)
), FlattenLayer(), Linear(in_features=784, out_features=10, bias=True)]
Sequential(
  (flatten): FlattenLayer()
  (linear): Linear(in_features=784, out_features=10, bias=True)
)
FlattenLayer()
Linear(in_features=784, out_features=10, bias=True)
```
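Because `.modules()` walks the whole module tree and yields the container itself first, a common use is filtering layers by type. A minimal sketch (same model) that collects every `nn.Linear` layer, together with `named_modules()`, which yields the same modules paired with their names (the top-level container gets the empty name `''`).

```python
from collections import OrderedDict

from torch import nn

class FlattenLayer(nn.Module):
    def forward(self, x):
        return x.view(x.shape[0], -1)

net = nn.Sequential(OrderedDict([
    ('flatten', FlattenLayer()),
    ('linear', nn.Linear(784, 10)),
]))

all_modules = list(net.modules())
print(len(all_modules))  # 3: the Sequential itself, FlattenLayer, Linear

# Keep only the Linear layers, e.g. to inspect or re-initialize them
linears = [m for m in net.modules() if isinstance(m, nn.Linear)]
print(linears)  # [Linear(in_features=784, out_features=10, bias=True)]

for name, module in net.named_modules():
    print(repr(name), type(module).__name__)
# '' Sequential
# 'flatten' FlattenLayer
# 'linear' Linear
```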

4. `._modules`

```python
# An OrderedDict of the direct sub-layers: name -> layer
print(net._modules)
print(net._modules.items())

for name, layer in net._modules.items():
    print(name)
    print(layer)
```

Output:

```text
OrderedDict([('flatten', FlattenLayer()), ('linear', Linear(in_features=784, out_features=10, bias=True))])
odict_items([('flatten', FlattenLayer()), ('linear', Linear(in_features=784, out_features=10, bias=True))])
flatten
FlattenLayer()
linear
Linear(in_features=784, out_features=10, bias=True)
```
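By Python convention, the leading underscore marks `_modules` as an internal attribute, so relying on it is less robust than using a public method. A sketch (same model) showing that the public `named_children()` yields the same (name, layer) pairs for the direct sub-layers, and that each layer can also be reached by attribute or by index.

```python
from collections import OrderedDict

from torch import nn

class FlattenLayer(nn.Module):
    def forward(self, x):
        return x.view(x.shape[0], -1)

net = nn.Sequential(OrderedDict([
    ('flatten', FlattenLayer()),
    ('linear', nn.Linear(784, 10)),
]))

# Public counterpart of net._modules.items(): direct children only
for name, layer in net.named_children():
    print(name, layer)
# flatten FlattenLayer()
# linear Linear(in_features=784, out_features=10, bias=True)

# The same Linear layer, reached three ways
print(net._modules['linear'] is net.linear is net[1])  # True
```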