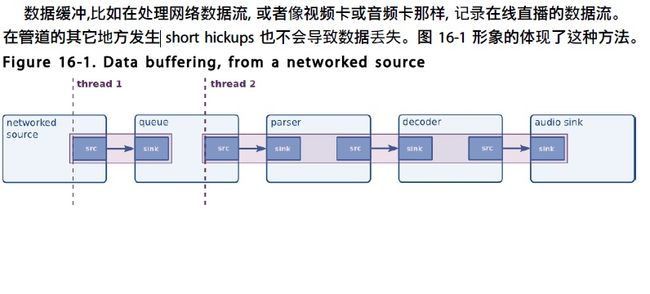

GStreamer network buffering mechanism

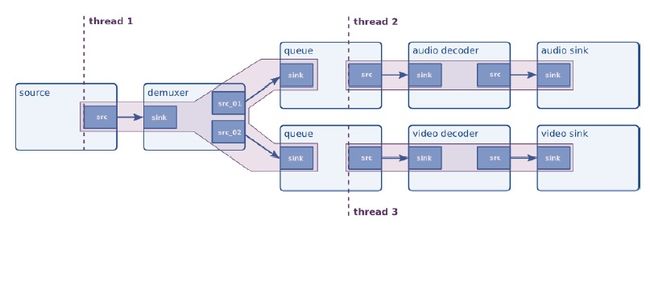

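Synchronizing output devices: for example, when playing a stream that contains both video and audio, giving each output its own thread lets the audio and video streams run independently and stay better synchronized.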

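The Plugin Writer's Guide describes in detail how to write elements for the GStreamer framework. This section only discusses how to embed elements statically in your application, which is useful for application-specific elements.

A dynamically loaded plugin contains a structure defined with GST_PLUGIN_DEFINE (), which is loaded when the GStreamer core loads the plugin. This structure contains an initialization function (plugin_init) that is called after loading; its main job is to register the plugin's elements with the GStreamer framework. If you want to embed elements directly in your application, you only need to replace GST_PLUGIN_DEFINE () with GST_PLUGIN_DEFINE_STATIC (). The elements are then registered when your application starts and can be used like any other element, without loading a dynamic library. You can then call gst_element_factory_make ("myelement-name", "some-name") to create an instance of the element.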

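Playbin has the following features, all of which were covered earlier:

- Settable video and audio outputs (via the "video-sink" and "audio-sink" properties).
- Controllable and trackable as a GstElement, including error handling, EOS handling, tag handling, state handling (through the GstBus), and media position handling and querying.
- Buffered network sources, with buffer-fullness notifications delivered through the GstBus.
- Visualization support for audio-only media.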

GstQueue element description

Data is queued until one of the limits specified by the "max-size-buffers", "max-size-bytes" and/or "max-size-time" properties has been reached. Any attempt to push more buffers into the queue will block the pushing thread until more space becomes available.

The queue will create a new thread on the source pad to decouple the processing on the sink and source pads.

You can query how many buffers are queued by reading the "current-level-buffers" property. You can track changes by connecting to the notify::current-level-buffers signal (which, like all signals, will be emitted from the streaming thread). The same applies to the "current-level-time" and "current-level-bytes" properties.

The default queue size limits are 200 buffers, 10 MB of data, or one second's worth of data, whichever is reached first.

As said earlier, the queue blocks by default when one of the specified maximums (bytes, time, buffers) has been reached. You can set the "leaky" property to specify that instead of blocking it should leak (drop) new or old buffers.

The "underrun" signal is emitted when the queue has less data than the specified minimum thresholds require (by default: when the queue is empty). The "overrun" signal is emitted when the queue is filled up. Both signals are emitted from the context of the streaming thread.

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Reposted from: http://docs.gstreamer.com/display/GstSDK/Basic+tutorial+12%3A+Streaming

Using the buffering mechanism in playbin2:

Some of the other properties of playbin2 are also relevant:


```c
/* Set flags to show Audio and Video but ignore Subtitles */
g_object_get (data.playbin2, "flags", &flags, NULL);
flags |= GST_PLAY_FLAG_VIDEO | GST_PLAY_FLAG_AUDIO;
flags &= ~GST_PLAY_FLAG_TEXT;
g_object_set (data.playbin2, "flags", flags, NULL);
```

playbin2's behavior can be changed through its flags property, which can have any combination of GstPlayFlags. The most interesting values are:

| Flag | Description |
|---|---|
| GST_PLAY_FLAG_VIDEO | Enable video rendering. If this flag is not set, there will be no video output. |
| GST_PLAY_FLAG_AUDIO | Enable audio rendering. If this flag is not set, there will be no audio output. |
| GST_PLAY_FLAG_TEXT | Enable subtitle rendering. If this flag is not set, subtitles will not be shown in the video output. |
| GST_PLAY_FLAG_VIS | Enable rendering of visualisations when there is no video stream. Playback tutorial 6: Audio visualization goes into more detail. |
| GST_PLAY_FLAG_DOWNLOAD | See Basic tutorial 12: Streaming and Playback tutorial 4: Progressive streaming. |
| GST_PLAY_FLAG_BUFFERING | See Basic tutorial 12: Streaming and Playback tutorial 4: Progressive streaming. |
| GST_PLAY_FLAG_DEINTERLACE | If the video content was interlaced, this flag instructs playbin2 to deinterlace it before displaying it. |
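GstPlayFlags is defined in playbin's own sources rather than in an installed public header. For that reason, the GStreamer tutorials declare the values they use themselves. Below is a sketch of such a declaration for the flags in the table, using the bit positions playbin uses. It also includes a hypothetical helper, `audio_video_no_text`, that performs the same bit manipulation as the snippet above, without the GObject calls.

```c
/* Local declaration of the playbin flags listed in the table. */
typedef enum {
  GST_PLAY_FLAG_VIDEO       = (1 << 0),
  GST_PLAY_FLAG_AUDIO       = (1 << 1),
  GST_PLAY_FLAG_TEXT        = (1 << 2),
  GST_PLAY_FLAG_VIS         = (1 << 3),
  GST_PLAY_FLAG_DOWNLOAD    = (1 << 7),
  GST_PLAY_FLAG_BUFFERING   = (1 << 8),
  GST_PLAY_FLAG_DEINTERLACE = (1 << 9)
} GstPlayFlags;

/* Hypothetical helper: enable audio and video, disable subtitles, and
 * leave every other bit untouched. */
unsigned int
audio_video_no_text (unsigned int flags)
{
  flags |= GST_PLAY_FLAG_VIDEO | GST_PLAY_FLAG_AUDIO;
  flags &= ~(unsigned int) GST_PLAY_FLAG_TEXT;
  return flags;
}
```

Because the helper only sets and clears individual bits, flags that were already enabled (for example GST_PLAY_FLAG_BUFFERING) survive. This is why the tutorial reads the current value with g_object_get () before modifying it.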

basic-tutorial-12.c


```c
#include <gst/gst.h>
#include <string.h>

typedef struct _CustomData {
  gboolean is_live;
  GstElement *pipeline;
  GMainLoop *loop;
} CustomData;

static void
cb_message (GstBus *bus, GstMessage *msg, CustomData *data)
{
  switch (GST_MESSAGE_TYPE (msg)) {
    case GST_MESSAGE_ERROR: {
      GError *err;
      gchar *debug;

      gst_message_parse_error (msg, &err, &debug);
      g_print ("Error: %s\n", err->message);
      g_error_free (err);
      g_free (debug);

      gst_element_set_state (data->pipeline, GST_STATE_READY);
      g_main_loop_quit (data->loop);
      break;
    }
    case GST_MESSAGE_EOS:
      /* end-of-stream */
      gst_element_set_state (data->pipeline, GST_STATE_READY);
      g_main_loop_quit (data->loop);
      break;
    case GST_MESSAGE_BUFFERING: {
      gint percent = 0;

      /* If the stream is live, we do not care about buffering. */
      if (data->is_live)
        break;

      gst_message_parse_buffering (msg, &percent);
      g_print ("Buffering (%3d%%)\r", percent);
      /* Wait until buffering is complete before start/resume playing */
      if (percent < 100)
        gst_element_set_state (data->pipeline, GST_STATE_PAUSED);
      else
        gst_element_set_state (data->pipeline, GST_STATE_PLAYING);
      break;
    }
    case GST_MESSAGE_CLOCK_LOST:
      /* Get a new clock */
      gst_element_set_state (data->pipeline, GST_STATE_PAUSED);
      gst_element_set_state (data->pipeline, GST_STATE_PLAYING);
      break;
    default:
      /* Unhandled message */
      break;
  }
}

int
main (int argc, char *argv[])
{
  GstElement *pipeline;
  GstBus *bus;
  GstStateChangeReturn ret;
  GMainLoop *main_loop;
  CustomData data;

  /* Initialize GStreamer */
  gst_init (&argc, &argv);

  /* Initialize our data structure */
  memset (&data, 0, sizeof (data));

  /* Build the pipeline ("playbin2" is the GStreamer 0.10 name; 1.x uses "playbin") */
  pipeline = gst_parse_launch ("playbin2 uri=http://docs.gstreamer.com/media/sintel_trailer-480p.webm", NULL);
  bus = gst_element_get_bus (pipeline);

  /* Start playing */
  ret = gst_element_set_state (pipeline, GST_STATE_PLAYING);
  if (ret == GST_STATE_CHANGE_FAILURE) {
    g_printerr ("Unable to set the pipeline to the playing state.\n");
    gst_object_unref (bus);
    gst_object_unref (pipeline);
    return -1;
  } else if (ret == GST_STATE_CHANGE_NO_PREROLL) {
    /* Live sources do not preroll; their buffering messages are ignored */
    data.is_live = TRUE;
  }

  main_loop = g_main_loop_new (NULL, FALSE);
  data.loop = main_loop;
  data.pipeline = pipeline;

  gst_bus_add_signal_watch (bus);
  g_signal_connect (bus, "message", G_CALLBACK (cb_message), &data);

  g_main_loop_run (main_loop);

  /* Free resources */
  g_main_loop_unref (main_loop);
  gst_object_unref (bus);
  gst_element_set_state (pipeline, GST_STATE_NULL);
  gst_object_unref (pipeline);
  return 0;
}
```
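The following is a possible refinement of the GST_MESSAGE_BUFFERING branch. It is not part of the original tutorial. The idea is to remember whether the application is currently holding the pipeline in PAUSED because of buffering, and to request a state change only when crossing the 100% mark rather than on every message. The sketch is plain C so that the decision logic can be tested on its own. The type and function names are hypothetical, and the caller would map each action onto gst_element_set_state ().

```c
#include <stdbool.h>

/* Hypothetical action type: what the bus handler should do. */
typedef enum {
  BUFFERING_ACTION_NONE,  /* leave the pipeline state unchanged */
  BUFFERING_ACTION_PAUSE, /* gst_element_set_state (pipeline, GST_STATE_PAUSED) */
  BUFFERING_ACTION_PLAY   /* gst_element_set_state (pipeline, GST_STATE_PLAYING) */
} BufferingAction;

/* Hypothetical tracker; zero-initialize it, e.g. next to is_live. */
typedef struct {
  bool buffering; /* true while paused because of buffering */
} BufferingTracker;

/* Decides what to do for one buffering message carrying `percent`. */
BufferingAction
buffering_update (BufferingTracker *tracker, bool is_live, int percent)
{
  /* Same rule as cb_message: ignore buffering for live streams. */
  if (is_live)
    return BUFFERING_ACTION_NONE;

  if (percent < 100) {
    /* Pause only on the first message of a buffering period. */
    if (!tracker->buffering) {
      tracker->buffering = true;
      return BUFFERING_ACTION_PAUSE;
    }
    return BUFFERING_ACTION_NONE;
  }

  /* 100%: resume only if buffering caused the pause. */
  if (tracker->buffering) {
    tracker->buffering = false;
    return BUFFERING_ACTION_PLAY;
  }
  return BUFFERING_ACTION_NONE;
}
```

In cb_message, the GST_MESSAGE_BUFFERING case would pass `data->is_live` and the parsed percentage to buffering_update () and call gst_element_set_state () only for PAUSE or PLAY. Because main () already sets the pipeline to PLAYING, a stream that never drops below 100% triggers no state changes at all.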