Hadoop I/O

1. HDFS Data Integrity

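HDFS computes a checksum for all data written to it and verifies the checksum whenever data is read. HDFS keeps one checksum per fixed-size chunk of data (512 bytes by default, set by dfs.bytes-per-checksum). The checksum algorithm is CRC-32; newer Hadoop releases default to the CRC-32C variant.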
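The sketch below illustrates the per-chunk idea with the JDK's java.util.zip.CRC32. It is not HDFS's own code. The class name ChunkChecksums is hypothetical, and the 512-byte chunk size is an assumption chosen to match the usual dfs.bytes-per-checksum default. Checksums are computed once "at write time" and recomputed "at read time." A single flipped bit is then pinned to the chunk that contains it.

```java
import java.util.Arrays;
import java.util.zip.CRC32;

// Illustration only: one CRC-32 per fixed-size chunk, as HDFS does conceptually.
class ChunkChecksums {
    // Assumed chunk size (the usual default of dfs.bytes-per-checksum).
    static final int BYTES_PER_CHECKSUM = 512;

    // Computes one CRC-32 value per chunk; the last chunk may be shorter.
    static long[] compute(byte[] data) {
        int chunks = (data.length + BYTES_PER_CHECKSUM - 1) / BYTES_PER_CHECKSUM;
        long[] sums = new long[chunks];
        CRC32 crc = new CRC32();
        for (int i = 0; i < chunks; i++) {
            int off = i * BYTES_PER_CHECKSUM;
            int len = Math.min(BYTES_PER_CHECKSUM, data.length - off);
            crc.reset();
            crc.update(data, off, len);
            sums[i] = crc.getValue();
        }
        return sums;
    }

    // Returns the index of the first chunk whose checksum no longer matches, or -1.
    static int firstCorruptChunk(byte[] data, long[] stored) {
        long[] actual = compute(data);
        for (int i = 0; i < actual.length; i++) {
            if (actual[i] != stored[i]) {
                return i;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        byte[] data = new byte[1300];                 // 3 chunks: 512 + 512 + 276 bytes
        Arrays.fill(data, (byte) 'a');
        long[] stored = compute(data);                // computed when the data is written
        data[700] ^= 1;                               // flip one bit inside chunk 1
        System.out.println(firstCorruptChunk(data, stored)); // prints 1
    }
}
```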

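Checksums are not only verified when data is read or written. Each datanode also runs a DataBlockScanner in a background thread. The scanner periodically verifies all the blocks stored on that datanode, so corruption is detected even in data that is rarely read.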

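When a client detects corrupt data while reading a block, it does two things. First, it reports the bad block and the datanode it came from to the namenode. Then it throws a ChecksumException. The namenode marks that replica as corrupt, so it stops directing clients to it. Next, it schedules a copy of a healthy replica to another datanode to restore the replication factor. Finally, it deletes the corrupt replica.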
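The toy model below shows the order of those recovery steps: stop using the bad replica, re-replicate from a healthy one, then delete the bad one. It is for illustration only. ToyNamenode, reportBadBlock and the block/datanode names are hypothetical, and this is not how the namenode is actually implemented.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Toy model (hypothetical classes, not HDFS code) of the namenode's reaction
// to a client reporting a corrupt replica.
class ToyNamenode {
    // block id -> datanodes holding a healthy replica
    final Map<String, List<String>> replicas = new LinkedHashMap<>();
    final List<String> allDatanodes;
    final List<String> log = new ArrayList<>();

    ToyNamenode(List<String> allDatanodes) {
        this.allDatanodes = allDatanodes;
    }

    void reportBadBlock(String blockId, String badDatanode) {
        List<String> holders = replicas.get(blockId);
        // 1. Mark the replica corrupt: clients are no longer sent to badDatanode.
        holders.remove(badDatanode);
        if (holders.isEmpty()) {
            log.add("no healthy replica left for " + blockId);
            return; // nothing healthy to copy from; the toy model stops here
        }
        // 2. Copy a healthy replica to a datanode that does not hold the block yet.
        String source = holders.get(0);
        for (String dn : allDatanodes) {
            if (!dn.equals(badDatanode) && !holders.contains(dn)) {
                holders.add(dn);
                log.add("copy " + blockId + " from " + source + " to " + dn);
                break;
            }
        }
        // 3. Only after re-replication is the corrupt replica deleted.
        log.add("delete " + blockId + " on " + badDatanode);
    }
}
```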

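To disable checksum verification, call setVerifyChecksum(false) on the FileSystem object before calling open(). This is useful for a corrupt file: you can still read and salvage part of its data before it is deleted, rather than losing it outright if the block cannot be re-replicated.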
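The sketch below models only what the flag changes. The real call is FileSystem.setVerifyChecksum(false), which needs a Hadoop client. ToyChecksummedReader is a hypothetical stand-in, and a plain IOException plays the role of Hadoop's ChecksumException (which does extend IOException). With verification on, a checksum mismatch is an error. With it off, the possibly damaged bytes are handed back so they can be inspected.

```java
import java.io.IOException;
import java.util.zip.CRC32;

// Toy model (hypothetical class, not the Hadoop API) of turning off checksum verification.
class ToyChecksummedReader {
    private boolean verifyChecksum = true;

    void setVerifyChecksum(boolean verify) {
        this.verifyChecksum = verify;
    }

    byte[] read(byte[] data, long storedCrc) throws IOException {
        CRC32 crc = new CRC32();
        crc.update(data, 0, data.length);
        if (verifyChecksum && crc.getValue() != storedCrc) {
            // Stands in for Hadoop's ChecksumException.
            throw new IOException("Checksum error");
        }
        // Verification off (or data intact): return the bytes as stored.
        return data.clone();
    }
}
```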

2. LocalFileSystem

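LocalFileSystem extends ChecksumFileSystem,

ChecksumFileSystem extends FilterFileSystem,

and FilterFileSystem extends FileSystem.

LocalFileSystem performs client-side checksumming. When it writes a file named filename, it also creates a hidden file .filename.crc in the same directory. That file holds the checksum of each chunk, and LocalFileSystem verifies those checksums when the file is read.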
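The hierarchy follows the decorator pattern. FilterFileSystem holds another FileSystem and forwards calls to it. This lets ChecksumFileSystem add checksumming on top of a raw file system; LocalFileSystem wraps a RawLocalFileSystem this way.

The in-memory sketch below mirrors that layering with hypothetical types (ToyFs, RawToyFs, FilterToyFs, ChecksumToyFs). It simplifies heavily: it stores one CRC-32 per whole file as text, while the real .crc file holds binary per-chunk checksums.

```java
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;
import java.util.zip.CRC32;

// Hypothetical minimal file-system interface (plays the role of FileSystem).
interface ToyFs {
    void write(String path, byte[] data);
    byte[] read(String path);
    boolean exists(String path);
}

// Plays the role of the raw local file system: just stores bytes.
class RawToyFs implements ToyFs {
    private final Map<String, byte[]> files = new HashMap<>();
    public void write(String path, byte[] data) { files.put(path, data.clone()); }
    public byte[] read(String path) { return files.get(path).clone(); }
    public boolean exists(String path) { return files.containsKey(path); }
}

// Like FilterFileSystem: forwards every call to the wrapped file system.
class FilterToyFs implements ToyFs {
    protected final ToyFs fs;
    FilterToyFs(ToyFs fs) { this.fs = fs; }
    public void write(String path, byte[] data) { fs.write(path, data); }
    public byte[] read(String path) { return fs.read(path); }
    public boolean exists(String path) { return fs.exists(path); }
}

// Like ChecksumFileSystem: writes a hidden ".name.crc" side file and checks it on read.
class ChecksumToyFs extends FilterToyFs {
    ChecksumToyFs(ToyFs fs) { super(fs); }

    static String crcPath(String path) {
        int slash = path.lastIndexOf('/');
        return path.substring(0, slash + 1) + "." + path.substring(slash + 1) + ".crc";
    }

    static long crc(byte[] data) {
        CRC32 c = new CRC32();
        c.update(data, 0, data.length);
        return c.getValue();
    }

    @Override
    public void write(String path, byte[] data) {
        super.write(path, data);
        super.write(crcPath(path), Long.toString(crc(data)).getBytes(StandardCharsets.UTF_8));
    }

    @Override
    public byte[] read(String path) {
        byte[] data = super.read(path);
        long stored = Long.parseLong(new String(super.read(crcPath(path)), StandardCharsets.UTF_8));
        if (crc(data) != stored) {
            throw new IllegalStateException("Checksum error: " + path);
        }
        return data;
    }
}
```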

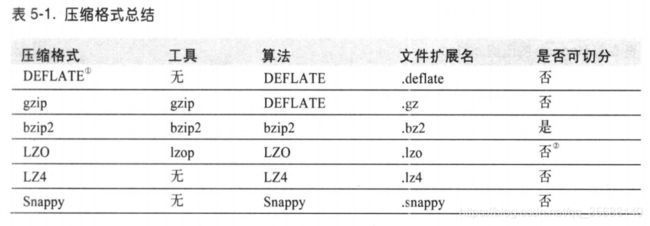

3. Compression Formats

4. Reading Compressed Data

5. Compressing a File

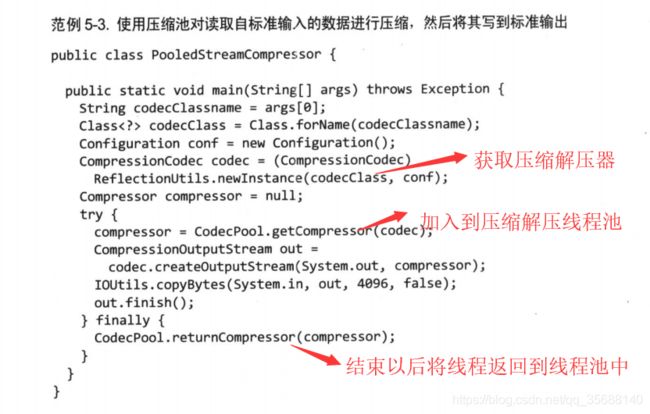

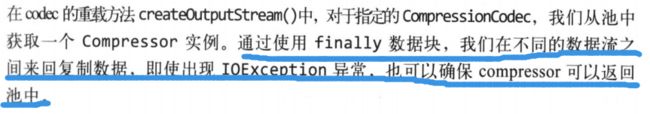
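Compression and decompression both work by wrapping an ordinary stream. The sketch below shows this with the JDK's own gzip classes, so it runs without Hadoop. In Hadoop the same roles are played by a CompressionCodec's createOutputStream(OutputStream) and createInputStream(InputStream). When reading, CompressionCodecFactory.getCodec(Path) can pick the codec from the file extension, for example .gz. The class GzipRoundTrip is hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

// JDK-only sketch of stream-wrapping compression (hypothetical helper class).
class GzipRoundTrip {
    // Compress: wrap the raw stream, write plain bytes, close to flush the gzip trailer.
    static byte[] compress(byte[] plain) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (OutputStream out = new GZIPOutputStream(bytes)) {
            out.write(plain);
        }
        return bytes.toByteArray();
    }

    // Decompress: wrap the raw stream and read plain bytes until end of stream.
    static byte[] decompress(byte[] compressed) throws IOException {
        try (InputStream in = new GZIPInputStream(new ByteArrayInputStream(compressed))) {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buf = new byte[4096];
            int n;
            while ((n = in.read(buf)) != -1) {
                out.write(buf, 0, n);
            }
            return out.toByteArray();
        }
    }
}
```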

6. Compressor/Decompressor Pool (CodecPool)

7. Handling Compressed Files

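HDFS stores data in 128 MB blocks by default, so a 1 GB gzip file is stored as 8 blocks. It is nevertheless processed by a single map task. A gzip stream cannot be decompressed starting from an arbitrary block boundary, so the file cannot be split and its blocks cannot be handed to separate map tasks.

So when processing large files, make sure the data format can be split. Examples are bzip2, which is splittable, or a container format such as SequenceFile, which compresses data block by block.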
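The arithmetic above can be written as a small helper. SplitCount is hypothetical, and it deliberately ignores the minimum/maximum split-size settings and the small slack FileInputFormat allows on the last split. In Hadoop itself, TextInputFormat.isSplitable returns false for a compressed file whose codec is not a SplittableCompressionCodec. BZip2Codec implements that interface; GzipCodec does not.

```java
// Back-of-the-envelope model (hypothetical helper): how many map tasks one input file gets.
class SplitCount {
    static final long MB = 1024L * 1024L;

    static long mapTasks(long fileSize, long blockSize, boolean splittable) {
        if (!splittable) {
            return 1; // e.g. a .gz file: one mapper must read the stream from the start
        }
        return (fileSize + blockSize - 1) / blockSize; // ceil(fileSize / blockSize)
    }

    public static void main(String[] args) {
        long oneGb = 1024 * MB;
        long block = 128 * MB;
        System.out.println(mapTasks(oneGb, block, true));  // 8 (e.g. bzip2 or uncompressed)
        System.out.println(mapTasks(oneGb, block, false)); // 1 (gzip)
    }
}
```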

8. Compressing MapReduce Output Files

9. Custom Comparators and the WritableComparable Interface

The program below picks, for each item id, the record with the highest price.

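Between map and reduce, the keys go through two steps:

1. OrderBean implements the WritableComparable interface, so the framework sorts the keys with its compareTo method: by item id, then by price in descending order.

2. ItemidGroupingComparator (a subclass of the WritableComparator class) is registered as the grouping comparator. Consecutive keys with the same item id are therefore placed in the same group and handled by a single reduce call.

The source code shows that, by default, WritableComparator's compare(WritableComparable, WritableComparable) method simply calls the keys' compareTo method. ItemidGroupingComparator overrides it so that only the item id is compared.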

```java
package com.qianliu.bigdata.mr.secondarysort;

import org.apache.hadoop.io.WritableComparable;
import org.apache.hadoop.io.WritableComparator;

/**
 * Reduce-side GroupingComparator: treats all beans with the same item id as the same key,
 * i.e. a custom grouping rule for the shuffle.
 */
public class ItemidGroupingComparator extends WritableComparator {

    // Register OrderBean and tell the framework to create instances via reflection,
    // so serialized keys can be deserialized before they are compared
    protected ItemidGroupingComparator() {
        super(OrderBean.class, true);
    }

    @SuppressWarnings("rawtypes")
    @Override
    public int compare(WritableComparable a, WritableComparable b) {
        OrderBean abean = (OrderBean) a;
        OrderBean bbean = (OrderBean) b;
        /* Compare only the item id: beans with equal ids are considered equal.
         * The key really holds id + amount; without this override the default
         * implementation would compare the whole key through compareTo. */
        return abean.getItemid().compareTo(bbean.getItemid());
    }
}
```

```java
package com.qianliu.bigdata.mr.secondarysort;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.DoubleWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.io.WritableComparable;

public class OrderBean implements WritableComparable<OrderBean> {

    private Text itemid;            // item id
    private DoubleWritable amount;  // item price (double)

    public OrderBean() {
    }

    public OrderBean(Text itemid, DoubleWritable amount) {
        set(itemid, amount);
    }

    public void set(Text itemid, DoubleWritable amount) {
        this.itemid = itemid;
        this.amount = amount;
    }

    public Text getItemid() {
        return itemid;
    }

    public DoubleWritable getAmount() {
        return amount;
    }

    @Override
    public int compareTo(OrderBean o) {
        // Text.compareTo returns 0 when the two ids are equal
        int cmp = this.itemid.compareTo(o.getItemid());
        if (cmp == 0) {
            /* DoubleWritable.compareTo orders ascending (negative when this amount is smaller).
             * Negating it orders amounts descending, so the most expensive record
             * of each item id is sorted first. */
            cmp = -this.amount.compareTo(o.getAmount());
        }
        return cmp;
    }

    @Override
    public void write(DataOutput out) throws IOException {
        out.writeUTF(itemid.toString());
        out.writeDouble(amount.get());
    }

    @Override
    public void readFields(DataInput in) throws IOException {
        String readUTF = in.readUTF();
        double readDouble = in.readDouble();
        this.itemid = new Text(readUTF);
        this.amount = new DoubleWritable(readDouble);
    }

    @Override
    public String toString() {
        return itemid.toString() + "\t" + amount.get();
    }
}
```

```java
package com.qianliu.bigdata.mr.secondarysort;

import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Partitioner;

public class ItemIdPartitioner extends Partitioner<OrderBean, NullWritable> {

    @Override
    public int getPartition(OrderBean bean, NullWritable value, int numReduceTasks) {
        // Order beans with the same id are sent to the same partition,
        // and the number of partitions equals the number of reduce tasks set by the user
        return (bean.getItemid().hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }
}
```
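The partitioner masks the hash with Integer.MAX_VALUE because a hash code can be negative. In Java, a negative number % n is negative or zero, which would not be a valid partition number. Math.abs is not a safe alternative, because Math.abs(Integer.MIN_VALUE) is still negative. Hadoop's default HashPartitioner uses the same masking formula. The helper class PartitionMath below is hypothetical and compares the three variants on raw int hashes.

```java
// Hypothetical helper comparing ways of turning a hash code into a partition number.
class PartitionMath {
    // Same formula as ItemIdPartitioner: clear the sign bit, then take the remainder.
    static int maskedPartition(int hash, int numReduceTasks) {
        return (hash & Integer.MAX_VALUE) % numReduceTasks;
    }

    // Broken: negative hashes give negative partition numbers.
    static int naivePartition(int hash, int numReduceTasks) {
        return hash % numReduceTasks;
    }

    // Also broken for Integer.MIN_VALUE, whose absolute value overflows back to itself.
    static int absPartition(int hash, int numReduceTasks) {
        return Math.abs(hash) % numReduceTasks;
    }
}
```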

```java
package com.qianliu.bigdata.mr.secondarysort;

import java.io.IOException;

import org.apache.commons.lang.StringUtils;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.DoubleWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class SecondarySort {

    static class SecondarySortMapper extends Mapper<LongWritable, Text, OrderBean, NullWritable> {

        OrderBean bean = new OrderBean();

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            String line = value.toString();
            String[] fields = StringUtils.split(line, ",");
            // fields[0] is used as the item id, fields[2] as the amount
            bean.set(new Text(fields[0]), new DoubleWritable(Double.parseDouble(fields[2])));
            context.write(bean, NullWritable.get());
        }
    }

    static class SecondarySortReducer extends Reducer<OrderBean, NullWritable, OrderBean, NullWritable> {

        // Between map and reduce the keys go through two steps:
        // 1. OrderBean implements WritableComparable, so the keys are sorted by its compareTo.
        // 2. ItemidGroupingComparator is the grouping comparator, so keys with the same id are grouped.
        // When reduce() is called, all beans with the same id form one group and the one with
        // the largest amount comes first; before the values are iterated, key holds that bean.
        @Override
        protected void reduce(OrderBean key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {
            context.write(key, NullWritable.get());
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);

        job.setJarByClass(SecondarySort.class);
        job.setMapperClass(SecondarySortMapper.class);
        job.setReducerClass(SecondarySortReducer.class);
        job.setOutputKeyClass(OrderBean.class);
        job.setOutputValueClass(NullWritable.class);

        FileInputFormat.setInputPaths(job, new Path("E:\\IDEA\\MapReduceLocalhost\\secondarysort\\input"));
        FileOutputFormat.setOutputPath(job, new Path("E:\\IDEA\\MapReduceLocalhost\\secondarysort\\output"));

        // Register the custom grouping comparator
        job.setGroupingComparatorClass(ItemidGroupingComparator.class);
        // Register the custom partitioner
        job.setPartitionerClass(ItemIdPartitioner.class);
        job.setNumReduceTasks(2);

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```
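The shuffle behaviour this job relies on can be simulated in plain Java, without a cluster, to check the logic. The simulation does three things:

- It sorts with the same ordering as OrderBean.compareTo.
- It groups with the item-id-only test of ItemidGroupingComparator.
- It emits the first key of each group, as SecondarySortReducer does.

SecondarySortSimulation and its Order class are hypothetical. The sketch models a single reducer (no partitioning) and assumes ASCII item ids, for which String ordering matches Text's byte ordering. Like the mapper, it reads fields[0] as the item id and fields[2] as the amount.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Plain-Java simulation of the secondary sort (single reducer, no Hadoop).
class SecondarySortSimulation {
    static final class Order {
        final String itemid;
        final double amount;
        Order(String itemid, double amount) { this.itemid = itemid; this.amount = amount; }
        @Override public String toString() { return itemid + "\t" + amount; }
    }

    // Same ordering as OrderBean.compareTo: item id ascending, then amount descending.
    static final Comparator<Order> SORT_ORDER =
        Comparator.comparing((Order o) -> o.itemid)
                  .thenComparing(Comparator.comparingDouble((Order o) -> o.amount).reversed());

    // Same test as ItemidGroupingComparator.compare: only the item id matters.
    static final Comparator<Order> GROUPING = Comparator.comparing((Order o) -> o.itemid);

    static List<String> run(List<String> csvLines) {
        List<Order> keys = new ArrayList<>();
        for (String line : csvLines) {              // "map": parse each CSV line
            String[] f = line.split(",");
            keys.add(new Order(f[0], Double.parseDouble(f[2])));
        }
        keys.sort(SORT_ORDER);                      // shuffle sort
        List<String> out = new ArrayList<>();
        Order groupStart = null;
        for (Order k : keys) {                      // grouping + "reduce"
            if (groupStart == null || GROUPING.compare(groupStart, k) != 0) {
                groupStart = k;
                out.add(k.toString());              // first key of the group = highest amount
            }
        }
        return out;
    }
}
```

For hypothetical input lines p2,o1,10.5 / p1,o2,3.0 / p1,o3,7.25 / p2,o4,2.0, run returns one line per item id carrying its highest amount: p1 with 7.25 and p2 with 10.5.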