Android Audio Framework: AudioPolicyService

AudioPolicyService is one of the two major services in the audio framework; the other is AudioFlinger.

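I have always found it laborious to write up an analysis by walking through the code. Recently I picked up UML, and analyzing with UML diagrams feels much clearer. When I come back to my own notes later, I can also tell at a glance how the framework is put together.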

First, look at the relationships among the main classes of AudioPolicyService.

This looks much simpler and clearer.

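AudioPolicyClient deserves a mention. It is a class nested inside AudioPolicyService that implements AudioPolicyClientInterface, and this is how it comes into being: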

    void AudioPolicyService::onFirstRef()
    {
        ......
        mAudioPolicyClient = new AudioPolicyClient(this);
        mAudioPolicyManager = createAudioPolicyManager(mAudioPolicyClient);
        ......
    }
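To make the wiring concrete, here is a minimal standalone sketch of the same hand-off. It is not AOSP code: PolicyService, PolicyManager and ClientInterface are hypothetical stand-ins for AudioPolicyService, AudioPolicyManager and AudioPolicyClientInterface, and the nested Client only records the request instead of calling AudioFlinger.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Hypothetical stand-in for AudioPolicyClientInterface: the only view of the
// service that the manager gets to see.
class ClientInterface {
public:
    virtual ~ClientInterface() = default;
    virtual int openInput(const std::string& device) = 0;
};

// Hypothetical stand-in for AudioPolicyManager: it keeps the interface
// pointer it was handed at construction time.
class PolicyManager {
public:
    explicit PolicyManager(ClientInterface* client) : mClient(client) {
        assert(client != nullptr);
    }
    int startCapture() { return mClient->openInput("builtin-mic"); }

private:
    ClientInterface* mClient;  // not owned
};

// Hypothetical stand-in for AudioPolicyService with its nested client class.
class PolicyService {
public:
    class Client : public ClientInterface {
    public:
        explicit Client(PolicyService* service) : mService(service) {}
        int openInput(const std::string& device) override {
            // A real client would forward to AudioFlinger; this one just records.
            mService->mOpened.push_back(device);
            return 0;
        }

    private:
        PolicyService* mService;
    };

    // Same order as onFirstRef(): create the client, then give it to the manager.
    PolicyService()
        : mClient(new Client(this)), mManager(new PolicyManager(mClient.get())) {}

    PolicyManager& manager() { return *mManager; }
    const std::vector<std::string>& opened() const { return mOpened; }

private:
    std::vector<std::string> mOpened;
    std::unique_ptr<Client> mClient;
    std::unique_ptr<PolicyManager> mManager;
};
```

Constructing a PolicyService and calling manager().startCapture() ends up in Client::openInput. The manager depends only on the interface, so the service stays free to decide how each request is carried out.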

    extern "C" AudioPolicyInterface* createAudioPolicyManager(
            AudioPolicyClientInterface *clientInterface)
    {
        return new AudioPolicyManager(clientInterface);
    }

    AudioPolicyManager::AudioPolicyManager(AudioPolicyClientInterface *clientInterface)
        :
    #ifdef AUDIO_POLICY_TEST
        Thread(false),
    #endif //AUDIO_POLICY_TEST
        mPrimaryOutput((audio_io_handle_t)0),
        mPhoneState(AUDIO_MODE_NORMAL),
        mLimitRingtoneVolume(false), mLastVoiceVolume(-1.0f),
        mTotalEffectsCpuLoad(0), mTotalEffectsMemory(0),
        mA2dpSuspended(false),
        mSpeakerDrcEnabled(false), mNextUniqueId(1),
        mAudioPortGeneration(1),
        mBeaconMuteRefCount(0),
        mBeaconPlayingRefCount(0),
        mBeaconMuted(false)
    {
        mUidCached = getuid();
        mpClientInterface = clientInterface;
    }

Through this pointer, AudioPolicyManager reaches AudioFlinger in two ways. The first is a direct call: the client obtains AudioFlinger from AudioSystem and forwards the request, as in openInput:

    status_t AudioPolicyService::AudioPolicyClient::openInput(audio_module_handle_t module,
                                                              audio_io_handle_t *input,
                                                              audio_config_t *config,
                                                              audio_devices_t *device,
                                                              const String8& address,
                                                              audio_source_t source,
                                                              audio_input_flags_t flags)
    {
        sp<IAudioFlinger> af = AudioSystem::get_audio_flinger();
        if (af == 0) {
            ALOGW("%s: could not get AudioFlinger", __func__);
            return PERMISSION_DENIED;
        }
        return af->openInput(module, input, config, device, address, source, flags);
    }

The other way is to call a method of AudioPolicyService, which wraps the request as a command node and puts it on the queue of AudioPolicyService::AudioCommandThread. The command thread keeps draining these nodes, and when it handles one it still calls into AudioFlinger the first way. My guess is that the queue is there for performance, since some commands are issued quite frequently. The delayMs argument in the code below also shows that a queued command can be scheduled with a delay.

    status_t AudioPolicyService::AudioPolicyClient::createAudioPatch(const struct audio_patch *patch,
                                                                     audio_patch_handle_t *handle,
                                                                     int delayMs)
    {
        return mAudioPolicyService->clientCreateAudioPatch(patch, handle, delayMs);
    }

    status_t AudioPolicyService::clientCreateAudioPatch(const struct audio_patch *patch,
                                                        audio_patch_handle_t *handle,
                                                        int delayMs)
    {
        return mAudioCommandThread->createAudioPatchCommand(patch, handle, delayMs);
    }

    status_t AudioPolicyService::AudioCommandThread::createAudioPatchCommand(
            const struct audio_patch *patch,
            audio_patch_handle_t *handle,
            int delayMs)
    {
        status_t status = NO_ERROR;
        sp<AudioCommand> command = new AudioCommand();
        command->mCommand = CREATE_AUDIO_PATCH;
        CreateAudioPatchData *data = new CreateAudioPatchData();
        data->mPatch = *patch;
        data->mHandle = *handle;
        command->mParam = data;
        command->mWaitStatus = true;
        ALOGV("AudioCommandThread() adding create patch delay %d", delayMs);
        status = sendCommand(command, delayMs);
        if (status == NO_ERROR) {
            *handle = data->mHandle;
        }
        return status;
    }
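The enqueue-and-wait shape of createAudioPatchCommand() can be modeled with a small standalone sketch. Everything in it is hypothetical (Command, CommandThread, the std::function payload). It assumes that mWaitStatus = true means the caller blocks until the command thread has written the status back, and it leaves out the delayMs handling entirely.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <functional>
#include <memory>
#include <mutex>
#include <thread>
#include <utility>

// Hypothetical command node: the work to run plus a slot for its result.
struct Command {
    std::function<int()> work;
    int status = 0;
    bool done = false;
};

// Hypothetical, simplified model of a command thread (no delay support).
class CommandThread {
public:
    CommandThread() : mWorker([this] { loop(); }) {}

    ~CommandThread() {
        {
            std::lock_guard<std::mutex> lock(mMutex);
            mExit = true;
        }
        mQueueCond.notify_all();
        mWorker.join();
    }

    // Enqueue a command and block until the worker has filled in its status.
    int sendCommand(std::function<int()> work) {
        auto cmd = std::make_shared<Command>();
        cmd->work = std::move(work);
        std::unique_lock<std::mutex> lock(mMutex);
        mQueue.push_back(cmd);
        mQueueCond.notify_one();
        mDoneCond.wait(lock, [&] { return cmd->done; });
        return cmd->status;
    }

private:
    void loop() {
        std::unique_lock<std::mutex> lock(mMutex);
        for (;;) {
            mQueueCond.wait(lock, [this] { return mExit || !mQueue.empty(); });
            if (mQueue.empty()) return;  // exit requested and nothing left to do
            std::shared_ptr<Command> cmd = mQueue.front();
            mQueue.pop_front();
            lock.unlock();  // run the command without holding the queue lock
            int status = cmd->work();
            lock.lock();
            cmd->status = status;
            cmd->done = true;
            mDoneCond.notify_all();
        }
    }

    std::mutex mMutex;
    std::condition_variable mQueueCond;
    std::condition_variable mDoneCond;
    std::deque<std::shared_ptr<Command>> mQueue;
    bool mExit = false;
    std::thread mWorker;  // declared last so the other members exist before it starts
};
```

A caller would write something like `commands.sendCommand([&] { handle = 42; return 0; })` and read the result once the call returns. This mirrors how createAudioPatchCommand() copies data->mHandle back into *handle only after sendCommand() reports NO_ERROR.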

case CREATE_AUDIO_PATCH: {

CreateAudioPatchData *data = (CreateAudioPatchData *)command->mParam.get();

ALOGV("AudioCommandThread() processing create audio patch");

sp af = AudioSystem::get_audio_flinger();

if (af == 0) {

command->mStatus = PERMISSION_DENIED;

} else {

command->mStatus = af->createAudioPatch(&data->mPatch, &data->mHandle);

}

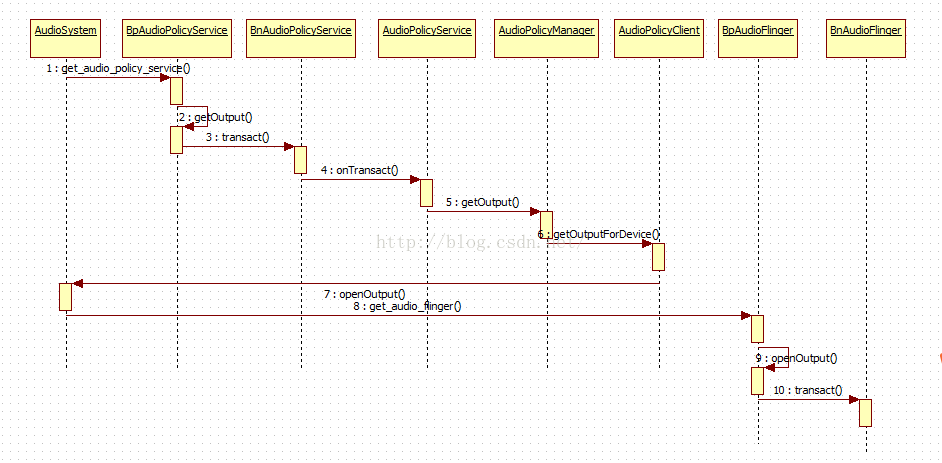

} break; 然后再以getOutput 的时序图看下,整个AudioPolicyService 的各种调用关系。

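In the Audio Framework, the entry point to both services is the AudioSystem class, so this sequence diagram starts from AudioSystem. The diagram also shows how AudioPolicyService gets to AudioFlinger: that path goes through the AudioSystem class as well.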
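The "fetch the service through one accessor, null-check, forward" shape that shows up in the snippets above can be sketched in isolation. System, Flinger and policyOpenOutput are hypothetical names, and kPermissionDenied is a placeholder value rather than the Android constant.

```cpp
#include <cassert>
#include <memory>
#include <mutex>
#include <utility>

constexpr int kNoError = 0;
constexpr int kPermissionDenied = -1;  // placeholder, not the Android value

// Hypothetical stand-in for the AudioFlinger interface.
struct Flinger {
    int openOutputCalls = 0;
    int openOutput() {
        ++openOutputCalls;
        return kNoError;
    }
};

// Hypothetical stand-in for AudioSystem: a single static accessor that every
// caller goes through to reach the service.
class System {
public:
    static void publish(std::shared_ptr<Flinger> flinger) {
        std::lock_guard<std::mutex> lock(guard());
        instance() = std::move(flinger);
    }

    static std::shared_ptr<Flinger> getFlinger() {
        std::lock_guard<std::mutex> lock(guard());
        return instance();  // empty when the service is not available
    }

private:
    static std::mutex& guard() {
        static std::mutex m;
        return m;
    }
    static std::shared_ptr<Flinger>& instance() {
        static std::shared_ptr<Flinger> f;
        return f;
    }
};

// Policy-side helper: fetch the service, bail out if it is missing, forward.
int policyOpenOutput() {
    std::shared_ptr<Flinger> af = System::getFlinger();
    if (!af) return kPermissionDenied;
    return af->openOutput();
}
```

Before anything is published, policyOpenOutput() takes the error branch, just as the real snippets return PERMISSION_DENIED when get_audio_flinger() yields nothing. Once a Flinger is published, the call is forwarded.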

That is basically the whole framework of AudioPolicyService. The concrete implementations in the code are business-logic details.