Building a Hadoop 3.2 Cluster with Docker on Ubuntu [Success]

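Contents

- Install Docker
- Pull the Ubuntu 16.04 image
- Install Java and Scala
- Configure the Alibaba Cloud apt mirror
- Install Java
- Install Scala
- Configure the Hadoop cluster
- Common tools: vim, ifconfig, ssh
- Download Hadoop
- Configure environment variables
- Edit the Hadoop configuration files
- 1. hadoop-env.sh
- 2. core-site.xml
- 3. hdfs-site.xml
- 4. mapred-site.xml
- 5. yarn-site.xml
- 6. workers
- Commit (save) the Docker image
- Create a virtual network
- Create the cluster
- Start the Hadoop cluster
- Check the status of each node with `jps`
- Check the file system status
- Test Hadoop
- Upload the file to Hadoop
- wordcount
- Show the results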

Reference: https://zhuanlan.zhihu.com/p/59758201

A genuinely conscientious article: it has a few small bugs, but it is extremely valuable as a reference!

Install Docker

Install Docker on Ubuntu 18.04.

Pull the Ubuntu 16.04 image

docker pull ubuntu:16.04

Install Java and Scala

Configure the Alibaba Cloud apt mirror

cp /etc/apt/sources.list /etc/apt/sources_init.list # back up the original list

echo "" > /etc/apt/sources.list # empty the list before writing the mirror entries

echo "deb http://mirrors.aliyun.com/ubuntu/ xenial main

deb-src http://mirrors.aliyun.com/ubuntu/ xenial main

deb http://mirrors.aliyun.com/ubuntu/ xenial-updates main

deb-src http://mirrors.aliyun.com/ubuntu/ xenial-updates main

deb http://mirrors.aliyun.com/ubuntu/ xenial universe

deb-src http://mirrors.aliyun.com/ubuntu/ xenial universe

deb http://mirrors.aliyun.com/ubuntu/ xenial-updates universe

deb-src http://mirrors.aliyun.com/ubuntu/ xenial-updates universe

deb http://mirrors.aliyun.com/ubuntu/ xenial-security main

deb-src http://mirrors.aliyun.com/ubuntu/ xenial-security main

deb http://mirrors.aliyun.com/ubuntu/ xenial-security universe

deb-src http://mirrors.aliyun.com/ubuntu/ xenial-security universe" >> /etc/apt/sources.list
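The multi-line echo above works, but its twelve entries follow a simple pattern: three suites times two components, each as a deb and a deb-src line. A minimal sketch, assuming bash, that writes the same entries in a loop; `write_aliyun_sources` is a hypothetical helper name, and it takes the target path as an argument so it can be tried on a scratch file before touching /etc/apt/sources.list.

```bash
#!/usr/bin/env bash
# Sketch: write the Aliyun mirror entries for Ubuntu 16.04 (xenial).
# write_aliyun_sources is a hypothetical helper, not part of apt.
write_aliyun_sources() {
  local target="$1"                          # e.g. /etc/apt/sources.list
  local mirror="http://mirrors.aliyun.com/ubuntu/"
  local suite comp
  : > "$target"                              # truncate the file first
  for suite in xenial xenial-updates xenial-security; do
    for comp in main universe; do
      printf 'deb %s %s %s\n' "$mirror" "$suite" "$comp" >> "$target"
      printf 'deb-src %s %s %s\n' "$mirror" "$suite" "$comp" >> "$target"
    done
  done
}
```

Inside the container you would run `write_aliyun_sources /etc/apt/sources.list` after the backup step, followed by `apt update`.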

Install Java

apt update

apt install openjdk-8-jdk

java -version # check that the installation succeeded

update-alternatives --config java # show the installation path
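The JAVA_HOME used later in /etc/profile is the JDK directory, not the path of the java binary that `update-alternatives` reports. A small sketch, assuming bash, that strips the trailing `bin/java` and the `jre` directory under which JDK 8 places it; `java_home_from_binary` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: derive JAVA_HOME from the full path of the java binary.
# java_home_from_binary is a hypothetical helper name.
java_home_from_binary() {
  local p="$1"          # e.g. the output of: readlink -f "$(command -v java)"
  p="${p%/bin/java}"    # drop the trailing /bin/java
  p="${p%/jre}"         # JDK 8 keeps java under jre/bin, so drop jre as well
  printf '%s\n' "$p"
}
```

For example, `java_home_from_binary "$(readlink -f "$(command -v java)")"` should print /usr/lib/jvm/java-8-openjdk-amd64 for the OpenJDK 8 package installed above.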

Install Scala

apt install scala

Configure the Hadoop cluster

Common tools: vim, ifconfig, ssh

apt install vim

apt install net-tools

apt-get install openssh-server openssh-client

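cd # go to the home directory

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa # RSA key pair with an empty passphrase

cat .ssh/id_rsa.pub >> .ssh/authorized_keys # trust our own key; every container built from this image shares it, so the nodes can SSH into each other

echo "service ssh start" >> ~/.bashrc # the container has no init system, so start sshd whenever a shell starts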
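Appending with `>>` adds a duplicate line every time a step is repeated, which happens easily while rebuilding the image. A sketch, assuming bash, of an idempotent append; `append_once` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: append a line to a file only if the exact line is not already there.
# append_once is a hypothetical helper name.
append_once() {
  local line="$1" file="$2"
  # -q: quiet, -x: match the whole line, -F: fixed string (no regex)
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"   # also creates the file if it is missing
  fi
}
```

`append_once "service ssh start" ~/.bashrc` and `append_once "$(cat ~/.ssh/id_rsa.pub)" ~/.ssh/authorized_keys` can stand in for the two `>>` lines above.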

Download Hadoop

wget http://mirrors.hust.edu.cn/apache/hadoop/common/hadoop-3.2.1/hadoop-3.2.1.tar.gz

tar -zxvf hadoop-3.2.1.tar.gz -C /usr/local/

cd /usr/local/

mv hadoop-3.2.1 hadoop

Configure environment variables

vim /etc/profile

Add the following:

#java

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

#hadoop

export HADOOP_HOME=/usr/local/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_LIBEXEC_DIR=$HADOOP_HOME/libexec

export JAVA_LIBRARY_PATH=$HADOOP_HOME/lib/native:$JAVA_LIBRARY_PATH

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HDFS_DATANODE_USER=root

export HDFS_DATANODE_SECURE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export HDFS_NAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

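While using Docker I found that /etc/profile is not executed automatically when a container starts (`docker run ... /bin/bash` starts a non-login shell, which reads ~/.bashrc but not /etc/profile), so I added this line to ~/.bashrc: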

source /etc/profile
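Startup problems often come down to one of these variables being unset or pointing at a directory that does not exist. A sketch, assuming bash, that checks the three variables the rest of this setup depends on; `check_hadoop_env` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: verify that the key variables are set and point at existing directories.
# check_hadoop_env is a hypothetical helper name.
check_hadoop_env() {
  local var dir status=0
  for var in JAVA_HOME HADOOP_HOME HADOOP_CONF_DIR; do
    dir="${!var}"                        # indirect expansion: the value of $var
    if [ -z "$dir" ]; then
      echo "$var is not set" >&2
      status=1
    elif [ ! -d "$dir" ]; then
      echo "$var=$dir is not a directory" >&2
      status=1
    fi
  done
  return "$status"
}
```

After `source /etc/profile`, running `check_hadoop_env && echo OK` should print OK; otherwise the problems are listed on stderr.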

Edit the Hadoop configuration files

cd $HADOOP_CONF_DIR

ls

capacity-scheduler.xml hadoop-user-functions.sh.example kms-log4j.properties ssl-client.xml.example

configuration.xsl hdfs-site.xml kms-site.xml ssl-server.xml.example

container-executor.cfg httpfs-env.sh log4j.properties user_ec_policies.xml.template

core-site.xml httpfs-log4j.properties mapred-env.cmd workers

hadoop-env.cmd httpfs-signature.secret mapred-env.sh yarn-env.cmd

hadoop-env.sh httpfs-site.xml mapred-queues.xml.template yarn-env.sh

hadoop-metrics2.properties kms-acls.xml mapred-site.xml yarn-site.xml

hadoop-policy.xml kms-env.sh shellprofile.d yarnservice-log4j.properties

The following files need to be modified:

1. hadoop-env.sh

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

2. core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://h01:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/data/hadoop/tmp</value>

</property>

</configuration>

Create the directory (`-p` also creates the missing /data/hadoop parent):

mkdir -p /data/hadoop/tmp
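Editing each XML file in vim is tedious when the image has to be rebuilt. A sketch, assuming bash, that writes core-site.xml with a heredoc; `write_core_site` is a hypothetical helper whose defaults are this article's values (master h01, port 9000, /data/hadoop/tmp). It uses `fs.defaultFS`, the current name of the key; Hadoop 3 still maps the deprecated `fs.default.name` onto it. The function overwrites the existing file, so keep a copy of the original if you want its comments.

```bash
#!/usr/bin/env bash
# Sketch: generate core-site.xml in the given configuration directory.
# write_core_site is a hypothetical helper name.
write_core_site() {
  local conf_dir="$1" master="${2:-h01}" tmp_dir="${3:-/data/hadoop/tmp}"
  cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://${master}:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>${tmp_dir}</value>
  </property>
</configuration>
EOF
}
```

Inside the container, `write_core_site "$HADOOP_CONF_DIR"` would produce the configuration shown above.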

3. hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/data/hadoop/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/data/hadoop/hdfs/data</value>

</property>

</configuration>

mkdir -p /data/hadoop/hdfs/name

mkdir -p /data/hadoop/hdfs/data
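Plain `mkdir` fails when /data/hadoop does not exist yet, which is the case in a fresh ubuntu:16.04 container. A sketch, assuming bash, that creates every local directory referenced by core-site.xml and hdfs-site.xml with `mkdir -p`, so parents are created and re-running is harmless; `make_hadoop_dirs` is a hypothetical helper, and its optional root argument exists only so it can be tried in a scratch directory.

```bash
#!/usr/bin/env bash
# Sketch: create the local directories used by core-site.xml and hdfs-site.xml.
# make_hadoop_dirs is a hypothetical helper name.
make_hadoop_dirs() {
  local root="${1:-}"   # empty by default, i.e. create the real paths under /
  local d
  for d in /data/hadoop/tmp /data/hadoop/hdfs/name /data/hadoop/hdfs/data; do
    mkdir -p "${root}${d}"   # -p creates missing parents and ignores existing dirs
  done
}
```

Run `make_hadoop_dirs` as root before committing the image so all three containers inherit the directories. Only h01 needs the name directory, but creating it everywhere does no harm.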

4. mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.application.classpath</name>

<value>

/usr/local/hadoop/etc/hadoop,

/usr/local/hadoop/share/hadoop/common/*,

/usr/local/hadoop/share/hadoop/common/lib/*,

/usr/local/hadoop/share/hadoop/hdfs/*,

/usr/local/hadoop/share/hadoop/hdfs/lib/*,

/usr/local/hadoop/share/hadoop/mapreduce/*,

/usr/local/hadoop/share/hadoop/mapreduce/lib/*,

/usr/local/hadoop/share/hadoop/yarn/*,

/usr/local/hadoop/share/hadoop/yarn/lib/*

</value>

</property>

</configuration>
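The classpath value above is nine paths following one pattern under the Hadoop installation directory. A sketch, assuming bash, that builds the same comma-separated string; `mapreduce_classpath` is a hypothetical helper name, and the `*` entries are kept as literal wildcards, exactly as in the XML above, rather than being expanded by the shell.

```bash
#!/usr/bin/env bash
# Sketch: build the mapreduce.application.classpath value for a Hadoop home.
# mapreduce_classpath is a hypothetical helper name.
mapreduce_classpath() {
  local home="${1:-/usr/local/hadoop}"
  local parts=("$home/etc/hadoop")
  local m
  for m in common hdfs mapreduce yarn; do
    # quoted, so the * stays literal instead of being globbed
    parts+=("$home/share/hadoop/$m/*" "$home/share/hadoop/$m/lib/*")
  done
  local IFS=,
  printf '%s\n' "${parts[*]}"   # "${parts[*]}" joins the array with the first char of IFS
}
```

With no argument it reproduces the list used in mapred-site.xml above, in the same order.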

5. yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>h01</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

6. workers

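A 3-node cluster with h01 as the master node. h01 is also listed as a worker, so it runs a DataNode and a NodeManager too.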

h01

h02

h03
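The workers file is simply one hostname per line. A sketch, assuming bash and this article's h01, h02, ... naming scheme, that generates it for any node count; `write_workers` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: write a workers file listing h01..hNN, one hostname per line.
# write_workers is a hypothetical helper name.
write_workers() {
  local file="$1" n="$2" i
  : > "$file"                             # start from an empty file
  for ((i = 1; i <= n; i++)); do
    printf 'h%02d\n' "$i" >> "$file"      # zero-padded: h01, h02, ...
  done
}
```

`write_workers "$HADOOP_CONF_DIR/workers" 3` reproduces the file above; a larger count only makes sense if you also start that many containers.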

At this point, the Hadoop cluster configuration is complete!

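Commit (save) the Docker image

Name the image hadoop:

docker ps -a # find the ID of the current container

docker commit -m "install hadoop" 8a2a24b54e6e hadoop # replace 8a2a24b54e6e with your own container ID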

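Create a virtual network

Docker networks provide DNS resolution. Use the following command to create a dedicated virtual network for the Hadoop cluster:

docker network create --driver=bridge hadoop

This creates a virtual bridge network named hadoop with built-in automatic DNS resolution. Because each container below uses the same value for its container name and hostname, h01, h02 and h03 can reach each other by name.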

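Create the cluster

`-h` sets the hostname, `--name` sets the container name, and `-p` maps ports (9870 is the NameNode web UI, 8088 is the YARN ResourceManager web UI).

Start 3 containers: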

docker run -it --network hadoop -h h01 --name h01 -p 9870:9870 -p 8088:8088 hadoop /bin/bash

docker run -it --network hadoop -h h02 --name h02 hadoop /bin/bash

docker run -it --network hadoop -h h03 --name h03 hadoop /bin/bash

Note: in each command above, the first hadoop is the network name and the second hadoop is the Docker image name.
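The three `docker run` commands differ only in the node number and in the ports published by the master. A sketch, assuming bash, that prints the commands for N nodes so they can be reviewed and pasted into separate terminals; `cluster_run_cmds` is a hypothetical helper, and it deliberately only prints, because each `-it` container occupies its own terminal.

```bash
#!/usr/bin/env bash
# Sketch: print one docker run command per node (h01 is the master).
# cluster_run_cmds is a hypothetical helper name; it runs nothing itself.
cluster_run_cmds() {
  local n="$1" net="${2:-hadoop}" image="${3:-hadoop}" i host
  for ((i = 1; i <= n; i++)); do
    host=$(printf 'h%02d' "$i")
    if [ "$i" -eq 1 ]; then
      # only the master publishes the NameNode (9870) and ResourceManager (8088) web UIs
      echo "docker run -it --network $net -h $host --name $host -p 9870:9870 -p 8088:8088 $image /bin/bash"
    else
      echo "docker run -it --network $net -h $host --name $host $image /bin/bash"
    fi
  done
}
```

`cluster_run_cmds 3` prints exactly the three commands used above.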

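Start the Hadoop cluster

After confirming that the environment variables are correct, format the NameNode and start all daemons from h01: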

root@h01:/# hdfs namenode -format

root@h01:/# start-all.sh

Starting namenodes on [h01]

h01: Warning: Permanently added 'h01,172.18.0.3' (ECDSA) to the list of known hosts.

Starting datanodes

h03: Warning: Permanently added 'h03,172.18.0.2' (ECDSA) to the list of known hosts.

h02: Warning: Permanently added 'h02,172.18.0.4' (ECDSA) to the list of known hosts.

h02: WARNING: /usr/local/hadoop/logs does not exist. Creating.

h03: WARNING: /usr/local/hadoop/logs does not exist. Creating.

Starting secondary namenodes [h01]

Starting resourcemanager

Starting nodemanagers

Check the status of each node with jps

root@h01:~# jps

336 NameNode

1665 Jps

996 ResourceManager

1141 NodeManager

662 SecondaryNameNode

475 DataNode

root@h02:/# jps

369 Jps

253 NodeManager

127 DataNode

root@h03:/# jps

388 Jps

252 NodeManager

126 DataNode
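With h01 listed in workers, it should run all five daemons, while h02 and h03 run only DataNode and NodeManager, as the output above shows. A sketch, assuming bash and awk, that checks a node's `jps` output against that expectation; `check_jps` and its `master`/`worker` role names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: check that jps output contains the daemons expected for a role.
# check_jps and the role names "master"/"worker" are hypothetical.
check_jps() {
  local role="$1" jps_output="$2" d missing=0
  local daemons=(DataNode NodeManager)
  if [ "$role" = master ]; then
    daemons+=(NameNode SecondaryNameNode ResourceManager)
  fi
  for d in "${daemons[@]}"; do
    # jps prints "<pid> <MainClass>"; compare the whole second field
    if ! awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' <<< "$jps_output"; then
      echo "missing: $d" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

On h01 run `check_jps master "$(jps)"`, and on h02 and h03 `check_jps worker "$(jps)"`; any missing daemon is reported on stderr and the function returns non-zero.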

Check the file system status

root@h01:~# hdfs dfsadmin -report

Configured Capacity: 127487361024 (118.73 GB)

Present Capacity: 79322148864 (73.87 GB)

DFS Remaining: 79322062848 (73.87 GB)

DFS Used: 86016 (84 KB)

DFS Used%: 0.00%

Replicated Blocks:

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

Low redundancy blocks with highest priority to recover: 0

Pending deletion blocks: 0

Erasure Coded Block Groups:

Low redundancy block groups: 0

Block groups with corrupt internal blocks: 0

Missing block groups: 0

Low redundancy blocks with highest priority to recover: 0

Pending deletion blocks: 0

-------------------------------------------------

Live datanodes (3):

Name: 172.18.0.2:9866 (h03.hadoop)

Hostname: h03

Decommission Status : Normal

Configured Capacity: 42495787008 (39.58 GB)

DFS Used: 28672 (28 KB)

Non DFS Used: 13865959424 (12.91 GB)

DFS Remaining: 26440687616 (24.62 GB)

DFS Used%: 0.00%

DFS Remaining%: 62.22%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Tue Apr 07 12:17:15 UTC 2020

Last Block Report: Tue Apr 07 11:26:12 UTC 2020

Num of Blocks: 0

Name: 172.18.0.3:9866 (h01)

Hostname: h01

Decommission Status : Normal

Configured Capacity: 42495787008 (39.58 GB)

DFS Used: 28672 (28 KB)

Non DFS Used: 13865959424 (12.91 GB)

DFS Remaining: 26440687616 (24.62 GB)

DFS Used%: 0.00%

DFS Remaining%: 62.22%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Tue Apr 07 12:17:15 UTC 2020

Last Block Report: Tue Apr 07 11:26:12 UTC 2020

Num of Blocks: 0

Name: 172.18.0.4:9866 (h02.hadoop)

Hostname: h02

Decommission Status : Normal

Configured Capacity: 42495787008 (39.58 GB)

DFS Used: 28672 (28 KB)

Non DFS Used: 13865959424 (12.91 GB)

DFS Remaining: 26440687616 (24.62 GB)

DFS Used%: 0.00%

DFS Remaining%: 62.22%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Tue Apr 07 12:17:15 UTC 2020

Last Block Report: Tue Apr 07 11:26:12 UTC 2020

Num of Blocks: 0

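Ha, all three containers show 24.62 GB remaining because they share the host's disk: that is the actual free space left on my poor old computer.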
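The `Live datanodes (3):` line is the quickest way to confirm that every worker has registered with the NameNode. A sketch, assuming bash and sed, that extracts that count from the report so a script can wait for it; `live_datanodes` is a hypothetical helper name and depends on the report format shown above.

```bash
#!/usr/bin/env bash
# Sketch: read `hdfs dfsadmin -report` on stdin and print the live DataNode count.
# live_datanodes is a hypothetical helper name.
live_datanodes() {
  # print only the number captured from a line like "Live datanodes (3):"
  sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*$/\1/p'
}
```

For example, `until [ "$(hdfs dfsadmin -report 2>/dev/null | live_datanodes)" = 3 ]; do sleep 5; done` waits until all three DataNodes are live.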

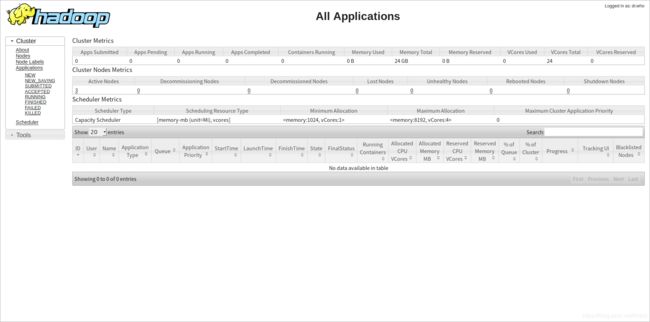

Open localhost:8088 in a browser to view the cluster status:

Test Hadoop

Run the built-in WordCount example, using the license file as the text whose word frequencies are counted:

cat $HADOOP_HOME/LICENSE.txt > file.txt

Upload the file to Hadoop

hadoop fs -ls /

hadoop fs -mkdir /input

hadoop fs -put file.txt /input

hadoop fs -ls /input

wordcount

root@h01:~# hadoop jar $HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar wordcount /input /output

2020-04-07 12:34:10,112 INFO client.RMProxy: Connecting to ResourceManager at h01/172.18.0.3:8032

2020-04-07 12:34:10,552 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/root/.staging/job_1586258781362_0001

2020-04-07 12:34:10,682 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2020-04-07 12:34:10,852 INFO input.FileInputFormat: Total input files to process : 1

2020-04-07 12:34:10,888 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2020-04-07 12:34:11,041 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2020-04-07 12:34:11,054 INFO mapreduce.JobSubmitter: number of splits:1

2020-04-07 12:34:11,268 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2020-04-07 12:34:11,301 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1586258781362_0001

2020-04-07 12:34:11,302 INFO mapreduce.JobSubmitter: Executing with tokens: []

2020-04-07 12:34:11,538 INFO conf.Configuration: resource-types.xml not found

2020-04-07 12:34:11,538 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

2020-04-07 12:34:11,825 INFO impl.YarnClientImpl: Submitted application application_1586258781362_0001

2020-04-07 12:34:11,912 INFO mapreduce.Job: The url to track the job: http://h01:8088/proxy/application_1586258781362_0001/

2020-04-07 12:34:11,913 INFO mapreduce.Job: Running job: job_1586258781362_0001

2020-04-07 12:34:20,096 INFO mapreduce.Job: Job job_1586258781362_0001 running in uber mode : false

2020-04-07 12:34:20,099 INFO mapreduce.Job: map 0% reduce 0%

2020-04-07 12:34:26,207 INFO mapreduce.Job: map 100% reduce 0%

2020-04-07 12:34:33,262 INFO mapreduce.Job: map 100% reduce 100%

2020-04-07 12:34:33,283 INFO mapreduce.Job: Job job_1586258781362_0001 completed successfully

2020-04-07 12:34:33,492 INFO mapreduce.Job: Counters: 54

File System Counters

FILE: Number of bytes read=46852

FILE: Number of bytes written=546105

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=150664

HDFS: Number of bytes written=35324

HDFS: Number of read operations=8

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

HDFS: Number of bytes read erasure-coded=0

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=3553

Total time spent by all reduces in occupied slots (ms)=3546

Total time spent by all map tasks (ms)=3553

Total time spent by all reduce tasks (ms)=3546

Total vcore-milliseconds taken by all map tasks=3553

Total vcore-milliseconds taken by all reduce tasks=3546

Total megabyte-milliseconds taken by all map tasks=3638272

Total megabyte-milliseconds taken by all reduce tasks=3631104

Map-Reduce Framework

Map input records=2814

Map output records=21904

Map output bytes=234035

Map output materialized bytes=46852

Input split bytes=95

Combine input records=21904

Combine output records=2981

Reduce input groups=2981

Reduce shuffle bytes=46852

Reduce input records=2981

Reduce output records=2981

Spilled Records=5962

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=94

CPU time spent (ms)=2080

Physical memory (bytes) snapshot=465178624

Virtual memory (bytes) snapshot=5319913472

Total committed heap usage (bytes)=429916160

Peak Map Physical memory (bytes)=284471296

Peak Map Virtual memory (bytes)=2651869184

Peak Reduce Physical memory (bytes)=180707328

Peak Reduce Virtual memory (bytes)=2668044288

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=150569

File Output Format Counters

Bytes Written=35324

Show the results

root@h01:~# hadoop fs -ls /output

Found 2 items

-rw-r--r-- 2 root supergroup 0 2020-04-07 12:34 /output/_SUCCESS

-rw-r--r-- 2 root supergroup 35324 2020-04-07 12:34 /output/part-r-00000

root@h01:~# hadoop fs -cat /output/part-r-00000
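To sanity-check the job, the same counts can be computed locally. A sketch, assuming bash and coreutils, with `local_wordcount` as a hypothetical helper: the example WordCount splits lines with Java's StringTokenizer (spaces, tabs, CR, LF, form feeds), so the sketch splits on the same characters, and it sorts with `LC_ALL=C` to approximate the byte order of Hadoop's Text keys.

```bash
#!/usr/bin/env bash
# Sketch: local word count in the "word<TAB>count" format of part-r-00000.
# local_wordcount is a hypothetical helper name.
local_wordcount() {
  tr -s ' \t\r\f' '\n' < "$1" \
    | grep -v '^$' \
    | LC_ALL=C sort \
    | uniq -c \
    | awk '{ printf "%s\t%s\n", $2, $1 }'
  # tr: one token per line; grep: drop empty lines; sort: byte order;
  # uniq -c: "count word"; awk: swap to "word<TAB>count"
}
```

`diff <(hadoop fs -cat /output/part-r-00000) <(local_wordcount file.txt) && echo match` should print match if these assumptions hold for your input.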