Running the TensorFlow version of faster-rcnn on Colab


Procedure

I recorded the whole process as a video and uploaded it to Bilibili:

https://www.bilibili.com/video/BV1iK4y1k7yK

The concrete code implementation follows.

Mount Google Drive

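Create a folder named coco in your Drive to hold files later. You can create it with the following code (the path is quoted because "My Drive" contains a space):

```
# Create the coco folder in Google Drive
!mkdir -p "/content/drive/My Drive/coco/"
```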
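Mounting Drive can be done from Colab's file panel or from code. A minimal sketch, assuming you are inside a Colab notebook: `google.colab.drive.mount` mounts Drive at /content/drive, and `os.makedirs` creates the coco folder without any shell quoting. `mount_drive_and_make_dir` is a hypothetical helper name.

```python
import os

def mount_drive_and_make_dir(folder="/content/drive/My Drive/coco"):
    """Mount Google Drive (only possible inside Colab), then create `folder` if missing."""
    try:
        from google.colab import drive  # this module only exists inside Colab
        drive.mount("/content/drive")
    except ImportError:
        print("google.colab not available; skipping the Drive mount")
    # os.makedirs receives the path as one string, so the space in "My Drive" is harmless
    os.makedirs(folder, exist_ok=True)
    return folder

# In Colab: mount_drive_and_make_dir()
```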

Get the faster-rcnn code

```
# Get the TensorFlow version of the faster-rcnn code
!git clone https://github.com/endernewton/tf-faster-rcnn.git
```

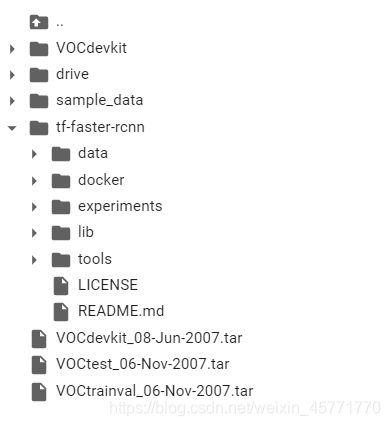

The result is shown in the figure.

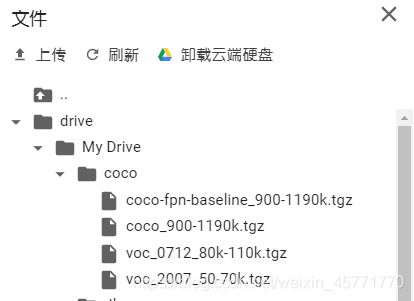

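Download the four archives coco-fpn-baseline_900-1190k.tgz, coco_900-1190k.tgz, voc_0712_80k-110k.tgz and voc_2007_50-70k.tgz and save them to Google Drive. My save path is '/content/drive/My Drive/coco/' (if you can't get the files, send me a private message and I'll send you a link).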

// 获取VOC压缩文件

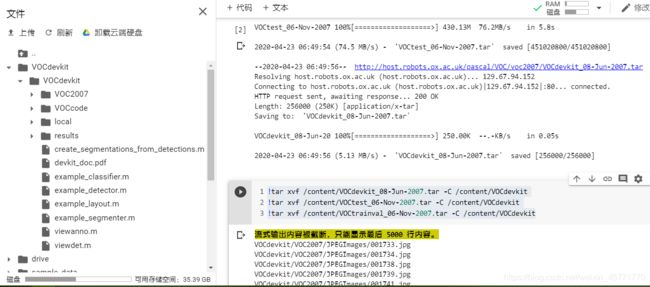

!wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtrainval_06-Nov-2007.tar

!wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtest_06-Nov-2007.tar

!wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCdevkit_08-Jun-2007.tar

When the downloads finish, the file panel contains the three archives.

Create a folder named VOCdevkit:

```
# Create the VOCdevkit folder
!mkdir -p /content/VOCdevkit/
```

The result is shown in the figure.

Extract the three downloaded archives into the VOCdevkit folder:

```
# Extract the three archives into VOCdevkit
!tar xvf /content/VOCdevkit_08-Jun-2007.tar -C /content/VOCdevkit
!tar xvf /content/VOCtest_06-Nov-2007.tar -C /content/VOCdevkit
!tar xvf /content/VOCtrainval_06-Nov-2007.tar -C /content/VOCdevkit
```
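If you prefer to stay in Python, the same extraction can be sketched with the standard-library `tarfile` module, which also handles the .tgz model archives used later. `extract_archives` is a hypothetical helper; the paths in the usage comment are the ones from the commands above.

```python
import os
import tarfile

def extract_archives(archives, dest):
    """Extract each .tar/.tgz archive into dest, like `tar xvf ARCHIVE -C dest`."""
    os.makedirs(dest, exist_ok=True)
    for path in archives:
        # mode "r" auto-detects uncompressed, gzip, bz2 and xz archives
        with tarfile.open(path, "r") as tar:
            tar.extractall(dest)
        print("extracted", path)

# In Colab:
# extract_archives(["/content/VOCdevkit_08-Jun-2007.tar",
#                   "/content/VOCtest_06-Nov-2007.tar",
#                   "/content/VOCtrainval_06-Nov-2007.tar"], "/content/VOCdevkit")
```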

Create the default folder:

```
# Create the default folder
!mkdir -p /content/tf-faster-rcnn/output/res101/voc_2007_trainval+voc_2012_trainval/default
```

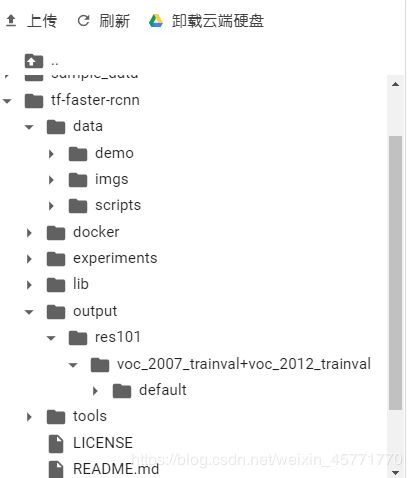

The result is shown in the figure.

Copy the VOCdevkit folder into the tf-faster-rcnn folder:

```
# Copy VOCdevkit into tf-faster-rcnn
!cp -r /content/VOCdevkit/VOCdevkit /content/tf-faster-rcnn/
```

The result is shown in the figure.

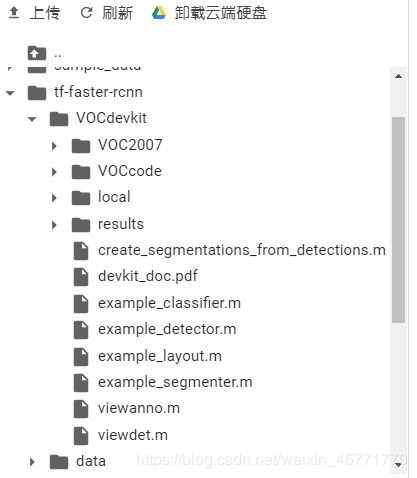

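Check where the four archives downloaded earlier (coco-fpn-baseline_900-1190k.tgz, coco_900-1190k.tgz, voc_0712_80k-110k.tgz and voc_2007_50-70k.tgz) are saved. (coco is just the name I chose; you can use another.)

```
# Check the downloaded archives
import os
# Change into this folder
os.chdir('/content/drive/My Drive/coco/')
!ls
```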

The result is shown in the figure.

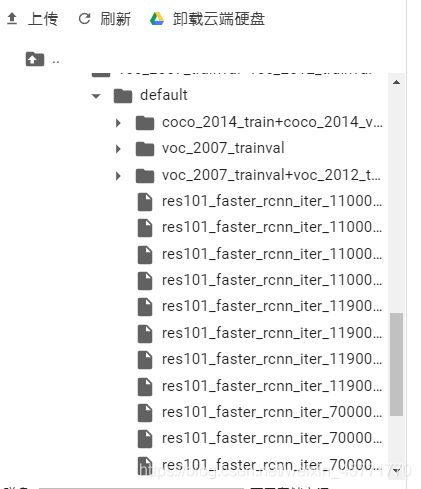

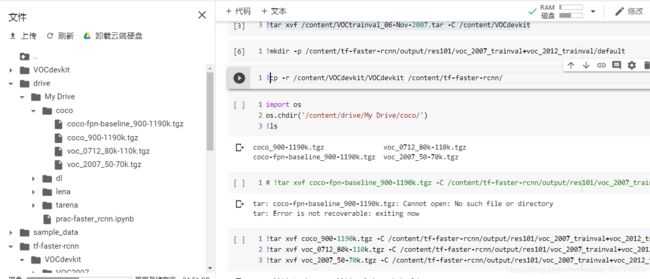
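Instead of reading the `!ls` output by eye, a small check can list which of the four archives are missing. A minimal sketch; `missing_archives` is a hypothetical helper and the file names are the four listed in this post.

```python
import os

# The four archive names listed in this post
EXPECTED_ARCHIVES = [
    "coco-fpn-baseline_900-1190k.tgz",
    "coco_900-1190k.tgz",
    "voc_0712_80k-110k.tgz",
    "voc_2007_50-70k.tgz",
]

def missing_archives(folder, expected=EXPECTED_ARCHIVES):
    """Return the expected archive names that are not present in folder, in list order."""
    present = set(os.listdir(folder))
    return [name for name in expected if name not in present]

# In Colab: print(missing_archives("/content/drive/My Drive/coco/"))
```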

Extract the last three archives into the default folder created earlier (why only three? because the first two are redundant):

```
# Extract the pretrained models
!tar xvf coco_900-1190k.tgz -C /content/tf-faster-rcnn/output/res101/voc_2007_trainval+voc_2012_trainval/default/
!tar xvf voc_0712_80k-110k.tgz -C /content/tf-faster-rcnn/output/res101/voc_2007_trainval+voc_2012_trainval/default/
!tar xvf voc_2007_50-70k.tgz -C /content/tf-faster-rcnn/output/res101/voc_2007_trainval+voc_2012_trainval/default/
```

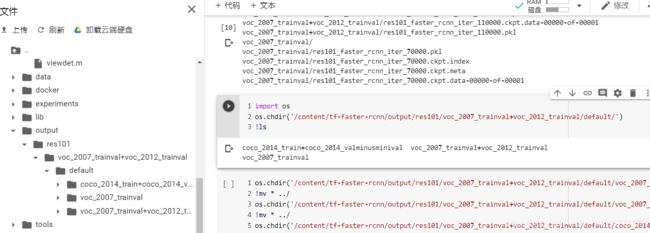

Enter the directory and list its contents:

```
# Enter the directory and list it
import os
os.chdir('/content/tf-faster-rcnn/output/res101/voc_2007_trainval+voc_2012_trainval/default/')
!ls
```

Move all files from the three folders under default up into default itself; afterwards the three original folders are empty:

```
# Move everything out of the three subfolders into default/
os.chdir('/content/tf-faster-rcnn/output/res101/voc_2007_trainval+voc_2012_trainval/default/voc_2007_trainval/')
!mv * ../
os.chdir('/content/tf-faster-rcnn/output/res101/voc_2007_trainval+voc_2012_trainval/default/voc_2007_trainval+voc_2012_trainval/')
!mv * ../
os.chdir('/content/tf-faster-rcnn/output/res101/voc_2007_trainval+voc_2012_trainval/default/coco_2014_train+coco_2014_valminusminival/')
!mv * ../
```
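The three `os.chdir` + `!mv * ../` pairs can also be written as one loop. A minimal sketch with `shutil.move`, assuming the three folder names used above; `flatten_subfolders` is a hypothetical helper. One difference: `os.listdir` also returns hidden (dot) files, which the shell glob `*` skips.

```python
import os
import shutil

def flatten_subfolders(parent, subfolders):
    """Move every entry of each subfolder up into parent, leaving the subfolders empty."""
    for sub in subfolders:
        src_dir = os.path.join(parent, sub)
        for name in os.listdir(src_dir):
            shutil.move(os.path.join(src_dir, name), os.path.join(parent, name))

# In Colab:
# flatten_subfolders(
#     "/content/tf-faster-rcnn/output/res101/voc_2007_trainval+voc_2012_trainval/default",
#     ["voc_2007_trainval",
#      "voc_2007_trainval+voc_2012_trainval",
#      "coco_2014_train+coco_2014_valminusminival"])
```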

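Create the testfigs folder, where the demo will save its output images, and check the result:

```
# Create testfigs and list the repository root
!mkdir -p /content/tf-faster-rcnn/testfigs/
os.chdir("/content/tf-faster-rcnn")
!ls
```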

At this point everything that needs to be created has been created (time to slap myself awake). Let's continue with the remaining steps.

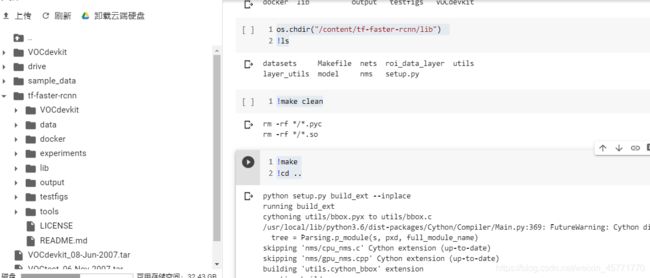
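Before moving on, it may be worth confirming that the checkpoint demo.py looks for is really in place. demo.py joins `output/<net>/<dataset>/default/<checkpoint>` and refuses to run unless the `.meta` file exists. The sketch below rebuilds that path from a copy of the `NETS`/`DATASETS` tables in demo.py, anchored at the repository root; `checkpoint_prefix` and `checkpoint_ready` are hypothetical helpers.

```python
import os

# Copied from the NETS / DATASETS tables in demo.py
NETS = {"vgg16": "vgg16_faster_rcnn_iter_70000.ckpt",
        "res101": "res101_faster_rcnn_iter_110000.ckpt"}
DATASETS = {"pascal_voc": "voc_2007_trainval",
            "pascal_voc_0712": "voc_2007_trainval+voc_2012_trainval"}

def checkpoint_prefix(repo_root, net="res101", dataset="pascal_voc_0712"):
    """Build the checkpoint prefix the way demo.py does, but rooted at repo_root."""
    return os.path.join(repo_root, "output", net, DATASETS[dataset], "default", NETS[net])

def checkpoint_ready(repo_root, net="res101", dataset="pascal_voc_0712"):
    """True if the <prefix>.meta file that demo.py checks for exists."""
    return os.path.isfile(checkpoint_prefix(repo_root, net, dataset) + ".meta")

# In Colab: print(checkpoint_ready("/content/tf-faster-rcnn"))
```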

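Run the following code. It compiles the Cython and CUDA modules in the lib folder (the Makefile there runs setup.py) and then returns to the tf-faster-rcnn directory:

```
# Build the Cython/CUDA modules in lib
os.chdir("/content/tf-faster-rcnn/lib")
!ls
!make clean
!make
# Return to the repository root ("!cd .." would only change a throwaway subshell)
os.chdir("/content/tf-faster-rcnn")
```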

The result is shown in the figure.

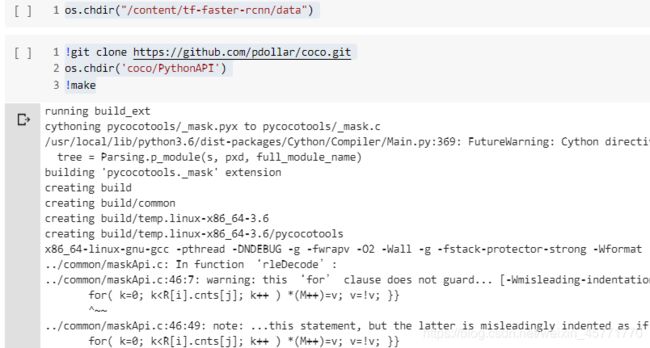
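Why these steps switch directories with `os.chdir`: in Colab each `!` line runs in its own child shell, so a `!cd` there does not move the notebook itself, while `os.chdir` (or IPython's `%cd` magic) does. A small check, with hypothetical helper names, that reproduces the child-shell behaviour via `subprocess`:

```python
import os
import subprocess

def shell_cd_persists():
    """True if `cd ..` run in a child shell changes this Python process's cwd."""
    before = os.getcwd()
    # Like a `!cd ..` line in Colab, this runs in a separate child shell process
    subprocess.run("cd ..", shell=True, check=True)
    return os.getcwd() != before

def python_chdir_persists(target):
    """True if os.chdir(target) changes this process's cwd; the old cwd is restored after."""
    before = os.getcwd()
    os.chdir(target)
    changed = os.getcwd() == os.path.realpath(target)
    os.chdir(before)
    return changed

print(shell_cd_persists())         # False: the child shell's cd is lost
print(python_chdir_persists("/"))  # True
```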

Run the following code to enter the data folder, clone the COCO API (coco.git) and build its Python API:

```
# Clone and build the COCO Python API
os.chdir("/content/tf-faster-rcnn/data")
!git clone https://github.com/pdollar/coco.git
os.chdir('coco/PythonAPI')
!make
```

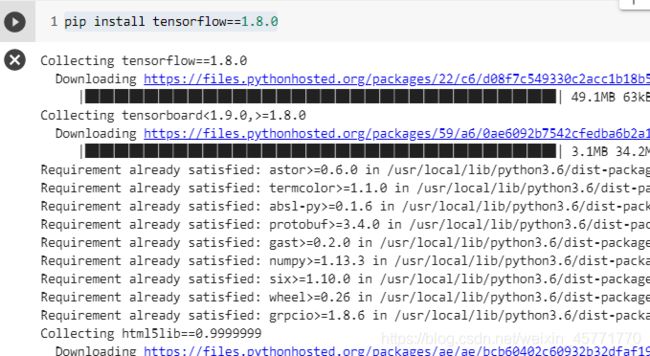

Install TensorFlow 1.8:

```
# Install TensorFlow 1.8
!pip install tensorflow==1.8.0
```
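demo.py uses `tf.Session`, `tf.ConfigProto` and `tf.train.Saver`, which exist at the top level only in TensorFlow 1.x (TensorFlow 2.x moved them under `tf.compat.v1`), so it can help to confirm which version pip actually left installed. A small sketch using the standard-library `importlib.metadata` (Python 3.8+); `installed_version` and `is_tf1` are hypothetical helpers.

```python
from importlib import metadata

def installed_version(package):
    """Return the installed version string of package, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None

def is_tf1(version):
    """True for a 1.x version string, the API generation demo.py is written against."""
    return version is not None and version.split(".")[0] == "1"

tf_version = installed_version("tensorflow")
print(tf_version, is_tf1(tf_version))
```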

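Edit the `-arch` parameter in /content/tf-faster-rcnn/lib/setup.py to match the GPU model Colab assigned you; I changed it to `-arch=sm_60` (compute capability 6.0, i.e. a Tesla P100). If you can't find the parameter, simply replace the whole file with the code below. Because `make` in lib builds from this setup.py, run `make clean` and `make` in lib again after the change.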

```python
# --------------------------------------------------------
# Fast R-CNN
# Copyright (c) 2015 Microsoft
# Licensed under The MIT License [see LICENSE for details]
# Written by Ross Girshick
# --------------------------------------------------------

import os
from os.path import join as pjoin
import numpy as np
from distutils.core import setup
from distutils.extension import Extension
from Cython.Distutils import build_ext


def find_in_path(name, path):
    "Find a file in a search path"
    # adapted from http://code.activestate.com/recipes/52224-find-a-file-given-a-search-path/
    for dir in path.split(os.pathsep):
        binpath = pjoin(dir, name)
        if os.path.exists(binpath):
            return os.path.abspath(binpath)
    return None


def locate_cuda():
    """Locate the CUDA environment on the system

    Returns a dict with keys 'home', 'nvcc', 'include', and 'lib64'
    and values giving the absolute path to each directory.

    Starts by looking for the CUDAHOME env variable. If not found, everything
    is based on finding 'nvcc' in the PATH.
    """

    # first check if the CUDAHOME env variable is in use
    if 'CUDAHOME' in os.environ:
        home = os.environ['CUDAHOME']
        nvcc = pjoin(home, 'bin', 'nvcc')
    else:
        # otherwise, search the PATH for NVCC
        default_path = pjoin(os.sep, 'usr', 'local', 'cuda', 'bin')
        nvcc = find_in_path('nvcc', os.environ['PATH'] + os.pathsep + default_path)
        if nvcc is None:
            raise EnvironmentError('The nvcc binary could not be '
                                   'located in your $PATH. Either add it to your path, or set $CUDAHOME')
        home = os.path.dirname(os.path.dirname(nvcc))

    cudaconfig = {'home': home, 'nvcc': nvcc,
                  'include': pjoin(home, 'include'),
                  'lib64': pjoin(home, 'lib64')}
    for k, v in cudaconfig.items():
        if not os.path.exists(v):
            raise EnvironmentError('The CUDA %s path could not be located in %s' % (k, v))

    return cudaconfig


CUDA = locate_cuda()

# Obtain the numpy include directory. This logic works across numpy versions.
try:
    numpy_include = np.get_include()
except AttributeError:
    numpy_include = np.get_numpy_include()


def customize_compiler_for_nvcc(self):
    """inject deep into distutils to customize how the dispatch
    to gcc/nvcc works.

    If you subclass UnixCCompiler, it's not trivial to get your subclass
    injected in, and still have the right customizations (i.e.
    distutils.sysconfig.customize_compiler) run on it. So instead of going
    the OO route, I have this. Note, it's kind of like a weird functional
    subclassing going on."""

    # tell the compiler it can process .cu files
    self.src_extensions.append('.cu')

    # save references to the default compiler_so and _compile methods
    default_compiler_so = self.compiler_so
    super = self._compile

    # now redefine the _compile method. This gets executed for each
    # object but distutils doesn't have the ability to change compilers
    # based on source extension: we add it.
    def _compile(obj, src, ext, cc_args, extra_postargs, pp_opts):
        print(extra_postargs)
        if os.path.splitext(src)[1] == '.cu':
            # use the cuda for .cu files
            self.set_executable('compiler_so', CUDA['nvcc'])
            # use only a subset of the extra_postargs, which are 1-1 translated
            # from the extra_compile_args in the Extension class
            postargs = extra_postargs['nvcc']
        else:
            postargs = extra_postargs['gcc']

        super(obj, src, ext, cc_args, postargs, pp_opts)
        # reset the default compiler_so, which we might have changed for cuda
        self.compiler_so = default_compiler_so

    # inject our redefined _compile method into the class
    self._compile = _compile


# run the customize_compiler
class custom_build_ext(build_ext):
    def build_extensions(self):
        customize_compiler_for_nvcc(self.compiler)
        build_ext.build_extensions(self)


ext_modules = [
    Extension(
        "utils.cython_bbox",
        ["utils/bbox.pyx"],
        extra_compile_args={'gcc': ["-Wno-cpp", "-Wno-unused-function"]},
        include_dirs=[numpy_include]
    ),
    Extension(
        "nms.cpu_nms",
        ["nms/cpu_nms.pyx"],
        extra_compile_args={'gcc': ["-Wno-cpp", "-Wno-unused-function"]},
        include_dirs=[numpy_include]
    ),
    Extension('nms.gpu_nms',
        ['nms/nms_kernel.cu', 'nms/gpu_nms.pyx'],
        library_dirs=[CUDA['lib64']],
        libraries=['cudart'],
        language='c++',
        runtime_library_dirs=[CUDA['lib64']],
        # this syntax is specific to this build system
        # we're only going to use certain compiler args with nvcc and not with gcc
        # the implementation of this trick is in customize_compiler_for_nvcc() above
        extra_compile_args={'gcc': ["-Wno-unused-function"],
                            'nvcc': ['-arch=sm_60',
                                     '--ptxas-options=-v',
                                     '-c',
                                     '--compiler-options',
                                     "'-fPIC'"]},
        include_dirs=[numpy_include, CUDA['include']]
    )
]

setup(
    name='tf_faster_rcnn',
    ext_modules=ext_modules,
    # inject our custom trigger
    cmdclass={'build_ext': custom_build_ext},
)
```
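If you'd rather not hunt for the flag by hand, the edit can be scripted. A sketch under stated assumptions: the GPU table covers only a few GPUs Colab is commonly reported to assign (compute capabilities per NVIDIA's specs), the GPU name is the one printed by `nvidia-smi --query-gpu=name --format=csv,noheader`, and `arch_for_gpu`/`set_nvcc_arch` are hypothetical helpers that rewrite every `-arch=sm_XX` in the setup.py text with a regex.

```python
import re

# Compute capabilities of a few GPUs (assumption: ones Colab commonly assigns)
GPU_ARCH = {
    "K80": "sm_37",
    "P100": "sm_60",
    "T4": "sm_75",
    "V100": "sm_70",
    "A100": "sm_80",
}

def arch_for_gpu(gpu_name):
    """Map an nvidia-smi GPU name such as 'Tesla T4' to an nvcc -arch value."""
    for model, arch in GPU_ARCH.items():
        if model in gpu_name:
            return arch
    raise ValueError("no -arch entry for GPU: " + gpu_name)

def set_nvcc_arch(setup_py_text, arch):
    """Replace every -arch=sm_XX flag in the setup.py source with -arch=<arch>."""
    return re.sub(r"-arch=sm_\d+", "-arch=" + arch, setup_py_text)

# Example: the nvcc line from setup.py, retargeted for a T4
print(set_nvcc_arch("'nvcc': ['-arch=sm_60',", arch_for_gpu("Tesla T4")))
# -> 'nvcc': ['-arch=sm_75',

# In Colab (then rebuild with `make clean` and `make` in lib):
# path = "/content/tf-faster-rcnn/lib/setup.py"
# with open(path) as f:
#     text = f.read()
# with open(path, "w") as f:
#     f.write(set_nvcc_arch(text, arch_for_gpu("Tesla T4")))
```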

Replace the contents of tools/demo.py with the code below. I won't explain the code; I'm a bit worn out after writing this much.

```python
#!/usr/bin/env python

# --------------------------------------------------------
# Tensorflow Faster R-CNN
# Licensed under The MIT License [see LICENSE for details]
# Written by Xinlei Chen, based on code from Ross Girshick
# --------------------------------------------------------

"""
Demo script showing detections in sample images.

See README.md for installation instructions before running.
"""
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import _init_paths
from model.config import cfg
from model.test import im_detect
from model.nms_wrapper import nms

from utils.timer import Timer
import tensorflow as tf
import matplotlib.pyplot as plt
import numpy as np
import os, cv2
import argparse

from nets.vgg16 import vgg16
from nets.resnet_v1 import resnetv1

CLASSES = ('__background__',
           'aeroplane', 'bicycle', 'bird', 'boat',
           'bottle', 'bus', 'car', 'cat', 'chair',
           'cow', 'diningtable', 'dog', 'horse',
           'motorbike', 'person', 'pottedplant',
           'sheep', 'sofa', 'train', 'tvmonitor')

NETS = {'vgg16': ('vgg16_faster_rcnn_iter_70000.ckpt',), 'res101': ('res101_faster_rcnn_iter_110000.ckpt',)}
DATASETS = {'pascal_voc': ('voc_2007_trainval',), 'pascal_voc_0712': ('voc_2007_trainval+voc_2012_trainval',)}


def vis_detections(im, class_name, dets, ax, thresh=0.5):
    """Draw detected bounding boxes."""
    inds = np.where(dets[:, -1] >= thresh)[0]
    if len(inds) == 0:
        return

    # im = im[:, :, (2, 1, 0)]
    # fig, ax = plt.subplots(figsize=(12, 12))
    # ax.imshow(im, aspect='equal')
    for i in inds:
        bbox = dets[i, :4]
        score = dets[i, -1]

        ax.add_patch(
            plt.Rectangle((bbox[0], bbox[1]),
                          bbox[2] - bbox[0],
                          bbox[3] - bbox[1], fill=False,
                          edgecolor='red', linewidth=3.5)
        )
        ax.text(bbox[0], bbox[1] - 2,
                '{:s} {:.3f}'.format(class_name, score),
                bbox=dict(facecolor='blue', alpha=0.5),
                fontsize=14, color='white')

    ax.set_title(('{} detections with '
                  'p({} | box) >= {:.1f}').format(class_name, class_name,
                                                  thresh),
                 fontsize=14)
    # plt.axis('off')
    # plt.tight_layout()
    # plt.draw()


def demo(sess, net, image_name):
    """Detect object classes in an image using pre-computed object proposals."""

    # Load the demo image
    im_file = os.path.join(cfg.DATA_DIR, 'demo', image_name)
    im = cv2.imread(im_file)

    # Detect all object classes and regress object bounds
    timer = Timer()
    timer.tic()
    scores, boxes = im_detect(sess, net, im)
    timer.toc()
    print('Detection took {:.3f}s for {:d} object proposals'.format(timer.total_time, boxes.shape[0]))

    # Visualize detections for each class
    CONF_THRESH = 0.8
    NMS_THRESH = 0.3
    im = im[:, :, (2, 1, 0)]
    fig, ax = plt.subplots(figsize=(12, 12))
    ax.imshow(im, aspect='equal')
    for cls_ind, cls in enumerate(CLASSES[1:]):
        cls_ind += 1  # because we skipped background
        cls_boxes = boxes[:, 4*cls_ind:4*(cls_ind + 1)]
        cls_scores = scores[:, cls_ind]
        dets = np.hstack((cls_boxes,
                          cls_scores[:, np.newaxis])).astype(np.float32)
        keep = nms(dets, NMS_THRESH)
        dets = dets[keep, :]
        vis_detections(im, cls, dets, ax, thresh=CONF_THRESH)
    plt.axis('off')
    plt.tight_layout()
    plt.draw()


def parse_args():
    """Parse input arguments."""
    parser = argparse.ArgumentParser(description='Tensorflow Faster R-CNN demo')
    parser.add_argument('--net', dest='demo_net', help='Network to use [vgg16 res101]',
                        choices=NETS.keys(), default='res101')
    parser.add_argument('--dataset', dest='dataset', help='Trained dataset [pascal_voc pascal_voc_0712]',
                        choices=DATASETS.keys(), default='pascal_voc_0712')
    args = parser.parse_args()

    return args


if __name__ == '__main__':
    cfg.TEST.HAS_RPN = True  # Use RPN for proposals
    args = parse_args()

    # model path
    demonet = args.demo_net
    dataset = args.dataset
    tfmodel = os.path.join('output', demonet, DATASETS[dataset][0], 'default',
                           NETS[demonet][0])

    if not os.path.isfile(tfmodel + '.meta'):
        raise IOError(('{:s} not found.\nDid you download the proper networks from '
                       'our server and place them properly?').format(tfmodel + '.meta'))

    # set config
    tfconfig = tf.ConfigProto(allow_soft_placement=True)
    tfconfig.gpu_options.allow_growth = True

    # init session
    sess = tf.Session(config=tfconfig)
    # load network
    if demonet == 'vgg16':
        net = vgg16()
    elif demonet == 'res101':
        net = resnetv1(num_layers=101)
    else:
        raise NotImplementedError
    net.create_architecture("TEST", 21,
                            tag='default', anchor_scales=[8, 16, 32])
    saver = tf.train.Saver()
    saver.restore(sess, tfmodel)

    print('Loaded network {:s}'.format(tfmodel))

    im_names = ['000456.jpg', '000542.jpg', '001150.jpg',
                '001763.jpg', '004545.jpg']
    for im_name in im_names:
        print('~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~')
        print('Demo for data/demo/{}'.format(im_name))
        demo(sess, net, im_name)
        plt.savefig("/content/tf-faster-rcnn/testfigs/" + im_name)

    # plt.show()
```
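For each class the demo keeps only the boxes that survive `nms(dets, NMS_THRESH)`. In this repo `nms` comes from `model.nms_wrapper`, which dispatches to the compiled `nms.cpu_nms`/`nms.gpu_nms` modules that setup.py builds. As an illustration only, here is a pure NumPy sketch of greedy non-maximum suppression in the style of the classic Fast R-CNN CPU version (including its `+ 1` pixel convention for widths and heights); `greedy_nms` is a hypothetical name, not the repo's implementation.

```python
import numpy as np

def greedy_nms(dets, thresh):
    """dets: (N, 5) array of [x1, y1, x2, y2, score]. Returns indices of kept boxes."""
    x1, y1, x2, y2, scores = dets[:, 0], dets[:, 1], dets[:, 2], dets[:, 3], dets[:, 4]
    areas = (x2 - x1 + 1) * (y2 - y1 + 1)
    order = scores.argsort()[::-1]  # highest score first
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        # intersection of the current best box with every remaining box
        xx1 = np.maximum(x1[i], x1[rest])
        yy1 = np.maximum(y1[i], y1[rest])
        xx2 = np.minimum(x2[i], x2[rest])
        yy2 = np.minimum(y2[i], y2[rest])
        inter = np.maximum(0.0, xx2 - xx1 + 1) * np.maximum(0.0, yy2 - yy1 + 1)
        iou = inter / (areas[i] + areas[rest] - inter)
        # drop boxes that overlap the kept box by more than thresh
        order = rest[iou <= thresh]
    return keep

dets = np.array([[0, 0, 10, 10, 0.9],
                 [1, 1, 11, 11, 0.8],
                 [50, 50, 60, 60, 0.7]], dtype=np.float32)
print(greedy_nms(dets, 0.3))  # [0, 2]: box 1 overlaps box 0 with IoU of about 0.70
```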

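Last step: run demo.py with the code below. The results are saved in testfigs; my Bilibili video shows how to download them to your computer. demo.py looks for the model under `output/...` relative to the current directory, so switch to the repository root first.

```
# Run the demo from the repository root
import os
os.chdir("/content/tf-faster-rcnn")
!python3 tools/demo.py
```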

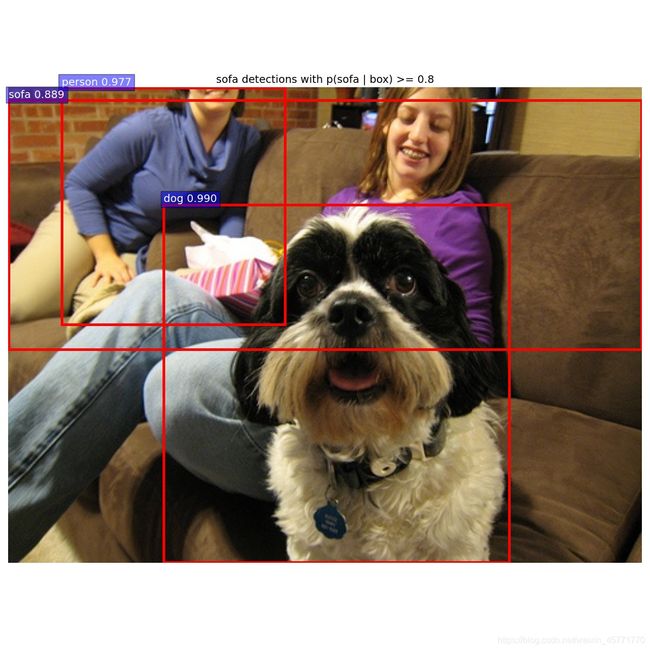

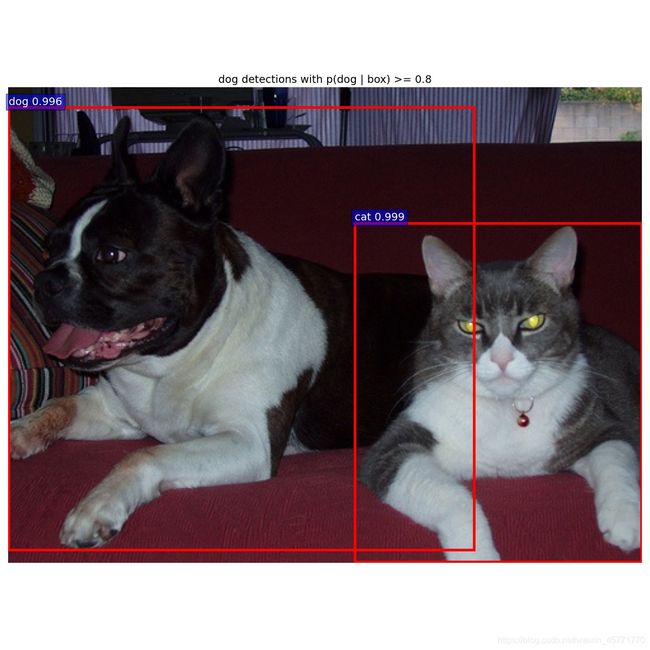

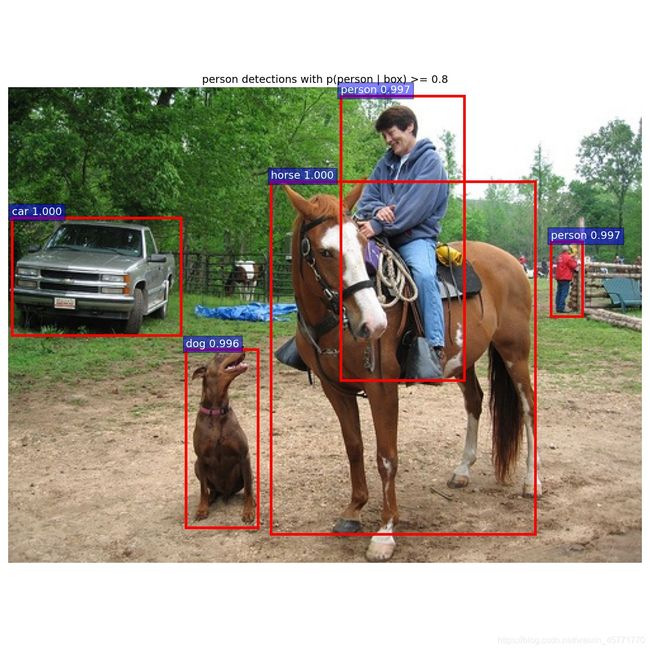

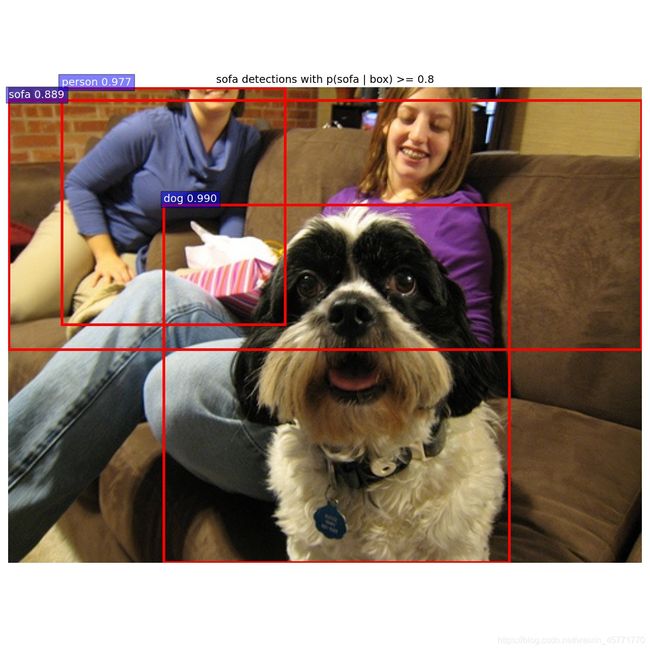
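The Bilibili video covers downloading the results; as a code alternative, here is a minimal sketch assuming the five image names hard-coded in demo.py. It checks that every output exists in testfigs, zips the folder with `shutil.make_archive`, and, inside Colab, the zip can be handed to `google.colab.files.download`. `zip_results` is a hypothetical helper.

```python
import os
import shutil

# The five image names hard-coded in demo.py
DEMO_IMAGES = ["000456.jpg", "000542.jpg", "001150.jpg", "001763.jpg", "004545.jpg"]

def zip_results(folder, expected=DEMO_IMAGES):
    """Check that every demo output exists in folder, then zip it; returns the .zip path."""
    missing = [n for n in expected if not os.path.isfile(os.path.join(folder, n))]
    if missing:
        raise FileNotFoundError("missing outputs: " + ", ".join(missing))
    # the archive is written next to the folder (folder + ".zip"), not inside it
    return shutil.make_archive(folder.rstrip("/"), "zip", root_dir=folder)

# In Colab:
# from google.colab import files
# files.download(zip_results("/content/tf-faster-rcnn/testfigs"))
```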

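To sum up: I wrote this in so much detail to help beginners get faster-rcnn running on Colab. Feel free to get in touch (a private message is fine). The final results are shown in the figures above.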