Running a Hadoop MapReduce program locally on Windows

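1. Download Hadoop and install it locally on Windows

URL: https://archive.apache.org/dist/hadoop/core/hadoop-2.6.0/hadoop-2.6.0.tar.gz

2. Extract the archive, then set the environment variables

Create a new variable HADOOP_HOME with the value D:\software\hadoop-2.6.0

Add %HADOOP_HOME%\bin and %HADOOP_HOME%\sbin to Path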

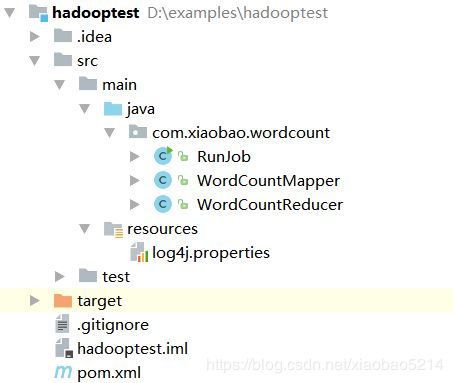

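3. Sample code

Project structure

pom.xml

    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>com.xiaobao</groupId>
        <artifactId>hadooptest</artifactId>
        <version>0.0.1-SNAPSHOT</version>
        <packaging>jar</packaging>

        <dependencies>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-client</artifactId>
                <version>2.6.0</version>
            </dependency>
        </dependencies>
    </project>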

log4j.properties

    log4j.rootLogger = debug,stdout
    log4j.appender.stdout = org.apache.log4j.ConsoleAppender
    log4j.appender.stdout.Target = System.out
    log4j.appender.stdout.layout = org.apache.log4j.PatternLayout
    log4j.appender.stdout.layout.ConversionPattern = [%-5p] %d{yyyy-MM-dd HH:mm:ss,SSS} method:%l%n%m%n

WordCountMapper.java

    package com.xiaobao.wordcount;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    // Emits (word, 1) for every whitespace-separated word in an input line.
    public class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            String[] words = value.toString().split("\\s+");
            for (String word : words) {
                // split() yields an empty token for blank lines or leading whitespace; skip it
                if (word.isEmpty()) {
                    continue;
                }
                context.write(new Text(word), new IntWritable(1));
            }
        }
    }

WordCountReducer.java

    package com.xiaobao.wordcount;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    import java.io.IOException;

    // Sums the 1s emitted by the mapper for each word.
    public class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

        @Override
        protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
            int sum = 0;
            for (IntWritable value : values) {
                sum += value.get();
            }
            context.write(key, new IntWritable(sum));
        }
    }

RunJob.java

    package com.xiaobao.wordcount;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class RunJob {

        public static void main(String[] args) throws Exception {
            // With no cluster configuration on the classpath, the job runs locally
            Configuration configuration = new Configuration();
            FileSystem fs = FileSystem.get(configuration);

            Job job = Job.getInstance(configuration);
            job.setJarByClass(RunJob.class);
            job.setJobName("wordCount");

            job.setMapperClass(WordCountMapper.class);
            job.setReducerClass(WordCountReducer.class);

            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);
            // Declare the reducer's output types as well
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // No drive letter: on the local file system these paths resolve
            // against the current drive, e.g. D:\wordcount\input
            FileInputFormat.addInputPath(job, new Path("/wordcount/input"));

            // The output directory must not exist when the job starts, so remove it first
            Path outPath = new Path("/wordcount/output");
            if (fs.exists(outPath)) {
                fs.delete(outPath, true);
            }
            FileOutputFormat.setOutputPath(job, outPath);

            boolean completion = job.waitForCompletion(true);
            if (completion) {
                System.out.println("Job completed");
            }
        }
    }
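RunJob removes /wordcount/output before submitting the job because FileOutputFormat rejects an output directory that already exists. As a rough illustration of what a recursive delete such as fs.delete(outPath, true) amounts to on a local disk, here is a minimal sketch in plain java.nio. The class name OutputDirCleaner and the method deleteRecursively are hypothetical and not part of the Hadoop API; the demo works on a temporary directory rather than on D:\wordcount\output.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class OutputDirCleaner {

    // Hypothetical helper: deletes dir and everything below it.
    // Returns false if dir did not exist, true once it has been removed.
    public static boolean deleteRecursively(Path dir) throws IOException {
        if (!Files.exists(dir)) {
            return false;
        }
        List<Path> paths;
        try (Stream<Path> walk = Files.walk(dir)) {
            // Reverse order puts children before their parent directories
            paths = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
        }
        for (Path path : paths) {
            Files.delete(path);
        }
        return true;
    }

    public static void main(String[] args) throws IOException {
        // Build a throwaway directory that looks like a job output folder
        Path out = Files.createTempDirectory("wordcount-output");
        Files.write(out.resolve("_SUCCESS"), new byte[0]);
        Files.write(out.resolve("part-r-00000"), "hello\t2\n".getBytes());

        System.out.println("deleted: " + deleteRecursively(out));
        System.out.println("still exists: " + Files.exists(out));
    }
}
```

In the real job, keep using the Hadoop FileSystem API as RunJob does, since it also works when the configuration points at HDFS instead of the local disk.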

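4. Next, create the folders D:\wordcount\input and D:\wordcount\output (on the drive where the project is located), and put a file named words.txt in the input folder with the following content:

    hello world
    hello hadoop
    hadoop yes
    hadoop no

5. Then run the main method of RunJob. It fails with the following error: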

    [ERROR] 2018-11-13 10:12:43,981 method:org.apache.hadoop.util.Shell.getWinUtilsPath(Shell.java:373)
    Failed to locate the winutils binary in the hadoop binary path
    java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.
        at org.apache.hadoop.util.Shell.getQualifiedBinPath(Shell.java:355)
        at org.apache.hadoop.util.Shell.getWinUtilsPath(Shell.java:370)
        at org.apache.hadoop.util.Shell.<clinit>(Shell.java:363)
        at org.apache.hadoop.util.StringUtils.<clinit>(StringUtils.java:79)
        at org.apache.hadoop.security.Groups.parseStaticMapping(Groups.java:104)
        at org.apache.hadoop.security.Groups.<init>(Groups.java:86)
        at org.apache.hadoop.security.Groups.<init>(Groups.java:66)
        at org.apache.hadoop.security.Groups.getUserToGroupsMappingService(Groups.java:280)
        at org.apache.hadoop.security.UserGroupInformation.initialize(UserGroupInformation.java:271)
        at org.apache.hadoop.security.UserGroupInformation.ensureInitialized(UserGroupInformation.java:248)
        at org.apache.hadoop.security.UserGroupInformation.loginUserFromSubject(UserGroupInformation.java:763)
        at org.apache.hadoop.security.UserGroupInformation.getLoginUser(UserGroupInformation.java:748)
        at org.apache.hadoop.security.UserGroupInformation.getCurrentUser(UserGroupInformation.java:621)
        at org.apache.hadoop.fs.FileSystem$Cache$Key.<init>(FileSystem.java:2753)
        at org.apache.hadoop.fs.FileSystem$Cache$Key.<init>(FileSystem.java:2745)
        at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2611)
        at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:370)
        at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:169)
        at com.xiaobao.wordcount.RunJob.main(RunJob.java:24)

The error message in the log says that winutils.exe cannot be found, so this file has to be downloaded. Make sure it matches your Hadoop version, otherwise the error persists.

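Download URL: https://github.com/steveloughran/winutils. Download the archive from GitHub, then copy the files from the bin directory of the matching version into the bin directory of your local Hadoop installation, replacing any existing files.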
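Before rerunning the job, it can help to confirm that the JVM actually sees a Hadoop home directory and that winutils.exe is in its bin folder. The `null\bin\winutils.exe` in the error suggests that no Hadoop home was visible to the process at all, which can happen if the IDE was started before HADOOP_HOME was set. The sketch below is plain Java with no Hadoop dependency. It assumes the lookup order used by Hadoop 2.x (the `hadoop.home.dir` system property first, then the HADOOP_HOME environment variable); the class WinutilsCheck and its methods are hypothetical names used only for illustration.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class WinutilsCheck {

    // Assumed lookup order: system property hadoop.home.dir wins,
    // otherwise fall back to the HADOOP_HOME environment variable.
    public static String resolveHadoopHome(String systemProperty, String envVariable) {
        return systemProperty != null ? systemProperty : envVariable;
    }

    // Location where winutils.exe is expected: <hadoop home>\bin\winutils.exe
    public static Path winutilsPath(String hadoopHome) {
        return Paths.get(hadoopHome, "bin", "winutils.exe");
    }

    public static void main(String[] args) {
        String home = resolveHadoopHome(
                System.getProperty("hadoop.home.dir"),
                System.getenv("HADOOP_HOME"));
        if (home == null) {
            System.out.println("Neither hadoop.home.dir nor HADOOP_HOME is set");
            return;
        }
        Path winutils = winutilsPath(home);
        System.out.println(winutils + (Files.isRegularFile(winutils) ? " found" : " is missing"));
    }
}
```

If the check reports that neither value is set even though the environment variable was created in step 2, restarting the IDE or terminal so it picks up the new environment is usually worth trying first.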

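6. Run the main method again. This time it succeeds.

The D:\wordcount\output folder now contains files such as _SUCCESS and part-r-00000. _SUCCESS indicates that the job completed successfully, and part-r-00000 holds the word count results: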

    hadoop 3
    hello 2
    no 1
    world 1
    yes 1
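The counts above can be cross-checked without Hadoop. The following is a minimal sketch in plain Java that applies the same logic as the mapper and reducer (split each line on whitespace, then sum per word) to the four lines of words.txt. The class LocalWordCount is a hypothetical name for illustration; a TreeMap is used so the words come out in sorted order, similar to the sorted keys in part-r-00000.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class LocalWordCount {

    // Counts whitespace-separated words across all lines, sorted by word.
    public static Map<String, Integer> count(List<String> lines) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            for (String word : line.split("\\s+")) {
                if (word.isEmpty()) {
                    continue; // blank lines or leading whitespace yield an empty token
                }
                counts.merge(word, 1, Integer::sum); // "reduce" step: add 1 per occurrence
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        // Same content as words.txt from step 4
        List<String> lines = Arrays.asList(
                "hello world",
                "hello hadoop",
                "hadoop yes",
                "hadoop no");
        count(lines).forEach((word, n) -> System.out.println(word + "\t" + n));
    }
}
```

Running it prints the same five words with counts 3, 2, 1, 1 and 1, which matches the job output.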