Pytorch

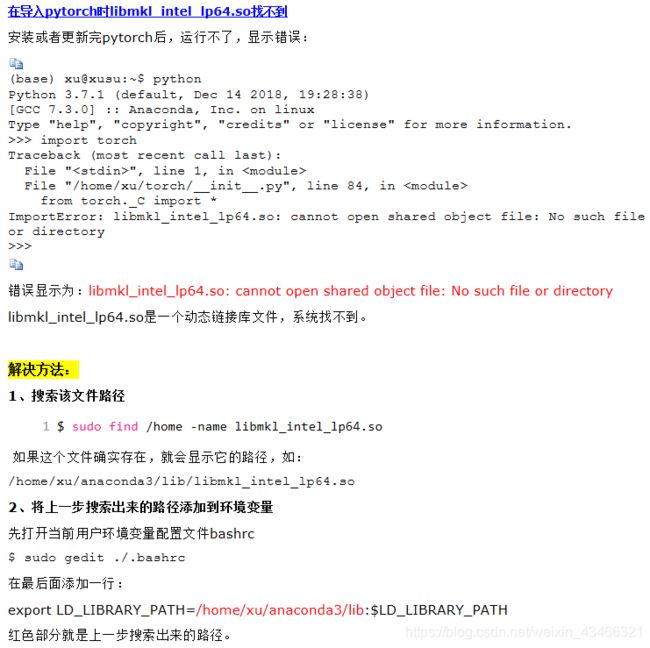

一、动态库链接文件找不到

二、Pytorch的Dataloader报错

三、pytorch bug

四、常用函数功能使用

1.view

view(a,b)中第一个参数a代表目标张量的行数,b代表列数,为了简便起见,也可以只指定第一个参数a,b这个参数设置成-1,函数会自动计算对应的列数。

import torch

number_1 = torch.randn(2,3)

print(number_1.view(3,-1))

tensor([[1.0506, -0.5875],

[-1.2477, 0.0635],

[0.8997, 0.1551]])

2.squeeze

其功能是进行维度缩减(维度为1的删除)。Squeeze(a,b)中第一个参数a代表传入的张量,b代表要缩减的维数。如果第二个参数没有指定,则默认删除所有维度为1的维度。

number_2 = torch.randn(2, 1)

print(number_2)

3.torch.sum(input, dim, keepdim=False, out=None) → Tensor

返回新的张量,其中包括输入张量input中指定维度dim中每行的和。

若keepdim值为True,则在输出张量中,除了被操作的dim维度值降为1,其它维度与输入张量input相同。否则,dim维度相当于被执行torch.squeeze()维度压缩操作,导致此维度消失,最终输出张量会比输入张量少一个维度。

参数:

例子:

a = torch.rand(4, 4)

a

0.6117 0.2066 0.1838 0.5582

0.7751 0.5258 0.8898 0.4822

0.8238 0.4217 0.2266 0.2178

0.2121 0.6614 0.4635 0.0368

[torch.FloatTensor of size 4x4]

torch.sum(a, 1, True)

1.5602

2.6728

1.6900

1.3737

[torch.FloatTensor of size 4x1]

4.torch.prod(input, dim, keepdim=False, out=None) → Tensor

返回新的张量,其中包括输入张量input中指定维度dim中每行的乘积。

若keepdim值为True,则在输出张量中,除了被操作的dim维度值降为1,其它维度与输入张量input相同。否则,dim维度相当于被执行torch.squeeze()维度压缩操作,导致此维度消失,最终输出张量会比输入张量少一个维度。

参数:

例子:

a = torch.randn(4, 2)

a

-1.8626 -0.5725

-0.6924 -0.8738

-0.2659 0.3540

-0.4500 1.4647

[torch.FloatTensor of size 4x2]

torch.prod(a, 1, True)

1.0664

0.6050

-0.0941

-0.6592

[torch.FloatTensor of size 4x1]

5.线性插值

torch.lerp(star,end,weight) → Tensor

基于weight对输入的两个张量start与end逐个元素计算线性插值,结果返回至输出张量。

返回结果是:

参数:

例子:

start = torch.arange(1, 5)

end = torch.Tensor(4).fill_(10)

start

1

2

3

4

[torch.FloatTensor of size 4]

end

10

10

10

10

[torch.FloatTensor of size 4]

torch.lerp(start, end, 0.5)

5.5000

6.0000

6.5000

7.0000

[torch.FloatTensor of size 4]

来自

6.pytorch:torch.clamp()

2018年09月11日 16:02:43 大雄没有叮当猫 阅读数 7215

版权声明:微信公众号:数据挖掘与机器学习进阶之路。本文为博主原创文章,未经博主允许不得转载。 https://blog.csdn.net/u013230189/article/details/82627375

torch.clamp(input, min, max, out=None) → Tensor

将输入input张量每个元素的夹紧到区间 [min,max][min,max],并返回结果到一个新张量。

操作定义如下:

- | min, if x_i < min

- y_i = | x_i, if min <= x_i <= max

- | max, if x_i > max

如果输入是FloatTensor or DoubleTensor类型,则参数min max 必须为实数,否则须为整数。【译注:似乎并非如此,无关输入类型,min, max取整数、实数皆可。】

参数:

- input (Tensor) – 输入张量

- min (Number) – 限制范围下限

- max (Number) – 限制范围上限

- out (Tensor, optional) – 输出张量

代码示例如下:

- a=torch.randint(low=0,high=10,size=(10,1))

- print(a)

- a=torch.clamp(a,3,9)

- print(a)

输出如下

- tensor([[9.],

- [3.],

- [0.],

- [4.],

- [4.],

- [2.],

- [4.],

- [1.],

- [2.],

- [9.]])

- tensor([[9.],

- [3.],

- [3.],

- [4.],

- [4.],

- [3.],

- [4.],

- [3.],

- [3.],

- [9.]])

来自

来自

五、损失

| class MarginRankingLoss(_Loss): |

|

|

|

r"""Creates a criterion that measures the loss given |

|

|

inputs :math:`x1`, :math:`x2`, two 1D mini-batch `Tensors`, |

|

|

and a label 1D mini-batch tensor :math:`y` (containing 1 or -1). |

|

|

If :math:`y = 1` then it assumed the first input should be ranked higher |

|

|

(have a larger value) than the second input, and vice-versa for :math:`y = -1`. |

|

|

The loss function for each sample in the mini-batch is: |

|

|

.. math:: |

|

|

\text{loss}(x, y) = \max(0, -y * (x1 - x2) + \text{margin}) |

|

|

Args: |

|

|

margin (float, optional): Has a default value of :math:`0`. |

|

|

size_average (bool, optional): Deprecated (see :attr:`reduction`). By default, |

|

|

the losses are averaged over each loss element in the batch. Note that for |

|

|

some losses, there are multiple elements per sample. If the field :attr:`size_average` |

|

|

is set to ``False``, the losses are instead summed for each minibatch. Ignored |

|

|

when reduce is ``False``. Default: ``True`` |

|

|

reduce (bool, optional): Deprecated (see :attr:`reduction`). By default, the |

|

|

losses are averaged or summed over observations for each minibatch depending |

|

|

on :attr:`size_average`. When :attr:`reduce` is ``False``, returns a loss per |

|

|

batch element instead and ignores :attr:`size_average`. Default: ``True`` |

|

|

reduction (string, optional): Specifies the reduction to apply to the output: |

|

|

``'none'`` | ``'mean'`` | ``'sum'``. ``'none'``: no reduction will be applied, |

|

|

``'mean'``: the sum of the output will be divided by the number of |

|

|

elements in the output, ``'sum'``: the output will be summed. Note: :attr:`size_average` |

|

|

and :attr:`reduce` are in the process of being deprecated, and in the meantime, |

|

|

specifying either of those two args will override :attr:`reduction`. Default: ``'mean'`` |

|

|

Shape: |

|

|

- Input: :math:`(N, D)` where `N` is the batch size and `D` is the size of a sample. |

|

|

- Target: :math:`(N)` |

|

|

- Output: scalar. If :attr:`reduction` is ``'none'``, then :math:`(N)`. |

|

|

""" |

|

|

__constants__ = ['margin', 'reduction'] |

|

|

def __init__(self, margin=0., size_average=None, reduce=None, reduction='mean'): |

|

|

super(MarginRankingLoss, self).__init__(size_average, reduce, reduction) |

|

|

self.margin = margin |

|

|

def forward(self, input1, input2, target): |

|

|

return F.margin_ranking_loss(input1, input2, target, margin=self.margin, reduction=self.reduction) |

| class CrossEntropyLoss(_WeightedLoss): |

|

|

|

r"""This criterion combines :func:`nn.LogSoftmax` and :func:`nn.NLLLoss` in one single class. |

|

|

It is useful when training a classification problem with `C` classes. |

|

|

If provided, the optional argument :attr:`weight` should be a 1D `Tensor` |

|

|

assigning weight to each of the classes. |

|

|

This is particularly useful when you have an unbalanced training set. |

|

|

The `input` is expected to contain raw, unnormalized scores for each class. |

|

|

`input` has to be a Tensor of size either :math:`(minibatch, C)` or |

|

|

:math:`(minibatch, C, d_1, d_2, ..., d_K)` |

|

|

with :math:`K \geq 1` for the `K`-dimensional case (described later). |

|

|

This criterion expects a class index in the range :math:`[0, C-1]` as the |

|

|

`target` for each value of a 1D tensor of size `minibatch`; if `ignore_index` |

|

|

is specified, this criterion also accepts this class index (this index may not |

|

|

necessarily be in the class range). |

|

|

The loss can be described as: |

|

|

.. math:: |

|

|

\text{loss}(x, class) = -\log\left(\frac{\exp(x[class])}{\sum_j \exp(x[j])}\right) |

|

|

= -x[class] + \log\left(\sum_j \exp(x[j])\right) |

|

|

or in the case of the :attr:`weight` argument being specified: |

|

|

.. math:: |

|

|

\text{loss}(x, class) = weight[class] \left(-x[class] + \log\left(\sum_j \exp(x[j])\right)\right) |

|

|

The losses are averaged across observations for each minibatch. |

|

|

Can also be used for higher dimension inputs, such as 2D images, by providing |

|

|

an input of size :math:`(minibatch, C, d_1, d_2, ..., d_K)` with :math:`K \geq 1`, |

|

|

where :math:`K` is the number of dimensions, and a target of appropriate shape |

|

|

(see below). |

|

|

Args: |

|

|

weight (Tensor, optional): a manual rescaling weight given to each class. |

|

|

If given, has to be a Tensor of size `C` |

|

|

size_average (bool, optional): Deprecated (see :attr:`reduction`). By default, |

|

|

the losses are averaged over each loss element in the batch. Note that for |

|

|

some losses, there are multiple elements per sample. If the field :attr:`size_average` |

|

|

is set to ``False``, the losses are instead summed for each minibatch. Ignored |

|

|

when reduce is ``False``. Default: ``True`` |

|

|

ignore_index (int, optional): Specifies a target value that is ignored |

|

|

and does not contribute to the input gradient. When :attr:`size_average` is |

|

|

``True``, the loss is averaged over non-ignored targets. |

|

|

reduce (bool, optional): Deprecated (see :attr:`reduction`). By default, the |

|

|

losses are averaged or summed over observations for each minibatch depending |

|

|

on :attr:`size_average`. When :attr:`reduce` is ``False``, returns a loss per |

|

|

batch element instead and ignores :attr:`size_average`. Default: ``True`` |

|

|

reduction (string, optional): Specifies the reduction to apply to the output: |

|

|

``'none'`` | ``'mean'`` | ``'sum'``. ``'none'``: no reduction will be applied, |

|

|

``'mean'``: the sum of the output will be divided by the number of |

|

|

elements in the output, ``'sum'``: the output will be summed. Note: :attr:`size_average` |

|

|

and :attr:`reduce` are in the process of being deprecated, and in the meantime, |

|

|

specifying either of those two args will override :attr:`reduction`. Default: ``'mean'`` |

|

|

Shape: |

|

|

- Input: :math:`(N, C)` where `C = number of classes`, or |

|

|

:math:`(N, C, d_1, d_2, ..., d_K)` with :math:`K \geq 1` |

|

|

in the case of `K`-dimensional loss. |

|

|

- Target: :math:`(N)` where each value is :math:`0 \leq \text{targets}[i] \leq C-1`, or |

|

|

:math:`(N, d_1, d_2, ..., d_K)` with :math:`K \geq 1` in the case of |

|

|

K-dimensional loss. |

|

|

- Output: scalar. |

|

|

If :attr:`reduction` is ``'none'``, then the same size as the target: |

|

|

:math:`(N)`, or |

|

|

:math:`(N, d_1, d_2, ..., d_K)` with :math:`K \geq 1` in the case |

|

|

of K-dimensional loss. |

|

|

Examples:: |

|

|

>>> loss = nn.CrossEntropyLoss() |

|

|

>>> input = torch.randn(3, 5, requires_grad=True) |

|

|

>>> target = torch.empty(3, dtype=torch.long).random_(5) |

|

|

>>> output = loss(input, target) |

|

|

>>> output.backward() |

|

|

""" |

|

|

__constants__ = ['weight', 'ignore_index', 'reduction'] |

|

|

def __init__(self, weight=None, size_average=None, ignore_index=-100, |

|

|

reduce=None, reduction='mean'): |

|

|

super(CrossEntropyLoss, self).__init__(weight, size_average, reduce, reduction) |

|

|

self.ignore_index = ignore_index |

|

|

def forward(self, input, target): |

|

|

return F.cross_entropy(input, target, weight=self.weight, |

|

|

ignore_index=self.ignore_index, reduction=self.reduction) |

来自

[loss]Triphard loss优雅的写法

之前一直自己手写各种triphard,triplet损失函数, 写的比较暴力,然后今天一个学长给我在github上看了一个别人的triphard的写法,一开始没看懂,用的pytorch函数没怎么见过,看懂了之后, 被惊艳到了。。因此在此记录一下,以及详细注释一下

class TripletLoss(nn.Module):

def __init__(self, margin=0.3):

super(TripletLoss, self).__init__()

self.margin = margin

self.ranking_loss = nn.MarginRankingLoss(margin=margin) # 获得一个简单的距离triplet函数

def forward(self, inputs, labels):

n = inputs.size(0) # 获取batch_size

# Compute pairwise distance, replace by the official when merged

# 每个数平方后, 进行加和(通过keepdim保持2维),再扩展成nxn维

dist = torch.pow(inputs, 2).sum(dim=1, keepdim=True).expand(n, n)

dist = dist + dist.t() # 这样每个dis[i][j]代表的是第i个特征与第j个特征的平方的和

# 然后减去2倍的 第i个特征*第j个特征 从而通过完全平方式得到 (a-b)^2

dist.addmm_(1, -2, inputs, inputs.t())

dist = dist.clamp(min=1e-12).sqrt()# 然后开方

# For each anchor, find the hardest positive and negative

# 这里dist[i][j] = 1代表i和j的label相同, =0代表i和j的label不相同

mask = labels.expand(n, n).eq(labels.expand(n, n).t())

dist_ap, dist_an = [], []

for i in range(n):

dist_ap.append(dist[i][mask[i]].max().unsqueeze(0)) # 在i与所有有相同label的j的距离中找一个最大的

dist_an.append(dist[i][mask[i] == 0].min().unsqueeze(0)) # 在i与所有不同label的j的距离找一个最小的

dist_ap = torch.cat(dist_ap) # 将list里的tensor拼接成新的tensor

dist_an = torch.cat(dist_an)

# Compute ranking hinge loss

y = torch.ones_like(dist_an) # 声明一个与dist_an相同shape的全1tensor

loss = self.ranking_loss(dist_an, dist_ap, y)

return loss

来自

六、MGN

来自

七、加载数据

加载自己的数据集

对于torchvision.datasets中有两个不同的类,分别为DatasetFolder和ImageFolder,ImageFolder是继承自DatasetFolder。

下面我们通过源码来看一看folder文件中DatasetFolder和ImageFolder分别做了些什么

import torch.utils.data as data

from PIL import Image

import os

import os.path

def has_file_allowed_extension(filename, extensions): //检查输入是否是规定的扩展名

"""Checks if a file is an allowed extension.

Args:

filename (string): path to a file

Returns:

bool: True if the filename ends with a known image extension

"""

filename_lower = filename.lower()

return any(filename_lower.endswith(ext) for ext in extensions)

def find_classes(dir):

classes = [d for d in os.listdir(dir) if os.path.isdir(os.path.join(dir, d))] //获取root目录下所有的文件夹名称

classes.sort()

class_to_idx = {classes[i]: i for i in range(len(classes))} //生成类别名称与类别id的对应Dictionary

return classes, class_to_idx

def make_dataset(dir, class_to_idx, extensions):

images = []

dir = os.path.expanduser(dir)// 将~和~user转化为用户目录,对参数中出现~进行处理

for target in sorted(os.listdir(dir)):

d = os.path.join(dir, target)

if not os.path.isdir(d):

continue

for root, _, fnames in sorted(os.walk(d)): //os.work包含三个部分,root代表该目录路径 _代表该路径下的文件夹名称集合,fnames代表该路径下的文件名称集合

for fname in sorted(fnames):

if has_file_allowed_extension(fname, extensions):

path = os.path.join(root, fname)

item = (path, class_to_idx[target])

images.append(item) //生成(训练样本图像目录,训练样本所属类别)的元组

return images //返回上述元组的列表

class DatasetFolder(data.Dataset):

"""A generic data loader where the samples are arranged in this way: ::

root/class_x/xxx.ext

root/class_x/xxy.ext

root/class_x/xxz.ext

root/class_y/123.ext

root/class_y/nsdf3.ext

root/class_y/asd932_.ext

Args:

root (string): Root directory path.

loader (callable): A function to load a sample given its path.

extensions (list[string]): A list of allowed extensions.

transform (callable, optional): A function/transform that takes in

a sample and returns a transformed version.

E.g, ``transforms.RandomCrop`` for images.

target_transform (callable, optional): A function/transform that takes

in the target and transforms it.

Attributes:

classes (list): List of the class names.

class_to_idx (dict): Dict with items (class_name, class_index).

samples (list): List of (sample path, class_index) tuples

"""

def __init__(self, root, loader, extensions, transform=None, target_transform=None):

classes, class_to_idx = find_classes(root)

samples = make_dataset(root, class_to_idx, extensions)

if len(samples) == 0:

raise(RuntimeError("Found 0 files in subfolders of: " + root + "\n"

"Supported extensions are: " + ",".join(extensions)))

self.root = root

self.loader = loader

self.extensions = extensions

self.classes = classes

self.class_to_idx = class_to_idx

self.samples = samples

self.transform = transform

self.target_transform = target_transform

def __getitem__(self, index):

"""

根据index获取sample 返回值为(sample,target)元组,同时如果该类输入参数中有transform和target_transform,torchvision.transforms类型的参数时,将获取的元组分别执行transform和target_transform中的数据转换方法。

Args:

index (int): Index

Returns:

tuple: (sample, target) where target is class_index of the target class.

"""

path, target = self.samples[index]

sample = self.loader(path)

if self.transform is not None:

sample = self.transform(sample)

if self.target_transform is not None:

target = self.target_transform(target)

return sample, target

def __len__(self):

return len(self.samples)

def __repr__(self): //定义输出对象格式 其中和__str__的区别是__repr__无论是print输出还是直接输出对象自身 都是以定义的格式进行输出,而__str__ 只有在print输出的时候会是以定义的格式进行输出

fmt_str = 'Dataset ' + self.__class__.__name__ + '\n'

fmt_str += ' Number of datapoints: {}\n'.format(self.__len__())

fmt_str += ' Root Location: {}\n'.format(self.root)

tmp = ' Transforms (if any): '

fmt_str += '{0}{1}\n'.format(tmp, self.transform.__repr__().replace('\n', '\n' + ' ' * len(tmp)))

tmp = ' Target Transforms (if any): '

fmt_str += '{0}{1}'.format(tmp, self.target_transform.__repr__().replace('\n', '\n' + ' ' * len(tmp)))

return fmt_str

IMG_EXTENSIONS = ['.jpg', '.jpeg', '.png', '.ppm', '.bmp', '.pgm', '.tif']

def pil_loader(path):

# open path as file to avoid ResourceWarning (https://github.com/python-pillow/Pillow/issues/835)

with open(path, 'rb') as f:

img = Image.open(f)

return img.convert('RGB')

def accimage_loader(path):

import accimage

try:

return accimage.Image(path)

except IOError:

# Potentially a decoding problem, fall back to PIL.Image

return pil_loader(path)

def default_loader(path):

from torchvision import get_image_backend

if get_image_backend() == 'accimage':

return accimage_loader(path)

else:

return pil_loader(path)

class ImageFolder(DatasetFolder):

"""A generic data loader where the images are arranged in this way: ::

root/dog/xxx.png

root/dog/xxy.png

root/dog/xxz.png

root/cat/123.png

root/cat/nsdf3.png

root/cat/asd932_.png

Args:

root (string): Root directory path.

transform (callable, optional): A function/transform that takes in an PIL image

and returns a transformed version. E.g, ``transforms.RandomCrop``

target_transform (callable, optional): A function/transform that takes in the

target and transforms it.

loader (callable, optional): A function to load an image given its path.

Attributes:

classes (list): List of the class names.

class_to_idx (dict): Dict with items (class_name, class_index).

imgs (list): List of (image path, class_index) tuples

"""

def __init__(self, root, transform=None, target_transform=None,

loader=default_loader):

super(ImageFolder, self).__init__(root, loader, IMG_EXTENSIONS,

transform=transform,

target_transform=target_transform)

self.imgs = self.samples

如果自己所要加载的数据组织形式如下

root/dog/xxx.png

root/dog/xxy.png

root/dog/xxz.png

root/cat/123.png

root/cat/nsdf3.png

root/cat/asd932_.png

即不同类别的训练数据分别存储在不同的文件夹中,这些文件夹都在root(即形如 D:/animals 或者 /usr/animals )路径下

class torchvision.datasets.ImageFolder(root, transform=None, target_transform=None, loader=

参数如下:

root (string) – Root directory path.

transform (callable, optional) – A function/transform that takes in an PIL image and returns a transformed version. E.g, transforms.RandomCrop

target_transform (callable, optional) – A function/transform that takes in the target and transforms it.

loader – A function to load an image given its path. 就是上述源码中

__getitem__(index)

Parameters: index (int) – Index

Returns: (sample, target) where target is class_index of the target class.

Return type: tuple

可以通过torchvision.datasets.ImageFolder进行加载

img_data = torchvision.datasets.ImageFolder('D:/bnu/database/flower',

transform=transforms.Compose([

transforms.Scale(256),

transforms.CenterCrop(224),

transforms.ToTensor()])

)

print(len(img_data))

data_loader = torch.utils.data.DataLoader(img_data, batch_size=20,shuffle=True)

print(len(data_loader))

对于所有的训练样本都在一个文件夹中 同时有一个对应的txt文件每一行分别是对应图像的路径以及其所属的类别,可以参照上述class写出对应的加载类

def default_loader(path):

return Image.open(path).convert('RGB')

class MyDataset(Dataset):

def __init__(self, txt, transform=None, target_transform=None, loader=default_loader):

fh = open(txt, 'r')

imgs = []

for line in fh:

line = line.strip('\n')

line = line.rstrip()

words = line.split()

imgs.append((words[0],int(words[1])))

self.imgs = imgs

self.transform = transform

self.target_transform = target_transform

self.loader = loader

def __getitem__(self, index):

fn, label = self.imgs[index]

img = self.loader(fn)

if self.transform is not None:

img = self.transform(img)

return img,label

def __len__(self):

return len(self.imgs)

train_data=MyDataset(txt='mnist_test.txt', transform=transforms.ToTensor())

data_loader = DataLoader(train_data, batch_size=100,shuffle=True)

print(len(data_loader))

DataLoader解析

位于torch.util.data.DataLoader中 源代码

该接口的主要目的是将pytorch中已有的数据接口如torchvision.datasets.ImageFolder,或者自定义的数据读取接口转化按照batch_size的大小封装为Tensor,即相当于在内置数据接口或者自定义数据接口的基础上增加一维,大小为batch_size的大小,得到的数据在之后可以通过封装为Variable,作为模型的输出

_ _ init _ _中所需的参数如下

1. dataset torch.utils.data.Dataset类的子类,可以是torchvision.datasets.ImageFolder等内置类,也可是继承了torch.utils.data.Dataset的自定义类

2. batch_size 每一个batch中包含的样本个数,默认是1

3. shuffle 一般在训练集中采用,默认是false,设置为true则每一个epoch都会将训练样本打乱

4. sampler 训练样本选取策略,和shuffle是互斥的 如果 shuffle为true,该参数一定要为None

5. batch_sampler BatchSampler 一次产生一个 batch 的 indices,和sampler以及shuffle互斥,一般使用默认的即可

上述Sampler的源代码地址如下[源代码](https://github.com/pytorch/pytorch/blob/master/torch/utils/data/sampler.py)

6. num_workers 用于数据加载的线程数量 默认为0 即只有主线程用来加载数据

7. collate_fn 用来聚合数据生成mini_batch

使用的时候一般为如下使用方法:

train_data=torch.utils.data.DataLoader(...)

for i, (input, target) in enumerate(train_data):

...

循环取DataLoader中的数据会触发类中_ _ iter __方法,查看源代码可知 其中调用的方法为 return _DataLoaderIter(self),因此需要查看 DataLoaderIter 这一内部类

class DataLoaderIter(object):

"Iterates once over the DataLoader's dataset, as specified by the sampler"

def __init__(self, loader):

self.dataset = loader.dataset

self.collate_fn = loader.collate_fn

self.batch_sampler = loader.batch_sampler

self.num_workers = loader.num_workers

self.pin_memory = loader.pin_memory and torch.cuda.is_available()

self.timeout = loader.timeout

self.done_event = threading.Event()

self.sample_iter = iter(self.batch_sampler)

if self.num_workers > 0:

self.worker_init_fn = loader.worker_init_fn

self.index_queue = multiprocessing.SimpleQueue()

self.worker_result_queue = multiprocessing.SimpleQueue()

self.batches_outstanding = 0

self.worker_pids_set = False

self.shutdown = False

self.send_idx = 0

self.rcvd_idx = 0

self.reorder_dict = {}

base_seed = torch.LongTensor(1).random_()[0]

self.workers = [

multiprocessing.Process(

target=_worker_loop,

args=(self.dataset, self.index_queue, self.worker_result_queue, self.collate_fn,

base_seed + i, self.worker_init_fn, i))

for i in range(self.num_workers)]

if self.pin_memory or self.timeout > 0:

self.data_queue = queue.Queue()

self.worker_manager_thread = threading.Thread(

target=_worker_manager_loop,

args=(self.worker_result_queue, self.data_queue, self.done_event, self.pin_memory,

torch.cuda.current_device()))

self.worker_manager_thread.daemon = True

self.worker_manager_thread.start()

else:

self.data_queue = self.worker_result_queue

for w in self.workers:

w.daemon = True # ensure that the worker exits on process exit

w.start()

_update_worker_pids(id(self), tuple(w.pid for w in self.workers))

_set_SIGCHLD_handler()

self.worker_pids_set = True

# prime the prefetch loop

for _ in range(2 * self.num_workers):

self._put_indices()

来自

八、基础

1.2 在_init_()里开头的那一句

super(Net, self).__init__()

这句的意思是_init_()函数继承自父类nn.Module

super()函数是用于调用父类的一个方法, super(Net, self)首先找到Net的父类(就是nn.Module), 然后把类Net的对象转换为类nn.Module的对象, 具体的可以看一下

http://www.runoob.com/python/python-func-super.html

Python super() 函数

Python 内置函数

描述

super() 函数是用于调用父类(超类)的一个方法。

super 是用来解决多重继承问题的,直接用类名调用父类方法在使用单继承的时候没问题,但是如果使用多继承,会涉及到查找顺序(MRO)、重复调用(钻石继承)等种种问题。

MRO 就是类的方法解析顺序表, 其实也就是继承父类方法时的顺序表。

语法

以下是 super() 方法的语法:

super(type[, object-or-type])

参数

Python3.x 和 Python2.x 的一个区别是: Python 3 可以使用直接使用 super().xxx 代替 super(Class, self).xxx :

Python3.x 实例:

实例

class A:

def add(self, x):

y = x+1

print(y)

class B(A):

def add(self, x):

super().add(x)

b = B()

b.add(2) # 3

Python2.x 实例:

实例

#!/usr/bin/python # -*- coding: UTF-8 -*- class A(object): # Python2.x 记得继承 object def add(self, x): y = x+1 print(y) class B(A): def add(self, x): super(B, self).add(x) b = B() b.add(2) # 3

返回值

无。

实例

以下展示了使用 super 函数的实例:

实例

#!/usr/bin/python # -*- coding: UTF-8 -*- class FooParent(object): def __init__(self): self.parent = 'I\'m the parent.' print ('Parent') def bar(self,message): print ("%s from Parent" % message) class FooChild(FooParent): def __init__(self): # super(FooChild,self) 首先找到 FooChild 的父类(就是类 FooParent),然后把类 FooChild 的对象转换为类 FooParent 的对象 super(FooChild,self).__init__() print ('Child') def bar(self,message): super(FooChild, self).bar(message) print ('Child bar fuction') print (self.parent) if __name__ == '__main__': fooChild = FooChild() fooChild.bar('HelloWorld')

执行结果:

Parent

Child

HelloWorld from Parent

Child bar fuction

I'm the parent.

我们在学习 Python 类的时候,总会碰见书上的类中有 __init__() 这样一个函数,很多同学百思不得其解,其实它就是 Python 的构造方法。

构造方法类似于类似 init() 这种初始化方法,来初始化新创建对象的状态,在一个对象呗创建以后会立即调用,比如像实例化一个类:

f = FooBar()

f.init()

使用构造方法就能让它简化成如下形式:

f = FooBar()

你可能还没理解到底什么是构造方法,什么是初始化,下面我们再来举个例子:

class FooBar:

def __init__(self):

self.somevar = 42

>>>f = FooBar()

>>>f.somevar

我们会发现在初始化 FooBar 中的 somevar 的值为 42 之后,实例化直接就能够调用 somevar 的值;如果说你没有用构造方法初始化值得话,就不能够调用,明白了吗?

在明白了构造方法之后,我们来点进阶的问题,那就是构造方法中的初始值无法继承的问题。

例子:

class Bird:

def __init__(self):

self.hungry = True

def eat(self):

if self.hungry:

print 'Ahahahah'

else:

print 'No thanks!'

class SongBird(Bird):

def __init__(self):

self.sound = 'Squawk'

def sing(self):

print self.song()

sb = SongBird()

sb.sing() # 能正常输出

sb.eat() # 报错,因为 songgird 中没有 hungry 特性

那解决这个问题的办法有两种:

1、调用未绑定的超类构造方法(多用于旧版 python 阵营)

class SongBird(Bird):

def __init__(self):

Bird.__init__(self)

self.sound = 'Squawk'

def sing(self):

print self.song()

原理:在调用了一个实例的方法时,该方法的self参数会自动绑定到实例上(称为绑定方法);如果直接调用类的方法(比如Bird.__init__),那么就没有实例会被绑定,可以自由提供需要的self参数(未绑定方法)。

2、使用super函数(只在新式类中有用)

class SongBird(Bird):

def __init__(self):

super(SongBird,self).__init__()

self.sound = 'Squawk'

def sing(self):

print self.song()

原理:它会查找所有的超类,以及超类的超类,直到找到所需的特性为止。

1.4 torch.nn.functional as F

这个是通常写法, 我们用到的激活函数, pool函数都在这个F里面(损失函数还有conv/fc等层在nn里面), 这个要注意一下, 那BN/dropout是在nn还是在F里面?

2. 如何开始训练

1中是把网络定义好了, 那离能够训练这个网络还有几步呢?我们需要想一下训练一个神经网络需要一些什么基本的步骤

2.1 输入值格式

我们的应用都是图片输入, 那pytorch有没有keras那种直接一个文件夹输入作为训练集或测试集(ImageDataGenerator())的那种方法呢?是有的, 就是Dataloader()

import torchvision

import torchvision.transforms as transforms

# torchvision数据集的输出是在[0, 1]范围内的PILImage图片。

# 我们此处使用归一化的方法将其转化为Tensor,数据范围为[-1, 1]

transform=transforms.Compose([transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),

])

trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=4,

shuffle=True, num_workers=2)

testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transform)

testloader = torch.utils.data.DataLoader(testset, batch_size=4,

shuffle=False, num_workers=2)

classes = ('plane', 'car', 'bird', 'cat',

'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

'''注:这一部分需要下载部分数据集 因此速度可能会有一些慢 同时你会看到这样的输出

Downloading http://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz to ./data/cifar-10-python.tar.gz

Extracting tar file

Done!

Files already downloaded and verified

'''

这段代码就是用DataLoader()来生成patch的方法, 这个和keras的ImageDataGenerator()就很像了, 生成的trainloader是一个包含tensor的迭代器, 在训练的过程中就可以直接从迭代器里去除tensor, 然后装入Variable中作为神经网络的输入, 这就是输入值格式

当然, 这只是一种简单的从数据集中得到batch的方法, 要是我们的数据是从某个文件夹来还得经过点pre-process的话(比如SR)会更复杂一些, 我们会在SR benchmark笔记里面再详细说

2.2 Optimizer

import torch.optim as optim

# create your optimizer

optimizer = optim.SGD(net.parameters(), lr = 0.01)

# in your training loop:

optimizer.zero_grad() # zero the gradient buffers

optimizer.step()

Optimizer的定义和Keras差不多, 可以用参数定义lr, momentum, weight_decay等, 这个去查文档就好, optimizer.zero_grad()的意思是初始化所有的梯度buffer为0, 这个是在每一次计算梯度更新之前做的, optimizer.step()就是根据你定义的optimizer执行梯度更新

2.3 loss function

import torch.optim as optim

# make your loss function

criterion = nn.MSELoss()

# in your training loop:

output = net(input)

loss = criterion(output, target)

loss.backward()

criterion定义了loss function, 然后在训练的loop中loss就根据criterion不停去算, loss是一个Variable, 它具有backward属性, 就是在训练过程中可以直接用.backward来计算loss对每个weight的梯度值

2.4 训练过程

有了输入, loss function和Optimizer, 我们就可以开始进行训练了, 过程就是从迭代器trainloader把input tensor送入网络, 然后算output, 根据loss function算loss, 从loss再反过去算梯度, 根据Optimizer去更新权重

下面是MGN的训练代码:

for batch, (inputs, labels) in enumerate(self.train_loader):

inputs = inputs.to(self.device)

labels = labels.to(self.device)

self.optimizer.zero_grad()#optimizer.zero_grad()的意思是初始化所有的梯度buffer为0, 这个是在每一次计算梯度更新之前做的

outputs = self.model(inputs)

loss = self.loss(outputs, labels)

loss.backward()#criterion定义了loss function, 然后在训练的loop中loss就根据criterion不停去算, loss是一个Variable, 它具有backward属性, 就是在训练过程中可以直接用.backward来计算loss对每个weight的梯度值

self.optimizer.step()#optimizer.step()就是根据你定义的optimizer执行梯度更新

*****************************************************************

for epoch in range(2): # loop over the dataset multiple times

running_loss = 0.0

for i, data in enumerate(trainloader, 0):

# get the inputs

inputs, labels = data

# wrap them in Variable

inputs, labels = Variable(inputs), Variable(labels)

# zero the parameter gradients

optimizer.zero_grad()

# forward + backward + optimize

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

# print statistics

running_loss += loss.data[0]

if i % 2000 == 1999: # print every 2000 mini-batches

print('[%d, %5d] loss: %.3f' % (epoch+1, i+1, running_loss / 2000))

running_loss = 0.0

print('Finished Training')

2.5 神经网络的快速搭建方法

除了上面提到的搭建神经网络的方法之外, pytorch还提供了另一种更快速的搭建方法, 有点类似于Keras的Sequentiao模型

http://keras-cn.readthedocs.io/en/latest/getting_started/sequential_model/

net = torch.nn.Sequential(

torch.nn.Linear(2,10),

torch.nn.ReLU,

torch.nn.Linear(10,2),

)

# fast implementation of FC 2->10->2

快速开始序贯(Sequential)模型

序贯模型是多个网络层的线性堆叠,也就是“一条路走到黑”。

可以通过向Sequential模型传递一个layer的list来构造该模型:

from keras.models import Sequential

from keras.layers import Dense, Activation

model = Sequential([

Dense(32, units=784),

Activation('relu'),

Dense(10),

Activation('softmax'),

])

也可以通过.add()方法一个个的将layer加入模型中:

model = Sequential()

model.add(Dense(32, input_shape=(784,)))

model.add(Activation('relu'))

3. 保存和提取训练结果

保存和加载网络模型有两种方法, 一种是把模型和参数一起save(相当于keras的save()), 还有一种就是只save参数(相当于keras的save_weight())

# 保存和加载整个模型

torch.save(model_object, 'model.pth')

model = torch.load('model.pth')

# 仅保存和加载模型参数

torch.save(model_object.state_dict(), 'params.pth')

model_object.load_state_dict(torch.load('params.pth'))

当网络比较大的时候, 用第一种方法会花比较多的时间, 同时所占的存储空间也比较大

还有一个问题就是存储模型的时候, 有的时候会存成.pkl格式, 应该是没有本质区别, 都是Pickle格式, 后续在用C来读取网络的时候, 也从Pickle的C实现来考虑直接解析Pytorch的模型

- 输入值格式(就是patch的格式)

- Optimizer

- loss fucntion

- 梯度更新过程

- type -- 类。

- object-or-type -- 类,一般是 self

九、PIL

在PIL库中可以实现图形的缩放,但是 如果使用下面的写法的话,会造成部分的信息丢失

img = img.resize((width, height))

但是涅,在PIL中 带ANTIALIAS滤镜缩放结果,程序如下:

img = img.resize((width, height),Image.ANTIALIAS)

这样的话就不会有信息丢失啦

---------------------

作者:sunshinefcx

来源:CSDN

原文:https://blog.csdn.net/sunshinefcx/article/details/85623227

版权声明:本文为博主原创文章,转载请附上博文链接!

创建绘画对象 ImageDraw module creates drawing surface for image

import Image, ImageDraw

im = Image.open(“vacation.jpeg")

drawSurface = ImageDraw.Draw(im)

基本绘画操作 Basic methods of drawing surface

- 弧/弦/扇形 chord arc pieslice (bbox, strtAng, endAng)

- 椭圆 ellipse (bbox)

- 线段/多段线 line (L) draw.line(((60,60),(90,60), (90,90), (60,90), (60,60))) #draw a square

- 点 point (xy) #单像素点很小看不清,实际中可用实心小圆代替

- 多边形 polygon (L) draw.polygon([(60,60), (90,60), (90,90), (60,90)]) #draw a square

- 矩形 rectangle (bbox) # first coord属于矩形, second coord不属于

- 文字 text(xy,message,font=None) 绘制文字message,文本区域左上角坐标为xy

drawable.text((10, 10), "Hello", fill=(255,0,0), font=None) - 文字大小 textsize(message,font=None) 给定文字message,返回所占像素(width,height)

可选参数 Common optional args for these methods

- fill=fillColor

- outline=outlineColor

矢量字体支持 TrueType Font support

import ImageFont

ttFont = ImageFont.truetype (“arial.ttf”, 16)

drawable.text ((10, 10), “Hello”, fill=(255,0,0), font=ttFont)

来自

9、 Rectangle

定义:draw.rectangle(box,options)

含义:绘制一个长边形。

变量box是包含2元组[(x,y),…]或者数字[x,y,…]的任何序列对象。它应该包括2个坐标值。

注意:当长方形没有没有被填充时,第二个坐标对定义了一个长方形外面的点。

变量options的fill给定长边形内部的颜色。

例子:

>>>from PIL import Image, ImageDraw

>>>im01 = Image.open("D:\\Code\\Python\\test\\img\\test01.jpg")

>>>draw = ImageDraw.Draw(im01)

>>>draw.rectangle((0,0,100,200), fill = (255,0,0))

>>> draw.rectangle([100,200,300,500],fill = (0,255,0))

>>>draw.rectangle([(300,500),(600,700)], fill = (0,0,255))

>>>im01.show()

---------------------

作者:icamera0

来源:CSDN

原文:https://blog.csdn.net/icamera0/article/details/50747084

版权声明:本文为博主原创文章,转载请附上博文链接!

from PIL import Image

img = Image.open(‘D:\image_for_test\Spee.jpg’)

print(“初始尺寸”,img.size)

print(“默认缩放NEARESET”,img.resize((128,128)).size)

print(“BILINEAR”,img.resize((127,127),Image.BILINEAR).size)

print(“BICUBIC”,img.resize((126,126),Image.BICUBIC).size)

print(“ANTIALIAS”,img.resize((125,125),Image.ANTIALIAS).size)

双线性差值,只考虑了旁边4个的点,并且是用的加权平均

双三次插值,考虑了16个点,做加权平均

抗锯齿插值,会考虑所有影响到的像素点,反正很大

---------------------

作者:luojiaao

来源:CSDN

原文:https://blog.csdn.net/m0_37789876/article/details/82596984

版权声明:本文为博主原创文章,转载请附上博文链接!

1. 函数

用 OpenCV 标注 bounding box 主要用到下面两个工具——cv2.rectangle() 和 cv2.putText()。用法如下:

# cv2.rectangle()

# 输入参数分别为图像、左上角坐标、右下角坐标、颜色数组、粗细

cv2.rectangle(img, (x,y), (x+w,y+h), (B,G,R), Thickness)

# cv2.putText()

# 输入参数为图像、文本、位置、字体、大小、颜色数组、粗细

cv2.putText(img, text, (x,y), Font, Size, (B,G,R), Thickness)

1

2

3

4

5

6

7

2. Example

import cv2

fname = '001.jpg'

img = cv2.imread(fname)

# 画矩形框

cv2.rectangle(img, (212,317), (290,436), (0,255,0), 4)

# 标注文本

font = cv2.FONT_HERSHEY_SUPLEX

text = '001'

cv2.putText(img, text, (212, 310), font, 2, (0,0,255), 1)

cv2.imwrite('001_new.jpg', img)