Three Methods for Line Fitting

I have recently been studying line fitting and now have a rough understanding of it, so I am writing up a summary.

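Three methods are commonly used for line fitting:

I. Line fitting with the least squares method

II. Line fitting with gradient descent

III. Line fitting with the Gauss-Newton and Levenberg-Marquardt algorithms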

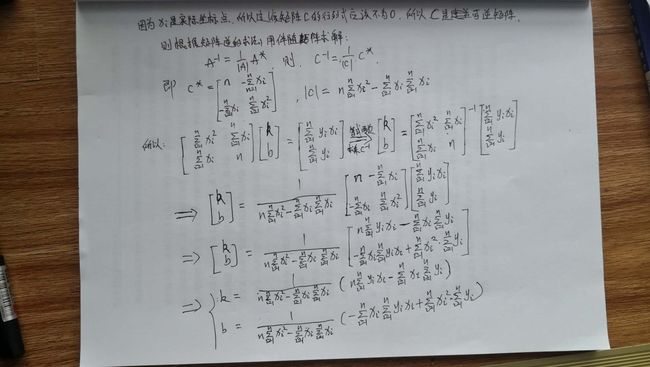

I. Least squares is the most widely used method, so I summarize it first.

1. Assume that x is exact and that only y contains error.
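For reference, the closed-form solution under this assumption can be written out explicitly. This is the standard least-squares result for the line y = kx + b, with all sums running over the n points. It is transcribed here in generic notation rather than copied from the hand-written figures, so the symbol names are an assumption:

```latex
S(k, b) = \sum_{i=1}^{n} (k x_i + b - y_i)^2

\frac{\partial S}{\partial k} = 0, \qquad \frac{\partial S}{\partial b} = 0

k = \frac{n \sum x_i y_i - \sum x_i \sum y_i}{n \sum x_i^2 - \left(\sum x_i\right)^2}
\qquad
b = \frac{\sum x_i^2 \sum y_i - \sum x_i \sum x_i y_i}{n \sum x_i^2 - \left(\sum x_i\right)^2}
```

The shared denominator is zero only when every point has the same x, in which case the line is vertical and cannot be written as y = kx + b.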

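Based on the hand-derived formulas in the two figures above, we can now write the corresponding code. OpenCV is used here to display and analyze the result.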

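#include <cmath>
#include <cstdio>
#include <iostream>
#include <vector>
#include <opencv2/opencv.hpp>

using namespace std;
using namespace cv;

void fitline3(){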

float b = 0.0f, k=0.0f;

vector<Point> points;

points.push_back(Point(27, 39));

points.push_back(Point(8, 5));

points.push_back(Point(8, 9));

points.push_back(Point(16, 22));

points.push_back(Point(44, 71));

points.push_back(Point(35, 44));

points.push_back(Point(43, 57));

points.push_back(Point(19, 24));

points.push_back(Point(27, 39));

points.push_back(Point(37, 52));

Mat src = Mat::zeros(400, 400, CV_8UC3);

for (int i = 0; i < points.size(); i++)

{

// draw each point on the image

circle(src, points[i], 3, Scalar(0, 255, 0), 1, 8);

}

int n = points.size();

double xx_sum = 0;

double x_sum = 0;

double y_sum = 0;

double xy_sum = 0;

for (int i = 0; i < n; i++)

{

x_sum += points[i].x; // running sum of x

y_sum += points[i].y; // running sum of y

xx_sum += points[i].x * points[i].x; // running sum of x squared

xy_sum += points[i].x * points[i].y; // running sum of x*y

}

k = (n*xy_sum - x_sum * y_sum) / (n*xx_sum - x_sum * x_sum); // slope k from the closed-form formula

b = (-x_sum * xy_sum + xx_sum*y_sum) / (n*xx_sum - x_sum * x_sum); // intercept b from the closed-form formula

printf("k = %f, b = %f\n", k, b); // k = 1.555569, b = -4.867031

cv::Point first = { 5, int(k * 5 + b) }, second = { int((400 - b) / k), 400 };

cv::line(src, first, second, cv::Scalar(0, 0, 255), 2);

cv::imshow("name", src);

cv::waitKey(0);

}

The result computed above is k = 1.555569, b = -4.867031.

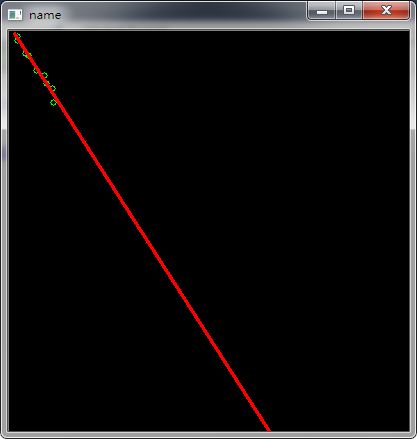

Displayed result:

Next we use OpenCV's built-in functions to solve for k and b. This code comes from the Internet.

void fitline1()

{

vector<Point> points;

//(27 39) (8 5) (8 9) (16 22) (44 71) (35 44) (43 57) (19 24) (27 39) (37 52)

points.push_back(Point(27, 39));

points.push_back(Point(8, 5));

points.push_back(Point(8, 9));

points.push_back(Point(16, 22));

points.push_back(Point(44, 71));

points.push_back(Point(35, 44));

points.push_back(Point(43, 57));

points.push_back(Point(19, 24));

points.push_back(Point(27, 39));

points.push_back(Point(37, 52));

Mat src = Mat::zeros(400, 400, CV_8UC3);

for (int i = 0; i < points.size(); i++)

{

// draw each point on the image

circle(src, points[i], 3, Scalar(0, 0, 255), 1, 8);

}

// build matrix A: A(row, col) = sum of x^(row + col)

int N = 2;

Mat A = Mat::zeros(N, N, CV_64FC1);

for (int row = 0; row < A.rows; row++)

{

for (int col = 0; col < A.cols; col++)

{

for (int k = 0; k < points.size(); k++)

{

A.at<double>(row, col) = A.at<double>(row, col) + pow(points[k].x, row + col);

}

}

}

// build matrix B: B(row) = sum of x^row * y

Mat B = Mat::zeros(N, 1, CV_64FC1);

for (int row = 0; row < B.rows; row++)

{

for (int k = 0; k < points.size(); k++)

{

B.at<double>(row, 0) = B.at<double>(row, 0) + pow(points[k].x, row)*points[k].y;

}

}

//A*X=B

Mat X;

//cout << A << endl << B << endl;

solve(A, B, X, DECOMP_LU);

cout << X << endl;

vector<Point> lines;

for (int x = 0; x < src.size().width; x++)

{ // y = b + ax;

double y = X.at<double>(0, 0) + X.at<double>(1, 0)*x; // b = -4.867031, k = 1.555569

printf("b = %f, k = %f, (%d,%lf)\n", X.at<double>(0, 0), X.at<double>(1, 0), x, y);

lines.push_back(Point(x, cvRound(y)));

}

polylines(src, lines, false, Scalar(255, 0, 0), 1, 8);

imshow("src", src);

//imshow("src", A);

waitKey(0);

}

This gives k = 1.555569, b = -4.867031.

The two results are identical, which confirms that our derivation is correct.
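The agreement is expected. The loops in fitline1 fill A(row, col) with the sum of x^(row + col) and B(row) with the sum of x^row * y, which for N = 2 is the normal-equation system of the same least-squares problem. Written out in generic notation (not taken from the original figures), with X = (b, k):

```latex
\begin{bmatrix} n & \sum x_i \\ \sum x_i & \sum x_i^2 \end{bmatrix}
\begin{bmatrix} b \\ k \end{bmatrix}
=
\begin{bmatrix} \sum y_i \\ \sum x_i y_i \end{bmatrix}
```

Solving this 2x2 system by hand (for example with Cramer's rule) gives back the closed-form expressions for k and b used in fitline3, so the two programs differ only in how the system is solved.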

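2. The derivation above assumes that x is exact and only y contains error, which is ordinary simple linear regression.

Here the discrete points are instead fitted to a line of the form ax + by + c = 0.

That is, the errors in both the x and y coordinates of every point are assumed to follow a zero-mean normal distribution.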
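A sketch of the math behind this variant may help. It assumes the errors in x and y have equal variance (an assumption added here, so that minimizing perpendicular distance is the natural criterion), and the symbols D_xx, D_xy, D_yy and lambda are chosen to match the variable names in the code:

```latex
\min_{a, b, c} \sum_{i=1}^{n} (a x_i + b y_i + c)^2
\quad \text{subject to} \quad a^2 + b^2 = 1

c = -a \bar{x} - b \bar{y}

\begin{bmatrix} D_{xx} & D_{xy} \\ D_{xy} & D_{yy} \end{bmatrix}
\begin{bmatrix} a \\ b \end{bmatrix}
= \lambda \begin{bmatrix} a \\ b \end{bmatrix},
\qquad
\lambda = \frac{(D_{xx} + D_{yy}) - \sqrt{(D_{xx} - D_{yy})^2 + 4 D_{xy}^2}}{2}

(a, b) \propto (D_{xy},\ \lambda - D_{xx})
```

Here D_xx, D_xy and D_yy are the sums of centered products, lambda is the smaller eigenvalue of the scatter matrix, and (a, b) is the corresponding eigenvector scaled to unit length, which is the normal of the fitted line.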

The code is as follows:

void fitline2(){

float a = 0.0f, b = 0.0f, c = 0.0f;

vector<Point> points;

points.push_back(Point(27, 39));

points.push_back(Point(8, 5));

points.push_back(Point(8, 9));

points.push_back(Point(16, 22));

points.push_back(Point(44, 71));

points.push_back(Point(35, 44));

points.push_back(Point(43, 57));

points.push_back(Point(19, 24));

points.push_back(Point(27, 39));

points.push_back(Point(37, 52));

Mat src = Mat::zeros(400, 400, CV_8UC3);

for (int i = 0; i < points.size(); i++)

{

// draw each point on the image

circle(src, points[i], 3, Scalar(0, 255, 0), 1, 8);

}

int size = points.size();

double x_mean = 0;

double y_mean = 0;

for (int i = 0; i < size; i++)

{

x_mean += points[i].x;

y_mean += points[i].y;

}

x_mean /= size;

y_mean /= size; // x_mean and y_mean now hold the means of x and y

double Dxx = 0, Dxy = 0, Dyy = 0;

for (int i = 0; i < size; i++)

{

Dxx += (points[i].x - x_mean) * (points[i].x - x_mean);

Dxy += (points[i].x - x_mean) * (points[i].y - y_mean);

Dyy += (points[i].y - y_mean) * (points[i].y - y_mean);

}

double lambda = ((Dxx + Dyy) - sqrt((Dxx - Dyy) * (Dxx - Dyy) + 4 * Dxy * Dxy)) / 2.0;

double den = sqrt(Dxy * Dxy + (lambda - Dxx) * (lambda - Dxx));

a = Dxy / den;

b = (lambda - Dxx) / den;

c = -a * x_mean - b * y_mean;

printf("a = %f, b = %f, c = %f\n", a, b, c);

printf("k = %f, b = %f\n", - a/b, -c/b); //k = 1.593043, b = -5.856340

float k = -a / b;

b = -c / b;

cv::Point first = { 5, int(k * 5 + b) }, second = { int((400 - b) / k), 400 };

cv::line(src, first, second, cv::Scalar(0, 0, 255), 2);

cv::imshow("name", src);

cv::waitKey(0);

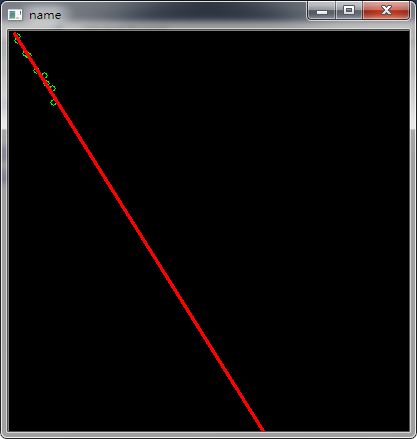

} 求解结果为k = 1.593043, b = -5.856340。(对比发现此方法和方法一结果还是存在差异的)
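To make the comparison easy to reproduce without OpenCV, the two fits can be sketched side by side in plain C++. The names `Line`, `fitOls`, `fitOrthogonal` and `samplePoints` are hypothetical helpers introduced for this illustration only; the formulas are the ones used in fitline3 and fitline2.

```cpp
#include <cassert>
#include <cmath>
#include <utility>
#include <vector>

// Hypothetical helper type for this sketch: the line y = k * x + b.
struct Line { double k; double b; };

using Points = std::vector<std::pair<double, double>>;

// Ordinary least squares: only y is assumed to carry error.
Line fitOls(const Points& pts) {
    assert(pts.size() >= 2);
    const double n = static_cast<double>(pts.size());
    double sx = 0, sy = 0, sxx = 0, sxy = 0;
    for (const auto& p : pts) {
        sx += p.first;
        sy += p.second;
        sxx += p.first * p.first;
        sxy += p.first * p.second;
    }
    const double den = n * sxx - sx * sx;  // zero when all x are equal
    assert(den != 0.0);
    return { (n * sxy - sx * sy) / den, (sxx * sy - sx * sxy) / den };
}

// Orthogonal fit of a*x + b*y + c = 0, converted to slope/intercept form.
Line fitOrthogonal(const Points& pts) {
    assert(pts.size() >= 2);
    const double n = static_cast<double>(pts.size());
    double xm = 0, ym = 0;
    for (const auto& p : pts) { xm += p.first; ym += p.second; }
    xm /= n;
    ym /= n;
    double dxx = 0, dxy = 0, dyy = 0;
    for (const auto& p : pts) {
        dxx += (p.first - xm) * (p.first - xm);
        dxy += (p.first - xm) * (p.second - ym);
        dyy += (p.second - ym) * (p.second - ym);
    }
    // Smaller eigenvalue of the scatter matrix [[dxx, dxy], [dxy, dyy]].
    const double lambda =
        ((dxx + dyy) - std::sqrt((dxx - dyy) * (dxx - dyy) + 4 * dxy * dxy)) / 2.0;
    const double a = dxy;            // unnormalized line normal (a, b)
    const double b = lambda - dxx;
    assert(b != 0.0);                // b == 0 means a vertical line
    const double k = -a / b;
    return { k, ym - k * xm };       // the line passes through the centroid
}

// The ten sample points used throughout the article.
Points samplePoints() {
    return { {27, 39}, {8, 5}, {8, 9}, {16, 22}, {44, 71},
             {35, 44}, {43, 57}, {19, 24}, {27, 39}, {37, 52} };
}
```

Calling `fitOls(samplePoints())` and `fitOrthogonal(samplePoints())` should reproduce the two slope/intercept pairs reported above. The difference comes from the error model: the first minimizes vertical distances, the second perpendicular distances.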

Displayed result:

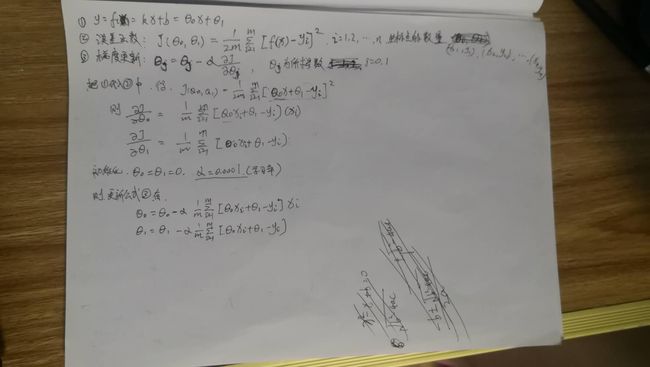

II. Line fitting with gradient descent

The figure below shows my hand-written derivation:

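In the derivation, alpha is the learning rate, and theta0 and theta1 are the slope k and the intercept b respectively. The factor 1/m averages the loss over the m samples, and the factor 1/2 is there to simplify the partial derivatives.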
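In text form, the cost function and update rules described here can be written as below. This is a transcription in standard notation, consistent with the symbols named above and with the code that follows, not a copy of the hand-written figure:

```latex
J(\theta_0, \theta_1) = \frac{1}{2m} \sum_{i=1}^{m} (\theta_0 x_i + \theta_1 - y_i)^2

\theta_0 \leftarrow \theta_0 - \alpha \, \frac{1}{m} \sum_{i=1}^{m} (\theta_0 x_i + \theta_1 - y_i)\, x_i

\theta_1 \leftarrow \theta_1 - \alpha \, \frac{1}{m} \sum_{i=1}^{m} (\theta_0 x_i + \theta_1 - y_i)
```

Both sums are evaluated with the current theta0 and theta1 before either parameter is updated, so the two updates are simultaneous.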

Based on the derivation, the code is as follows:

// Linear fitting with gradient descent

// y = theta0 * x + theta1

void fitline4(){

const int m = 10;

double Train_set_x[m] = { 27, 8, 8, 16, 44, 35, 43, 19, 27, 37 };

double Train_set_y[m] = { 39, 5, 9, 22, 71, 44, 57, 24, 39, 52 };

double theta0 = 0.0, theta1 = 0.0, alpha = 0.002, error = 1e-8; // alpha is the learning rate, error is the stopping tolerance, theta0 is k and theta1 is b

double tmp_theta0 = theta0, tmp_theta1 = theta1;

double sum_theta0 = 0.0, sum_theta1 = 0.0;

while (1){

sum_theta0 = 0.0, sum_theta1 = 0.0;

for (size_t i = 0; i < m; i++) {

sum_theta0 += (theta0 * Train_set_x[i] + theta1 - Train_set_y[i])*Train_set_x[i]; // accumulate the gradient with respect to k

sum_theta1 += (theta0 * Train_set_x[i] + theta1 - Train_set_y[i]); // accumulate the gradient with respect to b

}

theta0 = theta0 - alpha * (1.0 / m) * sum_theta0; // update rule for k

theta1 = theta1 - alpha * (1.0 / m) * sum_theta1; // update rule for b

printf("k=%lf, b=%lf\n", theta0, theta1);

if (fabs(theta0 - tmp_theta0) < error && fabs(theta1 - tmp_theta1) < error){ // stop once both updates fall below the tolerance

break;

}

tmp_theta0 = theta0;

tmp_theta1 = theta1;

}

}

The result is k=1.555569, b=-4.867004, which is very close to the least squares result.
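The small remaining gap in b comes from the stopping rule: the loop ends when the step size drops below the tolerance, not when the gradient is exactly zero. A reusable variant with an iteration cap might look like the sketch below. `GdResult` and `fitGradientDescent` are hypothetical names for this illustration, and the cap `maxIter` is an addition not present in the original code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical result type for this sketch.
struct GdResult {
    double k;                // slope (theta0 in the article)
    double b;                // intercept (theta1 in the article)
    std::size_t iterations;  // number of update steps performed
    bool converged;          // false if maxIter was hit first
};

// Batch gradient descent for y = k * x + b, starting from k = b = 0.
// Stops when both parameters change by less than tol in one step.
GdResult fitGradientDescent(const std::vector<double>& x,
                            const std::vector<double>& y,
                            double alpha, double tol, std::size_t maxIter) {
    assert(!x.empty() && x.size() == y.size());
    const double m = static_cast<double>(x.size());
    double k = 0.0, b = 0.0;
    for (std::size_t it = 1; it <= maxIter; ++it) {
        double gradK = 0.0, gradB = 0.0;
        for (std::size_t i = 0; i < x.size(); ++i) {
            const double residual = k * x[i] + b - y[i];
            gradK += residual * x[i];
            gradB += residual;
        }
        // Simultaneous update: both gradients use the old k and b.
        const double newK = k - alpha * gradK / m;
        const double newB = b - alpha * gradB / m;
        const bool done = std::fabs(newK - k) < tol && std::fabs(newB - b) < tol;
        k = newK;
        b = newB;
        if (done) return { k, b, it, true };
    }
    return { k, b, maxIter, false };
}
```

With the article's data, `fitGradientDescent(x, y, 0.002, 1e-8, 1000000)` should converge to values close to the least squares solution. The learning rate matters here: because the x values are unscaled, the largest curvature of the cost is about 859, so for this data set alpha has to stay below roughly 0.0023 or the iteration diverges.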

III. Gauss-Newton and Levenberg-Marquardt algorithms