Installing the MongoDB plugin for Logstash: syncing data from MongoDB to Elasticsearch, fixing the first record not being synced, and syncing multiple collections

Normally the plugin is installed with a single command:

```shell
./logstash-plugin install logstash-input-mongodb
```

In practice, the full steps were:

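1. Install RVM (Ruby Version Manager)

```shell
curl -L get.rvm.io | bash -s stable
source /home/knicks/.rvm/scripts/rvm
```

(Replace `/home/knicks` with your own home directory.)

Check that the installation succeeded:

```shell
rvm -v
```

List the Ruby versions RVM can install:

```shell
rvm list known
```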

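2. Install Ruby:

```shell
rvm install 2.4
```

Check the installed Ruby version:

```shell
ruby -v
```

Check the version of gem (RubyGems, Ruby's package manager):

```shell
gem -v
```

List the configured gem sources:

```shell
gem sources -l
```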

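3. Switch to the Ruby China mirror

```shell
gem sources --add https://gems.ruby-china.org/ --remove https://rubygems.org/
```

(The mirror has since moved to https://gems.ruby-china.com/.)

In the Logstash directory, edit the `Gemfile` and replace `source "https://rubygems.org"` with `source "https://gems.ruby-china.org"`.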
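If you would rather script the Gemfile edit than make it by hand, a small Ruby helper can do it. This is a hypothetical sketch, and the name `switch_gemfile_source` is made up. It rewrites only a `source` line that points at `https://rubygems.org` and leaves every other line untouched.

```ruby
# Hypothetical helper: point the rubygems.org `source` line of a Gemfile at a mirror.
# Returns true if the file was changed, false if no matching line was found.
def switch_gemfile_source(gemfile_path, mirror_url)
  text = File.read(gemfile_path)
  # Match source "https://rubygems.org" or source 'https://rubygems.org/' (same quote on both ends)
  updated = text.gsub(%r{^source\s+(["'])https://rubygems\.org/?\1}) do
    %(source "#{mirror_url}")
  end
  return false if updated == text
  File.write(gemfile_path, updated)
  true
end

# Example (the path is a placeholder for your Logstash directory):
#   switch_gemfile_source("/path/to/logstash/Gemfile", "https://gems.ruby-china.org")
```

Running it a second time is a no-op, because the rewritten line no longer matches the pattern.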

Then, from the `bin` directory, run:

```shell
./logstash-plugin install logstash-input-mongodb
```

The installation takes a while, but it succeeds.

Add a configuration file with the following content:

```conf
input {
  mongodb {
    uri => 'mongodb://172.16.24.207:27017/pubhealth'
    # SQLite database that stores the _id of the last document synced from MongoDB
    placeholder_db_dir => '/opt/logstash-mongodb/'
    placeholder_db_name => 'logstash_sqlite.db'
    collection => 'health_records'
    batch_size => 5000
  }
}

filter {
  #date {
  #  match => ["logdate", "ISO8601"]
  #  target => "@timestamp"
  #}

  # Shift @timestamp forward by 8 hours (UTC+8, China Standard Time)
  ruby {
    code => "event.set('timestamp', event.get('@timestamp').time.localtime + 8*60*60)"
  }
  ruby {
    code => "event.set('@timestamp', event.get('timestamp'))"
  }

  mutate {
    # Elasticsearch does not accept _id as a field inside the document body
    rename => { "_id" => "uid" }
    convert => {
      "id_card" => "string"
      "phone" => "string"
    }
    remove_field => ["host", "@version", "logdate", "log_entry", "mongo_id", "timestamp"]
  }
}

output {
  file {
    path => "/var/log/mongons.log"
  }
  stdout {
    codec => json_lines
  }
  elasticsearch {
    hosts => ["172.16.24.207:9200"]
    index => "pubhealth"
    manage_template => true
    # Mapping types are deprecated in Elasticsearch 7.x and removed in 8.x; drop this line there
    document_type => "health_doc"
  }
}
```
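The two `ruby` filters above move `@timestamp` forward by eight hours, so the UTC value stored in Elasticsearch reads as Beijing wall-clock time. The plain-Ruby sketch below mimics the arithmetic with `Time` objects instead of Logstash's own timestamp class. That substitution is an assumption, made so the example runs outside Logstash. The function name `shift_to_utc8` is hypothetical.

```ruby
# Mimics the filter arithmetic with plain Ruby Time (not LogStash::Timestamp).
def shift_to_utc8(utc_time)
  # localtime changes only the display zone, not the instant;
  # adding 8*60*60 seconds moves the instant itself forward by eight hours.
  utc_time.localtime + 8 * 60 * 60
end

original = Time.utc(2019, 3, 1, 2, 30, 0)        # 02:30 UTC is 10:30 in Beijing
shifted = shift_to_utc8(original)
puts shifted.utc.strftime("%Y-%m-%d %H:%M:%S")   # the UTC form now reads 10:30
```

Note the trade-off: the stored instant is no longer the real UTC time. A viewer that converts UTC to local time itself (Kibana does by default) may therefore show these events eight hours later than they happened.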

Start Logstash with `bin/logstash -f <config file>`, then check the sync status in the log.

Fixing the first record not being synced:

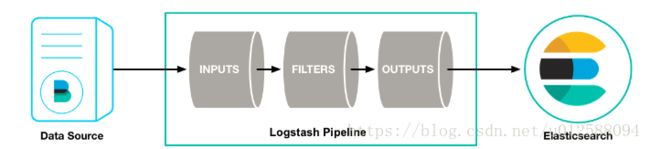

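1. First, it helps to understand how Logstash works:

Inputs: turn each piece of data into an event

Filters: parse, filter, format, and so on

Outputs: send the events to their destinations

In the input stage of a Logstash pipeline, each input runs in its own thread. It wraps the incoming data into events and puts them into a queue. The queue is in memory by default and can be made persistent with `queue.type: persisted` in `logstash.yml`. Pipeline worker threads take batches of events from the queue, run them through the filter plugins, and finally send them to the configured outputs. You can raise the number of worker threads (`pipeline.workers`, or `-w` on the command line) to handle larger data volumes. This is essentially the producer-consumer pattern.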
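The producer-consumer model described above can be sketched in plain Ruby. This is an illustration of the pattern, not Logstash's implementation. One input thread fills a bounded queue, and several worker threads take batches, apply a filter and hand the events to an output. The names `run_pipeline` and `next_batch`, the queue size and the upper-casing "filter" are all assumptions.

```ruby
# Take up to batch_size events: block for the first one, then grab whatever is ready.
def next_batch(queue, batch_size)
  first = queue.pop                      # blocks; returns nil once the queue is closed and drained
  return nil if first.nil?
  batch = [first]
  while batch.size < batch_size
    begin
      batch << queue.pop(true)           # non-blocking; raises ThreadError when empty
    rescue ThreadError
      break
    end
  end
  batch
end

def run_pipeline(records, workers: 2, batch_size: 3)
  queue = SizedQueue.new(8)              # stand-in for the in-memory queue
  output = Queue.new                     # thread-safe stand-in for an output plugin
  filter = ->(event) { event.merge("message" => event["message"].upcase) }

  input = Thread.new do                  # input stage: the producer
    records.each { |r| queue << { "message" => r } }
    queue.close                          # signal that no more events will arrive
  end

  pool = Array.new(workers) do           # pipeline workers: the consumers
    Thread.new do
      while (batch = next_batch(queue, batch_size))
        batch.each { |event| output << filter.call(event) }
      end
    end
  end

  input.join
  pool.each(&:join)
  Array.new(output.size) { output.pop }
end

p run_pipeline(%w[a b c d e]).map { |e| e["message"] }.sort
# prints ["A", "B", "C", "D", "E"]
```

Because several workers run concurrently, events can reach the output in a different order from the input, which is why the example sorts before printing.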

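logstash-input-mongodb reads documents from MongoDB incrementally, one batch at a time. It wraps each document into an event and puts it on the queue for the rest of the pipeline.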
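With the default `parse_method => 'flatten'`, the plugin turns nested fields of each document into top-level event fields by joining the keys with `_`, as its `flatten` method does. Below is a standalone sketch of that key-joining rule. It works on plain Ruby hashes rather than BSON documents, an assumption that lets it run without the `mongo` gem. The name `flatten_doc` is hypothetical.

```ruby
# Join nested hash keys with "_" so a document becomes a single-level event.
def flatten_doc(doc, prefix = nil)
  doc.each_with_object({}) do |(key, value), flat|
    name = prefix ? "#{prefix}_#{key}" : key.to_s
    if value.is_a?(Hash)
      flat.merge!(flatten_doc(value, name))   # recurse into nested documents
    else
      flat[name] = value
    end
  end
end

doc = { "name" => "Li", "address" => { "city" => "Beijing", "geo" => { "lat" => 39.9 } } }
p flatten_doc(doc)
```

For this document, the result has the keys `name`, `address_city` and `address_geo_lat`.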

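This raises a question: how is incremental sync implemented?

After each batch, logstash-input-mongodb takes the marker field (`_id` by default) of the last document it read. It stores that value in a table in a local SQLite database. On the next fetch, it reads the value back and queries MongoDB with the condition `_id > stored value`. After reading the batch, it updates the stored value to the `_id` of the last document in that batch. This is how incremental sync works.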
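The mechanism can be modelled in a few lines of plain Ruby. This is an illustrative sketch, not the plugin's code. Integer ids stand in for ObjectIds, a Hash stands in for the SQLite placeholder table, and the function name `fetch_batch` is hypothetical.

```ruby
# One incremental fetch: read ids greater than the stored placeholder,
# in ascending order, at most batch_size of them, then advance the placeholder.
def fetch_batch(collection, placeholders, name, batch_size)
  last_id = placeholders.fetch(name)
  batch = collection.select { |id| id > last_id }.sort.first(batch_size)
  placeholders[name] = batch.last unless batch.empty?   # remember the newest id read
  batch
end

collection = [101, 102, 103, 104, 105]
placeholders = { "health_records" => 102 }              # pretend 101 and 102 were already synced
p fetch_batch(collection, placeholders, "health_records", 2)   # prints [103, 104]
p fetch_batch(collection, placeholders, "health_records", 2)   # prints [105]
p fetch_batch(collection, placeholders, "health_records", 2)   # prints []
```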

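So why is the first record lost?

When a collection has no stored placeholder yet, logstash-input-mongodb initializes one. It sorts the collection by `_id` in ascending order and stores the `_id` of the earliest document in SQLite. As described above, every later query uses `_id > placeholder`, so that earliest document is never returned. The fix is to use `>=` for the very first query only.

In the modified plugin source, the changes are:

- `get_placeholder` also returns a flag `is_first_init` (1 when the placeholder was just created).
- `update_watched_collections` stores the flag with each collection.
- `get_cursor_for_collection` queries with `$gte` when the flag is 1 and with `$gt` otherwise.
- `run` resets the flag after the first query.

For multi-collection sync, `run` also adds a `collection_name` field to every event. The `collection` setting can be a regex such as `A|B|C` (see the comment in `get_collection_names`).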
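The off-by-one can be reproduced with the same kind of toy model. The placeholder starts at the smallest id, as `init_placeholder` does, and the only difference between the two runs is the comparison used for the first query. Integer ids and the name `sync_all` are assumptions for illustration.

```ruby
# Sync everything in batches. The placeholder starts at the earliest id (init_placeholder);
# inclusive_first_query switches the first comparison from > to >= (the fix).
def sync_all(ids, batch_size, inclusive_first_query:)
  placeholder = ids.min
  first = true
  synced = []
  loop do
    op = (first && inclusive_first_query) ? :>= : :>
    batch = ids.select { |id| id.public_send(op, placeholder) }.sort.first(batch_size)
    first = false
    break if batch.empty?
    synced.concat(batch)
    placeholder = batch.last               # update_placeholder
  end
  synced
end

ids = [1, 2, 3, 4, 5]
p sync_all(ids, 2, inclusive_first_query: false)  # prints [2, 3, 4, 5]: id 1 is never synced
p sync_all(ids, 2, inclusive_first_query: true)   # prints [1, 2, 3, 4, 5]
```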

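Replace the contents of the plugin's `mongodb.rb` (under `lib/logstash/inputs/` in the installed gem) with the following: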

```ruby
# encoding: utf-8
require "logstash/inputs/base"
require "logstash/namespace"
require "logstash/timestamp"
require "stud/interval"
require "socket" # for Socket.gethostname
require "json"
require "date"   # for DateTime.parse in run
require "mongo"

include Mongo

class LogStash::Inputs::MongoDB < LogStash::Inputs::Base
  config_name "mongodb"

  # If undefined, Logstash will complain, even if codec is unused.
  default :codec, "plain"

  # Example URI: mongodb://mydb.host:27017/mydbname?ssl=true
  config :uri, :validate => :string, :required => true

  # The directory that will contain the sqlite database file.
  config :placeholder_db_dir, :validate => :string, :required => true

  # The name of the sqlite database file
  config :placeholder_db_name, :validate => :string, :default => "logstash_sqlite.db"

  # Any table to exclude by name
  config :exclude_tables, :validate => :array, :default => []

  # Number of documents fetched per query (the ":avlidate" typo is fixed here)
  config :batch_size, :validate => :number, :default => 30

  config :since_table, :validate => :string, :default => "logstash_since"

  # This allows you to select the column you would like compare the since info
  config :since_column, :validate => :string, :default => "_id"

  # This allows you to select the type of since info, like "id", "date"
  config :since_type, :validate => :string, :default => "id"

  # The collection to use. Is turned into a regex so 'events' will match 'events_20150227'
  # Example collection: events_20150227 or events_
  config :collection, :validate => :string, :required => true

  # This allows you to select the method you would like to use to parse your data
  config :parse_method, :validate => :string, :default => 'flatten'

  # If not flattening you can dig to flatten select fields
  config :dig_fields, :validate => :array, :default => []

  # This is the second level of hash flattening
  config :dig_dig_fields, :validate => :array, :default => []

  # If true, store the @timestamp field in mongodb as an ISODate type instead
  # of an ISO8601 string. For more information about this, see
  # http://www.mongodb.org/display/DOCS/Dates
  config :isodate, :validate => :boolean, :default => false

  # Number of seconds to wait after failure before retrying
  config :retry_delay, :validate => :number, :default => 3, :required => false

  # If true, an "_id" field will be added to the document before insertion.
  # The "_id" field will use the timestamp of the event and overwrite an existing
  # "_id" field in the event.
  config :generateId, :validate => :boolean, :default => false

  config :unpack_mongo_id, :validate => :boolean, :default => false

  # The message string to use in the event.
  config :message, :validate => :string, :default => "Default message..."

  # Set how frequently messages should be sent.
  # The default, `1`, means send a message every second.
  config :interval, :validate => :number, :default => 1

  SINCE_TABLE = :since_table

  public
  def init_placeholder_table(sqlitedb)
    begin
      sqlitedb.create_table "#{SINCE_TABLE}" do
        String :table
        Int :place
      end
    rescue
      @logger.debug("since table already exists")
    end
  end

  public
  def init_placeholder(sqlitedb, since_table, mongodb, mongo_collection_name)
    @logger.debug("init placeholder for #{since_table}_#{mongo_collection_name}")
    since = sqlitedb[SINCE_TABLE]
    mongo_collection = mongodb.collection(mongo_collection_name)
    first_entry = mongo_collection.find({}).sort(since_column => 1).limit(1).first
    first_entry_id = ''
    if since_type == 'id'
      first_entry_id = first_entry[since_column].to_s
    else
      first_entry_id = first_entry[since_column].to_i
    end
    since.insert(:table => "#{since_table}_#{mongo_collection_name}", :place => first_entry_id)
    @logger.info("init placeholder for #{since_table}_#{mongo_collection_name}: #{first_entry}")
    return first_entry_id
  end

  # Changed: also returns is_first_init (1 = the placeholder was just created)
  public
  def get_placeholder(sqlitedb, since_table, mongodb, mongo_collection_name)
    since = sqlitedb[SINCE_TABLE]
    x = since.where(:table => "#{since_table}_#{mongo_collection_name}")
    if x[:place].nil? || x[:place] == 0
      first_entry_id = init_placeholder(sqlitedb, since_table, mongodb, mongo_collection_name)
      @logger.debug("FIRST ENTRY ID for #{mongo_collection_name} is #{first_entry_id}")
      return first_entry_id, 1
    else
      @logger.debug("placeholder already exists, it is #{x[:place]}")
      return x[:place][:place], 0
    end
  end

  public
  def update_placeholder(sqlitedb, since_table, mongo_collection_name, place)
    #@logger.debug("updating placeholder for #{since_table}_#{mongo_collection_name} to #{place}")
    since = sqlitedb[SINCE_TABLE]
    since.where(:table => "#{since_table}_#{mongo_collection_name}").update(:place => place)
  end

  public
  def get_all_tables(mongodb)
    return @mongodb.collection_names
  end

  public
  def get_collection_names(mongodb, collection)
    collection_names = []
    @mongodb.collection_names.each do |coll|
      # `collection` is used as an unanchored regex, so passing "A|B|C" syncs several
      # collections: every collection whose name contains A, B or C matches.
      if /#{collection}/ =~ coll
        collection_names.push(coll)
        @logger.debug("Added #{coll} to the collection list as it matches our collection search")
      end
    end
    return collection_names
  end

  # Changed: the first query after the placeholder is created uses $gte, so the
  # earliest document (whose _id is the placeholder itself) is included.
  public
  def get_cursor_for_collection(mongodb, is_first_init, mongo_collection_name, last_id_object, batch_size)
    collection = mongodb.collection(mongo_collection_name)
    if is_first_init == 1
      operator = :$gte   # first query: include the document the placeholder points at
    else
      operator = :$gt    # later queries: only documents newer than the placeholder
    end
    # Sort by _id so the last document of each batch really is the newest one read;
    # otherwise documents returned out of _id order could be skipped.
    return collection.find({:_id => {operator => last_id_object}}).sort(:_id => 1).limit(batch_size)
  end

  public
  def update_watched_collections(mongodb, collection, sqlitedb)
    collections = get_collection_names(mongodb, collection)
    collection_data = {}
    collections.each do |my_collection|
      init_placeholder_table(sqlitedb)
      # Changed: keep the is_first_init flag alongside last_id
      last_id, is_first_init = get_placeholder(sqlitedb, since_table, mongodb, my_collection)
      if !collection_data[my_collection]
        collection_data[my_collection] = { :name => my_collection, :last_id => last_id, :is_first_init => is_first_init }
      end
    end
    return collection_data
  end

  public
  def register
    require "jdbc/sqlite3"
    require "sequel"
    placeholder_db_path = File.join(@placeholder_db_dir, @placeholder_db_name)
    conn = Mongo::Client.new(@uri)

    @host = Socket.gethostname
    @logger.info("Registering MongoDB input")

    @mongodb = conn.database
    @sqlitedb = Sequel.connect("jdbc:sqlite:#{placeholder_db_path}")

    # Should check to see if there are new matching tables at a predefined interval or on some trigger
    @collection_data = update_watched_collections(@mongodb, @collection, @sqlitedb)
  end # def register

  class BSON::OrderedHash
    def to_h
      inject({}) { |acc, element| k,v = element; acc[k] = (if v.class == BSON::OrderedHash then v.to_h else v end); acc }
    end

    def to_json
      JSON.parse(self.to_h.to_json, :allow_nan => true)
    end
  end

  def flatten(my_hash)
    new_hash = {}
    @logger.debug("Raw Hash: #{my_hash}")
    if my_hash.respond_to? :each
      my_hash.each do |k1,v1|
        if v1.is_a?(Hash)
          v1.each do |k2,v2|
            if v2.is_a?(Hash)
              # Nested hash: flatten it recursively and prefix its keys
              result = flatten(v2)
              result.each do |k3,v3|
                new_hash[k1.to_s+"_"+k2.to_s+"_"+k3.to_s] = v3
              end
            else
              new_hash[k1.to_s+"_"+k2.to_s] = v2
            end
          end
        else
          new_hash[k1.to_s] = v1
        end
      end
    else
      @logger.debug("Flatten [ERROR]: hash did not respond to :each")
    end
    @logger.debug("Flattened Hash: #{new_hash}")
    return new_hash
  end

  def run(queue)
    sleep_min = 0.01
    sleep_max = 5
    sleeptime = sleep_min

    @logger.debug("Tailing MongoDB")
    @logger.debug("Collection data is: #{@collection_data}")

    while true && !stop?
      begin
        @collection_data.each do |index, collection|
          collection_name = collection[:name]
          @logger.debug("collection_data is: #{@collection_data}")
          last_id = @collection_data[index][:last_id]
          # Changed: read the first-run flag
          is_first_init = @collection_data[index][:is_first_init]
          @logger.debug("is_first_init is #{is_first_init}")
          #@logger.debug("last_id is #{last_id}", :index => index, :collection => collection_name)

          # get batch of events starting at the last_place if it is set
          last_id_object = last_id
          if since_type == 'id'
            last_id_object = BSON::ObjectId(last_id)
          elsif since_type == 'time'
            if last_id != ''
              last_id_object = Time.at(last_id)
            end
          end
          cursor = get_cursor_for_collection(@mongodb, is_first_init, collection_name, last_id_object, batch_size)
          # Changed: after the first (inclusive) query, fall back to the exclusive one
          if is_first_init == 1
            @collection_data[index][:is_first_init] = 0
          end

          cursor.each do |doc|
            logdate = DateTime.parse(doc['_id'].generation_time.to_s)
            event = LogStash::Event.new("host" => @host)
            decorate(event)
            event.set("logdate",logdate.iso8601.force_encoding(Encoding::UTF_8))
            # Stringify _id in a copy of the document before serialising it
            log_entry = doc.to_h.merge('_id' => doc['_id'].to_s)
            event.set("log_entry",log_entry.to_s.force_encoding(Encoding::UTF_8))
            event.set("mongo_id",doc['_id'].to_s)
            # Changed: record the source collection so that, when several collections are synced,
            # the output can route each one to its own Elasticsearch index (e.g. index => "%{collection_name}")
            event.set("collection_name",collection_name)
            @logger.debug("mongo_id: "+doc['_id'].to_s)
            #@logger.debug("EVENT looks like: "+event.to_s)
            #@logger.debug("Sent message: "+doc.to_h.to_s)

            # Extract the HOST_ID and PID from the MongoDB BSON::ObjectID
            if @unpack_mongo_id
              doc_hex_bytes = doc['_id'].to_s.each_char.each_slice(2).map {|b| b.join.to_i(16) }
              doc_obj_bin = doc_hex_bytes.pack("C*").unpack("a4 a3 a2 a3")
              host_id = doc_obj_bin[1].unpack("S")
              process_id = doc_obj_bin[2].unpack("S")
              event.set('host_id',host_id.first.to_i)
              event.set('process_id',process_id.first.to_i)
            end

            if @parse_method == 'flatten'
              # Flatten the JSON so that the data is usable in Kibana
              flat_doc = flatten(doc)
              # Check for different types of expected values and add them to the event
              if flat_doc['info_message'] && (flat_doc['info_message'] =~ /collection stats: .+/)
                # Some custom stuff I'm having to do to fix formatting in past logs...
                sub_value = flat_doc['info_message'].sub("collection stats: ", "")
                JSON.parse(sub_value).each do |k1,v1|
                  flat_doc["collection_stats_#{k1.to_s}"] = v1
                end
              end

              flat_doc.each do |k,v|
                @logger.debug("key: #{k.to_s} value: #{v.to_s}")
                if v.is_a? Numeric
                  event.set(k.to_s,v)
                elsif v.is_a? Time
                  event.set(k.to_s,v.iso8601)
                elsif v.is_a? String
                  if v == "NaN"
                    event.set(k.to_s, Float::NAN)
                  elsif /\A[-+]?\d+[.][\d]+\z/ === v   # was "==", which never matches a String
                    event.set(k.to_s, v.to_f)
                  elsif /\A[-+]?\d+\z/ === v
                    event.set(k.to_s, v.to_i)
                  else
                    event.set(k.to_s, v)
                  end
                else
                  if k.to_s == "_id" || k.to_s == "tags"
                    event.set(k.to_s, v.to_s)
                  end
                  if (k.to_s == "tags") && (v.is_a? Array)
                    event.set('tags',v)
                  end
                end
              end
            elsif @parse_method == 'dig'
              # Dig into the JSON and flatten select elements
              doc.each do |k, v|
                if k != "_id"
                  if (@dig_fields.include? k) && (v.respond_to? :each)
                    v.each do |kk, vv|
                      if (@dig_dig_fields.include? kk) && (vv.respond_to? :each)
                        vv.each do |kkk, vvv|
                          if /\A[-+]?\d+\z/ === vvv
                            event.set("#{k}_#{kk}_#{kkk}",vvv.to_i)
                          else
                            event.set("#{k}_#{kk}_#{kkk}", vvv.to_s)
                          end
                        end
                      else
                        if /\A[-+]?\d+\z/ === vv
                          event.set("#{k}_#{kk}", vv.to_i)
                        else
                          event.set("#{k}_#{kk}",vv.to_s)
                        end
                      end
                    end
                  else
                    if /\A[-+]?\d+\z/ === v
                      event.set(k,v.to_i)
                    else
                      event.set(k,v.to_s)
                    end
                  end
                end
              end
            elsif @parse_method == 'simple'
              doc.each do |k, v|
                if v.is_a? Numeric
                  event.set(k, v.abs)
                elsif v.is_a? Array
                  event.set(k, v)
                elsif v == "NaN"
                  event.set(k, Float::NAN)
                else
                  event.set(k, v.to_s)
                end
              end
            end

            queue << event

            since_id = doc[since_column]
            if since_type == 'id'
              since_id = doc[since_column].to_s
            elsif since_type == 'time'
              since_id = doc[since_column].to_i
            end
            @collection_data[index][:last_id] = since_id
          end
          # Store the last-seen doc in the database
          update_placeholder(@sqlitedb, since_table, collection_name, @collection_data[index][:last_id])
        end
        @logger.debug("Updating watch collections")
        @collection_data = update_watched_collections(@mongodb, @collection, @sqlitedb)

        # nothing found in that iteration
        # sleep a bit
        @logger.debug("No new rows. Sleeping.", :time => sleeptime)
        sleeptime = [sleeptime * 2, sleep_max].min
        sleep(sleeptime)
      rescue => e
        @logger.warn('MongoDB Input threw an exception, restarting', :exception => e)
      end
    end
  end # def run

  def close
    # If needed, use this to tidy up on shutdown
    @logger.debug("Shutting down...")
  end
end # class LogStash::Inputs::MongoDB
```
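For multi-collection sync, the `collection` setting is interpolated into an unanchored regex in `get_collection_names`. The sketch below shows what a value such as `A|B|C` selects, using made-up collection names and a hypothetical helper `matching_collections`. Each event also carries `collection_name`, so the Elasticsearch output can write each collection to its own index, for example with `index => "%{collection_name}"` (index names must be lowercase).

```ruby
# Same matching rule as get_collection_names: the setting is used as an unanchored regex.
def matching_collections(all_names, pattern)
  all_names.select { |name| /#{pattern}/ =~ name }
end

names = %w[health_records health_records_2019 users user_logs audit]
p matching_collections(names, "health_records|user")
# prints ["health_records", "health_records_2019", "users", "user_logs"]

# If only exact names should match, anchoring the alternation is one option:
p matching_collections(names, "\\A(?:health_records|users)\\z")
# prints ["health_records", "users"]
```

Because the pattern is unanchored, `health_records` also picks up `health_records_2019`. That is convenient for date-suffixed collections, but it can sync more than intended.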