深度学习的一般性流程3-------PyTorch选择优化器进行训练

深度学习的一般性流程:

1. 构建网络模型结构

2. 选择损失函数

3. 选择优化器进行训练

梯度下降法(gradient descent)是一个最优化算法,常用于机器学习和人工智能当中用来递归性地逼近最小偏差模型。

torch.optim.SGD 是随机梯度下降的优化函数

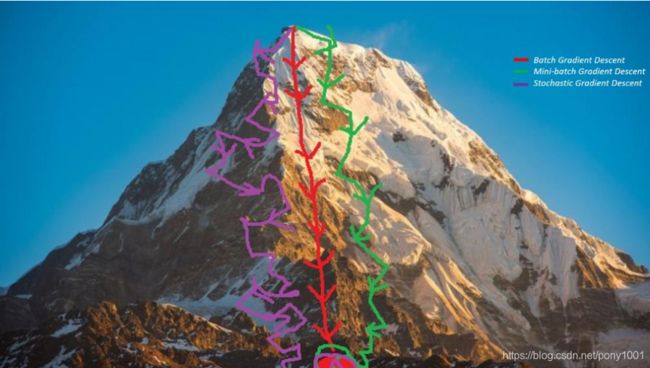

梯度下降(Gradient Descent)方法变种:

- (full) Batch gradient descent : 使用全部数据集来计算梯度,并更新所有参数。效果稳定,对于凸优化能保证全局最优,对于非凸也能保证局部最优。但由于每次更新适用所有数据,消耗内存,效率较低。

- Stochastic gradient descent : 使用单个样本计算梯度,并更新参数. 效率高,支持在线学习(增量学习)。不够稳定,波动较大。

- Mini-batch gradient descent: 选取一小部分(n个)样本来计算梯度,并更新参数。优化过程比较稳定,利用了矩阵乘法,效率较高。但需要手动设置mini-batch的值。

下图来自https://towardsdatascience.com/gradient-descent-algrithm-and-its-variants-10f652806a3

回归问题的训练代码

#--------------data--------------------

x = torch.unsqueeze(torch.linspace(-1,1,100),dim=1)

y = x.pow(2)

#--------------train-------------------

optimizer = torch.optim.SGD(mynet.parameters(),lr=0.1) #优化器

loss_func = torch.nn.MSELoss() #损失函数

for epoch in range(1000):

optimizer.zero_grad()

#forward + backward + optimize

pred = mynet(x)

loss = loss_func(pred,y)

loss.backward()

optimizer.step()

#----------------prediction---------------

test_data = torch.tensor([-1.0])

pred = mynet(test_data)

print(test_data, pred.data)分类问题的训练代码

#----------------data------------------

data_num = 100

x = torch.unsqueeze(torch.linspace(-1,1,data_num), dim=1)

y0 = torch.zeros(50)

y1 = torch.ones(50)

y = torch.cat((y0, y1), ).type(torch.LongTensor) #数据

#----------------train------------------

optimizer = torch.optim.SGD(mynet.parameters(),lr=0.1) #优化器

loss_func = torch.nn.CrossEntropyLoss() #损失函数

for epoch in range(1000):

optimizer.zero_grad()

#forward + backward + optimize

pred = mynet(x)

loss = loss_func(pred,y)

loss.backward()

optimizer.step()

#----------------prediction---------------

test_data = torch.tensor([-1.0])

pred = mynet(test_data)

print(test_data, pred.data)注意:optimizer.zero_grad() 每次做反向传播之前都要归零梯度,不然梯度会累加在一起,造成结果不收敛。

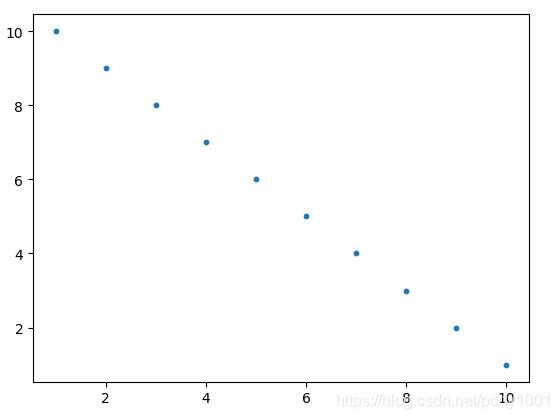

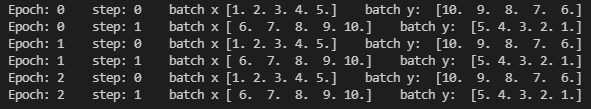

mini_batch的训练代码:

数据

import torch

import torch.utils.data as Data

import matplotlib.pyplot as plt

x = torch.linspace(1,10,10)

y = torch.linspace(10,1,10)

torch_database = Data.TensorDataset(x,y)

plt.scatter(x.data,y.data,s=10,cmap="autumn")

plt.show()

#支持批处理的操作

BATCH_SIZE = 5

loader = Data.DataLoader(

dataset = torch_database,

batch_size = BATCH_SIZE

)

for epoch in range(3):

for step,(batch_x,batch_y) in enumerate(loader):

print("Epoch:",epoch," step:",step," batch x",batch_x.numpy()," batch y: ",batch_y.numpy())高级梯度下降方法:

- Momentum冲量法:模拟物理动量的概念,积累之前的动量来替代真正的梯度。增加了同向的更新,减少了异向的更新。

- Nesterov accelerated gradient(NAG):再之前加速的梯度方向进行一个大的跳跃(棕色向量),计算梯度然后进行校正(绿色梯向量)

- Adagrad(Adaptive Gradient)<推荐>:将每一个参数的每一次迭代的梯度取平方累加再开方,用基础学习率除以这个数,来做学习率的动态更新。

- RMSprop<推荐>:为了缓解Adgrad学习率衰减过快,RMSprop对累计的信息进行衰减

- Adadelta<推荐>:Adadelta是对Adagrad的扩展,Adagrad不需要设置初始学习速率,而是利用之前的步长估计下一步的步长。

- Adam:

更详细可参考:https://blog.csdn.net/fishmai/article/details/52510826

https://blog.csdn.net/tsyccnh/article/details/76769232