[Deep Learning] Fashion-MNIST && softmax implementation (PyTorch)

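The torchvision package accompanies the PyTorch deep learning framework and is mainly used to build computer vision models.

torchvision consists mainly of the following parts:

- torchvision.datasets: functions for loading data and interfaces to common datasets;

- torchvision.models: common model architectures (including pretrained models), such as AlexNet, VGG, and ResNet;

- torchvision.transforms: common image transformations, such as cropping and rotation;

- torchvision.utils: other useful utilities.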

3.5 Image classification dataset (Fashion-MNIST)

3.5.1 Getting the dataset

import torch

import torchvision

import torchvision.transforms as transforms

import matplotlib.pyplot as plt

import time

import sys

sys.path.append("..")

import d2lzh_pytorch as d2l

mnist_train = torchvision.datasets.FashionMNIST(root='~/Datasets/FashionMNIST', train=True, download=True, transform=transforms.ToTensor())

mnist_test = torchvision.datasets.FashionMNIST(root='~/Datasets/FashionMNIST', train=False, download=True, transform=transforms.ToTensor())

print(type(mnist_train))

print(len(mnist_train), len(mnist_test))

60000 10000

feature, label = mnist_train[0]

print(feature.shape, label)

torch.Size([1, 28, 28]) 9

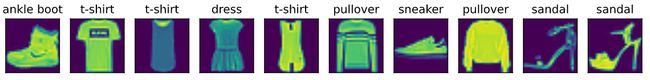

X, y = [], []

for i in range(10):
    X.append(mnist_train[i][0])
    y.append(mnist_train[i][1])

d2l.show_fashion_mnist(X, d2l.get_fashion_mnist_labels(y))
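d2l.get_fashion_mnist_labels comes from the d2lzh_pytorch helper package, whose source is not reproduced in this post. The following is a minimal sketch of what such a helper might look like, assuming the standard Fashion-MNIST class order; the name `labels_to_text` is hypothetical and is not part of d2l.

```python
# Standard Fashion-MNIST class names, indexed by numeric label (0-9).
TEXT_LABELS = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
               'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']


def labels_to_text(labels):
    """Map numeric labels to class names (hypothetical helper, not the d2l source)."""
    return [TEXT_LABELS[int(i)] for i in labels]


names = labels_to_text([9, 0, 3])
print(names)
```

Under this mapping, the label 9 printed for mnist_train[0] above corresponds to an ankle boot.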

3.5.2 Reading minibatches

batch_size = 256

if sys.platform.startswith('win'):
    num_workers = 0
else:
    num_workers = 4

train_iter = torch.utils.data.DataLoader(mnist_train, batch_size=batch_size, shuffle=True, num_workers=num_workers)

test_iter = torch.utils.data.DataLoader(mnist_test, batch_size=batch_size, shuffle=False, num_workers=num_workers)

start = time.time()

for X, y in train_iter:
    continue

print('%.2f sec' % (time.time()-start))

5.28 sec
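To see how DataLoader splits a dataset into minibatches without downloading anything, here is a small sketch on synthetic data. The tensor sizes and the dataset itself are assumptions made for illustration; only the DataLoader arguments mirror the code above.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Synthetic stand-in for Fashion-MNIST: 1000 grayscale 28x28 "images".
features = torch.rand(1000, 1, 28, 28)
labels = torch.randint(0, 10, (1000,))
dataset = TensorDataset(features, labels)

# num_workers=0 loads data in the main process, as on Windows above.
loader = DataLoader(dataset, batch_size=256, shuffle=True, num_workers=0)

# Three full batches of 256, then the remaining 232 samples.
batch_sizes = [X.shape[0] for X, y in loader]
print(batch_sizes)
```

The last batch is smaller because DataLoader keeps incomplete batches unless drop_last=True is passed.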

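Fashion-MNIST is a 10-class clothing classification dataset that is used to compare the performance of different algorithms;

Before running the code, install the tqdm and torchtext libraries;

sys.platform.startswith('win') checks whether the code is running on Windows, in which case num_workers is set to 0.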

3.6 Softmax regression from scratch

import torch

import torchvision

import numpy as np

import sys

#sys.path.append("..")

import d2lzh_pytorch as d2l

3.6.1 Getting and reading the data

batch_size = 256

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

3.6.2 Initializing model parameters

num_inputs = 784

num_outputs = 10

W = torch.tensor(np.random.normal(0,0.01, (num_inputs, num_outputs)), dtype = torch.float)

b = torch.zeros(num_outputs, dtype = torch.float)

W.requires_grad_(requires_grad=True)

b.requires_grad_(requires_grad=True)

tensor([0., 0., 0., 0., 0., 0., 0., 0., 0., 0.], requires_grad=True)

3.6.3 Implementing the softmax operation

def softmax(X):
    X_exp = X.exp()
    # dim=0 sums down each column; dim=1 sums across each row
    partition = X_exp.sum(dim=1, keepdim=True)
    return X_exp / partition

X = torch.rand((2,5))

X_Prob = softmax(X)

print(X_Prob, X_Prob.sum(dim=1))

tensor([[0.2019, 0.2636, 0.1090, 0.1379, 0.2877],

[0.3513, 0.1575, 0.1652, 0.1324, 0.1935]]) tensor([1., 1.])
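The softmax function depends on the difference between summing over dim=0 and dim=1, and on keepdim=True so that the division broadcasts row by row. A small sketch with hand-picked numbers (the tensor values are chosen here purely for illustration):

```python
import torch

X = torch.tensor([[1.0, 2.0, 3.0],
                  [4.0, 5.0, 6.0]])

col_sums = X.sum(dim=0, keepdim=True)  # shape (1, 3): one sum per column
row_sums = X.sum(dim=1, keepdim=True)  # shape (2, 1): one sum per row

# Because row_sums has shape (2, 1), broadcasting divides each row by its own sum.
normalized = X / row_sums
print(col_sums, row_sums, normalized.sum(dim=1))
```

Without keepdim=True the row sums would have shape (2,), and the division would no longer line up with the rows of X.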

3.6.4 Defining the model

def net(X):
    return softmax(torch.mm(X.view(-1, num_inputs), W) + b)
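The shapes flowing through this model can be traced with a short sketch. The batch size of 4 and the zero-valued parameters are assumptions made only to keep the example self-contained.

```python
import torch

num_inputs, num_outputs = 784, 10
W = torch.zeros(num_inputs, num_outputs)  # placeholder weights for the shape check
b = torch.zeros(num_outputs)

X = torch.rand(4, 1, 28, 28)              # a batch of 4 single-channel images
flat = X.view(-1, num_inputs)             # each image flattened to 784 values
logits = torch.mm(flat, W) + b            # b is broadcast across the 4 rows
print(flat.shape, logits.shape)
```

Each row of the result holds the 10 class scores for one image, which softmax then turns into probabilities.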

3.6.5 Defining the loss function

def cross_entory(y_hat, y):
    return - torch.log(y_hat.gather(1, y.view(-1,1)))
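The gather call is what selects, for each sample, the predicted probability of its true class. A small sketch with made-up predictions for two samples and three classes:

```python
import torch

# Made-up predicted probabilities for 2 samples over 3 classes.
y_hat = torch.tensor([[0.1, 0.3, 0.6],
                      [0.3, 0.2, 0.5]])
y = torch.tensor([0, 2])                 # true class of each sample

# For row i, pick the entry in column y[i].
picked = y_hat.gather(1, y.view(-1, 1))
loss = -torch.log(picked)                # per-sample cross-entropy, shape (2, 1)
print(picked, loss)
```

Here picked contains 0.1 and 0.5, so the first sample, whose true class received low probability, gets the larger loss.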

3.6.6 Computing classification accuracy

def accuracy(y_hat, y):
    return (y_hat.argmax(dim=1) == y).float().mean().item()

def evaluate_accuracy(data_iter, net):
    acc_sum, n = 0.0, 0
    for X, y in data_iter:
        acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
        n += y.shape[0]
    return acc_sum / n

print(evaluate_accuracy(test_iter, net))

0.1231
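An accuracy near 0.1 is roughly what random guessing over 10 classes would give, which is plausible for a model with freshly initialized parameters. To check the accuracy computation itself, here is a sketch on made-up predictions; the function is repeated so the block runs on its own.

```python
import torch


def accuracy(y_hat, y):
    # Fraction of rows whose highest-scoring class equals the true label.
    return (y_hat.argmax(dim=1) == y).float().mean().item()


# Made-up predictions: both rows predict class 2.
y_hat = torch.tensor([[0.1, 0.3, 0.6],
                      [0.3, 0.2, 0.5]])
y = torch.tensor([0, 2])   # only the second prediction is correct

acc = accuracy(y_hat, y)
print(acc)
```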

3.6.7 Training the model

num_epochs, lr = 5, 0.1

def train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size,
              params=None, lr=None, optimizer=None):
    for epoch in range(num_epochs):
        train_l_sum, train_acc_sum, n = 0.0, 0.0, 0
        for X, y in train_iter:
            y_hat = net(X)
            l = loss(y_hat, y).sum()
            # Zero the gradients
            if optimizer is not None:
                optimizer.zero_grad()
            elif params is not None and params[0].grad is not None:
                for param in params:
                    param.grad.data.zero_()
            l.backward()
            if optimizer is None:
                d2l.sgd(params, lr, batch_size)
            else:
                optimizer.step()
            train_l_sum += l.item()
            train_acc_sum += (y_hat.argmax(dim=1) == y).sum().item()
            n += y.shape[0]
        test_acc = evaluate_accuracy(test_iter, net)
        print("epoch %d, Loss %.4f, train acc %.3f, test acc %.3f" % (epoch + 1, train_l_sum / n, train_acc_sum / n, test_acc))

train_ch3(net, train_iter, test_iter, cross_entory, num_epochs, batch_size, [W, b], lr)

epoch 1, Loss 0.7861, train acc 0.750, test acc 0.789

epoch 2, Loss 0.5707, train acc 0.813, test acc 0.811

epoch 3, Loss 0.5263, train acc 0.825, test acc 0.822

epoch 4, Loss 0.5006, train acc 0.833, test acc 0.824

epoch 5, Loss 0.4849, train acc 0.837, test acc 0.829
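When no optimizer is passed, train_ch3 calls d2l.sgd, whose source is not shown in this post. The following is a minimal sketch of a minibatch SGD step, assuming the helper divides the summed gradient by the batch size; the toy parameter and loss are made up for illustration.

```python
import torch


def sgd(params, lr, batch_size):
    """Minibatch SGD step (assumed behaviour of d2l.sgd, not its actual source)."""
    for param in params:
        # Update through .data so the step itself is not tracked by autograd.
        param.data -= lr * param.grad / batch_size


w = torch.tensor([1.0, 2.0], requires_grad=True)
loss = (w ** 2).sum()
loss.backward()                  # gradient is 2 * w = [2., 4.]
sgd([w], lr=0.1, batch_size=2)   # w becomes w - 0.1 * grad / 2
print(w)
```

Dividing by batch_size matches the way train_ch3 sums the per-sample losses before calling backward, so the step uses the average gradient.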

3.6.8 Prediction

X, y = next(iter(test_iter))

true_labels = d2l.get_fashion_mnist_labels(y.numpy())

pred_labels = d2l.get_fashion_mnist_labels(net(X).argmax(dim=1).numpy())

titles = [true + '\n ' + pred for true, pred in zip(true_labels, pred_labels)]

d2l.show_fashion_mnist(X[0:9], titles[0:9])

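Softmax regression can be used for multi-class classification. Training it follows much the same steps as training linear regression:

get and read the data, define the model and the loss function, and train the model with an optimization algorithm.

In fact, the vast majority of deep learning models are trained with similar steps.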

3.7 Concise implementation of softmax regression

from torch import nn

from torch.nn import init

3.7.1 Getting and reading the data

batch_size = 256

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

3.7.2 Defining and initializing the model

num_inputs = 784

num_outputs = 10

class LinearNet(nn.Module):
    def __init__(self, num_inputs, num_outputs):
        super(LinearNet, self).__init__()
        self.linear = nn.Linear(num_inputs, num_outputs)

    def forward(self, x):
        y = self.linear(x.view(x.shape[0], -1))
        return y

net = LinearNet(num_inputs, num_outputs)

class FlattenLayer(nn.Module):
    def __init__(self):
        super(FlattenLayer, self).__init__()

    def forward(self, x):
        return x.view(x.shape[0], -1)

from collections import OrderedDict

net = nn.Sequential(
    OrderedDict([
        ('flatten', FlattenLayer()),
        ('linear', nn.Linear(num_inputs, num_outputs))
    ])
)
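Recent PyTorch versions ship a built-in nn.Flatten layer that does the same reshaping as the hand-written FlattenLayer. The following sketch is an alternative, not the approach used in this post, and the batch of 4 random images is an assumption for the shape check.

```python
import torch
from torch import nn

num_inputs, num_outputs = 784, 10

# nn.Flatten keeps the batch dimension and flattens the rest.
alt_net = nn.Sequential(
    nn.Flatten(),                        # (batch, 1, 28, 28) -> (batch, 784)
    nn.Linear(num_inputs, num_outputs),
)

out = alt_net(torch.rand(4, 1, 28, 28))
print(out.shape)
```

Without the OrderedDict names, the linear layer would be reached by index (alt_net[1]) rather than by attribute.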

init.normal_(net.linear.weight, mean=0, std=0.01)  # normal distribution

init.constant_(net.linear.bias, val = 0)

Parameter containing:

tensor([0., 0., 0., 0., 0., 0., 0., 0., 0., 0.], requires_grad=True)

3.7.3 Softmax and cross-entropy loss

loss = nn.CrossEntropyLoss()

3.7.4 Defining the optimization algorithm

optimizer = torch.optim.SGD(net.parameters(), lr = 0.1)

3.7.5 Training the model

num_epochs, lr = 5, 0.1

train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size, None, None, optimizer)

epoch 1, Loss 0.0031, train acc 0.748, test acc 0.783

epoch 2, Loss 0.0022, train acc 0.812, test acc 0.802

epoch 3, Loss 0.0021, train acc 0.825, test acc 0.816

epoch 4, Loss 0.0020, train acc 0.833, test acc 0.813

epoch 5, Loss 0.0019, train acc 0.836, test acc 0.826
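The losses printed here are roughly 256 times smaller than those in section 3.6, while the accuracies are similar. A likely explanation is that nn.CrossEntropyLoss averages over the batch by default, and train_ch3 then divides by the number of samples a second time. The sketch below illustrates the two reduction modes on random inputs; the logits and targets are made up.

```python
import torch
from torch import nn

torch.manual_seed(0)
logits = torch.randn(256, 10)             # made-up scores for a batch of 256
targets = torch.randint(0, 10, (256,))

mean_loss = nn.CrossEntropyLoss()(logits, targets)                 # default reduction='mean'
sum_loss = nn.CrossEntropyLoss(reduction='sum')(logits, targets)   # sum over the batch

# The mean equals the sum divided by the batch size.
print(mean_loss.item(), sum_loss.item() / 256)
```

Under this reading, passing reduction='sum' would make the printed loss comparable to the from-scratch version, which sums per-sample losses before dividing by n.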

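The nn.CrossEntropyLoss() function provided by PyTorch combines the softmax operation and the cross-entropy loss and generally has better numerical stability; defining the softmax operation and the cross-entropy loss separately by hand can cause numerical instability.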
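The instability can be reproduced with a sketch that feeds deliberately extreme scores to a hand-written softmax and to the fused loss. The logits below are an artificial worst case chosen for illustration.

```python
import torch
import torch.nn.functional as F

logits = torch.tensor([[1000.0, 0.0, -1000.0]])  # artificially extreme scores
target = torch.tensor([0])

# Hand-written softmax: exp(1000) overflows to inf, and inf / inf gives nan.
naive = logits.exp() / logits.exp().sum(dim=1, keepdim=True)

# The fused loss works in log space and stays finite.
stable = F.cross_entropy(logits, target)
print(naive, stable)
```

The first entry of the hand-written result is nan, so taking its log would poison the loss, whereas the fused version returns a finite value.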
