OpenAI Gym--Classical Control 环境详解

OpenAI Gym-Toy Examples

- 概述

- 一、Classic Control参数

- 1.1 CartPole-v1

- 1.2 Acrobot-v1

- 1.3 MountainCar-v0

- 1.4 MountainCarContinuous-v0

- 1.5 Pendulum-v0

- 二、查看gym中Classic Control的信息

- 三、总结

概述

OpenAI Gym Lists

OpenAI Gym Github

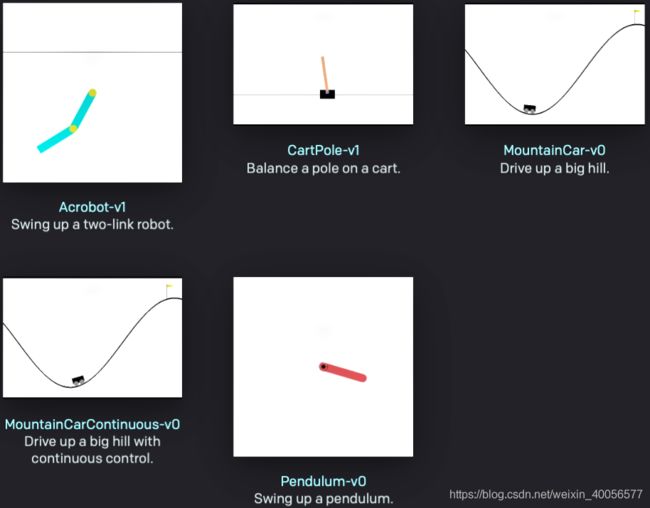

OpenAI Gym中Classical Control一共有五个环境,都是检验复杂算法work的toy examples,稍微理解环境的写法以及一些具体参数。比如state、action、reward的类型,是离散还是连续,数值范围,环境意义,任务结束的标志,reward signal的给予等等。

一、Classic Control参数

目标:通过移动Cart,维持Pole的稳定

1.1 CartPole-v1

- 四维的状态:类型是gym中定义的Box(4,0)

| Num | Meaning | [ M i n , M a x ] [Min,Max] [Min,Max] |

|---|---|---|

| 0 | Cart Position x x x | [-4.8,4.8] |

| 1 | Cart Velocity x ′ x' x′ | [-Inf,Inf] |

| 2 | Pole Angle θ \theta θ | [-24deg,24deg] |

| 3 | Pole Velocity At Tip θ ′ \theta' θ′ | [-Inf,Inf] |

- 二维的离散动作:类型是gym中定义的Discrete

| Num | Meaning |

|---|---|

| 0 | Push Cart Left |

| 1 | Push Cart Right |

-

Reward Signal

每一个timestep reward +1,直到Termination。意思就是Pole初始化的时候是满足Angles的,所以得到reward + 1。当它fail时,得到的reward为0,而且episode end,因此这样设计reward的方式就是希望Pole尽可能地维持稳定状态,并且不超过Cart Position。 -

Termination的条件

- Pole Angle 超过12 degrees

- Cart Position 超过[-2.4,2.4]

- Episode length 超过200

- 进行100次Trials,Average Return大于200时,问题解决!

可能会有一些问题:

5. Cart、Pole的质量多少?Pole的长度是多少?

6. Action这两个左右动作施加了多少力?是怎么衡量degrees的?

7. 为什么结束的条件是2.4而不是4.8?怎么表达12度时Terminate?

作为小白入门,确实是想知道一些问题背景以及物理意义的,就是相当于问环境怎么写。如果想深入toy examples的环境,建议看Classic Control的环境源码进行学习。

https://github.com/openai/gym/blob/master/gym/envs/classic_control/cartpole.py

1.2 Acrobot-v1

目标:通过施加力矩,使得Link1与Link2之间的end-effector超过base一个关节长度

有两个关节,Link 1与Link 2,其中驱动器在Link1与Link2之间,只有一个施加力的end-effector即动作,所以要记录的状态量有四个:Link 1相对于竖直向下方向的角度 θ 1 \theta_1 θ1,Link2相对于Link1的角度 θ 2 \theta_2 θ2及其角速度 θ 1 ′ \theta_1' θ1′, θ 2 ′ \theta_2' θ2′

- 六维的状态:类型是gym中的Box(6,)

初始状态为[1,0,1,0,0,0],即完全竖直向下,用sin与cos表示角度,因此范围都是 [ − 1 , 1 ] [-1,1] [−1,1],至于角速度就在源码中定义了。

| Num | Meaning | [ M i n , M a x ] [Min,Max] [Min,Max] |

|---|---|---|

| 0 | c o s θ 1 cos\theta_1 cosθ1 | [ − 1 , 1 ] [-1,1] [−1,1] |

| 1 | s i n θ 1 sin\theta_1 sinθ1 | [ − 1 , 1 ] [-1,1] [−1,1] |

| 2 | c o s θ 2 cos\theta_2 cosθ2 | [ − 1 , 1 ] [-1,1] [−1,1] |

| 3 | s i n θ 2 sin\theta_2 sinθ2 | [ − 1 , 1 ] [-1,1] [−1,1] |

| 4 | θ 1 ′ \theta'_1 θ1′角速度 | [ − 4 π , 4 π ] [-4\pi,4\pi] [−4π,4π] |

| 5 | θ 2 ′ \theta'_2 θ2′角速度 | [ − 9 π , 9 π ] [-9\pi,9\pi] [−9π,9π] |

- 三维的离散动作:类型是gym中定义的Discrete(3,)

| Num | Meaning |

|---|---|

| 0 | +1的力矩torque |

| 1 | 0的力矩 |

| 2 | -1的力矩torque |

-

Reward Signal

没完成目标时,reward = -1 ;完成目标后,reward = 0。目标为,通过施力使得Link1与Line2之间的关节点位置高于base一个关节长度。 -

Termination的条件:

- 任务完成时结束,达成目标,reward=0

- 超过episode最大长度时结束

https://github.com/openai/gym/blob/master/gym/envs/classic_control/acrobot.py

1.3 MountainCar-v0

目标:使小车运动到旗帜的位置即goal position ≥ 0.5 \ge 0.5 ≥0.5的地方

- 二维的状态:类型是gym中的Box(2,)

初始状态Car Position为 [ − 0.6 , 0.4 ] [-0.6,0.4] [−0.6,0.4],Car Velocity为0

| Num | Meaning | [ M i n , M a x ] [Min,Max] [Min,Max] |

|---|---|---|

| 0 | Car Position | [ − 1.2 , 0.6 ] [-1.2,0.6] [−1.2,0.6] |

| 1 | Car Velocity | [ − 0.07 , 0.07 ] [-0.07,0.07] [−0.07,0.07] |

- 三维的离散动作:类型是gym中的Discrete(3,)

| Num | Meaning |

|---|---|

| 0 | 向左加速 |

| 1 | 不加速 |

| 2 | 向右加速 |

-

Reward Signal

当小车位置 ≤ 0.5 \le0.5 ≤0.5时,reward = -1;当小车到达0.5时,reward = 0; -

Termination的条件

- Car Position ≥ 0.5 \ge 0.5 ≥0.5

- Episode的长度大于200

https://github.com/openai/gym/blob/master/gym/envs/classic_control/mountain_car.py

1.4 MountainCarContinuous-v0

连续动作版本的MountainCar,但旗帜位置在goal position ≥ 0.45 \ge 0.45 ≥0.45的地方

- 二维的状态:类型是gym中的Box(2,)

| Num | Meaning | [ M i n , M a x ] [Min,Max] [Min,Max] |

|---|---|---|

| 0 | Car Position | [ − 1.2 , 0.6 ] [-1.2,0.6] [−1.2,0.6] |

| 1 | Car Velocity | [ − 0.07 , 0.07 ] [-0.07,0.07] [−0.07,0.07] |

- 一维的连续动作:类型是gym中的Box(1,)

动作变成是施加力的值,而不是向左加速,不加速,向右加速,实际上向左加速可以看成是向左施加一单位的力即force

| Num | Meaning | [ M i n , M a x ] [Min,Max] [Min,Max] |

|---|---|---|

| 0 | Car Force | [ − 1.0 , 1.0 ] [-1.0,1.0] [−1.0,1.0] |

- Reward Signal

此处Reward就需要进行设计了,当小车成功Reach Goal的时候,Reward = 100;当小车每个timestep都没达到目标时: r t + 1 = r t − 0.1 a 2 , r 0 = 0 r_{t+1}=r_t-0.1a^2,r_0=0 rt+1=rt−0.1a2,r0=0

结束条件与离散的Mountain Car类似。

https://github.com/openai/gym/blob/master/gym/envs/classic_control/continuous_mountain_car.py

1.5 Pendulum-v0

目标:使倒立摆向上甩,然后保持竖直状态

描述倒立摆只需要一个与竖直向下的角度 θ 1 \theta_1 θ1以及角速度 θ 1 ′ \theta_1' θ1′,同样用cos与sin对角度进行描述,然后动作是end-effector是一个力矩torque,该环境描述为一维连续动作值。

- 三维的状态:类型是gym中的Box(3,)

| Num | Meaning | [ M i n , M a x ] [Min,Max] [Min,Max] |

|---|---|---|

| 0 | c o s θ 1 cos\theta_1 cosθ1 | [-1,1] |

| 1 | s i n θ 1 sin\theta_1 sinθ1 | [-1,1] |

| 2 | θ 1 ′ \theta_1' θ1′ | [-8,8] |

- 一维的连续动作:类型是gym中的Box(1,)

| Num | Meaning | [ M i n , M a x ] [Min,Max] [Min,Max] |

|---|---|---|

| 0 | Torque | [ − 2.0 , 2.0 ] [-2.0,2.0] [−2.0,2.0] |

- Reward Signal

同样的,此处的Reward也需要设计,假设动作值为 u ∈ [ − 2.0 , 2.0 ] u\in [-2.0,2.0] u∈[−2.0,2.0]: r t = θ t 2 + 0.1 × θ t ′ 2 + 0.001 u 2 r_t = \theta_t^2+0.1\times\theta_t'^2+0.001u^2 rt=θt2+0.1×θt′2+0.001u2

所以reward由角度的平方、角速度的平方以及动作值决定。

当Pendulum越往上,角度越大,reward越大,从而鼓励Pendulum往上摆;角速度越大,reward越大,从而鼓励Pendulum快速往上摆;动作值越大,摆动的速度也就越大,从而加速Pendulum往上摆。系数的大小说明了,角度更重要,其次是角速度,最后才是动作值。因为摆过头,角度 θ \theta θ就下降得快,reward就跌得快。理想状态下,角度为180度时,角速度为0,动作值为0时,reward达到最大。

要注意Pendulum没有=终止条件!

二、查看gym中Classic Control的信息

import gym

from gym.spaces import Box, Discrete

classic_control_envs = ['MountainCarContinuous-v0', 'CartPole-v1', 'MountainCar-v0', 'Acrobot-v1','Pendulum-v0']

for env_name in classic_control_envs:

env = gym.make(env_name)

initial_state = env.reset()

action = env.action_space.sample()

next_state, reward, done, info = env.step(action)

state_type = env.observation_space

state_shape = env.observation_space.shape

state_high,state_low = env.observation_space.high, env.observation_space.low

act_type = env.action_space

if isinstance(act_type, Box):

act_shape = env.action_space.shape

act_high = env.action_space.high

act_low = env.action_space.low

elif isinstance(act_type, Discrete):

act_shape = env.action_space.n

print(env_name)

print('reward:{0}, info:{1}'.format(reward, info))

print('s_0:{0}\n a_0:{1}\n s_1:{2}'.format(initial_state, action, next_state)) #初始状态与动作

print('state_shape:{0}, state_type:{1}\n act_shape:{2}, act_type:{3}'.format(state_shape, state_type, act_shape, act_type)) # 状态与动作的维度与类型

print('state_high:{0}, state_low:{1}'.format(state_high, state_low)) #状态取值范围

if isinstance(act_type, Box):

print('act_high:{0}, act_low:{1}'.format(act_high, act_low))

print('reward_range:{0}\n'.format(env.reward_range))

# output

MountainCarContinuous-v0

reward:-0.010385887875339339, info:{}

s_0:[-0.56134546 0. ]

a_0:[0.32227144]

s_1:[-0.56057956 0.0007659 ]

state_shape:(2,), state_type:Box(2,)

act_shape:(1,), act_type:Box(1,)

state_high:[0.6 0.07], state_low:[-1.2 -0.07]

act_high:[1.], act_low:[-1.]

reward_range:(-inf, inf)

CartPole-v1

reward:1.0, info:{}

s_0:[0.04742338 0.00526253 0.04165975 0.01950199]

a_0:0

s_1:[ 0.04752863 -0.19043134 0.04204979 0.32503253]

state_shape:(4,), state_type:Box(4,)

act_shape:2, act_type:Discrete(2)

state_high:[4.8000002e+00 3.4028235e+38 4.1887903e-01 3.4028235e+38], state_low:[-4.8000002e+00 -3.4028235e+38 -4.1887903e-01 -3.4028235e+38]

reward_range:(-inf, inf)

MountainCar-v0

reward:-1.0, info:{}

s_0:[-0.59964167 0. ]

a_0:0

s_1:[-6.00076278e-01 -4.34612310e-04]

state_shape:(2,), state_type:Box(2,)

act_shape:3, act_type:Discrete(3)

state_high:[0.6 0.07], state_low:[-1.2 -0.07]

reward_range:(-inf, inf)

Acrobot-v1

reward:-1.0, info:{}

s_0:[ 0.99721319 -0.0746046 0.9999644 0.00843852 -0.0879928 0.0643567 ]

a_0:1

s_1:[ 0.99661934 -0.08215768 0.99992096 0.01257248 0.01377026 -0.02470094]

state_shape:(6,), state_type:Box(6,)

act_shape:3, act_type:Discrete(3)

state_high:[ 1. 1. 1. 1. 12.566371 28.274334], state_low:[ -1. -1. -1. -1. -12.566371 -28.274334]

reward_range:(-inf, inf)

Pendulum-v0

reward:-0.6898954731489645, info:{}

s_0:[0.67970183 0.73348853 0.30016823]

a_0:[1.6827022]

s_1:[0.63824897 0.76983001 1.10268995]

state_shape:(3,), state_type:Box(3,)

act_shape:(1,), act_type:Box(1,)

state_high:[1. 1. 8.], state_low:[-1. -1. -8.]

act_high:[2.], act_low:[-2.]

reward_range:(-inf, inf)

gym的常规交互

import gym

env = gym.make('CartPole-v0')

for i_episode in range(20):

observation = env.reset() #The process gets started by calling reset(), which returns an initial observation.

for t in range(100):

env.render()

print(observation)

action = env.action_space.sample()

observation, reward, done, info = env.step(action)

if done:

print("Episode finished after {} timesteps".format(t+1))

break

env.close()

三、总结

| 环境名 | 状态 | 动作 | 奖励(normal/end) |

|---|---|---|---|

| CartPole-v1 | Box(4,) | Discrete(2,) | +1/0 |

| Acrobot-v1 | Box(6,) | Discrete(3,) | -1/0 |

| MountainCar-v0 | Box(2,) | Discrete(3,) | -1/0 |

| MountainCarContinuous-v0 | Box(2,) | Box(1,) | Design |

| Pendulum-v0 | Box(3,) | Box(1,) | Design |