[PyTorch] A simple model for CIFAR-10 classification (Part 2): building a slightly more complex model

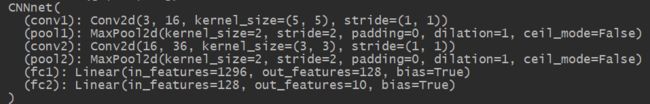

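The previous post used the example network from the official PyTorch tutorial. For this post I followed a book and swapped in a different network, as follows:

The code is as follows: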

    class CNNnet(nn.Module):
        def __init__(self):
            super(CNNnet, self).__init__()
            self.conv1 = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=5, stride=1)
            self.pool1 = nn.MaxPool2d(kernel_size=2, stride=2)
            self.conv2 = nn.Conv2d(in_channels=16, out_channels=36, kernel_size=3, stride=1)
            self.pool2 = nn.MaxPool2d(kernel_size=2, stride=2)
            self.fc1 = nn.Linear(1296, 128)  # 1296 = 36 * 6 * 6
            self.fc2 = nn.Linear(128, 10)

        def forward(self, x):
            x = self.pool1(F.relu(self.conv1(x)))
            x = self.pool2(F.relu(self.conv2(x)))
            x = x.view(-1, 36 * 6 * 6)  # flatten to (batch, 1296)
            # Note: ReLU is also applied to the 10 output values here
            x = F.relu(self.fc2(F.relu(self.fc1(x))))
            return x

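Where does 36*6*6 come from? It is C*H*W: 36 is the number of output channels of conv2, and H and W are both 6. H and W are computed with the formula given in this post: [pytorch] Formula for the output size of a convolutional layer and computing the weight size of a grouped convolution https://mp.csdn.net/console/editor/html/107954603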
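As a quick check of that arithmetic, here is a minimal sketch that walks a 32x32 CIFAR-10 input through the four layers above. The helper name `out_size` is made up for illustration, and it assumes the usual formula floor((size + 2*padding - kernel) / stride) + 1 with no dilation.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output height/width of a conv or pooling layer (dilation assumed to be 1)."""
    return (size + 2 * padding - kernel) // stride + 1

size = 32  # CIFAR-10 images are 32x32
sizes = []
# (kernel, stride) for conv1, pool1, conv2, pool2 as defined in CNNnet
for kernel, stride in [(5, 1), (2, 2), (3, 1), (2, 2)]:
    size = out_size(size, kernel, stride)
    sizes.append(size)

flat_features = 36 * size * size  # 36 output channels from conv2
print(sizes, flat_features)  # [28, 14, 12, 6] 1296
```

The final value matches the input size of `fc1`, which is why `nn.Linear(1296, 128)` lines up with `x.view(-1, 36*6*6)`.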

So how does it perform?

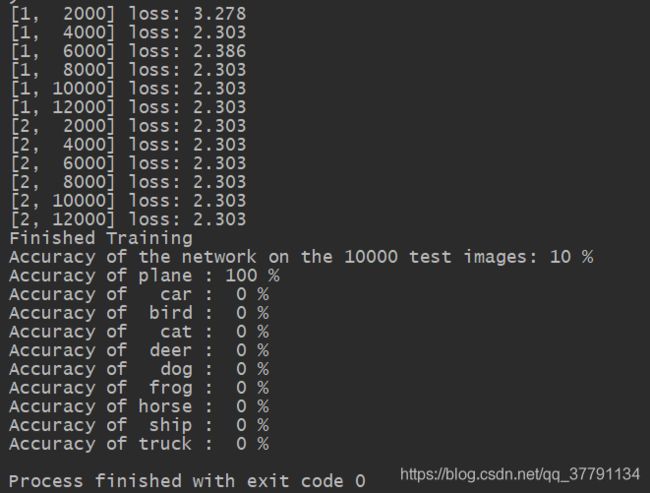

With the following explicit initialization, the result is:

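    for m in net.modules():
        if isinstance(m, nn.Conv2d):
            # Each call below overwrites the previous one, so only
            # kaiming_normal_ actually determines the conv weights.
            nn.init.normal_(m.weight)
            nn.init.xavier_normal_(m.weight)
            nn.init.kaiming_normal_(m.weight)  # conv layer initialization
            nn.init.constant_(m.bias, 0)
        elif isinstance(m, nn.Linear):
            nn.init.normal_(m.weight)  # fully connected layer initialization, N(0, 1)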

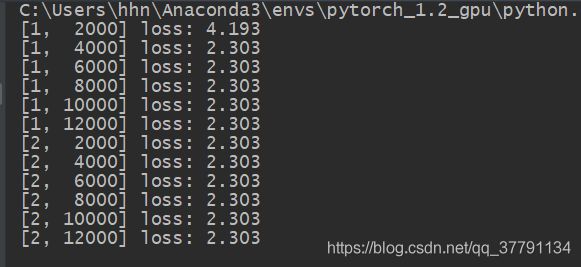

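As you can see, training seems to be stuck at a saddle point: the loss has stopped decreasing. So I will remove the explicit initialization and try again.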

That really did help: the loss finally has a value, although it stays a little above 2. After 2 epochs:

Accuracy of the network on the 10000 test images: 9 %

Accuracy of plane : 0 %

Accuracy of car : 89 %

Accuracy of bird : 0 %

Accuracy of cat : 0 %

Accuracy of deer : 0 %

Accuracy of dog : 0 %

Accuracy of frog : 4 %

Accuracy of horse : 0 %

Accuracy of ship : 0 %

Accuracy of truck : 0 %

For epoch=10, this time with the explicit initialization added back, the results are:

[10, 2000] loss: 1.785

[10, 4000] loss: 1.834

[10, 6000] loss: 1.833

[10, 8000] loss: 1.813

[10, 10000] loss: 1.865

[10, 12000] loss: 1.834

Finished Training

Accuracy of the network on the 10000 test images: 35 %

Accuracy of plane : 27 %

Accuracy of car : 44 %

Accuracy of bird : 12 %

Accuracy of cat : 42 %

Accuracy of deer : 38 %

Accuracy of dog : 9 %

Accuracy of frog : 47 %

Accuracy of horse : 42 %

Accuracy of ship : 64 %

Accuracy of truck : 24 %

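Worrying. This falls short of the result in the book. Only then did I notice that the initial learning rate lr was too high, so I changed it to lr=0.001.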

    optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)
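To illustrate why a learning rate that is too high can keep the loss from going down, here is a toy sketch of plain gradient descent on f(x) = x^2. It is not the CIFAR-10 setup: momentum is left out, and the function name and the two learning rates are chosen only for illustration.

```python
def gradient_descent(lr, steps=50, x0=1.0):
    """Minimize f(x) = x**2 with plain gradient descent and return the final x."""
    x = x0
    for _ in range(steps):
        grad = 2.0 * x   # derivative of x**2
        x -= lr * grad   # each step multiplies x by (1 - 2 * lr)
    return x

small = gradient_descent(lr=0.1)  # factor 0.8 per step: shrinks toward 0
large = gradient_descent(lr=1.1)  # factor -1.2 per step: magnitude grows
print(abs(small) < 1e-3, abs(large) > 1e3)  # True True
```

With the small step the iterate contracts toward the minimum, while with the large step every update overshoots by more than it corrects.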

Still the same, as follows:

Let me remove all of the explicit initialization and see:

[1, 2000] loss: 2.133

[1, 4000] loss: 1.955

[1, 6000] loss: 1.930

[1, 8000] loss: 1.907

[1, 10000] loss: 1.850

[1, 12000] loss: 1.849

[2, 2000] loss: 1.774

[2, 4000] loss: 1.805

[2, 6000] loss: 1.802

[2, 8000] loss: 1.781

[2, 10000] loss: 1.790

[2, 12000] loss: 1.796

[3, 2000] loss: 1.763

[3, 4000] loss: 1.784

[3, 6000] loss: 1.830

[3, 8000] loss: 1.771

[3, 10000] loss: 1.805

[3, 12000] loss: 1.830

[4, 2000] loss: 1.803

[4, 4000] loss: 1.805

[4, 6000] loss: 1.814

[4, 8000] loss: 1.790

[4, 10000] loss: 1.805

[4, 12000] loss: 1.805

[5, 2000] loss: 1.834

[5, 4000] loss: 1.851

[5, 6000] loss: 1.846

[5, 8000] loss: 1.836

[5, 10000] loss: 1.857

[5, 12000] loss: 1.859

[6, 2000] loss: 1.814

[6, 4000] loss: 1.811

[6, 6000] loss: 1.859

[6, 8000] loss: 1.908

[6, 10000] loss: 1.873

[6, 12000] loss: 1.857

[7, 2000] loss: 1.812

[7, 4000] loss: 1.865

[7, 6000] loss: 1.836

[7, 8000] loss: 1.873

[7, 10000] loss: 1.873

[7, 12000] loss: 1.912

[8, 2000] loss: 1.840

[8, 4000] loss: 1.880

[8, 6000] loss: 1.897

[8, 8000] loss: 1.881

[8, 10000] loss: 1.855

[8, 12000] loss: 1.882

[9, 2000] loss: 1.812

[9, 4000] loss: 1.820

[9, 6000] loss: 1.873

[9, 8000] loss: 1.824

[9, 10000] loss: 1.868

[9, 12000] loss: 1.870

[10, 2000] loss: 1.853

[10, 4000] loss: 1.842

[10, 6000] loss: 1.832

[10, 8000] loss: 1.820

[10, 10000] loss: 1.878

[10, 12000] loss: 1.838

Finished Training

Accuracy of the network on the 10000 test images: 34 %

Accuracy of plane : 13 %

Accuracy of car : 49 %

Accuracy of bird : 12 %

Accuracy of cat : 38 %

Accuracy of deer : 48 %

Accuracy of dog : 14 %

Accuracy of frog : 35 %

Accuracy of horse : 39 %

Accuracy of ship : 59 %

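Accuracy of truck : 30 %

Overall, the initial learning rate matters a lot: a poor setting can keep the loss from decreasing. The choice of initial weights matters too: it can make the loss NaN or leave it stuck at a single value.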
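To get a feel for why the weight initialization can matter this much, here is a rough sketch comparing the weight scale of `nn.init.normal_` (default std of 1.0) with the default initialization of `nn.Linear` for `fc1`, which has 1296 inputs. It assumes that recent PyTorch releases draw the default `nn.Linear` weights from roughly U(-1/sqrt(fan_in), 1/sqrt(fan_in)); the helper names are made up, and the unit-variance input is a simplifying assumption.

```python
import math

def default_linear_bound(fan_in):
    # Assumed default for nn.Linear weights in recent PyTorch: U(-b, b), b = 1/sqrt(fan_in)
    return 1.0 / math.sqrt(fan_in)

def uniform_std(bound):
    # Standard deviation of a uniform distribution on (-bound, bound)
    return bound / math.sqrt(3.0)

fan_in = 1296                      # inputs to fc1 (36 * 6 * 6)
default_std = uniform_std(default_linear_bound(fan_in))
normal_std = 1.0                   # nn.init.normal_ default std

# Rough std of one fc1 pre-activation if every input had unit second moment
pre_act_default = math.sqrt(fan_in) * default_std
pre_act_normal = math.sqrt(fan_in) * normal_std

print(round(default_std, 4), round(normal_std / default_std, 1))
print(round(pre_act_default, 3), pre_act_normal)
```

Under these assumptions the N(0, 1) weights are about 60 times larger than the default ones, so the activations feeding the next layer start out far larger as well, which is one plausible reason training behaves so differently.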

With lr = 0.001 and PyTorch's default initialization, the results are:

[10, 2000] loss: 0.367

[10, 4000] loss: 0.382

[10, 6000] loss: 0.417

[10, 8000] loss: 0.472

[10, 10000] loss: 0.452

[10, 12000] loss: 0.488

Finished Training

Accuracy of the network on the 10000 test images: 68 %

Accuracy of plane : 75 %

Accuracy of car : 78 %

Accuracy of bird : 64 %

Accuracy of cat : 50 %

Accuracy of deer : 49 %

Accuracy of dog : 65 %

Accuracy of frog : 79 %

Accuracy of horse : 71 %

Accuracy of ship : 80 %

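Accuracy of truck : 70 %

With explicit initialization plus lr=0.001, the loss stays at 2.303 and no longer decreases. Judging from the results below, the network seems to have learned only the features of plane and none of the others: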

[10, 2000] loss: 2.303

[10, 4000] loss: 2.303

[10, 6000] loss: 2.303

[10, 8000] loss: 2.303

[10, 10000] loss: 2.303

[10, 12000] loss: 2.303

Finished Training

Accuracy of the network on the 10000 test images: 10 %

Accuracy of plane : 100 %

Accuracy of car : 0 %

Accuracy of bird : 0 %

Accuracy of cat : 0 %

Accuracy of deer : 0 %

Accuracy of dog : 0 %

Accuracy of frog : 0 %

Accuracy of horse : 0 %

Accuracy of ship : 0 %

Accuracy of truck : 0 %
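The value 2.303 is ln(10), which is exactly the cross-entropy loss when all 10 outputs are equal. One possible explanation, offered here as a guess rather than a diagnosis, is that the final ReLU in `forward` clamps every output to zero. The pure-Python sketch below uses made-up negative outputs to show both effects: the loss becomes ln(10), and an argmax that breaks ties by taking the first index always picks class 0 (plane). As far as I know, `torch.max` is documented to return the first maximal index in recent versions, but treat that as an assumption.

```python
import math

def relu(values):
    return [max(0.0, v) for v in values]

def cross_entropy(logits, target):
    """Softmax cross-entropy for one sample, computed in a numerically stable way."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

def argmax_first(values):
    # Ties are resolved in favour of the lowest index
    return max(range(len(values)), key=lambda i: values[i])

# Hypothetical outputs of fc2 before the final ReLU, all negative
raw = [-3.0, -0.5, -1.2, -2.0, -0.1, -4.0, -0.7, -1.5, -2.2, -0.9]
logits = relu(raw)                      # every entry becomes 0.0
loss = cross_entropy(logits, target=4)  # same value for any target
pred = argmax_first(logits)

print(round(loss, 3), pred)  # 2.303 0
```

That pattern would produce 100 % on plane, 0 % on every other class, and 10 % overall, without the network having learned anything specific about planes.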

Full code:

    # -*- coding: utf-8 -*-
    '''
    @Time : 2020/8/11 17:14
    @Author : HHNa
    @FileName: add.image.py
    @Software: PyCharm
    '''
    # Import libraries and load the data
    import torch
    import torchvision
    import torchvision.transforms as transforms
    import torch.utils.data as data

    transform = transforms.Compose([
        transforms.ToTensor(),
        # torchvision datasets are PILImage images of range [0, 1]
        # Tensors of normalized range [-1, 1]
        transforms.Normalize((0.5, .5, .5), (.5, .5, .5))
    ])

    trainset = torchvision.datasets.CIFAR10(root=r'./data', train=True, download=False, transform=transform)
    trainloader = data.DataLoader(trainset, batch_size=4, shuffle=True, num_workers=0)
    testset = torchvision.datasets.CIFAR10(root=r'./data', train=False, download=False, transform=transform)
    testLoader = data.DataLoader(testset, batch_size=4, shuffle=False, num_workers=0)
    classes = ['plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

    import torch.nn as nn
    import torch.nn.functional as F

    # Define the network
    class CNNnet(nn.Module):
        def __init__(self):
            super(CNNnet, self).__init__()
            self.conv1 = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=5, stride=1)
            self.pool1 = nn.MaxPool2d(kernel_size=2, stride=2)
            self.conv2 = nn.Conv2d(in_channels=16, out_channels=36, kernel_size=3, stride=1)
            self.pool2 = nn.MaxPool2d(kernel_size=2, stride=2)
            self.fc1 = nn.Linear(1296, 128)  # 1296 = 36 * 6 * 6
            self.fc2 = nn.Linear(128, 10)

        def forward(self, x):
            x = self.pool1(F.relu(self.conv1(x)))
            x = self.pool2(F.relu(self.conv2(x)))
            x = x.view(-1, 36 * 6 * 6)  # flatten to (batch, 1296)
            # Note: ReLU is also applied to the 10 output values here
            x = F.relu(self.fc2(F.relu(self.fc1(x))))
            return x

    # Use the GPU if one is available, otherwise fall back to the CPU
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    net = CNNnet()
    net = net.to(device)

    # Show which layers the network defines
    print(net)

    # Take the first few layers of the model
    # print(nn.Sequential(*list(net.children()))[:4])

    # Explicit parameter initialization (disabled here)
    # for m in net.modules():
    #     if isinstance(m, nn.Conv2d):
    #         nn.init.normal_(m.weight)
    #         nn.init.xavier_normal_(m.weight)
    #         nn.init.kaiming_normal_(m.weight)  # conv layer initialization
    #         nn.init.constant_(m.bias, 0)
    #     elif isinstance(m, nn.Linear):
    #         nn.init.normal_(m.weight)  # fully connected layer initialization

    # Choose the loss function and the optimizer
    import torch.optim as optim

    criterion = nn.CrossEntropyLoss()
    optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

    # Training loop
    for epoch in range(10):  # loop over the dataset multiple times
        running_loss = 0.0
        # each batch is a list of [inputs, labels]; unpacking it directly
        # avoids shadowing the imported `data` module
        for i, (inputs, labels) in enumerate(trainloader, 0):
            inputs, labels = inputs.to(device), labels.to(device)

            # zero the parameter gradients
            optimizer.zero_grad()

            # forward + backward + optimize
            outputs = net(inputs)
            loss = criterion(outputs, labels)
            loss.backward()
            optimizer.step()

            # print statistics
            running_loss += loss.item()
            if i % 2000 == 1999:  # print every 2000 mini-batches
                print('[%d, %5d] loss: %.3f' % (epoch + 1, i + 1, running_loss / 2000))
                running_loss = 0.0

    print('Finished Training')

    # Print the total number of parameters
    # print("net has {} parameters in total".format(sum(x.numel() for x in net.parameters())))

    # Save the model
    PATH = './cifar_CNNet_epoch-10-init-weight.pth'
    torch.save(net.state_dict(), PATH)

    # Evaluate on the whole test set
    correct = 0
    total = 0
    with torch.no_grad():
        for images, labels in testLoader:
            images, labels = images.to(device), labels.to(device)
            outputs = net(images)
            _, predicted = torch.max(outputs, 1)  # index of the largest output per image
            total += labels.size(0)
            correct += (predicted == labels).sum().item()

    print('Accuracy of the network on the 10000 test images: %d %%' % (100 * correct / total))

    # Evaluate per-class accuracy
    class_correct = [0.0] * 10
    class_total = [0.0] * 10
    with torch.no_grad():
        for images, labels in testLoader:
            images, labels = images.to(device), labels.to(device)
            outputs = net(images)
            _, predicted = torch.max(outputs, 1)
            c = (predicted == labels)  # boolean tensor of shape (batch,)
            # iterate over the actual batch size instead of hard-coding 4
            for i in range(labels.size(0)):
                label = labels[i].item()
                class_correct[label] += c[i].item()
                class_total[label] += 1

    for i in range(10):
        print('Accuracy of %5s : %2d %%' % (classes[i], 100 * class_correct[i] / class_total[i]))
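The commented-out line in the listing prints the total parameter count of the network. As a cross-check that needs no PyTorch, the sketch below computes the same number by hand from the layer shapes in `CNNnet`, assuming every layer has a bias term (the default). The helper names are made up for illustration.

```python
def conv2d_params(in_channels, out_channels, kernel_size):
    # one square kernel_size x kernel_size filter per (in, out) channel pair, plus biases
    return in_channels * out_channels * kernel_size * kernel_size + out_channels

def linear_params(in_features, out_features):
    # weight matrix plus biases
    return in_features * out_features + out_features

layer_params = {
    'conv1': conv2d_params(3, 16, 5),
    'conv2': conv2d_params(16, 36, 3),
    'fc1': linear_params(1296, 128),
    'fc2': linear_params(128, 10),
}
total_params = sum(layer_params.values())
print(layer_params, total_params)
```

Almost all of the parameters sit in `fc1`, which is typical when a flattened feature map feeds a fully connected layer.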