Notes - Data loading: multi-threaded CIFAR-10 reading (part 2)

Summary:

1.

```python
images_train, labels_train = cifar10_input.distorted_inputs(
    data_dir=data_dir, batch_size=batch_size)
```

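This call does not actually read any data. It only defines a series of graph operations and returns tensors.

* How do the file-reading ops inside those tensors manage to run only when sess.run is called?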
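The deferred-execution idea can be shown without TensorFlow. This is a minimal sketch in plain Python. Node, Session, read_file and build_inputs are made-up names, not TensorFlow's implementation. Building the graph only records what to do. The "file read" runs only when Session.run evaluates the node, much as tf.Session.run executes only the ops needed for the fetched tensors.

```python
class Node:
    """A deferred computation: stores what to do, does nothing yet."""
    def __init__(self, fn, *inputs):
        self.fn = fn          # function to call later
        self.inputs = inputs  # upstream nodes this node depends on


class Session:
    def run(self, node):
        # Evaluate upstream nodes first, then this one. Work happens only here.
        args = [self.run(n) for n in node.inputs]
        return node.fn(*args)


log = []  # records when the "file read" actually executes


def read_file():
    log.append("read")
    return [3, 1, 2]


def build_inputs():
    # Like distorted_inputs(): wires nodes together and returns the output node
    raw = Node(read_file)
    return Node(sorted, raw)


batch = build_inputs()
print(log)              # [] : building the graph read nothing
sess = Session()
print(sess.run(batch))  # [1, 2, 3]
print(log)              # ['read']
```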

2.

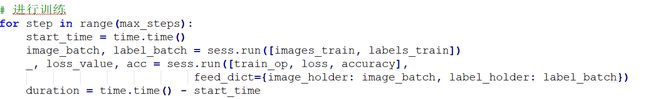

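```python
import time

for step in range(max_steps):
    start_time = time.time()
    # Fetch one augmented batch from the input pipeline as NumPy arrays
    image_batch, label_batch = sess.run([images_train, labels_train])
    # Feed that batch through the placeholders and run one training step
    _, loss_value, acc = sess.run([train_op, loss, accuracy],
                                  feed_dict={image_holder: image_batch,
                                             label_holder: label_batch})
    duration = time.time() - start_time
```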

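Does fetching each batch mean starting over, reading and augmenting all of the data, and then keeping only a small part of it?

To do when I have time: step through the TensorFlow program in a debugger and trace its execution flow. The goal is to check whether all the data really has to be reloaded and reprocessed every time.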

# tf.train.start_queue_runners(): what are TensorFlow's thread queues actually up to?

Note - TensorFlow: the sess.run mechanism

The tensors are more than ordinary lazy loading...

the graph-building call really happens only once...
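Here is a toy model of what "called only once" seems to mean, under my own assumptions. The Python builder (distorted_inputs) runs once to build the graph. Each run of the graph then executes the reader op again. Because the reader is stateful, it returns the next record instead of re-reading the whole dataset. ToyRecordReader and build_input_pipeline are made-up names. itertools.cycle stands in for string_input_producer, which by default (num_epochs=None) cycles through its filenames indefinitely.

```python
import itertools

build_calls = 0  # how many times the Python-side builder ran


class ToyRecordReader:
    """Hypothetical stand-in for a reader op: it remembers its position."""
    def __init__(self, records):
        # cycle() wraps around forever, like a filename queue with num_epochs=None
        self._records = itertools.cycle(records)

    def read(self):
        return next(self._records)  # exactly ONE record per call


def build_input_pipeline(records):
    """Like distorted_inputs(): runs once, at graph-construction time."""
    global build_calls
    build_calls += 1
    reader = ToyRecordReader(records)
    # The returned callable plays the role of a fetched tensor: each call
    # reads one record and "preprocesses" it (here: multiply by 10).
    return lambda: reader.read() * 10


fetch = build_input_pipeline([1, 2, 3])
results = [fetch() for _ in range(4)]  # four "sess.run" calls
print(results)      # [10, 20, 30, 10]
print(build_calls)  # 1
```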

3.

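Multiple threads read and process images concurrently, using:

```python
reader = tf.FixedLengthRecordReader(record_bytes=record_bytes)
result.key, value = reader.read(filename_queue)
```

Won't the same data end up being loaded and processed more than once??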
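On the duplicate-loading question: as far as I understand, all preprocessing threads share the single reader op created in read_cifar10. The reader serializes concurrent reads, so each thread receives a different record. The sketch below models this with a lock-guarded SharedReader (a hypothetical class) and 16 threads. It then checks that every record was processed exactly once in one pass.

```python
import threading


class SharedReader:
    """Toy reader shared by all worker threads; a lock guards the read position."""
    def __init__(self, records):
        self._records = records
        self._pos = 0
        self._lock = threading.Lock()

    def read(self):
        with self._lock:  # only one thread advances the position at a time
            if self._pos >= len(self._records):
                return None  # end of data (one pass in this toy)
            record = self._records[self._pos]
            self._pos += 1
            return record


reader = SharedReader(list(range(1000)))
processed = []
processed_lock = threading.Lock()


def worker():
    # Each thread repeatedly runs the same "enqueue op": read one record, process it
    while True:
        record = reader.read()
        if record is None:
            return
        with processed_lock:
            processed.append(record)


threads = [threading.Thread(target=worker) for _ in range(16)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(len(processed), len(set(processed)))  # 1000 1000
```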

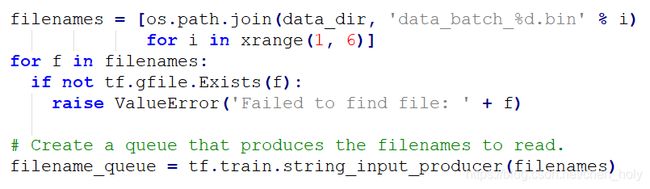

Flow of cifar10_input.distorted_inputs as called from the main program

1. Called from the main program

2. In cifar10_input.py: def distorted_inputs(data_dir, batch_size):

3. Reading the raw images

distorted_inputs calls the internal function read_cifar10:

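```python
import tensorflow as tf  # TensorFlow 1.x API


def read_cifar10(filename_queue):
    class CIFAR10Record(object):
        pass

    result = CIFAR10Record()

    label_bytes = 1  # 2 for CIFAR-100
    result.height = 32
    result.width = 32
    result.depth = 3
    image_bytes = result.height * result.width * result.depth
    # Every record is the label byte(s) followed by the image bytes
    record_bytes = label_bytes + image_bytes

    # Each execution of the read op returns one fixed-length record
    reader = tf.FixedLengthRecordReader(record_bytes=record_bytes)
    result.key, value = reader.read(filename_queue)

    # Convert the raw string into a vector of uint8
    record_bytes = tf.decode_raw(value, tf.uint8)

    # The first byte is the label
    result.label = tf.cast(
        tf.strided_slice(record_bytes, [0], [label_bytes]), tf.int32)

    # The remaining bytes are the image, stored as [depth, height, width]
    depth_major = tf.reshape(
        tf.strided_slice(record_bytes, [label_bytes],
                         [label_bytes + image_bytes]),
        [result.depth, result.height, result.width])
    # Convert from [depth, height, width] to [height, width, depth]
    result.uint8image = tf.transpose(depth_major, [1, 2, 0])

    return result
```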

"""

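Takes the filename queue as input.

Parses the .bin file; this is the logic for decoding a single image.

Finally returns a custom CIFAR10Record object that holds the parsed data.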

"""

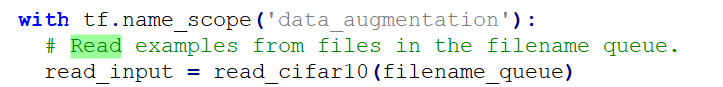
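The byte layout decoded by read_cifar10 can be checked in plain Python. This sketch assumes the standard CIFAR-10 binary format: each record is 1 label byte followed by 3072 pixel bytes. The pixel bytes hold the 1024 red values, then the green values, then the blue values, and each plane is row-major. parse_record and split_records are hypothetical helpers that mirror decode_raw, strided_slice, reshape and transpose.

```python
LABEL_BYTES = 1
HEIGHT, WIDTH, DEPTH = 32, 32, 3
IMAGE_BYTES = HEIGHT * WIDTH * DEPTH      # 3072
RECORD_BYTES = LABEL_BYTES + IMAGE_BYTES  # 3073


def parse_record(record: bytes):
    """Return (label, image) where image[h][w][c] is a uint8 pixel value."""
    assert len(record) == RECORD_BYTES
    label = record[0]             # first byte: class id
    pixels = record[LABEL_BYTES:]  # depth-major: all R, then all G, then all B
    # Transpose [depth, height, width] -> [height, width, depth]
    return label, [[[pixels[c * HEIGHT * WIDTH + h * WIDTH + w]
                     for c in range(DEPTH)]
                    for w in range(WIDTH)]
                   for h in range(HEIGHT)]


def split_records(data: bytes):
    """Cut a whole binary batch file into fixed-length records."""
    return [data[i:i + RECORD_BYTES] for i in range(0, len(data), RECORD_BYTES)]


# Synthetic record: red = row index, green = column index, blue = 200
red = bytes(h for h in range(HEIGHT) for w in range(WIDTH))
green = bytes(w for h in range(HEIGHT) for w in range(WIDTH))
blue = bytes([200]) * (HEIGHT * WIDTH)
fake = bytes([7]) + red + green + blue
label, image = parse_record(fake)
print(label, image[5][6])  # 7 [5, 6, 200]
```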

4. Data augmentation

```python
# read_input is the CIFAR10Record returned by read_cifar10 in step 3
reshaped_image = tf.cast(read_input.uint8image, tf.float32)

height = IMAGE_SIZE
width = IMAGE_SIZE

# Image processing for training the network. Note the many random
# distortions applied to the image.

# Randomly crop a [height, width] section of the image.
distorted_image = tf.random_crop(reshaped_image, [height, width, 3])

# Randomly flip the image horizontally.
distorted_image = tf.image.random_flip_left_right(distorted_image)

# Because these operations are not commutative, consider randomizing
# the order of their operation.
# NOTE: since per_image_standardization zeros the mean and makes
# the stddev unit, this likely has no effect; see tensorflow#1458.
distorted_image = tf.image.random_brightness(distorted_image,
                                             max_delta=63)
distorted_image = tf.image.random_contrast(distorted_image,
                                           lower=0.2, upper=1.8)

# Subtract off the mean and divide by the standard deviation of the pixels.
float_image = tf.image.per_image_standardization(distorted_image)

# Set the shapes of tensors.
float_image.set_shape([height, width, 3])
read_input.label.set_shape([1])
```
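Here are the crop, flip and standardization steps, sketched on nested Python lists. Some assumptions apply. The crop size of 24 is my guess at IMAGE_SIZE; I believe the TensorFlow tutorial uses 24. Brightness and contrast jitter are omitted. The helper names only mirror the TensorFlow ops and are not their implementations. The standardization follows the documented formula of per_image_standardization: (x - mean) / max(stddev, 1/sqrt(N)), where N is the number of elements.

```python
import math
import random


def random_crop(image, size):
    """Cut a random size x size window out of an H x W x C nested list."""
    h, w = len(image), len(image[0])
    top = random.randint(0, h - size)  # randint includes both ends
    left = random.randint(0, w - size)
    return [row[left:left + size] for row in image[top:top + size]]


def random_flip_left_right(image):
    """Mirror the image horizontally with probability 0.5."""
    if random.random() < 0.5:
        return [row[::-1] for row in image]
    return image


def per_image_standardization(image):
    """(x - mean) / max(stddev, 1 / sqrt(N)) over all pixels and channels."""
    values = [v for row in image for px in row for v in px]
    n = len(values)
    mean = sum(values) / n
    stddev = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    adjusted = max(stddev, 1.0 / math.sqrt(n))  # avoids dividing by 0 on flat images
    return [[[(v - mean) / adjusted for v in px] for px in row] for row in image]


# A fake 32x32x3 image whose pixel value encodes its position
image = [[[float(h * 32 + w)] * 3 for w in range(32)] for h in range(32)]
out = per_image_standardization(random_flip_left_right(random_crop(image, 24)))
print(len(out), len(out[0]), len(out[0][0]))  # 24 24 3
```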

5. Preparing the final output

```python
NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN = 50000

min_fraction_of_examples_in_queue = 0.4
# 50000 * 0.4 = 20000 examples; also passed on as min_after_dequeue
min_queue_examples = int(NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN *
                         min_fraction_of_examples_in_queue)
print('Filling queue with %d CIFAR images before starting to train. '
      'This will take a few minutes.' % min_queue_examples)

# Generate a batch of images and labels by building up a queue of examples.
return _generate_image_and_label_batch(float_image, read_input.label,
                                       min_queue_examples, batch_size,
                                       shuffle=True)
```
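Plugging in the numbers: with 50000 training examples and a fraction of 0.4, min_queue_examples is 20000. The batch size of 128 below is an assumption for illustration, because the main program's batch_size is not shown in these notes. Dequeuing one batch from a shuffling queue requires at least min_after_dequeue + batch_size buffered examples. So about 20000 images must be read and augmented before the first training step, which fits the "This will take a few minutes" message.

```python
NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN = 50000
min_fraction_of_examples_in_queue = 0.4
batch_size = 128  # hypothetical: the main program's batch_size is not shown here

min_queue_examples = int(NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN *
                         min_fraction_of_examples_in_queue)
capacity = min_queue_examples + 3 * batch_size
# A shuffled dequeue of one batch needs min_after_dequeue + batch_size examples buffered
needed_for_first_batch = min_queue_examples + batch_size
print(min_queue_examples, capacity, needed_for_first_batch)  # 20000 20384 20128
```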

_generate_image_and_label_batch:

```python
def _generate_image_and_label_batch(image, label, min_queue_examples,
                                    batch_size, shuffle):
    # Create a queue that shuffles the examples, and then
    # read 'batch_size' images + labels from the example queue.
    num_preprocess_threads = 16
    if shuffle:
        images, label_batch = tf.train.shuffle_batch(
            [image, label],
            batch_size=batch_size,
            num_threads=num_preprocess_threads,
            capacity=min_queue_examples + 3 * batch_size,
            min_after_dequeue=min_queue_examples)
    else:
        images, label_batch = tf.train.batch(
            [image, label],
            batch_size=batch_size,
            num_threads=num_preprocess_threads,
            capacity=min_queue_examples + 3 * batch_size)

    # Display the training images in the visualizer.
    tf.summary.image('images', images)

    return images, tf.reshape(label_batch, [batch_size])
```
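About num_threads=16: as far as I can tell from the tf.train.shuffle_batch documentation, num_threads is the number of threads running the enqueue op. Those threads are started by tf.train.start_queue_runners(). Each thread repeatedly reads one record, preprocesses it and pushes it into a shuffling queue, and the training loop only dequeues whole batches. The toy below models this with plain threads. ToyShuffleQueue and producer are made-up names. The sizes (800 records, batch 32, min_after_dequeue 100) are arbitrary, and capacity follows the same min_after_dequeue + 3 * batch_size rule.

```python
import random
import threading


class ToyShuffleQueue:
    """Toy model of a shuffling queue (hypothetical, not TensorFlow code).

    dequeue_many(n) waits until at least n + min_after_dequeue items are
    buffered, then removes n randomly chosen items.
    """

    def __init__(self, capacity, min_after_dequeue):
        self.capacity = capacity
        self.min_after_dequeue = min_after_dequeue
        self.items = []
        self.cond = threading.Condition()

    def enqueue(self, item):
        with self.cond:
            while len(self.items) >= self.capacity:
                self.cond.wait()  # queue full: this producer thread blocks
            self.items.append(item)
            self.cond.notify_all()

    def dequeue_many(self, n):
        with self.cond:
            while len(self.items) < n + self.min_after_dequeue:
                self.cond.wait()  # not enough buffered examples to mix yet
            batch = []
            for _ in range(n):
                i = random.randrange(len(self.items))
                # Swap the chosen item to the end, then pop it (O(1) removal)
                self.items[i], self.items[-1] = self.items[-1], self.items[i]
                batch.append(self.items.pop())
            self.cond.notify_all()  # wake producers blocked on a full queue
            return batch


TOTAL = 800  # records in this toy "dataset"
next_id = 0
reader_lock = threading.Lock()


def producer(queue):
    """One of the 16 threads: read one record, 'preprocess' it, enqueue it."""
    global next_id
    while True:
        with reader_lock:  # shared reader: each record is handed out once
            if next_id >= TOTAL:
                return
            record = next_id
            next_id += 1
        queue.enqueue(record)  # real preprocessing would happen before this


batch_size, min_after_dequeue = 32, 100
q = ToyShuffleQueue(capacity=min_after_dequeue + 3 * batch_size,
                    min_after_dequeue=min_after_dequeue)
threads = [threading.Thread(target=producer, args=(q,)) for _ in range(16)]
for t in threads:
    t.start()  # analogous to tf.train.start_queue_runners()

batches = [q.dequeue_many(batch_size) for _ in range(21)]  # 21 * 32 = 672
for t in threads:
    t.join()

seen = [x for b in batches for x in b] + q.items
print(len(seen), len(set(seen)))  # 800 800
```

A 22nd dequeue_many would block forever here. Only 128 records remain, and a batch needs 32 + 100 buffered records.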

"""

So this starts 16 threads to read data???

"""