python 文本聚类

读取excel

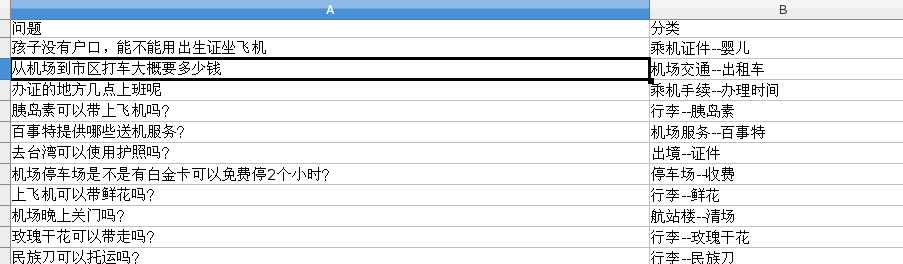

excel 格式

excel.py

# -*- coding: utf-8 -*-

import xdrlib ,sys

import xlrd

import json

def open_excel(file= '/home/lhy/data/data.xlsx'):

try:

data = xlrd.open_workbook(file)

return data

except Exception,e:

print str(e)

#根据索引获取Excel表格中的数据 参数:file:Excel文件路径 colnameindex:表头列名所在行的所以 ,by_index:表的索引

def excel_table_byindex(file= '/home/lhy/data/data.xlsx',colnameindex=0,by_index=0):

data = open_excel(file)

table = data.sheets()[by_index]

nrows = table.nrows #行数

ncols = table.ncols #列数

colnames = table.row_values(colnameindex) #某一行数据

list =[]

for rownum in range(1,nrows):

row = table.row_values(rownum)

if row:

app = {}

for i in range(len(colnames)):

app[colnames[i]] = row[i]

list.append(app)

return list

#根据名称获取Excel表格中的数据 参数:file:Excel文件路径 colnameindex:表头列名所在行的所以 ,by_name:Sheet1名称

#def excel_table_byname(file= '/home/lhy/data/data.xlsx',colnameindex=0,by_name=u'Sheet1'):

def excel_table_byname(file='/home/lhy/data/data.xlsx', colnameindex=0, by_name=u'word'):

data = open_excel(file)

table = data.sheet_by_name(by_name)

nrows = table.nrows #行数

colnames = table.row_values(colnameindex) #某一行数据

list =[]

for rownum in range(1,nrows):

row = table.row_values(rownum)

if row:

app = {}

for i in range(len(colnames)):

app[colnames[i]] = row[i]

list.append(app)

return list

def main():

tables = excel_table_byindex()

for row in tables:

'''print row.decode('utf-8')'''

wenti=row[u'问题']

# wenti=wenti[1:len(wenti)-1]

print json.dumps(wenti, encoding="UTF-8", ensure_ascii=False)

#print type(row)

# tables = excel_table_byname()

# for row in tables:

# print row

if __name__=="__main__":

main()分词

TextFenci.py

# -*- coding: UTF-8 -*-

import jieba.posseg as pseg

import excel

import json

def getWordXL():

#words=pseg.cut("对这句话进行分词")

list=excel.excel_table_byindex();

aList = []

for index in range(len(list)):

wenti = list[index][u'问题']

words = pseg.cut(wenti)

word_str=""

for key in words:

#aList.insert()import json

# print type(key)

word_str=word_str+key.word+" "

# print key.word," ",

aList.insert(index,word_str)

return aList,list #第一个参数为分词结果,第儿歌参数为原始文档

def main():

aList=getWordXL()

print "1234"

print json.dumps(aList, encoding="UTF-8", ensure_ascii=False)

if __name__=="__main__":

main()TF_IDF 权重生成

TF_IDF.py

# -*- coding: UTF-8 -*-

import jieba.posseg as pseg

import excel

import json

def getWordXL():

#words=pseg.cut("对这句话进行分词")

list=excel.excel_table_byindex();

aList = []

for index in range(len(list)):

wenti = list[index][u'问题']

words = pseg.cut(wenti)

word_str=""

for key in words:

#aList.insert()import json

# print type(key)

word_str=word_str+key.word+" "

# print key.word," ",

aList.insert(index,word_str)

return aList,list #第一个参数为分词结果,第儿歌参数为原始文档

def main():

aList=getWordXL()

print "1234"

print json.dumps(aList, encoding="UTF-8", ensure_ascii=False)

if __name__=="__main__":

main()k-means 聚类

KMeans.py

# -*- coding: utf-8 -*-

from sklearn.cluster import KMeans

import TF_IDF

import json,sys

reload(sys)

sys.setdefaultencoding('utf-8')

weight, textList = TF_IDF.getTFIDF()

def getCU(leibieNum):

LEIBI=leibieNum #100个类别

#print "####################Start Kmeans:分成"+str(LEIBI)+"个类"

clf = KMeans(n_clusters=LEIBI)

s = clf.fit(weight)

#print s

# 20个中心点

#print(clf.cluster_centers_)

# 每个样本所属的簇

#print(clf.labels_)

i = 1

textFencuList=[]

for i in range(0,LEIBI):

textFencu2=[]

textFencuList.append(textFencu2)

for i in range(len(clf.labels_)):

try:

textFencuList[clf.labels_[i - 1]].append(textList[i])

except Exception, e:

print "#######错误:"+str(clf.labels_[i - 1])+" "+str(i)

fo = open("/home/lhy/data/wbjl.txt", "wb")

for index in range(len(textFencuList)):

fo.write("\n#############################第"+str(index)+"个分类##################\n"); # 写入文件

print ""

print "#############################第"+str(index)+"个分类##################";

print ""

for ab in textFencuList[index]:

thisword=json.dumps(ab, encoding="UTF-8", ensure_ascii=False)

#thisword = json.dumps(ab)

fo.write(thisword + "\n") # 写入文件

print thisword

fo.close();

# 用来评估簇的个数是否合适,距离越小说明簇分的越好,选取临界点的簇个数

print("############评估因子大小,用来评估簇的个数是否合适,距离越小说明簇分的越好,选取临界点的簇个数:类别"+str(LEIBI)+" 因子"+str(clf.inertia_))

getCU(300)

'''for index in range(100,1000,10):

getCU(index)

'''