Please credit the source when reposting: http://www.cnblogs.com/gambler/p/9028741.html

I. To run Hadoop from Eclipse, you first need to work through the following steps in order:

1. Install the JDK

2. Install Ant

3. Install Eclipse

4. Install Hadoop

5. Compile the hadoop-eclipse-plugin

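There are plenty of guides online for the first four, but their quality is uneven and many are sloppy, so I collected some good articles. If you follow them in the order above, you should run into almost no problems. (Beginners: pay close attention to installation paths, or later on you won't know what you installed where.)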

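1. Install the JDK (a few small issues can come up here, e.g. OpenJDK is already installed on the machine; search online for fixes)

2. Install Ant (this is easy, but remember that its environment variables go into the same file as the JDK's, i.e. the one opened with `sudo gedit /etc/profile`)

3. Install Eclipse (no need to install the JDK again here; download the Java EE edition, since it includes everything)

4. Install Hadoop (the passwordless-login guide seems to have a problem, so here is another one; you can follow this author's passwordless SSH login setup instead)

5. Compile hadoop-eclipse-plugin (this is the painful part: online tutorials are messy, and most are outdated or useless, mainly because of version changes)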
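Before moving on to the plugin, it helps to confirm that the JDK and Ant from steps 1 and 2 are actually visible in a fresh shell. Below is a minimal Python sketch (Python is my own choice here, not part of the original setup) that looks the tools up on `PATH` with `shutil.which` and reads `JAVA_HOME`; it assumes both were configured in `/etc/profile` as described above and that you have logged in again or run `source /etc/profile`.

```python
import os
import shutil

# Tools the plugin build relies on: a JDK (java/javac) and Ant.
REQUIRED_TOOLS = ("java", "javac", "ant")


def check_prerequisites(tools=REQUIRED_TOOLS):
    """Return {tool: absolute path or None} plus the JAVA_HOME value (or None)."""
    report = {tool: shutil.which(tool) for tool in tools}
    # Assumption: JAVA_HOME is exported in /etc/profile alongside PATH.
    report["JAVA_HOME"] = os.environ.get("JAVA_HOME")
    return report


if __name__ == "__main__":
    for name, location in check_prerequisites().items():
        print(f"{name:10s} {location or 'NOT FOUND'}")
```

Any line showing `NOT FOUND` means the corresponding `/etc/profile` entry has not taken effect yet.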

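II. How to compile hadoop-eclipse-plugin

1. Most people are on hadoop-2.*, so you can download the hadoop-eclipse-plugin provided on GitHub (hadoop2x-eclipse-plugin).

2. Once it has downloaded, unzip it and open hadoop2x-eclipse-plugin-master ---> ivy ---> libraries.properties

Once opened, it looks like this (this is my already-modified version for hadoop-2.7.6; if you use the same version, you can skip ahead and download the compiled jar at the end of the article):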

```properties
#   Licensed under the Apache License, Version 2.0 (the "License");
#   you may not use this file except in compliance with the License.
#   You may obtain a copy of the License at
#
#       http://www.apache.org/licenses/LICENSE-2.0
#
#   Unless required by applicable law or agreed to in writing, software
#   distributed under the License is distributed on an "AS IS" BASIS,
#   WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
#   See the License for the specific language governing permissions and
#   limitations under the License.

#This properties file lists the versions of the various artifacts used by hadoop and components.
#It drives ivy and the generation of a maven POM

# This is the version of hadoop we are generating
hadoop.version=2.7.6
hadoop-gpl-compression.version=0.1.0

#These are the versions of our dependencies (in alphabetical order)
apacheant.version=1.7.0
ant-task.version=2.0.10
asm.version=3.2
aspectj.version=1.6.5
aspectj.version=1.6.11
checkstyle.version=4.2
commons-cli.version=1.2
commons-codec.version=1.4
commons-collections.version=3.2.2
commons-configuration.version=1.6
commons-daemon.version=1.0.13
commons-httpclient.version=4.2.5
commons-lang.version=2.6
commons-logging.version=1.1.3
commons-logging-api.version=1.0.4
commons-math.version=2.1
commons-el.version=1.0
commons-fileupload.version=1.2
commons-io.version=2.4
commons-net.version=3.1
core.version=3.1.1
coreplugin.version=1.3.2
hsqldb.version=1.8.0.10
htrace.version=3.0.4
ivy.version=2.1.0
jasper.version=5.5.12
jackson.version=1.9.13
#not able to figureout the version of jsp & jsp-api version to get it resolved throught ivy
# but still declared here as we are going to have a local copy from the lib folder
jsp.version=2.1
jsp-api.version=5.5.12
jsp-api-2.1.version=6.1.14
jsp-2.1.version=6.1.14
jets3t.version=0.9.0
jetty.version=6.1.26
jetty-util.version=6.1.26
jersey-core.version=1.9
jersey-json.version=1.9
jersey-server.version=1.9
junit.version=4.11
jdeb.version=0.8
jdiff.version=1.0.9
json.version=1.0
kfs.version=0.1
log4j.version=1.2.17
lucene-core.version=2.3.1
mockito-all.version=1.8.5
jsch.version=0.1.54
oro.version=2.0.8
rats-lib.version=0.5.1
servlet.version=4.0.6
servlet-api.version=2.5
slf4j-api.version=1.7.10
slf4j-log4j12.version=1.7.10
wagon-http.version=1.0-beta-2
xmlenc.version=0.52
xerces.version=1.4.4
protobuf.version=2.5.0
guava.version=11.0.2
netty.version=3.6.2.Final
```

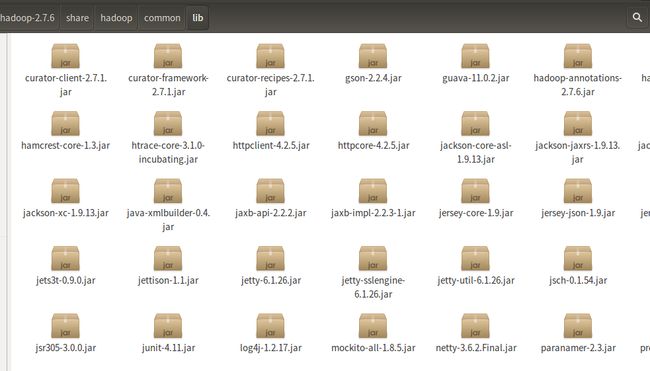

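3. Go to the directory where you originally unpacked Hadoop

Open your hadoop directory ---> share ---> hadoop

It looks like this:

Update the versions to match these jars (this is the key step). For example:

log4j.version=1.2.17 (mine was originally log4j.version=1.2.10, but the third item from the left in the bottom row of the listing above shows 1.2.17, so it has to be changed to log4j.version=1.2.17)

A handy trick is to go line by line: read a version in libraries.properties, then open /your-hadoop-dir/share/hadoop/, click the magnifying glass (search) on the right, and type a few characters of the jar name. That makes comparing and updating much faster.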
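Comparing dozens of versions by eye is error-prone, so the same check can be scripted. Below is a minimal Python sketch (a helper of my own, not part of the plugin project) that parses `libraries.properties`, collects every jar under `share/hadoop`, and prints each `*.version` key whose prefix matches some jar names but whose declared version matches none of them. It assumes jars are named `<artifact>-<version>.jar` with the version starting at the first `-` followed by a digit, and it matches by name prefix, so related artifacts (e.g. `servlet` vs `servlet-api`) can produce noise. Treat the output as a checklist to review by hand.

```python
import os
import re
import sys

# "log4j-1.2.17.jar" -> name "log4j", version "1.2.17".
# Assumption: the version starts at the first "-" that is followed by a digit.
JAR_RE = re.compile(r"^(?P<name>.+?)-(?P<version>\d.*)\.jar$")


def parse_properties(text):
    """Parse simple key=value lines; later duplicates win, like java.util.Properties."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def jar_versions(paths):
    """Map artifact name -> set of versions seen in the jar file names."""
    found = {}
    for path in paths:
        m = JAR_RE.match(os.path.basename(path))
        if m:
            found.setdefault(m.group("name"), set()).add(m.group("version"))
    return found


def find_mismatches(props, jars):
    """Yield (key, declared, found) when jars whose name starts with the key's
    prefix exist, but none of them carries the declared version."""
    for key, declared in props.items():
        if not key.endswith(".version"):
            continue
        prefix = key[: -len(".version")]
        found = set()
        for name, versions in jars.items():
            if name.startswith(prefix):
                found |= versions
        if found and declared not in found:
            yield key, declared, sorted(found)


def collect_jars(share_dir):
    """Every *.jar below <hadoop>/share/hadoop (common/lib, hdfs, yarn, ...)."""
    return [os.path.join(root, f)
            for root, _dirs, files in os.walk(share_dir)
            for f in files if f.endswith(".jar")]


if __name__ == "__main__" and len(sys.argv) == 3:
    # usage: python3 check_versions.py libraries.properties /path/to/hadoop/share/hadoop
    with open(sys.argv[1], encoding="utf-8") as fh:
        props = parse_properties(fh.read())
    jars = jar_versions(collect_jars(sys.argv[2]))
    for key, declared, found in find_mismatches(props, jars):
        print(f"{key}: declared {declared}, found {', '.join(found)}")
```

For example, `python3 check_versions.py ivy/libraries.properties /home/gambler/hadoop-2.7.6/share/hadoop` (the script name is arbitrary) would flag a leftover `log4j.version=1.2.10` next to the shipped `log4j-1.2.17.jar`.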

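4. Edit build.xml (key step, because some jar names change from version to version)

Open hadoop2x-eclipse-plugin-master ---> src ---> contrib ---> eclipse-plugin ---> build.xml

(Note: the location may differ between versions; just find the eclipse-plugin directory inside hadoop2x-eclipse-plugin-master.)

After opening it: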

```xml
<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<project default="jar" name="eclipse-plugin">

  <import file="../build-contrib.xml"/>

  <path id="eclipse-sdk-jars">
    <fileset dir="${eclipse.home}/plugins/">
      <include name="org.eclipse.ui*.jar"/>
      <include name="org.eclipse.jdt*.jar"/>
      <include name="org.eclipse.core*.jar"/>
      <include name="org.eclipse.equinox*.jar"/>
      <include name="org.eclipse.debug*.jar"/>
      <include name="org.eclipse.osgi*.jar"/>
      <include name="org.eclipse.swt*.jar"/>
      <include name="org.eclipse.jface*.jar"/>
      <include name="org.eclipse.team.cvs.ssh2*.jar"/>
      <include name="com.jcraft.jsch*.jar"/>
    </fileset>
  </path>

  <path id="hadoop-sdk-jars">
    <fileset dir="${hadoop.home}/share/hadoop/mapreduce">
      <include name="hadoop*.jar"/>
    </fileset>
    <fileset dir="${hadoop.home}/share/hadoop/hdfs">
      <include name="hadoop*.jar"/>
    </fileset>
    <fileset dir="${hadoop.home}/share/hadoop/common">
      <include name="hadoop*.jar"/>
    </fileset>
  </path>

  <path id="classpath">
    <pathelement location="${build.classes}"/>
    <path refid="eclipse-sdk-jars"/>
    <path refid="hadoop-sdk-jars"/>
  </path>

  <target name="check-contrib" unless="eclipse.home">
    <property name="skip.contrib" value="yes"/>
    <echo message="eclipse.home unset: skipping eclipse plugin"/>
  </target>

  <target name="compile" depends="init, ivy-retrieve-common" unless="skip.contrib">
    <echo message="contrib: ${name}"/>
    <javac encoding="${build.encoding}" srcdir="${src.dir}" includes="**/*.java"
           destdir="${build.classes}" debug="${javac.debug}" deprecation="${javac.deprecation}">
      <classpath refid="classpath"/>
    </javac>
  </target>

  <target name="jar" depends="compile" unless="skip.contrib">
    <mkdir dir="${build.dir}/lib"/>
    <copy todir="${build.dir}/lib/" verbose="true">
      <fileset dir="${hadoop.home}/share/hadoop/mapreduce">
        <include name="hadoop*.jar"/>
      </fileset>
    </copy>
    <copy todir="${build.dir}/lib/" verbose="true">
      <fileset dir="${hadoop.home}/share/hadoop/common">
        <include name="hadoop*.jar"/>
      </fileset>
    </copy>
    <copy todir="${build.dir}/lib/" verbose="true">
      <fileset dir="${hadoop.home}/share/hadoop/hdfs">
        <include name="hadoop*.jar"/>
      </fileset>
    </copy>
    <copy todir="${build.dir}/lib/" verbose="true">
      <fileset dir="${hadoop.home}/share/hadoop/yarn">
        <include name="hadoop*.jar"/>
      </fileset>
    </copy>
    <copy todir="${build.dir}/classes" verbose="true">
      <fileset dir="${root}/src/java">
        <include name="*.xml"/>
      </fileset>
    </copy>
    <copy file="${hadoop.home}/share/hadoop/common/lib/protobuf-java-${protobuf.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/log4j-${log4j.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/commons-cli-${commons-cli.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/commons-configuration-${commons-configuration.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/commons-lang-${commons-lang.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/commons-collections-${commons-collections.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/jackson-core-asl-${jackson.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/jackson-mapper-asl-${jackson.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/slf4j-log4j12-${slf4j-log4j12.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/slf4j-api-${slf4j-api.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/guava-${guava.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/hadoop-auth-${hadoop.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/commons-cli-${commons-cli.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/netty-${netty.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <copy file="${hadoop.home}/share/hadoop/common/lib/htrace-core-${htrace.version}.jar" todir="${build.dir}/lib" verbose="true"/>
    <jar jarfile="${build.dir}/hadoop-${name}-${hadoop.version}.jar" manifest="${root}/META-INF/MANIFEST.MF">
      <manifest>
        <attribute name="Bundle-ClassPath" value="classes/,
          lib/hadoop-mapreduce-client-core-${hadoop.version}.jar,
          lib/hadoop-mapreduce-client-common-${hadoop.version}.jar,
          lib/hadoop-mapreduce-client-jobclient-${hadoop.version}.jar,
          lib/hadoop-auth-${hadoop.version}.jar,
          lib/hadoop-common-${hadoop.version}.jar,
          lib/hadoop-hdfs-${hadoop.version}.jar,
          lib/protobuf-java-${protobuf.version}.jar,
          lib/log4j-${log4j.version}.jar,
          lib/commons-cli-${commons-cli.version}.jar,
          lib/commons-configuration-${commons-configuration.version}.jar,
          lib/commons-httpclient-${commons-httpclient.version}.jar,
          lib/commons-lang-${commons-lang.version}.jar,
          lib/commons-collections-${commons-collections.version}.jar,
          lib/jackson-core-asl-${jackson.version}.jar,
          lib/jackson-mapper-asl-${jackson.version}.jar,
          lib/slf4j-log4j12-${slf4j-log4j12.version}.jar,
          lib/slf4j-api-${slf4j-api.version}.jar,
          lib/guava-${guava.version}.jar,
          lib/netty-${netty.version}.jar,
          lib/htrace-core-${htrace.version}.jar"/>
      </manifest>
      <fileset dir="${build.dir}" includes="classes/ lib/"/>
      <fileset dir="${root}" includes="resources/ plugin.xml"/>
    </jar>
  </target>

</project>
```

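(Hint: among the Hadoop jars from step 3 there is no htrace-core-${htrace.version}.jar, only htrace-core-3.1.0-incubating.jar, so the name has to be changed.)

How to fix it:

Find the line that copies `${hadoop.home}/share/hadoop/common/lib/htrace-core-${htrace.version}.jar` and change the file name to `htrace-core-${htrace.version}-incubating.jar`.

Then find the `Bundle-ClassPath` attribute and change the end of its value to:

```xml
lib/servlet-api-${servlet-api.version}.jar,
lib/commons-io-${commons-io.version}.jar,
lib/htrace-core-${htrace.version}-incubating.jar"/>
```

For that file name to resolve to htrace-core-3.1.0-incubating.jar, htrace.version in libraries.properties must be set to 3.1.0.

If other similar errors come up, fix them the same way. If the build still fails, back up build.xml first and then try removing some entries; not every jar is actually needed.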
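Errors like the htrace one come from a `<copy file="...">` path that does not exist once Ant substitutes the properties. Below is a minimal Python sketch (my own helper; it assumes the properties come from `ivy/libraries.properties` plus the `-Dhadoop.home` value passed on the command line in step 5) that expands each `${...}` reference in the `<copy file="...">` elements and reports which files are missing before you run Ant. It only checks single-file copies, not the `<fileset>` copies, and any property it does not know is left unexpanded.

```python
import os
import re
import sys
import xml.etree.ElementTree as ET

# Ant-style ${property} reference.
PLACEHOLDER = re.compile(r"\$\{([^}]+)\}")


def resolve(template, props):
    """Expand ${name} references; unknown names are left untouched."""
    return PLACEHOLDER.sub(lambda m: props.get(m.group(1), m.group(0)), template)


def copied_files(build_xml, props):
    """Resolved `file` attribute of every <copy file="..."/> in build.xml."""
    root = ET.fromstring(build_xml)
    return [resolve(el.get("file"), props)
            for el in root.iter("copy") if el.get("file")]


def load_properties(path):
    """Simple key=value reader for libraries.properties (later keys win)."""
    props = {}
    with open(path, encoding="utf-8") as fh:
        for line in fh:
            line = line.strip()
            if line and not line.startswith("#") and "=" in line:
                key, value = line.split("=", 1)
                props[key.strip()] = value.strip()
    return props


if __name__ == "__main__" and len(sys.argv) == 4:
    # usage: python3 check_copies.py build.xml libraries.properties /path/to/hadoop-2.7.6
    build_xml_path, props_path, hadoop_home = sys.argv[1:]
    props = load_properties(props_path)
    props["hadoop.home"] = hadoop_home  # what -Dhadoop.home=... supplies to Ant
    with open(build_xml_path, "rb") as fh:
        for path in copied_files(fh.read(), props):
            print(("ok       " if os.path.isfile(path) else "MISSING  ") + path)
```

With `htrace.version=3.0.4` this would list the htrace copy as `MISSING`, which is the same failure Ant hits during the build.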

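5. Finally, run the build

In hadoop2x-eclipse-plugin-master ---> src ---> contrib ---> eclipse-plugin, run:

```shell
ant jar -Dversion=2.7.6 -Declipse.home=/opt/eclipse -Dhadoop.home=/home/gambler/hadoop-2.7.6
```

Note: set -Dversion to your Hadoop version, -Declipse.home to your Eclipse directory, and -Dhadoop.home to your Hadoop directory.

One more key point: by default the jar is written to hadoop2x-eclipse-plugin-master ---> build ---> contrib ---> eclipse-plugin. If that directory does not exist, create it beforehand (at minimum the build folder), otherwise the build fails. When compilation finishes, Ant's `BUILD SUCCESSFUL` message means it succeeded.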
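Both requirements of this step (pre-creating the output directory and running `ant jar` from the eclipse-plugin directory) can be wrapped in a small script. A minimal Python sketch, assuming the directory layout described above; the helper names are hypothetical, and the Ant flags are exactly the ones from the command above:

```python
import os
import subprocess
import sys


def ant_command(hadoop_version, eclipse_home, hadoop_home):
    """Argument list for the `ant jar` call used to build the plugin."""
    return ["ant", "jar",
            f"-Dversion={hadoop_version}",
            f"-Declipse.home={eclipse_home}",
            f"-Dhadoop.home={hadoop_home}"]


def ensure_build_dir(plugin_root):
    """Pre-create build/contrib/eclipse-plugin under the plugin checkout."""
    path = os.path.join(plugin_root, "build", "contrib", "eclipse-plugin")
    os.makedirs(path, exist_ok=True)  # no error if it already exists
    return path


if __name__ == "__main__" and len(sys.argv) == 5:
    # usage: python3 build_plugin.py <plugin_root> 2.7.6 /opt/eclipse /home/gambler/hadoop-2.7.6
    plugin_root, version, eclipse_home, hadoop_home = sys.argv[1:]
    ensure_build_dir(plugin_root)
    workdir = os.path.join(plugin_root, "src", "contrib", "eclipse-plugin")
    # check=True raises CalledProcessError if Ant exits with a non-zero status
    subprocess.run(ant_command(version, eclipse_home, hadoop_home),
                   cwd=workdir, check=True)
```

Using `check=True` makes the script stop with an error when Ant reports a failure instead of silently continuing.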

III. Creating a MapReduce project in Eclipse

1. First, move the compiled jar into Eclipse's plugins directory

The command is:

```shell
sudo mv hadoop-eclipse-plugin-2.7.6.jar /path/to/eclipse/plugins
```
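If you would rather keep the build output, copying instead of moving works just as well. A minimal Python sketch: the jar name follows the `jarfile="${build.dir}/hadoop-${name}-${hadoop.version}.jar"` pattern in build.xml (with `name` being `eclipse-plugin`), and the source directory is the default build/contrib/eclipse-plugin output path from step 5. Because Eclipse lives under `/opt` here, the script would need to run with `sudo`.

```python
import os
import shutil
import sys


def plugin_jar_name(hadoop_version, name="eclipse-plugin"):
    """Same pattern as build.xml: hadoop-${name}-${hadoop.version}.jar."""
    return f"hadoop-{name}-{hadoop_version}.jar"


def install_plugin(plugin_root, eclipse_home, hadoop_version):
    """Copy the built plugin jar into <eclipse_home>/plugins and return its new path."""
    src = os.path.join(plugin_root, "build", "contrib", "eclipse-plugin",
                       plugin_jar_name(hadoop_version))
    dst_dir = os.path.join(eclipse_home, "plugins")
    if not os.path.isfile(src):
        raise FileNotFoundError(f"built plugin not found: {src}")
    if not os.path.isdir(dst_dir):
        raise FileNotFoundError(f"not an Eclipse plugins directory: {dst_dir}")
    # copy2 keeps the original under build/ as a backup and preserves timestamps
    return shutil.copy2(src, dst_dir)


if __name__ == "__main__" and len(sys.argv) == 4:
    # usage: sudo python3 install_plugin.py <plugin_root> /opt/eclipse 2.7.6
    print(install_plugin(*sys.argv[1:]))
```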

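2. Start Eclipse (you can simply search for "eclipse" in the system search).

Create a new project and choose MapReduce; the following error appears: "Invalid Hadoop Runtime specified; please click 'Configure Hadoop install directory'"

Click 'Configure Hadoop install directory' at the bottom right.

In the dialog, enter /absolute/path/to/hadoop/share/hadoop/mapreduce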
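Before typing the path into the dialog, you can confirm it actually points at the MapReduce jars. A minimal Python sketch that lists `hadoop-mapreduce-client-*.jar` under `<hadoop_home>/share/hadoop/mapreduce`; this is only a sanity check of my own and does not reproduce whatever validation the plugin itself performs.

```python
import glob
import os
import sys


def mapreduce_client_jars(hadoop_home):
    """Names of hadoop-mapreduce-client-*.jar in <hadoop_home>/share/hadoop/mapreduce."""
    pattern = os.path.join(hadoop_home, "share", "hadoop", "mapreduce",
                           "hadoop-mapreduce-client-*.jar")
    return sorted(os.path.basename(p) for p in glob.glob(pattern))


if __name__ == "__main__" and len(sys.argv) == 2:
    # usage: python3 check_mr_dir.py /home/gambler/hadoop-2.7.6
    jars = mapreduce_client_jars(sys.argv[1])
    if jars:
        print("\n".join(jars))
    else:
        print("no MapReduce client jars found; check the Hadoop path")
```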

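Once it is filled in, click Finish and you're all set. Finally, here is a screenshot of a working setup; I just created this project myself:

Phew, writing this was exhausting. If you found it useful, please give it a like!!!

The final compiled jar: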