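Study Notes on Hadoop: The Definitive Guide (Part 1)

This post collects my notes on Section 3.5 of Hadoop: The Definitive Guide. It mainly implements the book's example programs, and I modified some of them.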

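1 Reading Data from Hadoop

First, create a text file test.txt to use for testing.

hadoop fs -mkdir /poems // create a directory named poems on the Hadoop cluster

hadoop fs -copyFromLocal test.txt /poems/test.txt // upload the local test.txt to the Hadoop cluster

hadoop fs -ls /poems // check that the upload succeeded

If the upload worked, the file you just uploaded is listed under the poems directory. That completes the preparation. Next, we read test.txt from the Hadoop cluster in two ways.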

1.1 Reading via a URL

Create the class URLCat as follows:

package com.tuan.hadoopLearn.hdfs;

import java.net.URL;

import java.io.InputStream;

import org.apache.hadoop.io.IOUtils;

import org.apache.hadoop.fs.FsUrlStreamHandlerFactory;

public class URLCat {

static {

URL.setURLStreamHandlerFactory(new FsUrlStreamHandlerFactory());

}

public static void main(String[] args) {

InputStream in = null;

try {

in = new URL(args[0]).openStream();

IOUtils.copyBytes(in, System.out, 4096, false);

} catch (Exception e) {

System.out.println(e);

} finally {

IOUtils.closeStream(in);

}

}

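}

After running mvn install, go to the directory that holds the project's jar. The jar is built in the project's target directory, and mvn install also copies it into the local .m2 repository. Then run the command below in cmd. Make sure both paths are correct: the fully qualified name of the URLCat class, and the path of the file on the Hadoop cluster.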

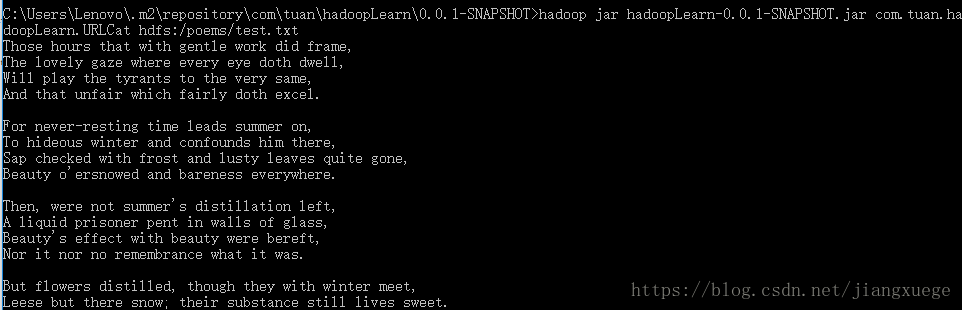

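hadoop jar hadoopLearn-0.0.1-SNAPSHOT.jar com.tuan.hadoopLearn.hdfs.URLCat hdfs:/poems/test.txt // run the URLCat class in the jar

This step may fail with "Error: Could not find or load main class". There are usually two causes:

- The CLASSPATH environment variable is not configured. With Java 1.8, I set CLASSPATH to ".;%JAVA_HOME%\lib\dt.jar;%JAVA_HOME%\lib\tools.jar". The leading "." stands for the current directory.
- The class name is not fully qualified. It must include the package, which here is com.tuan.hadoopLearn.hdfs.

Once the command runs successfully, URLCat reads the test.txt file uploaded earlier and prints its contents to the console.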
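URLCat works because URL.setURLStreamHandlerFactory() teaches java.net.URL a new scheme (hdfs). The JDK accepts that call at most once per JVM, which is why it sits in a static block. It is also why the FileSystem API in the next section is the fallback when some other code has already installed a factory.

The sketch below shows the same mechanism using only JDK classes. It is a sketch under assumptions: the mem scheme, the MemUrlCat class, and its in-memory file contents are hypothetical stand-ins for HDFS and FsUrlStreamHandlerFactory.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.net.URL;
import java.net.URLConnection;
import java.net.URLStreamHandler;
import java.net.URLStreamHandlerFactory;
import java.nio.charset.StandardCharsets;
import java.util.Collections;
import java.util.Map;

class MemUrlCat {
    // Hypothetical in-memory "cluster": path -> file contents.
    static final Map<String, String> FILES =
            Collections.singletonMap("/poems/test.txt", "hello hadoop");

    // Handles only the made-up "mem" scheme; returning null lets the JDK
    // fall back to its built-in handlers (http, file, jar, ...).
    static final URLStreamHandlerFactory FACTORY = protocol -> {
        if (!"mem".equals(protocol)) {
            return null;
        }
        return new URLStreamHandler() {
            @Override
            protected URLConnection openConnection(URL u) {
                return new URLConnection(u) {
                    @Override
                    public void connect() {
                    }

                    @Override
                    public InputStream getInputStream() throws IOException {
                        String content = FILES.get(u.getPath());
                        if (content == null) {
                            throw new IOException("No such file: " + u.getPath());
                        }
                        return new ByteArrayInputStream(content.getBytes(StandardCharsets.UTF_8));
                    }
                };
            }
        };
    };

    static {
        // Same pattern as URLCat: register the factory once, when the class is loaded.
        URL.setURLStreamHandlerFactory(FACTORY);
    }

    // Reads a whole URL into a String (URLCat prints it instead).
    static String cat(String url) {
        try (InputStream in = new URL(url).openStream()) {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buf = new byte[4096];
            int n;
            while ((n = in.read(buf)) != -1) {
                out.write(buf, 0, n);
            }
            return new String(out.toByteArray(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // The JDK allows one factory per JVM; a second registration throws an Error.
    static boolean secondRegistrationRejected() {
        try {
            URL.setURLStreamHandlerFactory(FACTORY);
            return false;
        } catch (Error e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(cat("mem:/poems/test.txt"));
        System.out.println(secondRegistrationRejected());
    }
}
```

In this sketch, returning null for every other scheme matters: once the factory is installed, the JDK asks it first for every URL the JVM opens.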

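1.2 Reading via FileSystem

Similarly, write a FileSystemCat class. Instead of going through a URL, it gets a FileSystem instance for the URI with FileSystem.get() and opens the file with fs.open().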
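Both URLCat and FileSystemCat pass the opened stream to IOUtils.copyBytes(in, out, 4096, false). That call copies through a 4096-byte buffer. Because the last argument is false, it leaves the streams open, so the finally block is responsible for closing in.

Below is a rough JDK-only sketch of that behavior. copyBytes here is a hypothetical helper written for illustration, not Hadoop's actual source.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

class CopyBytesSketch {
    // Hypothetical stand-in for IOUtils.copyBytes(in, out, buffSize, close).
    static void copyBytes(InputStream in, OutputStream out, int buffSize, boolean close)
            throws IOException {
        byte[] buf = new byte[buffSize];
        try {
            int n;
            while ((n = in.read(buf)) != -1) { // read up to buffSize bytes per round
                out.write(buf, 0, n);
            }
        } finally {
            if (close) { // only close when the caller asks for it
                in.close();
                out.close();
            }
        }
    }

    // Copies text through copyBytes with close = false, as URLCat and FileSystemCat do.
    static String roundTrip(String text, int buffSize) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (InputStream in = new ByteArrayInputStream(text.getBytes(StandardCharsets.UTF_8))) {
            copyBytes(in, out, buffSize, false);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new String(out.toByteArray(), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("hello hadoop", 4096));
        // A 4-byte buffer splits multi-byte UTF-8 characters across reads,
        // but copying raw bytes keeps the text intact.
        System.out.println(roundTrip("\u5e8a\u524d\u660e\u6708\u5149", 4));
    }
}
```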

package com.tuan.hadoopLearn.hdfs;

import java.io.InputStream;

import java.net.URI;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IOUtils;

public class FileSystemCat {

public static void main(String[] args) {

String uri = args[0];

Configuration conf = new Configuration();

InputStream in = null;

try {

FileSystem fs = FileSystem.get(URI.create(uri), conf);

in = fs.open(new Path(uri));

IOUtils.copyBytes(in, System.out, 4096, false);

} catch (Exception e) {

System.out.println(e);

} finally {

IOUtils.closeStream(in);

}

}

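}

Again, run mvn install, then run the following command in cmd. It prints the contents of test.txt.

hadoop jar hadoopLearn-0.0.1-SNAPSHOT.jar com.tuan.hadoopLearn.hdfs.FileSystemCat hdfs:/poems/test.txt

1.3 Seeking to a Position

Replacing java.io's InputStream with Hadoop's FSDataInputStream makes it possible to read a file starting from a given offset. FSDataInputStream implements the Seekable interface and therefore provides seek(long). In fact, fs.open() already returns an FSDataInputStream; FileSystemCat simply stored it in an InputStream variable. The class FileSystemDoubleCat below prints the file twice, seeking to offset 4 before the second pass.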
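The same seek semantics can be tried locally, without a cluster, using java.io.RandomAccessFile. Its seek(long) also takes an absolute byte offset, like the seek(4) call in FileSystemDoubleCat.

This is only an analogy: RandomAccessFile is not part of Hadoop, and the temporary file and its contents are made up for the sketch.

```java
import java.io.ByteArrayOutputStream;
import java.io.File;
import java.io.IOException;
import java.io.RandomAccessFile;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;

class SeekSketch {
    // Reads from the current position to the end of the file.
    static String readRest(RandomAccessFile raf) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buf = new byte[4096];
        int n;
        while ((n = raf.read(buf)) != -1) {
            out.write(buf, 0, n);
        }
        return new String(out.toByteArray(), StandardCharsets.UTF_8);
    }

    // Mirrors FileSystemDoubleCat: read everything, seek to an offset, read again.
    static String[] doubleCat(String content, long offset) {
        try {
            File tmp = File.createTempFile("test", ".txt"); // hypothetical local stand-in for test.txt
            tmp.deleteOnExit();
            Files.write(tmp.toPath(), content.getBytes(StandardCharsets.UTF_8));
            try (RandomAccessFile raf = new RandomAccessFile(tmp, "r")) {
                String first = readRest(raf);
                raf.seek(offset); // absolute byte offset, like in.seek(4)
                String second = readRest(raf);
                return new String[] {first, second};
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        String[] r = doubleCat("abcdefgh", 4);
        System.out.println(r[0]); // abcdefgh
        System.out.println(r[1]); // efgh
    }
}
```

The offset counts bytes, not characters. For a file of Chinese poems stored as UTF-8 (3 bytes per character), seek(4) would land in the middle of the second character.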

package com.tuan.hadoopLearn.hdfs;

import java.net.URI;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataInputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IOUtils;

public class FileSystemDoubleCat {

public static void main(String[] args) {

String uri = args[0];

Configuration conf = new Configuration();

FSDataInputStream in = null;

try {

FileSystem fs = FileSystem.get(URI.create(uri), conf);

in = fs.open(new Path(uri));

IOUtils.copyBytes(in, System.out, 4096, false);

System.out.println("\n------------------------------------------------------------------");

in.seek(4);

IOUtils.copyBytes(in, System.out, 4096, false);

} catch (Exception e) {

System.out.println(e);

} finally {

IOUtils.closeStream(in);

}

}

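}

Run it with a similar command. The result is shown in the figure. The second pass is missing the first 4 characters because seek(4) moved the read position to byte offset 4 (for ASCII text, one byte is one character).

hadoop jar hadoopLearn-0.0.1-SNAPSHOT.jar com.tuan.hadoopLearn.hdfs.FileSystemDoubleCat hdfs:/poems/test.txt

2 Writing Data to Hadoop

Create a file poem1.txt locally. We will upload it to the Hadoop cluster from Java.

Create the class FileCopyWithProgress: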

package com.tuan.hadoopLearn.hdfs;

import java.io.BufferedInputStream;

import java.io.FileInputStream;

import java.io.InputStream;

import java.io.OutputStream;

import java.net.URI;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IOUtils;

import org.apache.hadoop.util.Progressable;

public class FileCopyWithProgress {

public static void main(String[] args) {

String localSrc = args[0];

String dst = args[1];

InputStream in = null;

OutputStream out = null;

Configuration conf = new Configuration();

try {

in = new BufferedInputStream(new FileInputStream(localSrc));

FileSystem fs = FileSystem.get(URI.create(dst), conf);

out = fs.create(new Path(dst), new Progressable() {

@Override

public void progress() { // callback: prints a dot to show upload progress

System.out.print(".");

}

});

IOUtils.copyBytes(in, out, 4096, true);

} catch (Exception e) {

System.out.println(e);

}

}

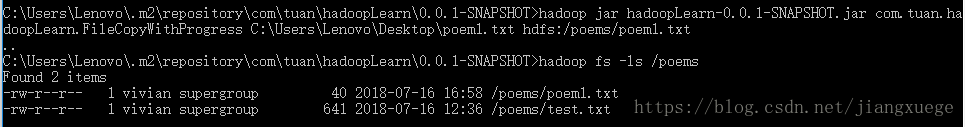

}输入cmd命令,将文件poems.txt传到hadoop集群上

hadoop jar hadoopLearn-0.0.1-SNAPSHOT.jar com.tuan.hadoopLearn.hdfs.FileCopyWithProgress C:\Users\Lenovo\Desktop\poem1.txt hdfs:/poems/poem1.txt
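While the upload runs, the console fills with dots. Each dot is one call that Hadoop makes to progress() on the Progressable passed to fs.create().

The callback pattern itself can be sketched with the JDK alone. In the sketch below, the Progress interface, ProgressOutputStream, and the "call back every N bytes" rule are all hypothetical. HDFS decides on its own when to invoke progress(), so the number of dots in the real run cannot be predicted from this code.

```java
import java.io.ByteArrayOutputStream;
import java.io.FilterOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;

class ProgressSketch {
    // Hypothetical callback type, shaped like org.apache.hadoop.util.Progressable.
    interface Progress {
        void progress();
    }

    // Wraps a stream and fires the callback each time another `interval` bytes pass through.
    static class ProgressOutputStream extends FilterOutputStream {
        private final Progress callback;
        private final int interval;
        private long written = 0;

        ProgressOutputStream(OutputStream out, int interval, Progress callback) {
            super(out);
            this.interval = interval;
            this.callback = callback;
        }

        @Override
        public void write(int b) throws IOException {
            out.write(b);
            written++;
            if (written % interval == 0) {
                callback.progress();
            }
        }
    }

    // Writes `size` bytes through the wrapper and returns how often progress() fired.
    static int upload(int size, int interval) {
        int[] calls = {0};
        Progress printDot = () -> {
            calls[0]++;
            System.out.print("."); // same visible effect as FileCopyWithProgress
        };
        try (OutputStream out =
                new ProgressOutputStream(new ByteArrayOutputStream(), interval, printDot)) {
            for (int i = 0; i < size; i++) {
                out.write(i);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return calls[0];
    }

    public static void main(String[] args) {
        int calls = upload(10_000, 1024);
        System.out.println();
        System.out.println(calls + " callbacks");
    }
}
```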

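hadoop fs -ls /poems // check that the file was uploaded

3 Querying File Information

3.1 Reading File Status

This differs from the book's example. The book runs its test against a small in-process test cluster (MiniDFSCluster), whereas here I test directly on the real cluster. Create the class ShowFileStatusTest: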

package com.tuan.hadoopLearn.hdfs;

import java.io.IOException;

import java.net.URI;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.fs.FileStatus;

public class ShowFileStatusTest {

public static void main(String[] args) {

String uri = args[0];

try {

FileSystem fs = FileSystem.get(URI.create(uri), new Configuration());

FileStatus stat = fs.getFileStatus(new Path(uri));

if (stat.isDirectory()) { // isDir() is deprecated since Hadoop 2

System.out.println(uri + " is a directory");

} else {

System.out.println(uri + " is a file");

}

System.out.println("The path is " + stat.getPath());

System.out.println("The length is " + stat.getLen());

System.out.println("The modification time is " + stat.getModificationTime());

System.out.println("The permission is " + stat.getPermission().toString());

} catch (IOException e) {

e.printStackTrace();

}

}

}
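ShowFileStatusTest prints the modification time as raw milliseconds since the Unix epoch. It prints the permission in rwx form, via FsPermission.toString().

The JDK-only sketch below shows how to read those two values. The helper names toRwx and toUtc and the sample values (0644, 0755, 0L) are hypothetical and only illustrate the conversions.

```java
import java.time.Instant;

class FileStatusFormat {
    // Converts a 9-bit POSIX-style mode (e.g. 0644) into the rwx string
    // form that FsPermission.toString() prints (e.g. "rw-r--r--").
    static String toRwx(int mode) {
        char[] flags = {'r', 'w', 'x'};
        StringBuilder sb = new StringBuilder();
        for (int shift = 6; shift >= 0; shift -= 3) { // user, group, other
            int bits = (mode >> shift) & 7;
            for (int i = 0; i < 3; i++) {
                sb.append((bits & (4 >> i)) != 0 ? flags[i] : '-');
            }
        }
        return sb.toString();
    }

    // getModificationTime() returns milliseconds since 1970-01-01T00:00:00Z.
    static String toUtc(long millis) {
        return Instant.ofEpochMilli(millis).toString();
    }

    public static void main(String[] args) {
        System.out.println(toRwx(0644)); // rw-r--r--
        System.out.println(toRwx(0755)); // rwxr-xr-x
        System.out.println(toUtc(0L));   // 1970-01-01T00:00:00Z
    }
}
```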

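Run the cmd commands:

hadoop jar hadoopLearn-0.0.1-SNAPSHOT.jar com.tuan.hadoopLearn.hdfs.ShowFileStatusTest hdfs:/poems // show information about the poems directory

hadoop jar hadoopLearn-0.0.1-SNAPSHOT.jar com.tuan.hadoopLearn.hdfs.ShowFileStatusTest hdfs:/poems/poem1.txt // show information about poem1.txt

3.2 Listing Files in a Directory

This time we list the files under two directories at once. First, create another directory, jokes, and put a file joke1.txt in it.

Write the class ListStatus: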

package com.tuan.hadoopLearn.hdfs;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileStatus;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.FileUtil;

import org.apache.hadoop.fs.Path;

public class ListStatus {

public static void main(String[] args) {

try {

FileSystem fs = FileSystem.get(new Configuration());

Path[] paths = new Path[args.length];

for (int i = 0; i < args.length; i++) {

paths[i] = new Path(args[i]);

}

FileStatus[] stats = fs.listStatus(paths);

Path[] listPaths = FileUtil.stat2Paths(stats);

for (Path p : listPaths) {

System.out.println(p);

}

} catch (IOException e) {

e.printStackTrace();

}

}

}
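fs.listStatus(Path[]) returns the combined contents of every directory passed to it. FileUtil.stat2Paths() then just turns the FileStatus array into a Path array.

A local analogue using java.nio.file is sketched below, under these assumptions: listAll and demo are hypothetical helpers, and the temporary poems and jokes directories only mirror the HDFS ones. Because Files.list does not guarantee any order, the sketch sorts each directory's entries to keep the result deterministic.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class ListAllSketch {
    // Lists the direct children of each directory, one directory after another,
    // roughly like fs.listStatus(paths) followed by FileUtil.stat2Paths(stats).
    static List<String> listAll(Path... dirs) {
        List<String> result = new ArrayList<>();
        for (Path dir : dirs) {
            try (Stream<Path> children = Files.list(dir)) {
                result.addAll(children
                        .map(p -> dir.getFileName() + "/" + p.getFileName())
                        .sorted()
                        .collect(Collectors.toList()));
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
        }
        return result;
    }

    // Builds hypothetical local copies of /poems and /jokes, then lists both.
    static List<String> demo() {
        try {
            Path root = Files.createTempDirectory("hdfs-sketch");
            Path poems = Files.createDirectory(root.resolve("poems"));
            Path jokes = Files.createDirectory(root.resolve("jokes"));
            Files.createFile(poems.resolve("test.txt"));
            Files.createFile(poems.resolve("poem1.txt"));
            Files.createFile(jokes.resolve("joke1.txt"));
            return listAll(poems, jokes);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        demo().forEach(System.out::println);
    }
}
```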

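cmd command:

hadoop jar hadoopLearn-0.0.1-SNAPSHOT.jar com.tuan.hadoopLearn.hdfs.ListStatus hdfs:/poems hdfs:/jokes

3.3 Path Filtering

Create the class RegexExcludedPathFilter. It expands a glob under the given directory, drops every path that matches a regular expression, and lists the rest: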

package com.tuan.hadoopLearn.hdfs;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileStatus;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.FileUtil;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.fs.PathFilter;

public class RegexExcludedPathFilter implements PathFilter {

private final String reg;

public RegexExcludedPathFilter(String reg) {

this.reg = reg;

}

@Override

public boolean accept(Path path) {

return !path.toString().matches(reg);

}

public static void main(String[] args) {

String uri = args[0];

String reg = args[1];

try {

FileSystem fs = FileSystem.get(new Configuration());

FileStatus[] stats = fs.globStatus(new Path(uri), new RegexExcludedPathFilter(reg));

Path[] listPaths = FileUtil.stat2Paths(stats);

for (Path p : listPaths) {

System.out.println(p);

}

} catch (IOException e) {

e.printStackTrace();

}

}

}

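Run the cmd command below. I was stuck here for a long time. The poems directory holds two files, poem1.txt and test.txt, and I tried to exclude test.txt with /poems/t.*, which never worked. Changing the regex to .*/poems/t.* fixed it.

It seems the two arguments of globStatus() expect paths in different forms. Two things appear to combine here:

- String.matches() only succeeds when the regex matches the entire string.
- The Path handed to the PathFilter seems to be the complete, fully qualified path (e.g. hdfs://host:port/poems/test.txt), not the bare form given as the glob pattern. This second point is only my own unverified guess, left for later study.

hadoop jar hadoopLearn-0.0.1-SNAPSHOT.jar com.tuan.hadoopLearn.hdfs.RegexExcludedPathFilter /poems/* .*/poems/t.*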
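This behavior can be checked without a cluster, because accept() only calls String.matches(), which must match the whole string.

The sketch assumes the filter receives fully qualified paths. The hdfs://localhost:9000 prefix is a made-up NameNode address, and RegexFilterCheck is a hypothetical class that applies the same test to plain strings.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class RegexFilterCheck {
    // Same test as RegexExcludedPathFilter.accept(), applied to a plain string.
    static boolean accept(String path, String reg) {
        return !path.matches(reg); // matches() must cover the entire string
    }

    // Keeps the paths that the filter accepts, in their original order.
    static List<String> filter(List<String> paths, String reg) {
        List<String> kept = new ArrayList<>();
        for (String p : paths) {
            if (accept(p, reg)) {
                kept.add(p);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        // Hypothetical fully qualified paths, as the filter might receive them.
        List<String> paths = Arrays.asList(
                "hdfs://localhost:9000/poems/poem1.txt",
                "hdfs://localhost:9000/poems/test.txt");
        System.out.println(filter(paths, "/poems/t.*"));   // nothing excluded
        System.out.println(filter(paths, ".*/poems/t.*")); // test.txt excluded
    }
}
```

If substring matching were the goal, Pattern.compile(reg).matcher(path).find() would accept /poems/t.* as written. Keeping matches() means the regex has to account for the scheme and authority prefix.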