Applying a Keras BP Neural Network to Boston Housing Price Prediction

Import the required packages

import numpy as np
import keras
# Note: load_boston was removed in scikit-learn 1.2, so this import needs an older release
from sklearn.datasets import load_boston
from sklearn.model_selection import train_test_split
from keras.models import Sequential
from keras.layers import Dense
from keras.optimizers import Adam

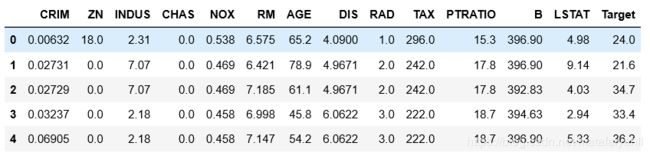

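Load the data

The features have very different value ranges, so they should not be fed directly into the neural network. The network may be able to adapt to such heterogeneous data, but learning becomes much harder. A common remedy is feature-wise normalization: subtract each feature's mean and divide by its standard deviation, so that every feature is centered at 0 with a variance of 1.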

house = load_boston()
x = house.data
y = house.target
x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.1)
print(x.shape)
print(y.shape)

# Normalization: use statistics from the training split only, so that no
# information from the test split leaks into the preprocessing
mean = x_train.mean(axis=0)
std = x_train.std(axis=0)
x_train -= mean
x_train /= std
x_test -= mean
x_test /= std

print(x_train.shape)
print(y_train.shape)
print(x_test.shape)
print(y_test.shape)

(506, 13)
(506,)
(455, 13)
(455,)
(51, 13)
(51,)
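As a small self-contained illustration of the normalization step, the sketch below standardizes a toy feature matrix. The arrays `toy_train` and `toy_test` hold made-up values, not Boston data. The point is that the mean and standard deviation are computed from the training rows only and then reused for the test rows.

```python
import numpy as np

# Toy data (hypothetical values): two features on very different scales.
toy_train = np.array([[1.0, 100.0],
                      [2.0, 300.0],
                      [3.0, 500.0]])
toy_test = np.array([[2.0, 400.0]])

# Statistics are computed per feature (axis=0) on the training rows only.
mean = toy_train.mean(axis=0)
std = toy_train.std(axis=0)

toy_train_norm = (toy_train - mean) / std
toy_test_norm = (toy_test - mean) / std  # reuse the training statistics

print(toy_train_norm.mean(axis=0))  # approximately 0 for each feature
print(toy_train_norm.std(axis=0))   # approximately 1 for each feature
print(toy_test_norm)
```

After this step both columns of the training data have comparable magnitudes, which is what makes gradient-based training better behaved.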

Build the model

Build a 13-64-64-1 neural network.

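def build_model():
    model = Sequential()
    model.add(Dense(64, activation='relu', input_shape=(x_train.shape[1],)))
    model.add(Dense(64, activation='relu'))
    model.add(Dense(1))  # single linear output unit for regression
    adam = Adam(lr=0.001)  # newer Keras releases name this argument learning_rate
    # Accuracy is meaningless for regression; track mean absolute error instead
    model.compile(optimizer=adam, loss='mse', metrics=['mae'])
    return model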
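To get a feel for the size of a 13-64-64-1 network, the number of trainable parameters can be worked out by hand. The helper `dense_param_count` below is a hypothetical name introduced only for this illustration. It assumes plain fully connected layers with one bias per unit.

```python
def dense_param_count(layer_sizes):
    """Count weights and biases of a fully connected network.

    layer_sizes lists the width of every layer, input first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix plus one bias per unit
    return total


n_params = dense_param_count([13, 64, 64, 1])
print(n_params)  # 896 + 4160 + 65 = 5121
```

With only 455 training samples, a few thousand parameters is already a lot, which is one reason to keep the network small on this dataset.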

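Train the model

K-fold cross-validation

When tuning model parameters, we usually split the data into a training set and a validation set in order to evaluate the model. When there is very little data, however, the validation set is also very small, so validation scores vary widely depending on which samples happen to land in the training set and which in the validation set. Such an evaluation is not very reliable.

The best practice in this situation is K-fold cross-validation: split the data into K partitions (typically K = 4 or 5), instantiate K identical models, train each one on K-1 partitions and evaluate it on the remaining partition, then use the average of the K scores as the final evaluation result.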

k = 4
num_val_samples = len(x_train) // k
num_epochs = 100
all_scores = []
for i in range(k):
    print('processing fold #', i)
    # Slice out the validation partition for fold i
    val_data = x_train[i * num_val_samples: (i + 1) * num_val_samples]
    val_targets = y_train[i * num_val_samples: (i + 1) * num_val_samples]
    # Concatenate the remaining partitions to form the training data
    partial_train_data = np.concatenate(
        [x_train[:i * num_val_samples], x_train[(i + 1) * num_val_samples:]], axis=0)
    partial_train_targets = np.concatenate(
        [y_train[:i * num_val_samples], y_train[(i + 1) * num_val_samples:]], axis=0)
    model = build_model()
    # Train silently (verbose=0)
    model.fit(partial_train_data, partial_train_targets,
              epochs=num_epochs, batch_size=16, verbose=0)
    # Evaluate on the validation partition; the second value is the metric set in compile()
    val_mse, val_mae = model.evaluate(val_data, val_targets, verbose=0)
    all_scores.append(val_mae)
# Average the k validation scores to get the final estimate
mean_score = np.mean(all_scores)

processing fold # 0
processing fold # 1
processing fold # 2
processing fold # 3
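The index bookkeeping in the loop above can also be isolated into a small generator. The following is only a sketch: `kfold_indices` is a hypothetical helper, not part of Keras or scikit-learn. Unlike the fixed fold size `len(x_train) // k` used above, it relies on `np.array_split`, so every sample ends up in exactly one validation fold even when the sample count is not divisible by k.

```python
import numpy as np


def kfold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs for k contiguous folds (k >= 2)."""
    folds = np.array_split(np.arange(n_samples), k)
    for i in range(k):
        val_idx = folds[i]
        # All folds except the i-th one form the training indices.
        train_idx = np.concatenate(folds[:i] + folds[i + 1:])
        yield train_idx, val_idx


splits = list(kfold_indices(455, 4))
for i, (train_idx, val_idx) in enumerate(splits):
    print('fold', i, 'train:', len(train_idx), 'val:', len(val_idx))
```

The index arrays can then be used to slice the data, for example `x_train[val_idx]` and `x_train[train_idx]`. With 455 samples and k = 4, the slicing in the loop above leaves the last 3 samples out of every validation fold, whereas this variant spreads them across the folds.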

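# Train a fresh model on the whole training set; each train_on_batch call
# performs one gradient update using all training samples as a single batch
model = build_model()
for step in range(30001):
    cost = model.train_on_batch(x_train, y_train)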

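Evaluate the model

# Evaluate on the test set; evaluate() returns the loss followed by the metric set in compile()
test_loss, test_metric = model.evaluate(x_test, y_test)
print('loss:', test_loss)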

51/51 [==============================] - 0s 6ms/step

loss: 16.295210819618376
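An MSE value is expressed in squared target units, so it is hard to read directly. Taking the square root gives the RMSE in the same units as the target. The sketch below does this for the loss printed above. It assumes the target is the median home value in thousands of dollars, as in the standard Boston housing dataset.

```python
import math

test_mse = 16.295210819618376    # loss value printed above
test_rmse = math.sqrt(test_mse)  # same units as the target
print(round(test_rmse, 2))       # 4.04
```

Under that assumption, the predictions in this run are off by roughly 4 thousand dollars in the root-mean-square sense.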

Prediction results

y_pred = model.predict(x_test)
y_test = y_test.reshape(-1, 1)
print(y_test.shape)
print(y_pred.shape)
# Put the true values (first column) next to the predictions (second column)
y = np.concatenate((y_test, y_pred), axis=1)
print(y)

(51, 1)
(51, 1)
[[22.4 29.00861359]
[26.4 20.00535774]
[19. 19.89637184]
[22.2 23.57507515]
[ 8.8 16.45183182]
[13.9 12.0325613 ]
[15.6 17.47181129]
[22.9 30.84517479]
[20.7 21.34505653]
[25. 24.71461678]
[ 9.7 14.47148418]
[13.8 14.16065502]
[20.8 21.88388634]
[32.2 32.33004379]
[21.2 20.74515915]
[34.9 37.37858963]
[18.4 14.51666927]
[25.2 27.09690285]
[36. 35.71841049]
[24.7 24.20606422]
[23.9 24.70848465]
[15.2 10.63304234]
[12.5 13.14098263]
[23.2 18.67226028]
[24.2 24.51757431]
[44.8 46.45916367]
[16.4 16.53090096]
[17.4 20.09835815]
[19.6 17.38390923]
[25.1 23.30638313]
[20.3 20.64103889]
[18.6 21.87194443]
[15.2 15.76328373]
[43.1 40.18106461]
[15.3 4.6482749 ]
[22.9 21.33856583]
[22.1 24.22558022]
[35.4 37.21940994]
[21.2 21.54840088]
[13.5 11.08192062]
[13.4 18.37044334]
[50. 61.65808868]
[28.7 24.61343956]
[26.5 31.44546509]
[12.7 16.45353699]
[50. 48.83145905]
[14.3 13.62646389]
[18.5 23.34628105]
[17.4 20.22313881]
[24. 13.78490162]
[30.7 29.4163208 ]]
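To make the two regression measures concrete, the sketch below computes MAE and MSE by hand with NumPy. For brevity it uses only the first five (true, predicted) rows copied from the output above, so the numbers describe those five rows, not the whole test set.

```python
import numpy as np

# First five (true, predicted) rows copied from the output above.
pairs = np.array([[22.4, 29.00861359],
                  [26.4, 20.00535774],
                  [19.0, 19.89637184],
                  [22.2, 23.57507515],
                  [8.8, 16.45183182]])
y_true, y_hat = pairs[:, 0], pairs[:, 1]

errors = y_hat - y_true
mae = np.mean(np.abs(errors))  # mean absolute error
mse = np.mean(errors ** 2)     # mean squared error

print('MAE:', mae)
print('MSE:', mse)
```

MSE penalizes large individual errors more heavily than MAE does, which is why the two are usually read together.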

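Summary

For regression problems, the loss function is usually MSE (mean squared error).

The metric monitored during evaluation is usually MAE (mean absolute error).

When features have different value ranges, they need to be preprocessed (normalized) first.

When there is little data, K-fold validation can be used to evaluate the model.

When there is little data, the model should also be kept correspondingly simple, which helps avoid overfitting.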