Forward and Backward Propagation in Neural Networks: Formulas and Code Implementation

1. Forward propagation:

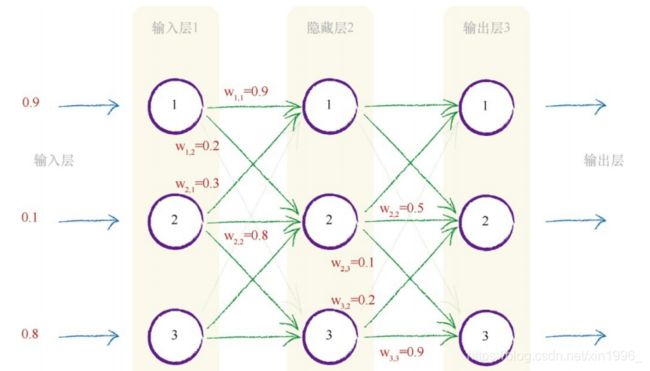

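We use a three-layer neural network as the example. In the figure the inputs are 0.9, 0.1 and 0.8. Instead of numbers we use symbols, so the inputs are x1, x2, x3. The output of the first neuron in the hidden layer is $O_{hidden,1} = S(w_{11}x_1 + w_{21}x_2 + w_{31}x_3 + b_1)$. The original source ignores biases; we add one here. Separate biases on each incoming term would simply add up to one constant, so a single bias per neuron is enough. S is the sigmoid activation function, $S(x) = 1/(1+e^{-x})$, and every other neuron works the same way. In general form, the output of neuron j in layer l is: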

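$$O_j^{(l)} = S\left(\sum_{i} w_{ij}^{(l)}\, O_i^{(l-1)} + b_j^{(l)}\right)$$

Here $w_{ij}^{(l)}$ is the weight from neuron i of layer $l-1$ to neuron j of layer l, and $O_i^{(0)} = x_i$.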

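The same computation in matrix form is $X_{hidden} = W_{hidden} \cdot I + b_{hidden}$.

- $W_{hidden}$ is the input-to-hidden weight matrix. Row j holds the weights into hidden neuron j.
- $I$ is the input vector. The input layer is the first layer, so it applies no activation function.
- $b_{hidden}$ is the hidden-layer bias vector.
- $X_{hidden}$ is the input to the hidden layer.

The hidden layer's output passes through the activation function: $O_{hidden} = \text{sigmoid}(X_{hidden})$.

The input to the output layer is $X_{out} = W_{out} \cdot O_{hidden} + b_{out}$. Here $W_{out}$ is the hidden-to-output weight matrix and $b_{out}$ is the hidden-to-output bias vector. The final output is $O = \text{sigmoid}(X_{out})$.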
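The NumPy snippet below is a minimal sketch of the matrix form. It assumes a 3-3-3 network with randomly drawn, hypothetical weights and biases. It runs the forward pass on the inputs 0.9, 0.1 and 0.8. It then checks that hidden neuron 1, computed with the scalar formula, matches the matrix result.

```python
import numpy as np

def sigmoid(x):
    # Logistic function S(x) = 1 / (1 + e^(-x))
    return 1.0 / (1.0 + np.exp(-x))

rng = np.random.default_rng(0)

# Inputs x1, x2, x3 from the figure, as a column vector of shape (3, 1)
I = np.array([[0.9], [0.1], [0.8]])

# Hypothetical parameters for 3 hidden and 3 output neurons
W_hidden = rng.normal(0.0, 1.0, (3, 3))   # row j = weights into hidden neuron j
b_hidden = rng.normal(0.0, 1.0, (3, 1))
W_out = rng.normal(0.0, 1.0, (3, 3))
b_out = rng.normal(0.0, 1.0, (3, 1))

# Matrix form of the forward pass
X_hidden = W_hidden @ I + b_hidden
O_hidden = sigmoid(X_hidden)
X_out = W_out @ O_hidden + b_out
O = sigmoid(X_out)

# Scalar form for hidden neuron 1: S(w11*x1 + w21*x2 + w31*x3 + b1)
x1, x2, x3 = I.ravel()
w11, w21, w31 = W_hidden[0]
o_hidden1 = sigmoid(w11 * x1 + w21 * x2 + w31 * x3 + b_hidden[0, 0])

print(O.ravel())
print(np.isclose(o_hidden1, O_hidden[0, 0]))  # True
```

The matrix version computes every neuron of a layer in one product. The scalar version shows what a single row of that product does.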

2. Backpropagation

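The error at the first output node is written $e_1$. It is the difference between the desired output $t_1$ from the training data and the actual output $o_1$: $e_1 = t_1 - o_1$.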

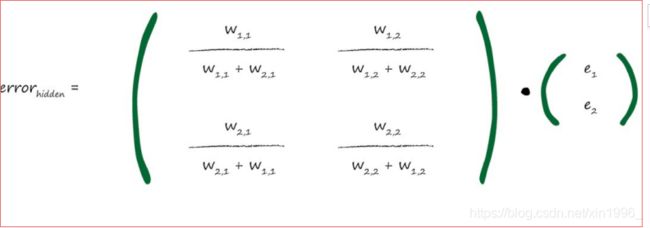

用矩阵来表示:

为了方便计算和好看我们使用简

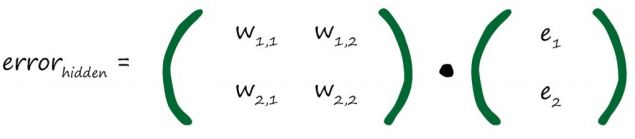

单得多的e1 * w1,1 来代替e1 * w1,1/ ( w1,1 + w2,1),所以:

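In other words, the matrix that carries the error backward is the transpose of the forward weight matrix $W_{out}$. Biases do not behave like this. A bias has no link from any hidden node, so it receives no share of the output error. It therefore adds no term when the error is propagated back.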

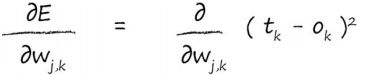

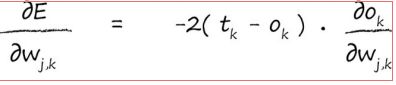

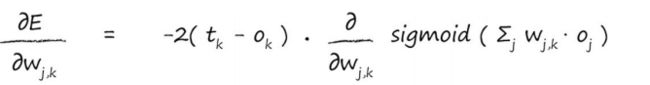

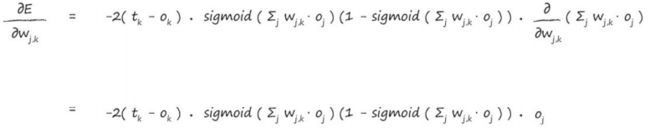
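The sketch below shows what dropping the denominators changes. It compares the proportional error split with the transposed-matrix version for a hypothetical layer of 2 hidden and 2 output nodes, using made-up weights and errors. `W_out[k, j]` is the weight from hidden node j to output node k, which is the layout of the forward pass.

```python
import numpy as np

# Hypothetical forward weights: row k = weights into output node k
W_out = np.array([[2.0, 3.0],
                  [1.0, 1.0]])
# Made-up output errors e = t - o
e_output = np.array([[0.8], [0.5]])

# Proportional split: hidden j receives e_k * w_jk / (sum of weights into output k)
fractions = W_out / W_out.sum(axis=1, keepdims=True)
e_hidden_prop = fractions.T @ e_output

# Simplified split: drop the denominators, i.e. multiply by the transpose
e_hidden_simple = W_out.T @ e_output

print(e_hidden_prop.ravel())
print(e_hidden_simple.ravel())
```

The proportional split hands back exactly the total output error: 0.57 + 0.73 = 1.3 = 0.8 + 0.5. The transposed version scales the fed-back error up. Within each output node, the error is still divided in proportion to the link weights.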

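The hidden-layer error is therefore $e_{hidden} = W_{out}^T \cdot e_{output}$, with no bias term. This is the simple form of error backpropagation. In practice, we reduce the error with gradient descent. The derivation follows.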

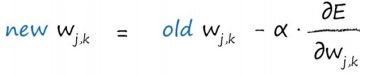

α is the learning rate. It scales the size of each update so that a step does not overshoot the minimum.
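The effect of α shows up clearly on a toy problem. This sketch is not from the original article. It runs gradient descent on $E(w) = w^2$, whose gradient is $2w$. With a small α, the weight moves steadily toward the minimum at 0. With a too-large α, each step jumps past the minimum to a point farther away than where it started, so the iteration diverges.

```python
def gradient_descent(alpha, w0=1.0, steps=20):
    # Minimise E(w) = w**2: gradient dE/dw = 2w, minimum at w = 0
    w = w0
    for _ in range(steps):
        w = w - alpha * 2 * w
    return w

small = gradient_descent(alpha=0.1)   # each step multiplies w by 0.8: converges
large = gradient_descent(alpha=1.1)   # each step multiplies w by -1.2: overshoots and diverges
print(small, large)
```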

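In matrix form, the update for the weights $W_{jk}$ that link layer j to the next layer k is:

$$\Delta W_{jk} = \alpha \left[ e_k \odot O_k \odot (1 - O_k) \right] \cdot O_j^T$$

$$W_{jk}^{new} = W_{jk} + \Delta W_{jk}$$

The symbols are:

- $e_k$ is the error of layer k: $e_{output}$ for the output layer, $e_{hidden}$ for the hidden layer.
- $O_k$ is the output of layer k.
- $O_j$ is the output of the previous layer: $O_{hidden}$, or the input $I$.
- $\odot$ is element-wise multiplication.

The update is added rather than subtracted because the error is defined as $t - O$. For the output layer, the gradient of the squared error $\tfrac12\sum(t-O)^2$ is $-\left[ e_k \odot O_k \odot (1 - O_k) \right] \cdot O_j^T$. So $W - \alpha \cdot \text{gradient}$ becomes $W + \Delta W$.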
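A numerical gradient check is a quick way to confirm the matrix update rule. The sketch below assumes the squared error $\mathcal{E} = \tfrac12\sum(t-O)^2$, no biases, and random, hypothetical weights, inputs and targets. It compares the update directions from the training code with central-difference gradients.

- The output-layer rule matches $-\partial\mathcal{E}/\partial W_{out}$ exactly.
- For the hidden layer, the exact gradient propagates $W_{out}^T[e_{output}\odot O\odot(1-O)]$.
- The simpler $e_{hidden} = W_{out}^T e_{output}$ used in this article leaves out the $O\odot(1-O)$ factor, so it only approximates that gradient.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def forward(W_hidden, W_out, I):
    O_hidden = sigmoid(W_hidden @ I)
    return O_hidden, sigmoid(W_out @ O_hidden)

def squared_error(W_hidden, W_out, I, t):
    # 1/2 * sum((t - O)^2); the 1/2 cancels the 2 from differentiation
    _, O = forward(W_hidden, W_out, I)
    return 0.5 * np.sum((t - O) ** 2)

def numeric_grad(f, W, eps=1e-6):
    # Central-difference estimate of df/dW, perturbing W in place one entry at a time
    g = np.zeros_like(W)
    for idx in np.ndindex(W.shape):
        old = W[idx]
        W[idx] = old + eps
        plus = f()
        W[idx] = old - eps
        minus = f()
        W[idx] = old
        g[idx] = (plus - minus) / (2 * eps)
    return g

rng = np.random.default_rng(1)
I = rng.random((3, 1))              # hypothetical input column vector
t = rng.random((2, 1))              # hypothetical target
W_hidden = rng.normal(size=(4, 3))  # hypothetical weights
W_out = rng.normal(size=(2, 4))

O_hidden, O = forward(W_hidden, W_out, I)
e_output = t - O
output_delta = e_output * O * (1 - O)

# Update directions (alpha left out)
dW_out = output_delta @ O_hidden.T
dW_hidden_simple = ((W_out.T @ e_output) * O_hidden * (1 - O_hidden)) @ I.T
dW_hidden_exact = ((W_out.T @ output_delta) * O_hidden * (1 - O_hidden)) @ I.T

def f():
    return squared_error(W_hidden, W_out, I, t)

num_out = numeric_grad(f, W_out)
num_hidden = numeric_grad(f, W_hidden)

print(np.allclose(dW_out, -num_out))               # output rule matches the gradient
print(np.allclose(dW_hidden_exact, -num_hidden))   # exact hidden rule matches
print(np.allclose(dW_hidden_simple, -num_hidden))  # simplified hidden rule does not
```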

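New biases are obtained the same way: $b^{new} = b - \alpha \cdot \partial\mathcal{E}/\partial b$, where $\mathcal{E} = \tfrac12\sum(t-O)^2$ is the squared error. A bias acts like a weight whose input is always 1, so its update is the weight rule without the $O_j^T$ factor:

$$b_k^{new} = b_k + \alpha \left[ e_k \odot O_k \odot (1 - O_k) \right]$$

Here $e_k$ and $O_k$ are the error and output of layer k.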
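The bias rule can be checked the same way. This sketch assumes a hypothetical output layer with fixed hidden outputs, random weights and biases, and a made-up target. It compares the analytic term $e\odot O\odot(1-O)$ with a central-difference estimate of $-\partial\mathcal{E}/\partial b$. It then applies one update with α = 0.1 to confirm that the squared error goes down.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

rng = np.random.default_rng(2)
O_hidden = rng.random((4, 1))      # hypothetical hidden-layer output
W_out = rng.normal(size=(2, 4))    # hypothetical weights
b_out = rng.normal(size=(2, 1))    # hypothetical output biases
t = np.array([[0.99], [0.01]])     # made-up target

def squared_error(b):
    # 1/2 * sum((t - O)^2) as a function of the output biases
    O = sigmoid(W_out @ O_hidden + b)
    return 0.5 * np.sum((t - O) ** 2)

O = sigmoid(W_out @ O_hidden + b_out)
e_output = t - O
bias_step = e_output * O * (1 - O)   # analytic -dE/db: the weight rule with input fixed at 1

# Central-difference estimate of dE/db, one bias at a time
eps = 1e-6
numeric = np.zeros_like(b_out)
for k in range(b_out.shape[0]):
    shift = np.zeros_like(b_out)
    shift[k, 0] = eps
    numeric[k, 0] = (squared_error(b_out + shift) - squared_error(b_out - shift)) / (2 * eps)

alpha = 0.1
b_new = b_out + alpha * bias_step    # b_new = b - alpha * dE/db

print(np.allclose(bias_step, -numeric))
print(squared_error(b_new) < squared_error(b_out))
```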

3. Implementation:

Defining the neural network:

```python
import numpy
import scipy.special

class neuralNetwork:

    def __init__(self, inputnodes, hiddennodes, outputnodes, learningrate):
        # Define the parameters: layer sizes, weight matrices and learning rate
        self.inodes = inputnodes
        self.hnodes = hiddennodes
        self.onodes = outputnodes
        # wih: input -> hidden weights, shape (hnodes, inodes)
        # who: hidden -> output weights, shape (onodes, hnodes)
        # Both are drawn from a normal distribution with mean 0
        self.wih = numpy.random.normal(0.0, pow(self.hnodes, -0.5), (self.hnodes, self.inodes))
        self.who = numpy.random.normal(0.0, pow(self.onodes, -0.5), (self.onodes, self.hnodes))
        self.lr = learningrate
        # Sigmoid activation function
        self.activation_function = lambda x: scipy.special.expit(x)

    def train(self, inputs_list, targets_list):
        # Turn the input and target lists into column vectors
        inputs = numpy.array(inputs_list, ndmin=2).T
        targets = numpy.array(targets_list, ndmin=2).T
        # Forward propagation
        hidden_inputs = numpy.dot(self.wih, inputs)
        hidden_outputs = self.activation_function(hidden_inputs)
        final_inputs = numpy.dot(self.who, hidden_outputs)
        final_outputs = self.activation_function(final_inputs)
        # Backpropagation: output error e = t - o, hidden error = who^T . e
        output_errors = targets - final_outputs
        hidden_errors = numpy.dot(self.who.T, output_errors)
        # Weight updates: W += lr * [e * o * (1 - o)] . (previous layer output)^T
        self.who += self.lr * numpy.dot((output_errors * final_outputs * (1.0 - final_outputs)), numpy.transpose(hidden_outputs))
        self.wih += self.lr * numpy.dot((hidden_errors * hidden_outputs * (1.0 - hidden_outputs)), numpy.transpose(inputs))

    def query(self, inputs_list):
        # Forward pass only: after training, feed in input values and get the output values
        inputs = numpy.array(inputs_list, ndmin=2).T
        hidden_inputs = numpy.dot(self.wih, inputs)
        hidden_outputs = self.activation_function(hidden_inputs)
        final_inputs = numpy.dot(self.who, hidden_outputs)
        final_outputs = self.activation_function(final_inputs)
        return final_outputs
```
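The class above does not include the bias terms from sections 1 and 2. The following is only a sketch of one way to add them. It assumes zero-initialised bias column vectors named `bih` and `bho` (hypothetical names, not in the original code) and keeps the article's hidden-error rule $e_{hidden} = W_{out}^T e_{output}$. The class name `BiasedNeuralNetwork` is also hypothetical.

```python
import numpy as np
import scipy.special

class BiasedNeuralNetwork:
    """Sketch: the article's network plus bias vectors (hypothetical class)."""

    def __init__(self, inputnodes, hiddennodes, outputnodes, learningrate, seed=0):
        rng = np.random.default_rng(seed)
        # Same weight initialisation as the article's class
        self.wih = rng.normal(0.0, pow(hiddennodes, -0.5), (hiddennodes, inputnodes))
        self.who = rng.normal(0.0, pow(outputnodes, -0.5), (outputnodes, hiddennodes))
        # Hypothetical bias column vectors, initialised to zero
        self.bih = np.zeros((hiddennodes, 1))
        self.bho = np.zeros((outputnodes, 1))
        self.lr = learningrate
        self.activation_function = scipy.special.expit  # sigmoid

    def _forward(self, inputs):
        # X = W . input + b, then O = sigmoid(X), for both layers
        hidden_outputs = self.activation_function(self.wih @ inputs + self.bih)
        final_outputs = self.activation_function(self.who @ hidden_outputs + self.bho)
        return hidden_outputs, final_outputs

    def train(self, inputs_list, targets_list):
        inputs = np.array(inputs_list, ndmin=2).T
        targets = np.array(targets_list, ndmin=2).T
        hidden_outputs, final_outputs = self._forward(inputs)
        output_errors = targets - final_outputs
        # Biases take no share of the back-propagated error
        hidden_errors = self.who.T @ output_errors
        output_delta = output_errors * final_outputs * (1.0 - final_outputs)
        hidden_delta = hidden_errors * hidden_outputs * (1.0 - hidden_outputs)
        self.who += self.lr * output_delta @ hidden_outputs.T
        self.wih += self.lr * hidden_delta @ inputs.T
        # Bias update: the weight rule with the incoming signal fixed at 1
        self.bho += self.lr * output_delta
        self.bih += self.lr * hidden_delta

    def query(self, inputs_list):
        inputs = np.array(inputs_list, ndmin=2).T
        return self._forward(inputs)[1]


# Usage on one made-up sample: the summed absolute error should shrink with training
net = BiasedNeuralNetwork(3, 4, 2, 0.3)
x, t = [0.9, 0.1, 0.8], [0.99, 0.01]
before = np.abs(net.query(x).ravel() - t).sum()
for _ in range(1000):
    net.train(x, t)
after = np.abs(net.query(x).ravel() - t).sum()
print(before, after)
```

Starting the biases at zero makes the network behave exactly like the bias-free version before training. The biases then move only as far as the error pushes them.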

The code above defines the basic network structure. The complete program below uses it to train on a training set and then evaluate on a test set:

```python
import numpy
import scipy.special

class neuralNetwork:

    # Initialise the network
    def __init__(self, inputnodes, hiddennodes, outputnodes, learningrate):
        self.inodes = inputnodes
        self.hnodes = hiddennodes
        self.onodes = outputnodes
        self.wih = numpy.random.normal(0.0, pow(self.hnodes, -0.5), (self.hnodes, self.inodes))
        self.who = numpy.random.normal(0.0, pow(self.onodes, -0.5), (self.onodes, self.hnodes))
        self.lr = learningrate
        self.activation_function = lambda x: scipy.special.expit(x)

    # Train the network: forward pass, then backpropagate and update the weights
    def train(self, inputs_list, targets_list):
        inputs = numpy.array(inputs_list, ndmin=2).T
        targets = numpy.array(targets_list, ndmin=2).T
        hidden_inputs = numpy.dot(self.wih, inputs)
        hidden_outputs = self.activation_function(hidden_inputs)
        final_inputs = numpy.dot(self.who, hidden_outputs)
        final_outputs = self.activation_function(final_inputs)
        output_errors = targets - final_outputs
        hidden_errors = numpy.dot(self.who.T, output_errors)
        self.who += self.lr * numpy.dot((output_errors * final_outputs * (1.0 - final_outputs)), numpy.transpose(hidden_outputs))
        self.wih += self.lr * numpy.dot((hidden_errors * hidden_outputs * (1.0 - hidden_outputs)), numpy.transpose(inputs))

    # query the neural network
    def query(self, inputs_list):
        inputs = numpy.array(inputs_list, ndmin=2).T
        hidden_inputs = numpy.dot(self.wih, inputs)
        hidden_outputs = self.activation_function(hidden_inputs)
        final_inputs = numpy.dot(self.who, hidden_outputs)
        final_outputs = self.activation_function(final_inputs)
        return final_outputs

input_nodes = 784
hidden_nodes = 200
output_nodes = 10
learning_rate = 0.1

n = neuralNetwork(input_nodes, hidden_nodes, output_nodes, learning_rate)

# Load the training data: one "label,pixel1,...,pixel784" record per line
with open(r'C:\Users\尚鑫\Desktop\文件\mnist_train_100.csv', 'r', encoding='utf-8-sig') as training_data_file:
    training_data_list = training_data_file.readlines()

# Train for several epochs (full passes over the training data)
epochs = 5
for _ in range(epochs):
    for record in training_data_list:
        all_values = record.strip().split(',')
        # Scale pixel values from 0..255 into 0.01..1.00
        inputs = (numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99) + 0.01
        # Target vector: 0.99 for the correct digit, 0.01 for all others
        targets = numpy.zeros(output_nodes) + 0.01
        targets[int(all_values[0])] = 0.99
        n.train(inputs, targets)

# Note: this reads the same file as the training step; point it at a separate
# test file to measure accuracy on digits the network has not seen
with open(r'C:\Users\尚鑫\Desktop\文件\mnist_train_100.csv', 'r', encoding='utf-8-sig') as test_data_file:
    test_data_list = test_data_file.readlines()

# Score each test record: 1 if the predicted digit is correct, 0 otherwise
scorecard = []
for record in test_data_list:
    all_values = record.strip().split(',')
    correct_label = int(all_values[0])
    inputs = (numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99) + 0.01
    outputs = n.query(inputs)
    # The index of the largest output is the predicted digit
    label = numpy.argmax(outputs)
    if label == correct_label:
        scorecard.append(1)
    else:
        scorecard.append(0)

scorecard_array = numpy.asarray(scorecard)
print("performance =", scorecard_array.sum() / scorecard_array.size)
```

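The final output is: performance = 1.0. The test loop reads the same mnist_train_100.csv file used for training. This figure is therefore accuracy on the 100 training records, not on unseen data.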
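Each CSV record in the program has the form `label,pixel1,...,pixel784`. The helper below is a hypothetical `prepare_record` function that factors out the conversion done inline in the training and test loops. It is shown on a shortened, made-up record. The scaling choices follow from earlier sections:

- Inputs are shifted into 0.01 to 1.00 because a zero input would make the weight update $[\dots]\cdot O_j^T$ zero for that link.
- Targets use 0.01 and 0.99 because the sigmoid never outputs exactly 0 or 1.

```python
import numpy as np

def prepare_record(record, output_nodes=10):
    """Turn one 'label,pixel,pixel,...' CSV line into (inputs, targets)."""
    all_values = record.strip().split(',')
    # Scale pixel values 0..255 into 0.01..1.00 so no input is exactly zero
    inputs = np.asarray(all_values[1:], dtype=float) / 255.0 * 0.99 + 0.01
    # Target: 0.99 at the label's index, 0.01 everywhere else
    targets = np.zeros(output_nodes) + 0.01
    targets[int(all_values[0])] = 0.99
    return inputs, targets

# A shortened, made-up record (a real MNIST row has 784 pixel values)
inputs, targets = prepare_record("7,0,128,255\n")
print(inputs)
print(targets)
```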