TensorFlow Study Column (6): Classifying MNIST with a Convolutional Neural Network (CNN)

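A convolutional neural network (CNN) is a feedforward neural network. Each of its artificial neurons responds only to the units within a limited surrounding region (its receptive field). CNNs perform very well on large-scale image processing.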
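The sketch below makes "a limited surrounding region" concrete. It computes the receptive field of one output unit for the layer stack used later in this article: a 5×5 convolution with stride 1, then 2×2 max pooling with stride 2, repeated twice. It applies the standard recurrence r_out = r_in + (k − 1)·j_in and j_out = j_in·s, assuming no dilation. The function name `receptive_field` is only for illustration.

```python
def receptive_field(layers):
    """Return (receptive field size, cumulative stride) for a stack of layers.

    Each layer is a (kernel_size, stride) pair; dilation is assumed to be 1.
    """
    r, j = 1, 1  # one input pixel "sees" itself; adjacent inputs are 1 pixel apart
    for k, s in layers:
        r = r + (k - 1) * j  # the kernel widens the field by (k - 1) input-space steps
        j = j * s            # spacing between adjacent outputs, measured in input pixels
    return r, j


# conv1 (5x5, s1) -> pool1 (2x2, s2) -> conv2 (5x5, s1) -> pool2 (2x2, s2)
stack = [(5, 1), (2, 2), (5, 1), (2, 2)]
print(receptive_field(stack))  # (16, 4): each final unit sees a 16x16 pixel patch
```

So after both conv/pool stages, each unit of the 7×7 output map depends only on a 16×16 patch of the 28×28 input image.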

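A CNN is generally made up of the following layers:

- Input layer: feeds the data into the network.

- Convolutional layer: uses convolution kernels to extract features and produce feature maps.

- Activation layer: convolution is a linear operation, so a nonlinear mapping has to be added.

- Pooling layer: downsamples the feature maps, making them more compact and reducing the amount of computation.

- Fully connected layer: usually placed at the end of the CNN to recombine the extracted features, reducing the loss of feature information.

- Output layer: produces the final result.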
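The convolution, activation and pooling layers listed above can be illustrated in plain Python, without TensorFlow, on a tiny grayscale image. This is a minimal sketch. The 5×5 image, the 2×2 edge kernel and the helper names are made up for illustration. Like TensorFlow's convolution ops, it computes a cross-correlation, meaning the kernel is not flipped.

```python
def conv2d_valid(image, kernel):
    """Convolutional layer: slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Activation layer: element-wise max(0, x) adds the nonlinearity."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool(fmap, k):
    """Pooling layer: keep the max of each non-overlapping k x k window (stride k)."""
    return [
        [
            max(fmap[i + a][j + b] for a in range(k) for b in range(k))
            for j in range(0, len(fmap[0]) - k + 1, k)
        ]
        for i in range(0, len(fmap) - k + 1, k)
    ]


# A bright vertical stripe on a dark background
image = [[0, 0, 1, 1, 0] for _ in range(5)]
kernel = [[-1, 1], [-1, 1]]  # responds positively to dark-to-bright vertical edges

fmap = conv2d_valid(image, kernel)
print(fmap[0])                       # [0, 2, 0, -2]: +2 at the rising edge, -2 at the falling edge
print(relu(fmap)[0])                 # [0, 2, 0, 0]: negative responses are clipped
print(max_pool(relu(fmap), 2))       # [[2, 0], [2, 0]]: 4x4 map reduced to 2x2
```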

The structure of a convolutional neural network is shown below:

We implement the convolutional neural network in two ways:

Method one:

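```python
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (placeholders, sessions, tf.examples)
from tensorflow.examples.tutorials.mnist import input_data

tf.set_random_seed(1)
np.random.seed(1)

LR = 0.001        # learning rate
batch_size = 50

# Download/load MNIST with one-hot encoded labels
mnist = input_data.read_data_sets('./mnist', one_hot=True)
test_x = mnist.test.images[:2000]   # evaluate on the first 2000 test images
test_y = mnist.test.labels[:2000]

x = tf.placeholder(tf.float32, [None, 784])   # flattened 28x28 images
img = tf.reshape(x, [-1, 28, 28, 1])           # NHWC: batch, height, width, channels
y = tf.placeholder(tf.int32, [None, 10])       # one-hot labels


def add_cnn_layer(inputs, filters, strides, Activation_function=None):
    """Convolution with an explicit 4-D filter tensor [height, width, in_channels, out_channels]."""
    x = tf.nn.conv2d(inputs, filters, [1, strides, strides, 1], padding='SAME')
    if Activation_function is None:
        out = x
    else:
        out = Activation_function(x)
    return out


def add_maxpooling_layer(inputs, k):
    """k x k max pooling with stride k (halves height and width when k=2)."""
    out = tf.nn.max_pool(inputs, ksize=[1, k, k, 1], strides=[1, k, k, 1], padding='SAME')
    return out


# build cnn net
# With tf.nn.conv2d the filter weights are created by hand (no bias term here)
w1 = tf.Variable(tf.random_normal([5, 5, 1, 16]))
w2 = tf.Variable(tf.random_normal([5, 5, 16, 32]))

conv1 = add_cnn_layer(img, w1, 1, tf.nn.relu)    # -> (batch, 28, 28, 16)
pool1 = add_maxpooling_layer(conv1, 2)           # -> (batch, 14, 14, 16)
conv2 = add_cnn_layer(pool1, w2, 1, tf.nn.relu)  # -> (batch, 14, 14, 32)
pool2 = add_maxpooling_layer(conv2, 2)           # -> (batch, 7, 7, 32)
flat = tf.reshape(pool2, [-1, 7 * 7 * 32])       # flatten for the fully connected layer
output = tf.layers.dense(flat, 10)               # logits for the 10 digit classes

loss = tf.losses.softmax_cross_entropy(onehot_labels=y, logits=output)
train = tf.train.AdamOptimizer(LR).minimize(loss)
# tf.metrics.accuracy returns (accuracy, update_op); [1] selects the update op.
# It is a streaming metric: it accumulates counts over every sess.run call.
accuracy = tf.metrics.accuracy(labels=tf.argmax(y, axis=1), predictions=tf.argmax(output, axis=1))[1]

sess = tf.Session()
sess.run(tf.global_variables_initializer())
sess.run(tf.local_variables_initializer())   # tf.metrics keeps its counters in local variables

for step in range(5000):
    b_x, b_y = mnist.train.next_batch(batch_size)
    _, loss_ = sess.run([train, loss], feed_dict={x: b_x, y: b_y})
    if step % 50 == 0:
        accuracy_ = sess.run(accuracy, feed_dict={x: test_x, y: test_y})
        print('train loss: %.4f' % loss_, '| test accuracy: %.4f' % accuracy_)
```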
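The `7*7*32` in the `tf.reshape` call follows from the padding rule. With `padding='SAME'`, TensorFlow pads the input so that the output height and width equal ceil(input_size / stride). The short plain-Python check below traces a 28×28 MNIST image through the network. The helper name `same_out` is only for illustration.

```python
import math


def same_out(size, stride):
    """Output height/width of a TensorFlow conv/pool op with padding='SAME'."""
    return math.ceil(size / stride)


size = 28                   # MNIST images are 28x28x1
size = same_out(size, 1)    # conv1, stride 1         -> 28x28x16
size = same_out(size, 2)    # pool1, 2x2 with stride 2 -> 14x14x16
size = same_out(size, 1)    # conv2, stride 1         -> 14x14x32
size = same_out(size, 2)    # pool2, 2x2 with stride 2 -> 7x7x32
flat_dim = size * size * 32
print(size, flat_dim)       # 7 1568
```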

Method two:

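```python
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (placeholders, sessions, tf.examples)
from tensorflow.examples.tutorials.mnist import input_data

tf.set_random_seed(1)
np.random.seed(1)

LR = 0.001        # learning rate
batch_size = 50

# Download/load MNIST with one-hot encoded labels
mnist = input_data.read_data_sets('./mnist', one_hot=True)
test_x = mnist.test.images[:2000]   # evaluate on the first 2000 test images
test_y = mnist.test.labels[:2000]

x = tf.placeholder(tf.float32, [None, 784])   # flattened 28x28 images
img = tf.reshape(x, [-1, 28, 28, 1])           # NHWC: batch, height, width, channels
y = tf.placeholder(tf.int32, [None, 10])       # one-hot labels


def add_cnn_layer(inputs, filters, k, stride, Activation_function=None):
    """Convolution via tf.layers.conv2d: `filters` is the number of output channels,
    and the kernel and bias variables are created automatically."""
    x = tf.layers.conv2d(
        inputs=inputs,
        filters=filters,
        kernel_size=k,
        strides=stride,
        padding='same',
        activation=Activation_function)
    return x


def add_maxpooling_layer(inputs, k):
    """k x k max pooling with stride k (halves height and width when k=2)."""
    out = tf.nn.max_pool(inputs, ksize=[1, k, k, 1], strides=[1, k, k, 1], padding='SAME')
    return out


# build cnn net
conv1 = add_cnn_layer(img, 16, 5, 1, tf.nn.relu)    # 16 filters of 5x5 -> (batch, 28, 28, 16)
pool1 = add_maxpooling_layer(conv1, 2)              # -> (batch, 14, 14, 16)
conv2 = add_cnn_layer(pool1, 32, 5, 1, tf.nn.relu)  # 32 filters of 5x5 -> (batch, 14, 14, 32)
pool2 = add_maxpooling_layer(conv2, 2)              # -> (batch, 7, 7, 32)
flat = tf.reshape(pool2, [-1, 7 * 7 * 32])          # flatten for the fully connected layer
output = tf.layers.dense(flat, 10)                  # logits for the 10 digit classes

loss = tf.losses.softmax_cross_entropy(onehot_labels=y, logits=output)
train = tf.train.AdamOptimizer(LR).minimize(loss)
# tf.metrics.accuracy returns (accuracy, update_op); [1] selects the update op.
# It is a streaming metric: it accumulates counts over every sess.run call.
accuracy = tf.metrics.accuracy(labels=tf.argmax(y, axis=1), predictions=tf.argmax(output, axis=1))[1]

sess = tf.Session()
sess.run(tf.global_variables_initializer())
sess.run(tf.local_variables_initializer())   # tf.metrics keeps its counters in local variables

for step in range(5000):
    b_x, b_y = mnist.train.next_batch(batch_size)
    _, loss_ = sess.run([train, loss], feed_dict={x: b_x, y: b_y})
    if step % 50 == 0:
        accuracy_ = sess.run(accuracy, feed_dict={x: test_x, y: test_y})
        print('train loss: %.4f' % loss_, '| test accuracy: %.4f' % accuracy_)
```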
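Both methods train with `tf.losses.softmax_cross_entropy` on the raw outputs (logits) of the dense layer. For one example, this loss is the negative log of the softmax probability assigned to the true class. With the default weights of 1.0, it is averaged over the batch. Below is a plain-Python sketch of that formula. The function name mirrors the TensorFlow call but is a hypothetical stand-in, not the library implementation.

```python
import math


def softmax_cross_entropy(onehot_labels, logits):
    """Mean over the batch of -sum(label * log_softmax(logits))."""
    total = 0.0
    for labels, row in zip(onehot_labels, logits):
        m = max(row)  # subtract the max before exp() for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        # log_softmax(v) = v - log_sum_exp; only the true class contributes for one-hot labels
        total += -sum(l * (v - log_sum_exp) for l, v in zip(labels, row))
    return total / len(logits)


# Two examples, 3 classes: the first is confidently correct, the second is uniform (loss = ln 3)
labels = [[0, 1, 0], [1, 0, 0]]
logits = [[0.0, 5.0, 0.0], [1.0, 1.0, 1.0]]
print(round(softmax_cross_entropy(labels, logits), 4))
```

The confident, correct example contributes almost nothing (about 0.013). The uniform guess contributes ln 3 ≈ 1.0986. This is why the printed training loss falls as the network becomes more confident on the correct digits.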

The only difference between the two methods is the function used to define the convolutional layers. Method one uses

`tf.nn.conv2d(input, filter, strides, padding, use_cudnn_on_gpu=None, data_format=None, name=None)`

while method two uses

```python
tf.layers.conv2d(inputs, filters, kernel_size, strides=(1, 1),
                 padding='valid', data_format='channels_last',
                 dilation_rate=(1, 1), activation=None,
                 use_bias=True, kernel_initializer=None,
                 bias_initializer=init_ops.zeros_initializer(),
                 kernel_regularizer=None,
                 bias_regularizer=None,
                 activity_regularizer=None, trainable=True,
                 name=None, reuse=None)
```

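For building the CNN, both functions perform the same convolution. tf.layers.conv2d is a higher-level wrapper that uses tf.nn.conv2d as its backend. In practice, the difference is who creates the weights. tf.layers.conv2d creates the kernel variable and, by default (use_bias=True), a bias variable. With tf.nn.conv2d you create and pass the weights yourself, and method one above uses no bias.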

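Note that the filter argument of tf.nn.conv2d is a 4-D tensor whose shape must be [filter_height, filter_width, in_channels, out_channels]. The filters argument of tf.layers.conv2d, by contrast, is an integer: the dimensionality of the output space, i.e. the number of output channels.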
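Here is how the two conventions line up. For a square kernel, tf.layers.conv2d builds a kernel of shape [kernel_size, kernel_size, in_channels, filters], plus a bias of length filters when use_bias=True. This is exactly the 4-D filter that tf.nn.conv2d expects you to create yourself. The plain-Python sketch below derives those shapes for this network's two convolutional layers and counts their trainable parameters. The helper names are hypothetical, and a square kernel is assumed.

```python
def conv_kernel_shape(kernel_size, in_channels, filters):
    """4-D filter shape expected by tf.nn.conv2d: [height, width, in_channels, out_channels]."""
    return [kernel_size, kernel_size, in_channels, filters]


def conv_param_count(kernel_size, in_channels, filters, use_bias=True):
    """Kernel weights plus (optionally) one bias per output channel."""
    h, w, c_in, c_out = conv_kernel_shape(kernel_size, in_channels, filters)
    return h * w * c_in * c_out + (c_out if use_bias else 0)


# Method two's add_cnn_layer(img, 16, 5, ...) and add_cnn_layer(pool1, 32, 5, ...)
print(conv_kernel_shape(5, 1, 16))   # [5, 5, 1, 16]  -> same shape as w1 in method one
print(conv_kernel_shape(5, 16, 32))  # [5, 5, 16, 32] -> same shape as w2 in method one
# Method one has no bias; tf.layers.conv2d adds one per output channel by default
print(conv_param_count(5, 1, 16, use_bias=False), conv_param_count(5, 1, 16))    # 400 416
print(conv_param_count(5, 16, 32, use_bias=False), conv_param_count(5, 16, 32))  # 12800 12832
```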

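How to choose between them:

- tf.layers.conv2d has a rich set of parameters and is generally used when training a model from scratch.

- tf.nn.conv2d is generally used when working with a downloaded pretrained model, because it takes the weights as an explicit tensor that can hold the pretrained values.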

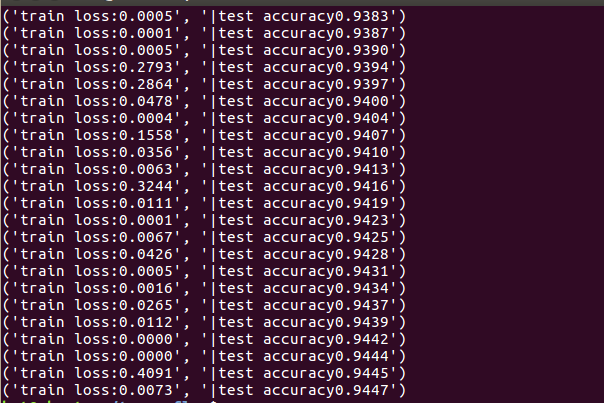

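The experimental results are shown in the figure.

Training ran slowly because the computer had no GPU acceleration, so training was stopped once the accuracy reached 94.47%. With further training, the accuracy still has room to improve.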
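One caveat applies when reading this number. tf.metrics.accuracy is a streaming metric. Its update op, the `[1]` element used in both listings, adds each evaluation's correct/total counts to local variables. It then returns the ratio over everything seen since tf.local_variables_initializer() was run. The printed "test accuracy" is therefore a running average over all evaluations so far, and it lags behind the model's current accuracy. The plain-Python sketch below mimics that behaviour. The class name and the per-evaluation predictions are made up for illustration and are not results from this experiment.

```python
class StreamingAccuracy:
    """Hypothetical stand-in for the total/count local variables behind tf.metrics.accuracy."""

    def __init__(self):
        self.correct = 0
        self.count = 0

    def update(self, predictions, labels):
        # Like the update op: accumulate, then return the ratio over ALL updates so far
        self.correct += sum(p == l for p, l in zip(predictions, labels))
        self.count += len(labels)
        return self.correct / self.count


labels = [0, 1, 2, 3]
metric = StreamingAccuracy()
# Made-up predictions from three successive evaluations as the model improves
for preds in ([0, 0, 0, 0], [0, 1, 0, 0], [0, 1, 2, 3]):
    current = sum(p == l for p, l in zip(preds, labels)) / len(labels)
    print(current, metric.update(preds, labels))
```

On the last evaluation the model is 100% correct, yet the streaming value is only 7/12 ≈ 0.583. To report the accuracy of the current model at each step instead, a common TensorFlow 1.x alternative is a plain per-run op such as `tf.reduce_mean(tf.cast(tf.equal(tf.argmax(y, 1), tf.argmax(output, 1)), tf.float32))`.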