Hands-on: MLP and CNN on MNIST

I. CNN basics

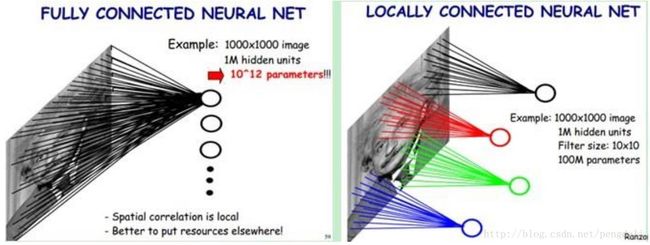

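1. Local connectivity

The visual cortex in biological vision systems takes in information locally, and the key pixels of an image are likewise correlated mainly with their neighbours. In an MLP every neuron is fully connected to the input; this can be improved by working from local to global, as shown in the figure:

As shown, each convolution unit is connected to only 10*10 pixels. For a 1000*1000 image tiled with such units this gives (1000/10) × (1000/10) × (10×10) = 10^6 parameters, one millionth of the 10^12 weights a fully connected layer would need if each of 10^6 hidden neurons were wired to all 10^6 pixels.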
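The arithmetic above can be checked with a few lines of Python. This is only a worked example: the 1000*1000 image size and the 10^6 hidden neurons of the fully connected baseline are assumptions chosen to match the "one millionth" ratio, and the variable names are made up for illustration.

```python
# Worked arithmetic for the local-connectivity example.
# Assumptions: a 1000x1000 input image and, for the fully connected
# baseline, 10**6 hidden neurons.
image_side = 1000
patch_side = 10

pixels = image_side * image_side                 # 10**6 input pixels
hidden_units = 10 ** 6                           # assumed size of the fully connected layer
fully_connected_params = pixels * hidden_units   # every neuron sees every pixel

# One unit per non-overlapping 10x10 patch, each with its own 10x10 weights.
local_units = (image_side // patch_side) ** 2
local_params = local_units * patch_side * patch_side

print(fully_connected_params)                    # 1000000000000
print(local_params)                              # 1000000
print(fully_connected_params // local_params)    # 1000000
```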

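2. Weight sharing

Simply put, a convolution kernel has a single set of weights that is reused at every position. Each kernel extracts one particular feature from the input pixels, and that feature does not depend on where it appears in the image, so the number of kernels equals the number of features extracted.

As shown, a 3*3 kernel is convolved over a 5*5 image: the kernel is applied to every 3*3 window of pixels, and the parts of the image that match its pattern are picked out by the kernel.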
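A minimal NumPy sketch of this operation follows, assuming stride 1 and no padding. The function name conv2d_valid and the pixel and kernel values are made up for illustration; the point is that the same nine weights are reused for every window.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide one kernel over the image (stride 1, no padding)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=image.dtype)
    for i in range(out_h):
        for j in range(out_w):
            # The same kernel weights are applied to every window.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Hypothetical 5x5 binary image and 3x3 kernel.
image = np.array([[1, 1, 1, 0, 0],
                  [0, 1, 1, 1, 0],
                  [0, 0, 1, 1, 1],
                  [0, 0, 1, 1, 0],
                  [0, 1, 1, 0, 0]], dtype=np.float32)
kernel = np.array([[1, 0, 1],
                   [0, 1, 0],
                   [1, 0, 1]], dtype=np.float32)

feature_map = conv2d_valid(image, kernel)
print(feature_map.shape)  # (3, 3)
print(feature_map)        # rows: [4 3 4], [2 4 3], [2 3 4]
```

A 5*5 input and a 3*3 kernel give a 3*3 feature map, and the layer has only 9 weights regardless of the image size.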

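3. Multiple kernels

A single kernel extracts only one kind of feature, which is not enough to abstract complex information. Multiple kernels act like the multiple channels of an image, with each channel carrying different information.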
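The channel analogy can be made concrete with a short NumPy sketch. The random image, the four random kernels, and the variable names are all assumptions for illustration; stride 1 and no padding are assumed as well.

```python
import numpy as np

rng = np.random.default_rng(0)
image = rng.random((5, 5))                 # one grayscale 5x5 image (made-up data)
kernels = rng.standard_normal((4, 3, 3))   # 4 different 3x3 kernels

# Collect every 3x3 window: patches[i, j] is the window whose top-left corner is (i, j).
patches = np.array([[image[i:i + 3, j:j + 3] for j in range(3)] for i in range(3)])

# Apply all kernels to all windows at once; the last axis indexes the kernel.
feature_maps = np.einsum('ijab,kab->ijk', patches, kernels)
print(feature_maps.shape)  # (3, 3, 4)
```

Four kernels give an output with four channels, one feature map per kernel.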

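4. Pooling

In principle the features produced by convolution could be fed straight into fully connected layers for classification, but that is computationally expensive and very prone to overfitting. Pooling can be thought of as working like an image-blurring algorithm: it takes the average or the maximum of the features in each region. In practice this has been found to reduce overfitting.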
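As a rough sketch of both variants, the NumPy function below pools non-overlapping size*size blocks. The name pool2d, the cropping of any ragged border, and the sample values are assumptions for this example.

```python
import numpy as np

def pool2d(feature_map, size=2, mode="max"):
    """Pool non-overlapping size x size blocks with max or mean."""
    h, w = feature_map.shape
    # Drop any ragged border so the map tiles evenly (a simplification of this sketch).
    h, w = h - h % size, w - w % size
    blocks = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    if mode == "max":
        return blocks.max(axis=(1, 3))
    return blocks.mean(axis=(1, 3))

fm = np.array([[1, 3, 2, 4],
               [5, 6, 1, 2],
               [7, 2, 9, 0],
               [1, 8, 3, 4]], dtype=np.float32)

max_pooled = pool2d(fm, 2, "max")    # values: [[6, 4], [8, 9]]
avg_pooled = pool2d(fm, 2, "mean")   # values: [[3.75, 2.25], [4.5, 4.0]]
print(max_pooled)
print(avg_pooled)
```

A 2*2 pool with stride 2 quarters the number of values passed on to the next layer.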

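5. Multiple convolution layers

Simply put, the features learned by a single convolution layer are relatively local; the more layers there are, the more global the learned features become. In practice multiple convolution layers are almost always used.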
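One way to quantify "more global" is the receptive field, the number of input pixels along one side that a single output value depends on. The sketch below uses the standard recurrence for stacked layers; the function name receptive_field and the (kernel, stride) layer list are assumptions for illustration, and the example stack mirrors the conv 3*3 / pool 2*2 pattern of the CNN built later in this article.

```python
def receptive_field(layers):
    """layers: list of (kernel_size, stride). Returns the receptive field side after each layer."""
    rf, jump = 1, 1          # jump = distance in input pixels between adjacent outputs
    sizes = []
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
        sizes.append(rf)
    return sizes

# conv 3x3 stride 1, pool 2x2 stride 2, conv 3x3 stride 1, pool 2x2 stride 2
stack = [(3, 1), (2, 2), (3, 1), (2, 2)]
print(receptive_field(stack))  # [3, 4, 8, 10]
```

After the first convolution each value sees a 3*3 patch; after the second convolution and pooling it sees a 10*10 patch of the 28*28 input.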


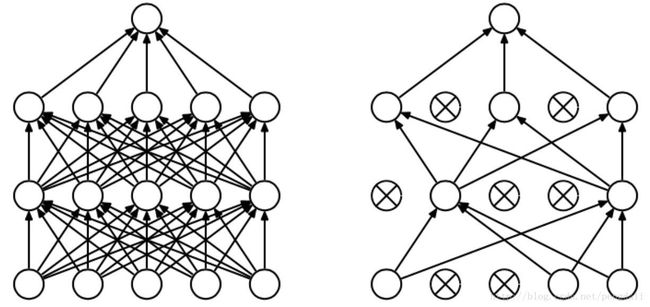

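6. Dropout

Dropout is another way to reduce overfitting. In the layer where it is applied, it randomly disconnects a fraction of the neurons (set by a parameter) from the neurons in the next layer during training.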
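A minimal NumPy sketch of the idea follows, written as "inverted" dropout: surviving activations are scaled by 1/keep_prob so the expected value stays the same, which is also how tf.nn.dropout behaves in TensorFlow 1.x. The function signature and the sample data are assumptions for this example.

```python
import numpy as np

def dropout(activations, keep_prob, rng):
    """Inverted dropout: zero each unit with probability 1 - keep_prob, rescale the rest."""
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

rng = np.random.default_rng(0)
a = np.ones((1000, 256))
out = dropout(a, 0.75, rng)

print((out > 0).mean())   # fraction of units kept, close to 0.75
print(out.mean())         # close to 1.0, the mean of the input
```

With keep_prob set to 1 nothing is dropped, which is the setting used when measuring accuracy.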

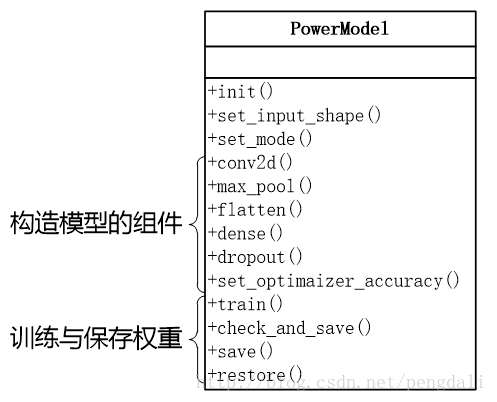

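II. Program structure

To make it quick to try out different networks, we wrap things in a small class that builds different models from different configuration parameters.

The class diagram looks roughly like this:

1. The main functions

dense is the fully connected layer; it is basically all you need to build an MLP.

conv2d is the convolution layer, the basic building block of a convolutional network.

max_pool is the pooling layer.

flatten connects the convolutional part of the network to the fully connected part: it reshapes the tensor output by the convolution layers into the shape a dense layer expects as input.

dropout is a powerful tool against overfitting.

set_optimizer_accuracy wraps the optimizer and the accuracy check.

check_and_save checks the current validation accuracy and, if it is better than the best result so far, saves the current weights.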
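As a rough illustration of the two quantities set_optimizer_accuracy builds, here is a NumPy sketch of the mean cross-entropy over softmax outputs and the argmax accuracy. The helper names cross_entropy and accuracy, the eps guard against log(0), and the sample arrays are assumptions made for this example, not part of PowerMode.

```python
import numpy as np

def cross_entropy(probs, one_hot, eps=1e-10):
    """Mean over samples of -sum(y * log(p)); eps avoids log(0)."""
    probs = np.clip(probs, eps, 1.0)
    return float(np.mean(-np.sum(one_hot * np.log(probs), axis=1)))

def accuracy(probs, one_hot):
    """Fraction of samples whose most probable class matches the label."""
    return float(np.mean(np.argmax(probs, axis=1) == np.argmax(one_hot, axis=1)))

# Three samples, three classes (made-up softmax outputs and one-hot labels).
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.3, 0.4, 0.3]])
labels = np.array([[1, 0, 0],
                   [0, 1, 0],
                   [0, 0, 1]])

loss = cross_entropy(probs, labels)
acc = accuracy(probs, labels)
print(loss, acc)   # the third sample is misclassified, so acc is 2/3
```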

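2. MLP in practice

Next, let's try things out with a small model.

First build the model. is_restore=False means the previously saved weights are not loaded and training starts from scratch. If you change the model structure you must set this parameter to False; I was lazy and did not write any compatibility handling :)

    x = PowerMode('mnist_mlp', is_debug=1, is_restore=False)
    x.set_input_shape([None, 28**2], [None, 10], True)
    mode_config = [
        {'t': 'dense', 'u': 256, 'a': tf.nn.relu},
        {'t': 'dropout'},
        {'t': 'dense', 'u': 10, 'a': tf.nn.softmax},
    ]
    x.set_mode(mode_config)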
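For a sense of scale, the number of trainable parameters in this MLP can be worked out by hand. The helper dense_params is a hypothetical name used only for this calculation; the dropout layer has no parameters of its own.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out

hidden = dense_params(28 ** 2, 256)   # 784 * 256 + 256 = 200960
output = dense_params(256, 10)        # 256 * 10 + 10 = 2570
total = hidden + output

print(hidden, output, total)          # 200960 2570 203530
```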

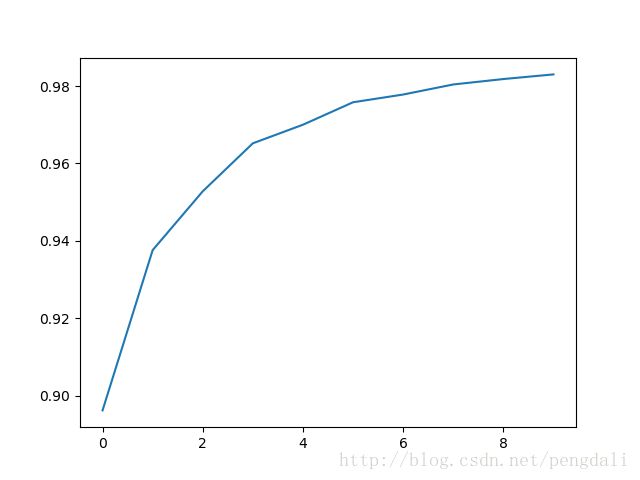

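Then call set_optimizer_accuracy() and train for 10 epochs. dropout=0.75 is passed to tf.nn.dropout as the keep probability, so each unit is kept with probability 0.75; acc_step=1 validates the model after every epoch.

    x.set_optimizer_accuracy()
    x.train(mnist.train.images, mnist.train.labels,
            mnist.validation.images, mnist.validation.labels,
            epochs=10, batch_size=100, dropout=0.75, acc_step=1)

3. CNN in practice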

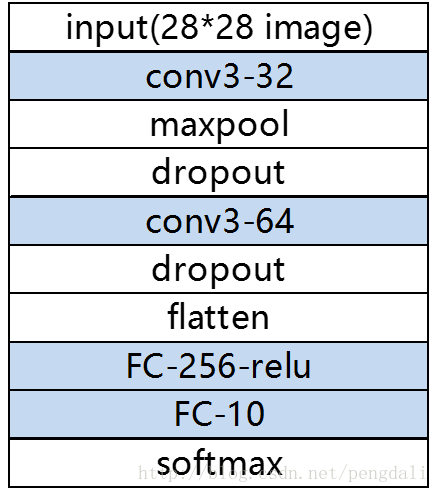

我们再用一个简单的cnn模型测试下,模型结构如图

代码也很简单

def main_cnn():

mnist = input_data.read_data_sets(DATA_DIR,one_hot=True)

x = PowerMode('mnist_cnn' ,is_debug=1 ,is_restore=False)

x.set_input_shape([None ,28 ,28 ,1] ,[None ,10] ,True)

mode_config = [{'t':'conv2d','x':3,'y':3,'n':32,'s':1,'a':tf.nn.relu},

{'t':'max_pool','k':2,'s':2},

{'t':'dropout'},

{'t':'conv2d','x':3,'y':3,'n':64,'s':1,'a':tf.nn.relu},

{'t':'max_pool','k':2,'s':2},

{'t':'dropout'},

{'t':'flatten'},

{'t':'dense','u':256,'a':tf.nn.relu},

{'t':'dense','u':10,'a':tf.nn.softmax},

]

x.set_mode(mode_config)

x.set_optimizer_accuracy(1e-4)

x.train(np.reshape(mnist.train.images, [-1,28,28,1]) ,mnist.train.labels

,np.reshape(mnist.validation.images, [-1,28,28,1]) ,mnist.validation.labels

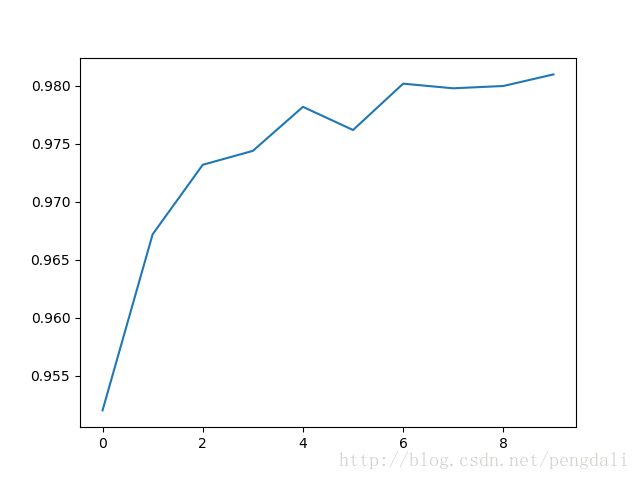

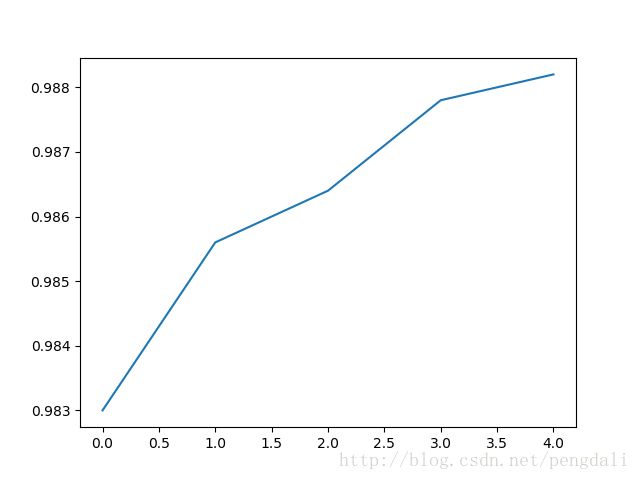

,epochs=15 ,batch_size=100 ,dropout=0.75 ,acc_step=1)训练的前10轮结果和上面那个mlp差不多

再训练5轮的数据已经接近0.99了
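To see how each entry of the CNN config changes the tensor shape, the sketch below traces the per-sample shape [height, width, channels] through the config without TensorFlow. trace_shapes is a hypothetical helper, not part of PowerMode; it assumes SAME padding for every conv2d and max_pool layer (the PowerMode default), so each output side is ceil(size / stride). The activation key 'a' is left out because it does not affect shapes.

```python
import math

def trace_shapes(input_shape, config):
    """Follow a per-sample shape through a list of layer configs."""
    shape = list(input_shape)
    shapes = []
    for layer in config:
        kind = layer['t']
        if kind == 'conv2d':
            # SAME padding: spatial size is ceil(size / stride); channels become 'n'.
            s = layer['s']
            shape = [math.ceil(shape[0] / s), math.ceil(shape[1] / s), layer['n']]
        elif kind == 'max_pool':
            s = layer['s']
            shape = [math.ceil(shape[0] / s), math.ceil(shape[1] / s), shape[2]]
        elif kind == 'flatten':
            shape = [shape[0] * shape[1] * shape[2]]
        elif kind == 'dense':
            shape = [layer['u']]
        # 'dropout' leaves the shape unchanged.
        shapes.append((kind, list(shape)))
    return shapes

cnn_config = [
    {'t': 'conv2d', 'x': 3, 'y': 3, 'n': 32, 's': 1},
    {'t': 'max_pool', 'k': 2, 's': 2},
    {'t': 'dropout'},
    {'t': 'conv2d', 'x': 3, 'y': 3, 'n': 64, 's': 1},
    {'t': 'max_pool', 'k': 2, 's': 2},
    {'t': 'dropout'},
    {'t': 'flatten'},
    {'t': 'dense', 'u': 256},
    {'t': 'dense', 'u': 10},
]

shapes = trace_shapes([28, 28, 1], cnn_config)
for kind, shape in shapes:
    print(kind, shape)
```

Under these assumptions the flatten layer turns a 7*7*64 feature volume into 3136 inputs for the first dense layer.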

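4. Summary

Feel free to change the model design, adjust the various configuration parameters, and vary the number of training and validation rounds to get a feel for how the models differ.

Finally, here is the complete code of PowerMode: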

    # Written against the TensorFlow 1.x API (tf.placeholder, tf.Session).
    import os

    import matplotlib.pyplot as plt
    import numpy as np
    import tensorflow as tf


    class PowerMode:
        def __init__(self, mode_name, is_debug=1, is_restore=True):
            tf.reset_default_graph()
            # Maps the 't' key of each layer config to the method that builds it.
            self.config_key_map = {
                'conv2d': self.conv2d,
                'max_pool': self.max_pool,
                'dense': self.dense,
                'flatten': self.flatten,
                'dropout': self.dropout,
            }
            self.is_debug = is_debug
            self.is_restore = is_restore
            self.mode_name = mode_name
            self.keep_prob = None  # dropout keep-probability placeholder, set in set_input_shape
            # private
            self._layer_data = None
            self._train = None
            self._accuracy = None
            self._pre_validation = tf.Variable(0., trainable=False)  # best validation accuracy so far

        # Input placeholders
        def set_input_shape(self, x_shape, y_shape, is_dropout=False):
            self._layer_data = self.x = tf.placeholder(tf.float32, x_shape, name='input_data')
            self.debug('input shape', self.x.shape.as_list())
            self.y_ = tf.placeholder(tf.float32, y_shape, name='y_data')
            if is_dropout:
                # Stored as keep_prob so that it does not shadow the dropout() method.
                self.keep_prob = tf.placeholder(tf.float32, name='dropout_param')

        # ---------- model ---------- #
        # Build the model from a list of layer configs
        def set_mode(self, config):
            for k, v in enumerate(config):
                v['id'] = k + 1
                fun = self.config_key_map[v['t']]
                self._layer_data, name = fun(v)
                self.debug(name + ' out shape', self._layer_data.shape.as_list())

        # Convolution layer
        def conv2d(self, param):
            name = 'conv2d_' + str(param['id'])
            w_shape = [param['x'], param['y'], self._layer_data.shape.as_list()[3], param['n']]
            step = param['s']
            activation = param.get('a')
            p = param.get('p', 'SAME')
            self.debug(name + ' shape', w_shape)
            w = tf.Variable(tf.truncated_normal(w_shape, stddev=0.1))
            b = tf.Variable(tf.constant(0.1, shape=[w_shape[3]]))
            out = tf.nn.conv2d(self._layer_data, w, strides=[1, step, step, 1], padding=p, name=name) + b
            if activation is not None:
                out = activation(out)
            return out, name

        # Max-pooling layer
        def max_pool(self, param):
            ksize = param['k']
            step = param['s']
            name = 'max_pool_' + str(param['id'])
            self.debug(name + ' shape', self._layer_data.shape.as_list())
            return tf.nn.max_pool(self._layer_data, ksize=[1, ksize, ksize, 1],
                                  strides=[1, step, step, 1], padding='SAME', name=name), name

        # Flatten to [batch, features]
        def flatten(self, param):
            name = 'flatten_' + str(param['id'])
            k = self._layer_data.shape.as_list()
            self.debug(name + ' shape', k)
            return tf.reshape(self._layer_data, [-1, int(np.prod(k[1:]))], name=name), name

        # Fully connected layer
        def dense(self, param):
            units = param['u']
            activation = param.get('a')
            name = 'dense_' + str(param['id'])
            input_units = self._layer_data.shape.as_list()
            self.debug(name + ' shape', input_units)
            w = tf.Variable(tf.truncated_normal([input_units[1], units], stddev=0.1), name=name + '_w')
            b = tf.Variable(tf.constant(0.1, shape=[units]), name=name + '_b')
            if activation is not None:
                return activation(tf.matmul(self._layer_data, w) + b, name=name), name
            return tf.matmul(self._layer_data, w, name=name) + b, name

        # Dropout layer
        def dropout(self, param):
            name = 'dropout_' + str(param['id'])
            k = self._layer_data.shape.as_list()
            self.debug(name + ' shape', k)
            return tf.nn.dropout(self._layer_data, self.keep_prob, name=name), name

        # Loss, optimizer and accuracy
        def set_optimizer_accuracy(self, learning_rate=1e-3):
            self.debug('loss shape', self._layer_data.shape.as_list())
            # Cross-entropy on the softmax output; clipping avoids log(0).
            probs = tf.clip_by_value(self._layer_data, 1e-10, 1.0)
            cross_entropy = tf.reduce_mean(-tf.reduce_sum(self.y_ * tf.log(probs), axis=1))
            self._train = tf.train.AdamOptimizer(learning_rate).minimize(cross_entropy)
            # Accuracy
            correct_prediction = tf.equal(tf.argmax(self._layer_data, 1), tf.argmax(self.y_, 1))
            self._accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32), name='accuracy')

        # ---------- training ---------- #
        # Build a feed dict, adding keep_prob only if a dropout placeholder exists
        def _feed(self, x, y, keep_prob):
            feed = {self.x: x, self.y_: y}
            if self.keep_prob is not None:
                feed[self.keep_prob] = keep_prob
            return feed

        def train(self, train_x, train_y, test_x, test_y, epochs=1, batch_size=32, dropout=0.75, acc_step=10):
            with tf.Session() as sess:
                if not self.restore(sess):  # restore a checkpoint if requested
                    sess.run(tf.global_variables_initializer())  # otherwise initialize the variables
                data_size = train_x.shape[0]
                batch_num = int(np.ceil(data_size / float(batch_size)))
                accuracy_plot = []
                for i in range(epochs):
                    for j in range(batch_num):
                        start, end = j * batch_size, min((j + 1) * batch_size, data_size)
                        batch_xs = train_x[start:end]
                        batch_ys = train_y[start:end]
                        sess.run(self._train, self._feed(batch_xs, batch_ys, dropout))
                    if i % acc_step == 0:
                        # Dropout is disabled (keep_prob=1) when measuring accuracy.
                        acc = sess.run(self._accuracy, self._feed(test_x, test_y, 1.0))
                        accuracy_plot.append(acc)
                        self.debug('epoch: %d' % i, 'accuracy: %f' % acc)
                        self.check_and_save(sess, acc)
                if self.is_debug != 0 and len(accuracy_plot) > 1:
                    plt.plot(accuracy_plot)
                    plt.show()

        # Save the weights if the validation accuracy has improved
        def check_and_save(self, sess, validation):
            if sess.run(self._pre_validation) < validation:
                sess.run(tf.assign(self._pre_validation, validation))
                self.save(sess)

        # Save the current session
        def save(self, sess):
            save_dir = self.mode_name + '_cp'
            if not os.path.exists(save_dir):
                os.makedirs(save_dir)
            self.debug('save', save_dir)
            saver = tf.train.Saver()  # saves all variables
            saver.save(sess, os.path.join(save_dir, 'best_validation'))  # best validation result

        # Restore previously saved weights
        def restore(self, sess):
            if not self.is_restore:
                return False
            # Look up the checkpoint file
            re_path = self.mode_name + '_cp'
            ckpt = tf.train.get_checkpoint_state(re_path)
            if ckpt and ckpt.model_checkpoint_path:
                self.debug('restore', ckpt.model_checkpoint_path)
                saver = tf.train.Saver()
                saver.restore(sess, ckpt.model_checkpoint_path)  # restores all variables
                self.debug('restore validation', sess.run(self._pre_validation))
                return True
            return False

        # Print debug information
        def debug(self, name, message):
            if self.is_debug != 0:
                print(name, ' : ', message)

This is the complete test program:

    import numpy as np
    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    from PowerMode import PowerMode

    DATA_DIR = 'data/MNIST_data/'


    def main_mlp():
        mnist = input_data.read_data_sets(DATA_DIR, one_hot=True)
        x = PowerMode('mnist_mlp', is_debug=1, is_restore=False)
        x.set_input_shape([None, 28**2], [None, 10], True)
        mode_config = [
            {'t': 'dense', 'u': 256, 'a': tf.nn.relu},
            {'t': 'dropout'},
            {'t': 'dense', 'u': 10, 'a': tf.nn.softmax},
        ]
        x.set_mode(mode_config)
        x.set_optimizer_accuracy()
        x.train(mnist.train.images, mnist.train.labels,
                mnist.validation.images, mnist.validation.labels,
                epochs=10, batch_size=100, dropout=0.75, acc_step=1)


    def main_cnn():
        mnist = input_data.read_data_sets(DATA_DIR, one_hot=True)
        x = PowerMode('mnist_cnn', is_debug=1, is_restore=False)
        x.set_input_shape([None, 28, 28, 1], [None, 10], True)
        mode_config = [
            {'t': 'conv2d', 'x': 3, 'y': 3, 'n': 32, 's': 1, 'a': tf.nn.relu},
            {'t': 'max_pool', 'k': 2, 's': 2},
            {'t': 'dropout'},
            {'t': 'conv2d', 'x': 3, 'y': 3, 'n': 64, 's': 1, 'a': tf.nn.relu},
            {'t': 'max_pool', 'k': 2, 's': 2},
            {'t': 'dropout'},
            {'t': 'flatten'},
            {'t': 'dense', 'u': 256, 'a': tf.nn.relu},
            {'t': 'dense', 'u': 10, 'a': tf.nn.softmax},
        ]
        x.set_mode(mode_config)
        x.set_optimizer_accuracy(1e-4)
        x.train(np.reshape(mnist.train.images, [-1, 28, 28, 1]), mnist.train.labels,
                np.reshape(mnist.validation.images, [-1, 28, 28, 1]), mnist.validation.labels,
                epochs=15, batch_size=100, dropout=0.75, acc_step=1)


    if __name__ == '__main__':
        # main_mlp()
        main_cnn()